Weibull++ Examples and Case Studies

Confidence Bound Examples

Likelihood Ratio Bounds on Parameters

Five units were put on a reliability test and experienced failures at 10, 20, 30, 40 and 50 hours. Assuming a Weibull distribution, the MLE parameter estimates are calculated to be [math]\displaystyle{ \widehat{\beta }=2.2938\,\! }[/math] and [math]\displaystyle{ \widehat{\eta }=33.9428.\,\! }[/math] Calculate the 90% two-sided confidence bounds on these parameters using the likelihood ratio method.

Solution

The first step is to calculate the likelihood function for the parameter estimates:

- [math]\displaystyle{ \begin{align} L(\widehat{\beta },\widehat{\eta })= & \underset{i=1}{\overset{N}{\mathop \prod }}\,f({{x}_{i}};\widehat{\beta },\widehat{\eta })=\underset{i=1}{\overset{5}{\mathop \prod }}\,\frac{\widehat{\beta }}{\widehat{\eta }}\cdot {{\left( \frac{{{x}_{i}}}{\widehat{\eta }} \right)}^{\widehat{\beta }-1}}\cdot {{e}^{-{{\left( \tfrac{{{x}_{i}}}{\widehat{\eta }} \right)}^{\widehat{\beta }}}}} \\ \\ L(\widehat{\beta },\widehat{\eta })= & \underset{i=1}{\overset{5}{\mathop \prod }}\,\frac{2.2938}{33.9428}\cdot {{\left( \frac{{{x}_{i}}}{33.9428} \right)}^{1.2938}}\cdot {{e}^{-{{\left( \tfrac{{{x}_{i}}}{33.9428} \right)}^{2.2938}}}} \\ \\ L(\widehat{\beta },\widehat{\eta })= & 1.714714\times {{10}^{-9}} \end{align}\,\! }[/math]

where [math]\displaystyle{ {{x}_{i}}\,\! }[/math] are the original time-to-failure data points. We can now rearrange the likelihood ratio equation to the form:

- [math]\displaystyle{ L(\beta ,\eta )-L(\widehat{\beta },\widehat{\eta })\cdot {{e}^{\tfrac{-\chi _{\alpha ;1}^{2}}{2}}}=0\,\! }[/math]

Since our specified confidence level, [math]\displaystyle{ \delta\,\! }[/math], is 90%, we can calculate the value of the chi-squared statistic, [math]\displaystyle{ \chi _{0.9;1}^{2}=2.705543.\,\! }[/math] We then substitute this information into the equation:

- [math]\displaystyle{ \begin{align} L(\beta ,\eta )-L(\widehat{\beta },\widehat{\eta })\cdot {{e}^{\tfrac{-\chi _{\alpha ;1}^{2}}{2}}}= & 0 \\ \\ L(\beta ,\eta )-1.714714\times {{10}^{-9}}\cdot {{e}^{\tfrac{-2.705543}{2}}}= & 0 \\ \\ L(\beta ,\eta )-4.432926\cdot {{10}^{-10}}= & 0 \end{align}\,\! }[/math]

The next step is to find the set of values of [math]\displaystyle{ \beta\,\! }[/math] and [math]\displaystyle{ \eta\,\! }[/math] that satisfy this equation, or find the values of [math]\displaystyle{ \beta\,\! }[/math] and [math]\displaystyle{ \eta\,\! }[/math] such that [math]\displaystyle{ L(\beta ,\eta )=4.432926\cdot {{10}^{-10}}.\,\! }[/math]
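The two numbers used above, the maximum likelihood value and the threshold it is compared against, can be checked with a short Python sketch. It evaluates the Weibull likelihood at the rounded MLE values quoted in the example and converts the 90% chi-squared quantile into the threshold. It relies on the fact that, for one degree of freedom, the chi-squared quantile is the square of a standard normal quantile, so only the standard library is needed. The function names `weibull_pdf`, `likelihood` and `chi2_1` are illustrative and are not Weibull++ functions.

```python
import math
from statistics import NormalDist

# Failure times from the example (hours) and the MLE values quoted in the text
times = [10.0, 20.0, 30.0, 40.0, 50.0]
beta_hat, eta_hat = 2.2938, 33.9428

def weibull_pdf(x, beta, eta):
    """2-parameter Weibull probability density function."""
    return (beta / eta) * (x / eta) ** (beta - 1) * math.exp(-((x / eta) ** beta))

def likelihood(beta, eta, data):
    """Product of pdf values over complete (uncensored) failure data."""
    return math.prod(weibull_pdf(x, beta, eta) for x in data)

def chi2_1(delta):
    """Chi-squared quantile with 1 degree of freedom at confidence level delta.

    If Z is standard normal, Z**2 is chi-squared(1), so the delta quantile
    of Z**2 is the square of the (1 + delta)/2 quantile of Z.
    """
    return NormalDist().inv_cdf((1 + delta) / 2) ** 2

L_hat = likelihood(beta_hat, eta_hat, times)
chi2 = chi2_1(0.90)
L_star = L_hat * math.exp(-chi2 / 2)   # value that L(beta, eta) must equal on the contour

print(f"L(beta_hat, eta_hat) = {L_hat:.6e}")
print(f"chi2_(0.9;1)         = {chi2:.6f}")
print(f"threshold L*         = {L_star:.6e}")
```

Up to rounding, this reproduces the 1.714714e-9, 2.705543 and 4.432926e-10 used in the example. For larger samples the raw product can underflow, so in practice the log-likelihood is usually compared instead.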

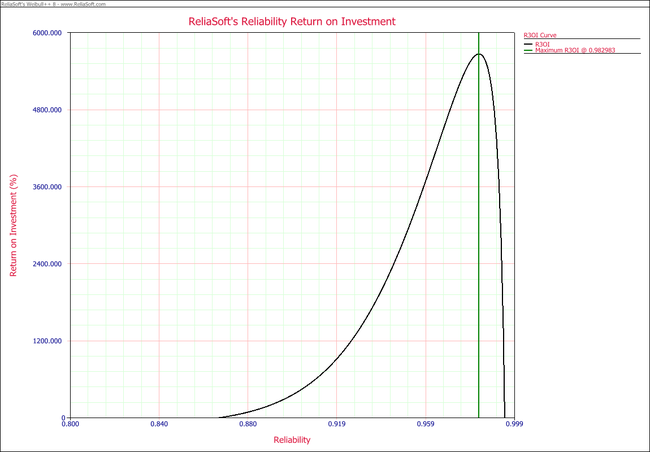

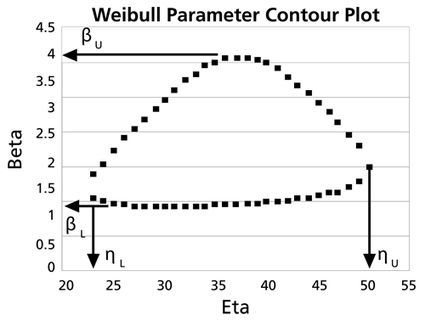

The solution is an iterative process that requires setting the value of [math]\displaystyle{ \beta\,\! }[/math] and finding the appropriate values of [math]\displaystyle{ \eta\,\! }[/math], and vice versa. The following table gives values of [math]\displaystyle{ \beta\,\! }[/math] based on given values of [math]\displaystyle{ \eta\,\! }[/math].

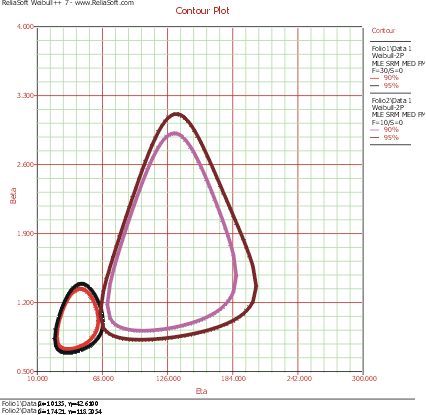

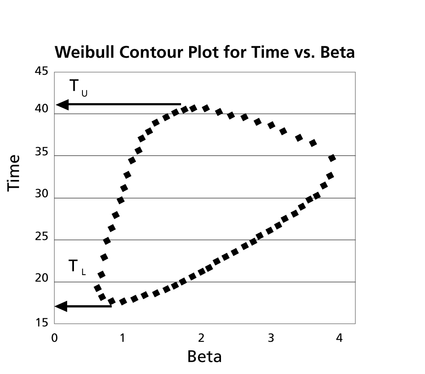

These data are represented graphically in the following contour plot:

(Note that this plot is generated with degrees of freedom [math]\displaystyle{ k = 1\,\! }[/math], as we are only determining bounds on one parameter. The contour plots generated in Weibull++ are done with degrees of freedom [math]\displaystyle{ k = 2\,\! }[/math], for use in comparing both parameters simultaneously.)

As can be determined from the table, the lowest calculated value for [math]\displaystyle{ \beta\,\! }[/math] is 1.142, while the highest is 3.950. These represent the two-sided 90% confidence limits on this parameter. Since solutions to the equation do not exist for values of [math]\displaystyle{ \eta\,\! }[/math] below 23 or above 50, these values can be considered approximate 90% confidence limits on [math]\displaystyle{ \eta\,\! }[/math]. To obtain more accurate confidence limits on [math]\displaystyle{ \eta\,\! }[/math], we can perform the same procedure as before, but this time find the two values of [math]\displaystyle{ \eta\,\! }[/math] that correspond to a given value of [math]\displaystyle{ \beta\,\! }[/math]. Using this method, we find that the 90% confidence limits on [math]\displaystyle{ \eta\,\! }[/math] are 22.474 and 49.967, which are close to the initial estimates of 23 and 50.

Note that the points where [math]\displaystyle{ \beta\,\! }[/math] is maximized and minimized do not necessarily correspond to the points where [math]\displaystyle{ \eta\,\! }[/math] is maximized and minimized. This is because the contour is not symmetrical, so the two parameters reach their extremes at different points.
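The extremes of the contour can also be located without tabulating it. The Python sketch below is a substitute for the table-based search described above, not necessarily how Weibull++ computes the bounds: it uses the profile likelihood, maximizing ln L over one parameter for each fixed value of the other, and then bisects for the points where the profile drops to ln L* = ln L(β̂, η̂) − χ²/2. For complete data the maximizing η has a closed form for each β; for fixed η, ln L is concave in β, so its maximum is found by bisecting the derivative. The helpers `loglik`, `bisect`, `profile_beta` and `profile_eta` are hypothetical names, and the bracketing intervals are assumptions chosen for this data set. Under these assumptions the results should come out close to 1.142 and 3.950 for β and 22.474 and 49.967 for η.

```python
import math
from statistics import NormalDist

DATA = [10.0, 20.0, 30.0, 40.0, 50.0]    # failure times, hours
N = len(DATA)
S = sum(math.log(x) for x in DATA)       # sum of ln(x_i)
BETA_HAT, ETA_HAT = 2.2938, 33.9428      # MLE values quoted in the example

def loglik(beta, eta):
    """Weibull log-likelihood ln L(beta, eta) for complete data."""
    return sum(math.log(beta / eta) + (beta - 1) * math.log(x / eta)
               - (x / eta) ** beta for x in DATA)

def bisect(f, lo, hi, iters=100):
    """Root of f in [lo, hi]; f(lo) and f(hi) must have opposite signs."""
    f_lo = f(lo)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def profile_beta(beta):
    """Max of ln L over eta; for complete data eta(beta) = (mean of x^beta)^(1/beta)."""
    eta = (sum(x ** beta for x in DATA) / N) ** (1.0 / beta)
    return loglik(beta, eta)

def profile_eta(eta):
    """Max of ln L over beta for fixed eta (ln L is concave in beta)."""
    def dlogl_dbeta(b):
        return N / b - N * math.log(eta) + S - sum(
            (x / eta) ** b * math.log(x / eta) for x in DATA)
    return loglik(bisect(dlogl_dbeta, 0.01, 30.0), eta)

chi2 = NormalDist().inv_cdf(0.95) ** 2           # chi-squared(1) quantile at 90%
target = loglik(BETA_HAT, ETA_HAT) - chi2 / 2    # ln L* = ln L_hat - chi2 / 2

beta_lo = bisect(lambda b: profile_beta(b) - target, 0.3, BETA_HAT)
beta_hi = bisect(lambda b: profile_beta(b) - target, BETA_HAT, 10.0)
eta_lo = bisect(lambda e: profile_eta(e) - target, 12.0, ETA_HAT)
eta_hi = bisect(lambda e: profile_eta(e) - target, ETA_HAT, 100.0)

print(f"beta: {beta_lo:.3f} to {beta_hi:.3f}")
print(f"eta:  {eta_lo:.3f} to {eta_hi:.3f}")
```

Because the profile is evaluated exactly at each point, small differences from the tabulated values (which depend on the grid used) are expected in the third decimal place.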

Likelihood Ratio Bounds on Time (Type I)

For the data given in Example 1, determine the 90% two-sided confidence bounds on the time estimate for a reliability of 50%. The ML estimate for the time at which [math]\displaystyle{ R(t)=50\%\,\! }[/math] is 28.930.

Solution

In this example, we are trying to determine the 90% two-sided confidence bounds on the time estimate of 28.930. As was mentioned, we need to rewrite the likelihood ratio equation so that it is in terms of [math]\displaystyle{ t\,\! }[/math] and [math]\displaystyle{ \beta\,\! }[/math]. This is accomplished by using the Weibull reliability equation, [math]\displaystyle{ R={{e}^{-{{\left( \tfrac{t}{\eta } \right)}^{\beta }}}}\,\! }[/math], which can be solved for [math]\displaystyle{ \eta \,\! }[/math], treating [math]\displaystyle{ R\,\! }[/math] as a known quantity:

- [math]\displaystyle{ \eta =\frac{t}{{{(-\text{ln}(R))}^{\tfrac{1}{\beta }}}}\,\! }[/math]

This expression can then be substituted for the [math]\displaystyle{ \eta \,\! }[/math] term in the likelihood ratio equation to form a likelihood equation in terms of [math]\displaystyle{ t\,\! }[/math] and [math]\displaystyle{ \beta \,\! }[/math]:

- [math]\displaystyle{ \begin{align} L(\beta ,t)= & \underset{i=1}{\overset{N}{\mathop \prod }}\,f({{x}_{i}};\beta ,t,R) \\ = & \underset{i=1}{\overset{5}{\mathop \prod }}\,\frac{\beta }{\left( \tfrac{t}{{{(-\text{ln}(R))}^{\tfrac{1}{\beta }}}} \right)}\cdot {{\left( \frac{{{x}_{i}}}{\left( \tfrac{t}{{{(-\text{ln}(R))}^{\tfrac{1}{\beta }}}} \right)} \right)}^{\beta -1}}\cdot \text{exp}\left[ -{{\left( \frac{{{x}_{i}}}{\left( \tfrac{t}{{{(-\text{ln}(R))}^{\tfrac{1}{\beta }}}} \right)} \right)}^{\beta }} \right] \end{align}\,\! }[/math]

where [math]\displaystyle{ {{x}_{i}}\,\! }[/math] are the original time-to-failure data points. We can now rearrange the likelihood ratio equation to the form:

- [math]\displaystyle{ L(\beta ,t)-L(\widehat{\beta },\widehat{\eta })\cdot {{e}^{\tfrac{-\chi _{\alpha ;1}^{2}}{2}}}=0\,\! }[/math]

Since our specified confidence level, [math]\displaystyle{ \delta \,\! }[/math], is 90%, we can calculate the value of the chi-squared statistic, [math]\displaystyle{ \chi _{0.9;1}^{2}=2.705543.\,\! }[/math] We can now substitute this information into the equation:

- [math]\displaystyle{ \begin{align} L(\beta ,t)-L(\widehat{\beta },\widehat{\eta })\cdot {{e}^{\tfrac{-\chi _{\alpha ;1}^{2}}{2}}}= & 0 \\ \\ L(\beta ,t)-1.714714\times {{10}^{-9}}\cdot {{e}^{\tfrac{-2.705543}{2}}}= & 0 \\ & \\ L(\beta ,t)-4.432926\cdot {{10}^{-10}}= & 0 \end{align}\,\! }[/math]

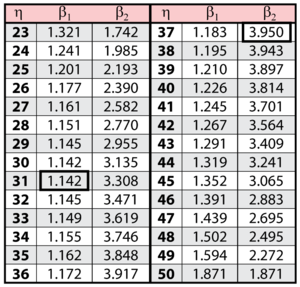

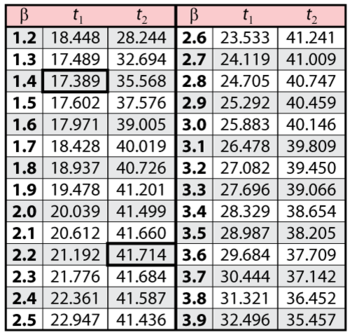

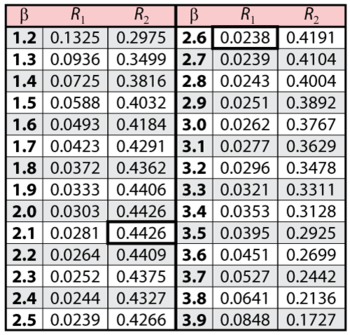

Note that the likelihood value for [math]\displaystyle{ L(\widehat{\beta },\widehat{\eta })\,\! }[/math] is the same as it was for Example 1. This is because we are dealing with the same data and parameter estimates or, in other words, the maximum value of the likelihood function did not change. It now remains to find the values of [math]\displaystyle{ \beta \,\! }[/math] and [math]\displaystyle{ t\,\! }[/math] which satisfy this equation. This is an iterative process that requires setting the value of [math]\displaystyle{ \beta \,\! }[/math] and finding the appropriate values of [math]\displaystyle{ t\,\! }[/math]. The following table gives the values of [math]\displaystyle{ t\,\! }[/math] based on given values of [math]\displaystyle{ \beta \,\! }[/math].

These points are represented graphically in the following contour plot:

As can be determined from the table, the lowest calculated value for [math]\displaystyle{ t\,\! }[/math] is 17.389, while the highest is 41.714. These represent the 90% two-sided confidence limits on the time at which reliability is equal to 50%.
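The bounds on time can be cross-checked with a Python sketch that works on the reparameterized likelihood. Instead of tabulating the contour, it uses the profile likelihood: for each fixed t it maximizes ln L(β, t) over β, then bisects for the values of t where that maximum equals ln L* = ln L(β̂, η̂) − χ²/2. This is a swapped-in technique, not necessarily the Weibull++ algorithm. With η = t/(−ln R)^(1/β), ln L for fixed t is concave in β, so the inner maximization is a bisection on the derivative. The sketch also recovers the ML time estimate by inverting the same relation, t = η(−ln R)^(1/β). Helper names (`eta_from`, `loglik_bt`, `bisect`, `profile`) and the bracketing intervals are assumptions; the bounds should land near 17.389 and 41.714 hours.

```python
import math
from statistics import NormalDist

DATA = [10.0, 20.0, 30.0, 40.0, 50.0]    # failure times, hours
N = len(DATA)
S = sum(math.log(x) for x in DATA)       # sum of ln(x_i)
BETA_HAT, ETA_HAT = 2.2938, 33.9428      # MLE values from the first example
R = 0.50                                 # reliability level of interest

def eta_from(t, beta, rel):
    """Rearranged Weibull reliability equation: eta = t / (-ln R)^(1/beta)."""
    return t / (-math.log(rel)) ** (1.0 / beta)

def loglik_bt(beta, t, rel):
    """ln L(beta, t): the Weibull log-likelihood with eta written via t and R."""
    eta = eta_from(t, beta, rel)
    return sum(math.log(beta / eta) + (beta - 1) * math.log(x / eta)
               - (x / eta) ** beta for x in DATA)

def bisect(f, lo, hi, iters=100):
    """Root of f in [lo, hi]; f(lo) and f(hi) must have opposite signs."""
    f_lo = f(lo)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def profile(t, rel):
    """Max of ln L(beta, t) over beta for fixed t and R (concave in beta)."""
    c = -math.log(rel)
    def dlogl_dbeta(b):
        return N / b - N * math.log(t) + S - c * sum(
            (x / t) ** b * math.log(x / t) for x in DATA)
    return loglik_bt(bisect(dlogl_dbeta, 0.01, 30.0), t, rel)

t_hat = ETA_HAT * (-math.log(R)) ** (1.0 / BETA_HAT)   # inverse of eta_from
chi2 = NormalDist().inv_cdf(0.95) ** 2                  # chi-squared(1) at 90%
target = loglik_bt(BETA_HAT, t_hat, R) - chi2 / 2

t_lo = bisect(lambda t: profile(t, R) - target, 5.0, t_hat)
t_hi = bisect(lambda t: profile(t, R) - target, t_hat, 100.0)
print(f"t_hat = {t_hat:.3f} h, 90% bounds: {t_lo:.3f} to {t_hi:.3f} h")
```

Small differences from the tabulated extremes are expected, because a table built on a finite grid of β values can only approach the true extremes of the contour.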

Likelihood Ratio Bounds on Reliability (Type II)

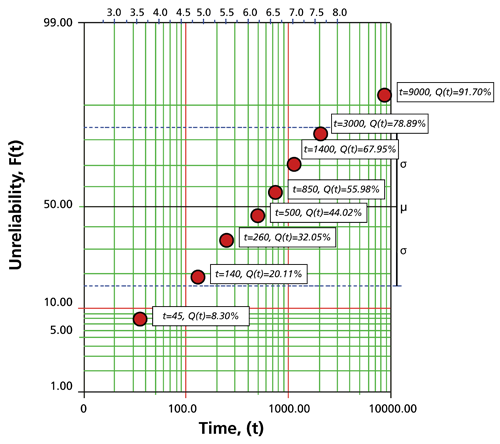

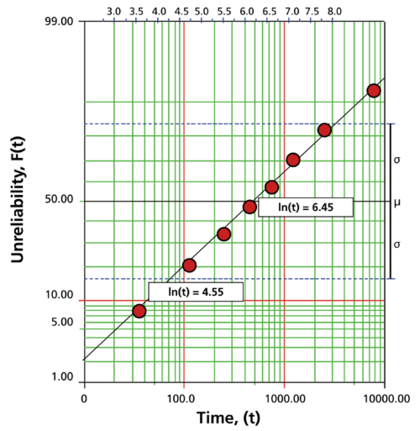

For the data given in Example 1, determine the 90% two-sided confidence bounds on the reliability estimate for [math]\displaystyle{ t=45\,\! }[/math]. The ML estimate for the reliability at [math]\displaystyle{ t=45\,\! }[/math] is 14.816%.

Solution

In this example, we are trying to determine the 90% two-sided confidence bounds on the reliability estimate of 14.816%. As was mentioned, we need to rewrite the likelihood ratio equation so that it is in terms of [math]\displaystyle{ R\,\! }[/math] and [math]\displaystyle{ \beta\,\! }[/math]. This is again accomplished by substituting the rearranged Weibull reliability equation, [math]\displaystyle{ \eta =\tfrac{t}{{{(-\text{ln}(R))}^{\tfrac{1}{\beta }}}}\,\! }[/math] with [math]\displaystyle{ t=45\,\! }[/math], for the [math]\displaystyle{ \eta \,\! }[/math] term in the likelihood ratio equation to form a likelihood equation in terms of [math]\displaystyle{ R\,\! }[/math] and [math]\displaystyle{ \beta \,\! }[/math]:

- [math]\displaystyle{ \begin{align} L(\beta ,R)= & \underset{i=1}{\overset{N}{\mathop \prod }}\,f({{x}_{i}};\beta ,t,R) \\ = & \underset{i=1}{\overset{5}{\mathop \prod }}\,\frac{\beta }{\left( \tfrac{t}{{{(-\text{ln}(R))}^{\tfrac{1}{\beta }}}} \right)}\cdot {{\left( \frac{{{x}_{i}}}{\left( \tfrac{t}{{{(-\text{ln}(R))}^{\tfrac{1}{\beta }}}} \right)} \right)}^{\beta -1}}\cdot \text{exp}\left[ -{{\left( \frac{{{x}_{i}}}{\left( \tfrac{t}{{{(-\text{ln}(R))}^{\tfrac{1}{\beta }}}} \right)} \right)}^{\beta }} \right] \end{align}\,\! }[/math]

where [math]\displaystyle{ {{x}_{i}}\,\! }[/math] are the original time-to-failure data points. We can now rearrange the likelihood ratio equation to the form:

- [math]\displaystyle{ L(\beta ,R)-L(\widehat{\beta },\widehat{\eta })\cdot {{e}^{\tfrac{-\chi _{\alpha ;1}^{2}}{2}}}=0\,\! }[/math]

Since our specified confidence level, [math]\displaystyle{ \delta \,\! }[/math], is 90%, we can calculate the value of the chi-squared statistic, [math]\displaystyle{ \chi _{0.9;1}^{2}=2.705543.\,\! }[/math] We can now substitute this information into the equation:

- [math]\displaystyle{ \begin{align} L(\beta ,R)-L(\widehat{\beta },\widehat{\eta })\cdot {{e}^{\tfrac{-\chi _{\alpha ;1}^{2}}{2}}}= & 0 \\ \\ L(\beta ,R)-1.714714\times {{10}^{-9}}\cdot {{e}^{\tfrac{-2.705543}{2}}}= & 0 \\ \\ L(\beta ,R)-4.432926\cdot {{10}^{-10}}= & 0 \end{align}\,\! }[/math]

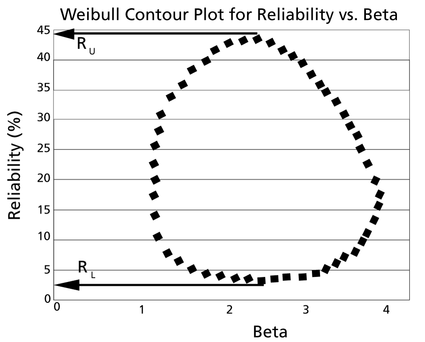

It now remains to find the values of [math]\displaystyle{ \beta \,\! }[/math] and [math]\displaystyle{ R\,\! }[/math] that satisfy this equation. This is an iterative process that requires setting the value of [math]\displaystyle{ \beta \,\! }[/math] and finding the appropriate values of [math]\displaystyle{ R\,\! }[/math]. The following table gives the values of [math]\displaystyle{ R\,\! }[/math] based on given values of [math]\displaystyle{ \beta \,\! }[/math].

These points are represented graphically in the following contour plot:

As can be determined from the table, the lowest calculated value for [math]\displaystyle{ R\,\! }[/math] is 2.38%, while the highest is 44.26%. These represent the 90% two-sided confidence limits on the reliability at [math]\displaystyle{ t=45\,\! }[/math].
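The reliability bounds can be cross-checked the same way with a self-contained Python sketch. With t fixed at 45 hours and η written as t/(−ln R)^(1/β), the log-likelihood for each fixed R is concave in β, so its maximum over β (the profile likelihood) is found by bisecting the derivative; the bounds on R are then the values where that maximum equals ln L* = ln L(β̂, η̂) − χ²/2. This profile-likelihood approach is swapped in for the tabulated contour and is not claimed to be the Weibull++ algorithm. Helper names and bracketing intervals are assumptions; the results should be close to 14.816% for the estimate and 2.38% and 44.26% for the bounds.

```python
import math
from statistics import NormalDist

DATA = [10.0, 20.0, 30.0, 40.0, 50.0]    # failure times, hours
N = len(DATA)
S = sum(math.log(x) for x in DATA)       # sum of ln(x_i)
BETA_HAT, ETA_HAT = 2.2938, 33.9428      # MLE values from the first example
T_FIXED = 45.0                           # mission time of interest, hours

def loglik_br(beta, t, rel):
    """ln L(beta, R) with eta = t / (-ln R)^(1/beta)."""
    eta = t / (-math.log(rel)) ** (1.0 / beta)
    return sum(math.log(beta / eta) + (beta - 1) * math.log(x / eta)
               - (x / eta) ** beta for x in DATA)

def bisect(f, lo, hi, iters=100):
    """Root of f in [lo, hi]; f(lo) and f(hi) must have opposite signs."""
    f_lo = f(lo)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def profile(t, rel):
    """Max of ln L over beta for fixed t and R (concave in beta)."""
    c = -math.log(rel)
    def dlogl_dbeta(b):
        return N / b - N * math.log(t) + S - c * sum(
            (x / t) ** b * math.log(x / t) for x in DATA)
    return loglik_br(bisect(dlogl_dbeta, 0.01, 30.0), t, rel)

r_hat = math.exp(-((T_FIXED / ETA_HAT) ** BETA_HAT))   # ML reliability at 45 h
chi2 = NormalDist().inv_cdf(0.95) ** 2                  # chi-squared(1) at 90%
target = loglik_br(BETA_HAT, T_FIXED, r_hat) - chi2 / 2

r_lo = bisect(lambda r: profile(T_FIXED, r) - target, 1e-4, r_hat)
r_hi = bisect(lambda r: profile(T_FIXED, r) - target, r_hat, 0.99)
print(f"R_hat = {100 * r_hat:.3f}%, 90% bounds: {100 * r_lo:.2f}% to {100 * r_hi:.2f}%")
```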

Comparing Parameter Estimation Methods Using Simulation Based Bounds

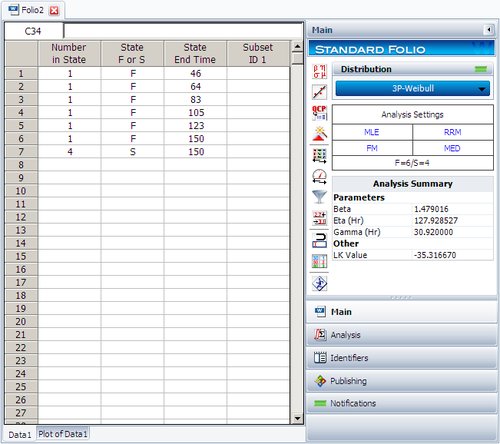

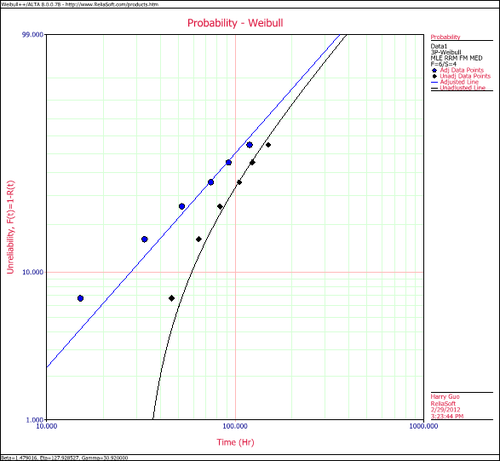

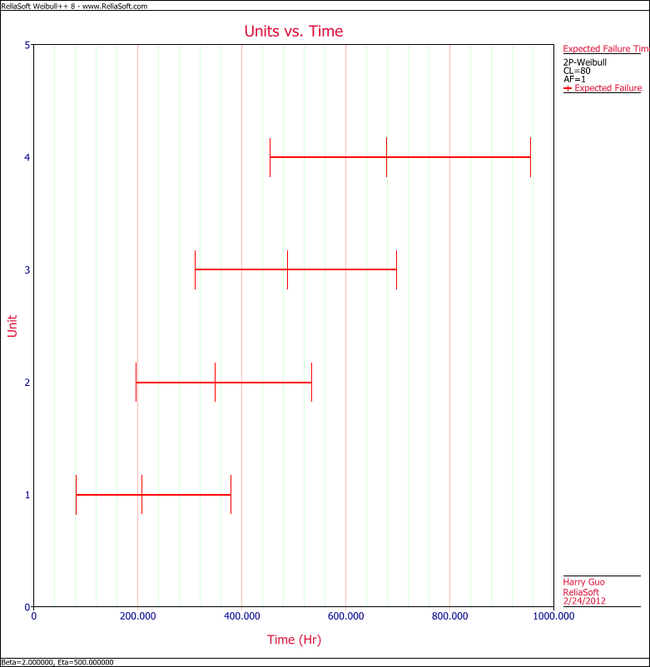

The purpose of this example is to determine the best parameter estimation method for a sample of ten units with complete time-to-failure data for each unit (i.e., no censoring). The data set follows a Weibull distribution with [math]\displaystyle{ \beta =2\,\! }[/math] and [math]\displaystyle{ \eta =100\,\! }[/math] hours.

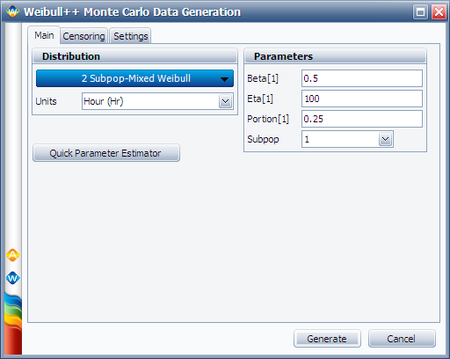

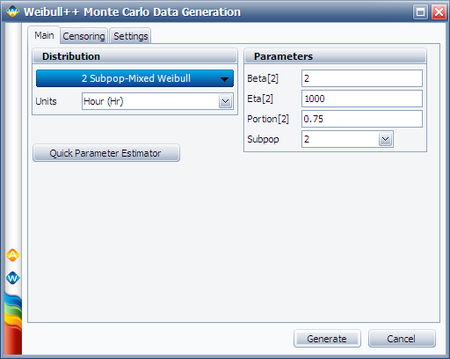

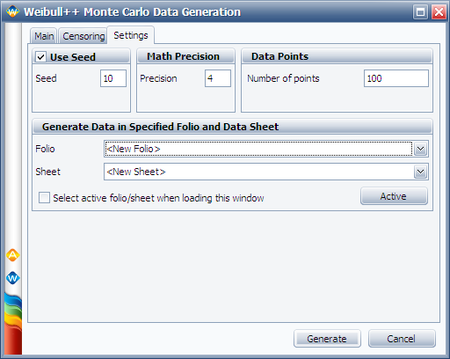

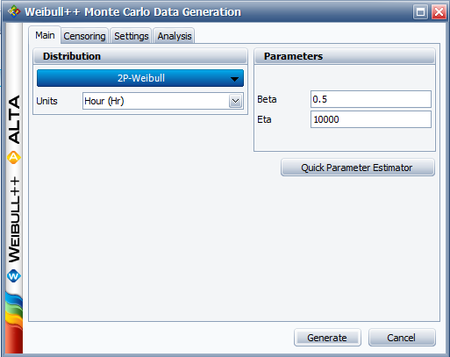

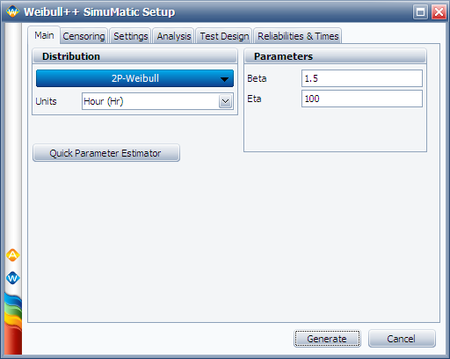

The confidence bounds for the data set can be obtained by using Weibull++'s SimuMatic utility. To obtain the results, use the following settings in SimuMatic.

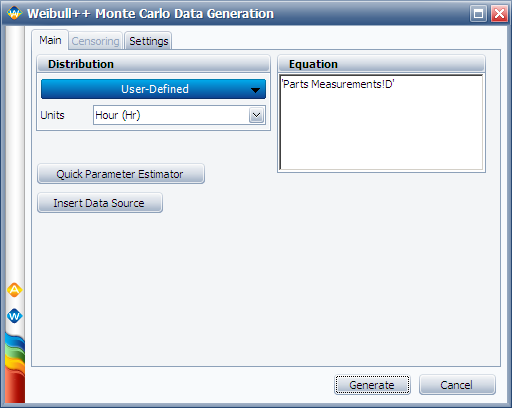

- On the Main tab, choose the 2P-Weibull distribution and enter the given parameters (i.e., [math]\displaystyle{ \beta =2\,\! }[/math] and [math]\displaystyle{ \eta =100\,\! }[/math] hours).

- On the Censoring tab, select the No censoring option.

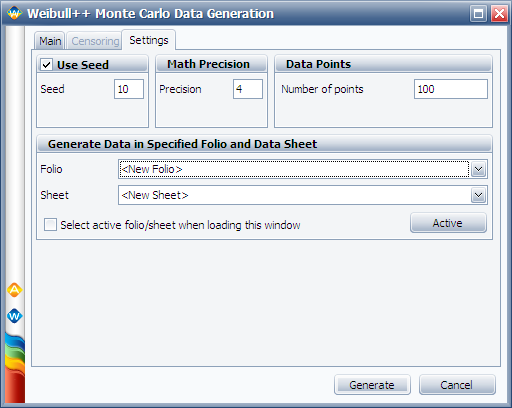

- On the Settings tab, set the number of data sets to 1,000 and the number of data points to 10.

- On the Analysis tab, choose the RRX analysis method and set the confidence bounds to 90%.

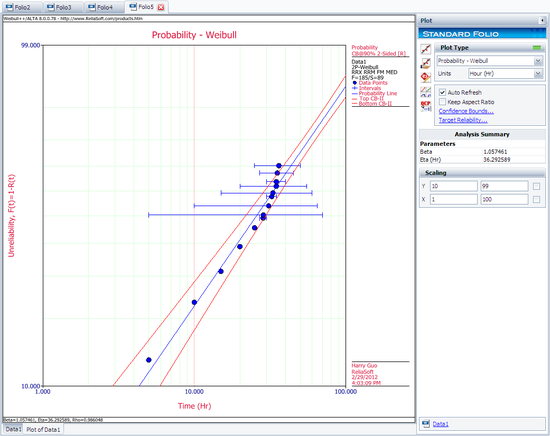

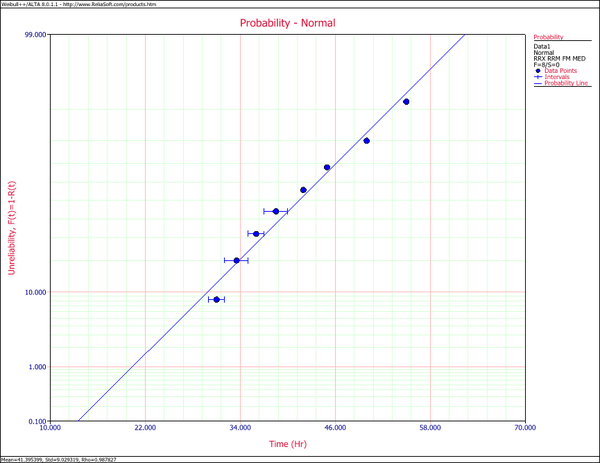

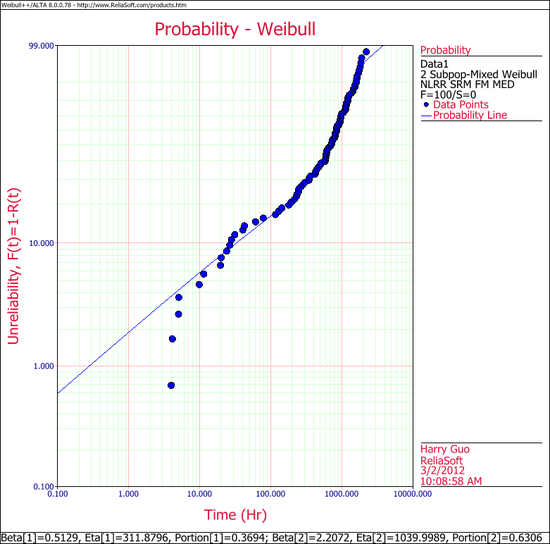

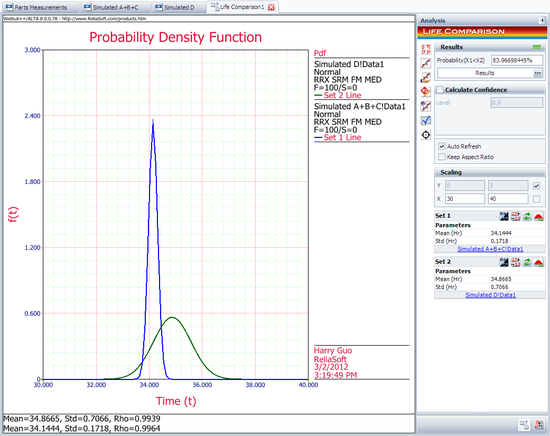

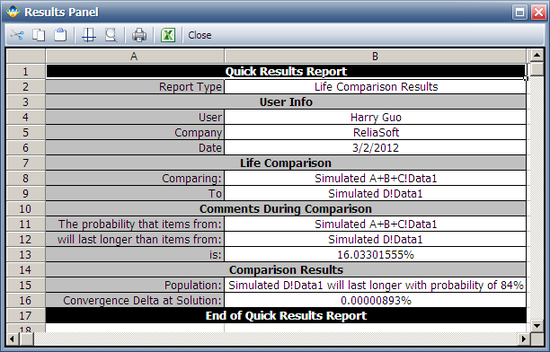

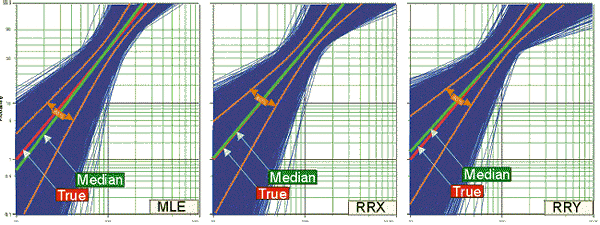

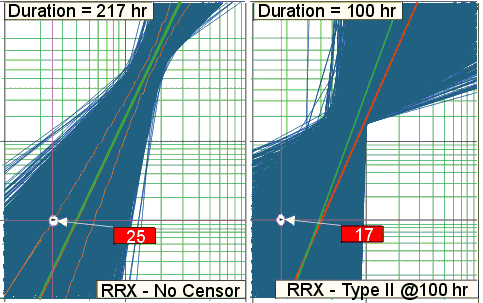

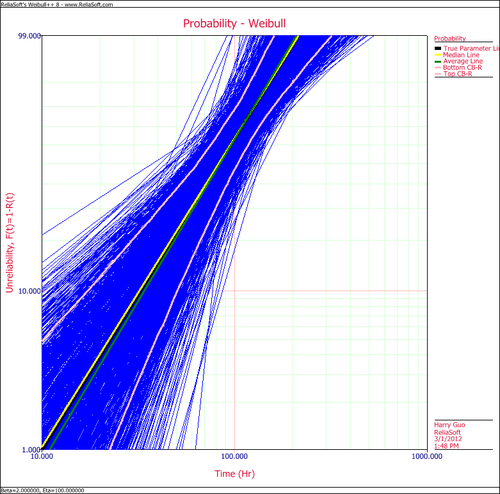

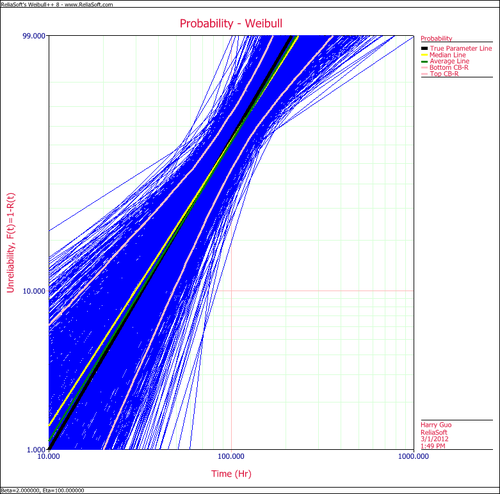

The following plot shows the simulation-based confidence bounds for the RRX parameter estimation method, as well as the expected variation due to sampling error.

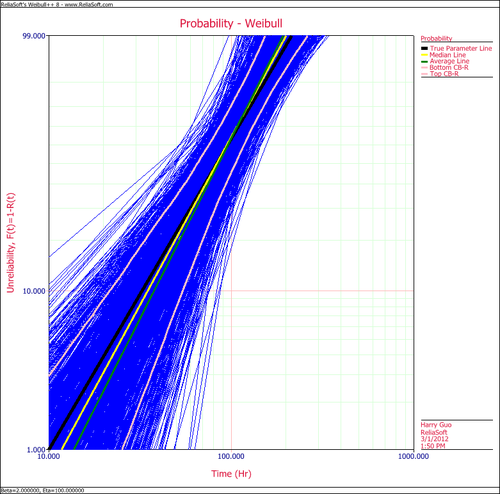

Create another SimuMatic folio and generate a second data set using the same settings, but this time, select the RRY analysis method on the Analysis tab. The following plot shows the result.

Repeating the simulation once more with the MLE analysis method selected on the Analysis tab gives the following plot.

The results clearly demonstrate that the median RRX estimate provides the least deviation from the truth for this sample size and data type. However, the MLE outputs are grouped more closely together, as evidenced by the bounds.

This experiment can be repeated in SimuMatic using multiple censoring schemes (including Type I and Type II right censoring as well as random censoring) with various distributions. Multiple experiments can be performed with this utility to evaluate assumptions about the appropriate parameter estimation method to use for data sets.
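The idea behind simulation-based bounds can be sketched outside the software. The Python sketch below is a hypothetical illustration, not the SimuMatic algorithm: it draws 1,000 data sets of 10 points from a Weibull distribution with β = 2 and η = 100 hours using `random.weibullvariate(alpha, beta)` (scale first, shape second), fits each data set by RRX, RRY and MLE, and reports the 5th and 95th percentiles of the β estimates as a 90% simulation interval. The rank regression fits use Benard's approximation to the median ranks, which is an assumption of this sketch; `rank_regression` and `mle` are illustrative names. Only β is summarized here, whereas SimuMatic reports bounds on the whole fitted line.

```python
import math
import random
import statistics

TRUE_BETA, TRUE_ETA = 2.0, 100.0   # parameters used to generate the data
N_SETS, N_POINTS = 1000, 10        # number of data sets and points per set

def rank_regression(times, on_x):
    """Weibull RRX (on_x=True) or RRY (on_x=False) with Benard median ranks."""
    n = len(times)
    xs = [math.log(t) for t in sorted(times)]
    ys = [math.log(-math.log(1 - (i - 0.3) / (n + 0.4))) for i in range(1, n + 1)]
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    if on_x:   # regress ln(t) on y: the slope is 1/beta
        beta = sum((y - my) ** 2 for y in ys) / sxy
    else:      # regress y on ln(t): the slope is beta
        beta = sxy / sum((x - mx) ** 2 for x in xs)
    return beta, math.exp(mx - my / beta)   # line passes through (mx, my)

def mle(times):
    """Complete-data Weibull MLE: bisection on the profile equation for beta."""
    logs = [math.log(t) for t in times]
    mean_log = statistics.fmean(logs)
    def g(b):   # increasing in b; its root is the MLE of beta
        w = [t ** b for t in times]
        return sum(wi * li for wi, li in zip(w, logs)) / sum(w) - 1 / b - mean_log
    lo, hi = 0.05, 50.0
    for _ in range(60):
        mid = 0.5 * (lo + hi)
        lo, hi = (lo, mid) if g(mid) > 0 else (mid, hi)
    beta = 0.5 * (lo + hi)
    return beta, statistics.fmean([t ** beta for t in times]) ** (1 / beta)

random.seed(1)
betas = {"RRX": [], "RRY": [], "MLE": []}
for _ in range(N_SETS):
    data = [random.weibullvariate(TRUE_ETA, TRUE_BETA) for _ in range(N_POINTS)]
    betas["RRX"].append(rank_regression(data, on_x=True)[0])
    betas["RRY"].append(rank_regression(data, on_x=False)[0])
    betas["MLE"].append(mle(data)[0])

summary = {}
for name, values in betas.items():
    cuts = statistics.quantiles(values, n=20)   # cuts[0] = 5th, cuts[-1] = 95th percentile
    summary[name] = (cuts[0], statistics.median(values), cuts[-1])
    print(f"{name}: 5% {cuts[0]:.3f}  median {summary[name][1]:.3f}  95% {cuts[-1]:.3f}")
```

Comparing each method's median with the true β = 2, and the widths of the intervals, mirrors the kind of comparison made from the SimuMatic plots; the exact numbers depend on the random seed.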

Exponential Distribution Examples

One Parameter Exponential Probability Plot Example

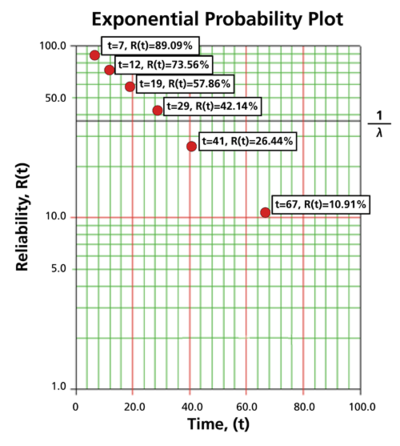

Six units are put on a life test and tested to failure. The failure times are 7, 12, 19, 29, 41, and 67 hours. Estimate the failure rate for a 1-parameter exponential distribution using the probability plotting method.

In order to plot the points for the probability plot, the appropriate reliability estimate values must be obtained. These are equal to [math]\displaystyle{ 100\%-MR\,\! }[/math], since the y-axis represents reliability and the median rank ([math]\displaystyle{ MR\,\! }[/math]) values are unreliability estimates.

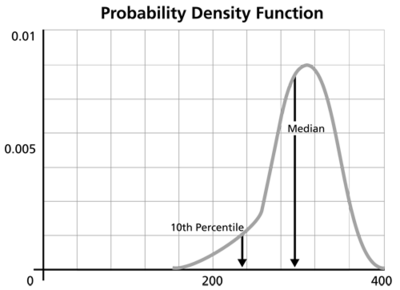

Next, these points are plotted on an exponential probability plotting paper. A sample of this type of plotting paper is shown next, with the sample points in place. Notice how these points describe a line with a negative slope.

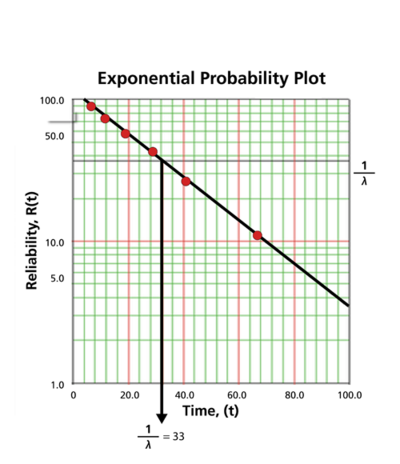

Once the points are plotted, draw the best possible straight line through these points. The time value at which this line intersects with a horizontal line drawn at the 36.8% reliability mark is the mean life, and the reciprocal of this is the failure rate [math]\displaystyle{ \lambda\,\! }[/math]. This is because at [math]\displaystyle{ t=m=\tfrac{1}{\lambda }\,\! }[/math]:

- [math]\displaystyle{ \begin{align} R(t)= & {{e}^{-\lambda \cdot t}} \\ R(t)= & {{e}^{-\lambda \cdot \tfrac{1}{\lambda }}} \\ R(t)= & {{e}^{-1}}=0.368=36.8\%. \end{align}\,\! }[/math]

The following plot shows that the best-fit line through the data points crosses the [math]\displaystyle{ R=36.8\%\,\! }[/math] line at [math]\displaystyle{ t=33\,\! }[/math] hours. Because [math]\displaystyle{ \tfrac{1}{\lambda }=33\,\! }[/math] hours, [math]\displaystyle{ \lambda =0.0303\,\! }[/math] failures/hour.
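The plotting positions and the straight line can be approximated numerically. The Python sketch below is a stand-in for the hand-drawn line under two stated assumptions: it uses Benard's approximation, MR ≈ (i − 0.3)/(n + 0.4), instead of exact median ranks, and it fits ln R = −λt by least squares on the y-axis with the line forced through the origin (the 1-parameter model has γ = 0). Because a regression replaces an eyeballed line, its λ is not expected to match the 0.0303 failures/hour read from the plot exactly.

```python
import math

times = [7, 12, 19, 29, 41, 67]   # failure times, hours
n = len(times)

# Benard's approximation to the median ranks (unreliability estimates)
mr = [(i - 0.3) / (n + 0.4) for i in range(1, n + 1)]
rel = [1 - f for f in mr]         # reliability plotting positions, 1 - MR

# 1-parameter exponential: ln R(t) = -lambda * t, a line through the origin.
# Least squares on y = ln R with zero intercept gives lambda = -sum(t*y) / sum(t^2).
y = [math.log(r) for r in rel]
lam = -sum(t * yi for t, yi in zip(times, y)) / sum(t * t for t in times)
mean_life = 1 / lam               # where the fitted line crosses R = 36.8%

for t, r in zip(times, rel):
    print(f"t = {t:3d} h   R = {100 * r:5.2f}%")
print(f"lambda = {lam:.4f} failures/hour, mean life = {mean_life:.1f} hours")
```

With these assumptions the fit gives roughly 0.032 failures/hour (a mean life of about 31 hours), in the same range as the graphical estimate.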

2 Parameter Exponential Distribution RRY

The exponential distribution is a commonly used distribution in reliability engineering. Mathematically, it is a fairly simple distribution, which often leads to its use in inappropriate situations. It is, in fact, a special case of the Weibull distribution where [math]\displaystyle{ \beta =1\,\! }[/math]. The exponential distribution is used to model the behavior of units that have a constant failure rate (or units that do not degrade with time or wear out).

Exponential Probability Density Function

The 2-Parameter Exponential Distribution

The 2-parameter exponential pdf is given by:

- [math]\displaystyle{ f(t)=\lambda {{e}^{-\lambda (t-\gamma )}},f(t)\ge 0,\lambda \gt 0,t\ge \gamma \,\! }[/math]

where [math]\displaystyle{ \gamma \,\! }[/math] is the location parameter. Some of the characteristics of the 2-parameter exponential distribution are discussed in Kececioglu [19]:

- The location parameter, [math]\displaystyle{ \gamma \,\! }[/math], if positive, shifts the beginning of the distribution by a distance of [math]\displaystyle{ \gamma \,\! }[/math] to the right of the origin, signifying that the chance failures start to occur only after [math]\displaystyle{ \gamma \,\! }[/math] hours of operation, and cannot occur before.

- The scale parameter is [math]\displaystyle{ \tfrac{1}{\lambda }=\bar{t}-\gamma =m-\gamma \,\! }[/math].

- The exponential pdf has no shape parameter, as it has only one shape.

- The distribution starts at [math]\displaystyle{ t=\gamma \,\! }[/math] at the level of [math]\displaystyle{ f(t=\gamma )=\lambda \,\! }[/math] and decreases thereafter exponentially and monotonically as [math]\displaystyle{ t\,\! }[/math] increases beyond [math]\displaystyle{ \gamma \,\! }[/math] and is convex.

- As [math]\displaystyle{ t\to \infty \,\! }[/math], [math]\displaystyle{ f(t)\to 0\,\! }[/math].

The 1-Parameter Exponential Distribution

The 1-parameter exponential pdf is obtained by setting [math]\displaystyle{ \gamma =0\,\! }[/math], and is given by:

- [math]\displaystyle{ \begin{align}f(t)= & \lambda {{e}^{-\lambda t}}=\frac{1}{m}{{e}^{-\tfrac{1}{m}t}}, & t\ge 0, \lambda \gt 0,m\gt 0 \end{align} \,\! }[/math]

where:

- [math]\displaystyle{ \lambda \,\! }[/math] = constant failure rate, in failures per unit of measurement (e.g., failures per hour, per cycle, etc.)

- [math]\displaystyle{ \lambda =\frac{1}{m}\,\! }[/math]

- [math]\displaystyle{ m\,\! }[/math] = mean time between failures, or to failure

- [math]\displaystyle{ t\,\! }[/math] = operating time, life, or age, in hours, cycles, miles, actuations, etc.

This distribution requires the knowledge of only one parameter, [math]\displaystyle{ \lambda \,\! }[/math], for its application. Some of the characteristics of the 1-parameter exponential distribution are discussed in Kececioglu [19]:

- The location parameter, [math]\displaystyle{ \gamma \,\! }[/math], is zero.

- The scale parameter is [math]\displaystyle{ \tfrac{1}{\lambda }=m\,\! }[/math].

- As [math]\displaystyle{ \lambda \,\! }[/math] is decreased in value, the distribution is stretched out to the right, and as [math]\displaystyle{ \lambda \,\! }[/math] is increased, the distribution is pushed toward the origin.

- This distribution has no shape parameter as it has only one shape, (i.e., the exponential, and the only parameter it has is the failure rate, [math]\displaystyle{ \lambda \,\! }[/math]).

- The distribution starts at [math]\displaystyle{ t=0\,\! }[/math] at the level of [math]\displaystyle{ f(t=0)=\lambda \,\! }[/math] and decreases thereafter exponentially and monotonically as [math]\displaystyle{ t\,\! }[/math] increases, and is convex.

- As [math]\displaystyle{ t\to \infty \,\! }[/math], [math]\displaystyle{ f(t)\to 0\,\! }[/math].

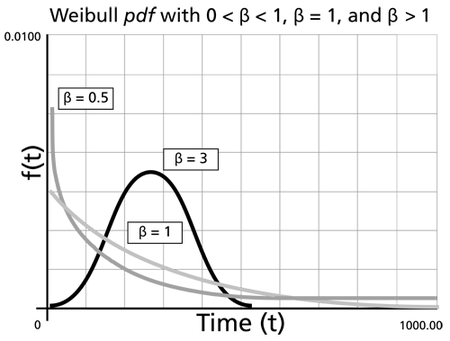

- The pdf can be thought of as a special case of the Weibull pdf with [math]\displaystyle{ \gamma =0\,\! }[/math] and [math]\displaystyle{ \beta =1\,\! }[/math].

Exponential Distribution Functions

The Mean or MTTF

The mean, [math]\displaystyle{ \overline{T},\,\! }[/math] or mean time to failure (MTTF) is given by:

- [math]\displaystyle{ \begin{align} \bar{T}= & \int_{\gamma }^{\infty }t\cdot f(t)dt \\ = & \int_{\gamma }^{\infty }t\cdot \lambda \cdot {{e}^{-\lambda (t-\gamma )}}dt \\ = & \gamma +\frac{1}{\lambda }=m \end{align}\,\! }[/math]

Note that when [math]\displaystyle{ \gamma =0\,\! }[/math], the MTTF is the inverse of the exponential distribution's constant failure rate. This is only true for the exponential distribution. Most other distributions do not have a constant failure rate. Consequently, the inverse relationship between failure rate and MTTF does not hold for these other distributions.

The Median

The median, [math]\displaystyle{ \breve{T}, \,\! }[/math] is:

- [math]\displaystyle{ \breve{T}=\gamma +\frac{\ln 2}{\lambda }\approx \gamma +\frac{0.693}{\lambda }\,\! }[/math]

The Mode

The mode, [math]\displaystyle{ \tilde{T},\,\! }[/math] is:

- [math]\displaystyle{ \tilde{T}=\gamma \,\! }[/math]

The Standard Deviation

The standard deviation, [math]\displaystyle{ {\sigma }_{T}\,\! }[/math], is:

- [math]\displaystyle{ {\sigma}_{T}=\frac{1}{\lambda }=m-\gamma \,\! }[/math]
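The closed-form mean, median, mode and standard deviation of the 2-parameter exponential distribution can be checked against a simulation. In the Python sketch below, λ = 0.01 failures/hour and γ = 100 hours are arbitrary illustration values, not taken from the text. Samples are generated as γ plus an exponential variate from `random.expovariate`, whose argument is the rate λ.

```python
import math
import random
import statistics

lam, gamma = 0.01, 100.0   # hypothetical parameters for illustration

# Closed-form characteristics of the 2-parameter exponential distribution
mean = gamma + 1 / lam
median = gamma + math.log(2) / lam
mode = gamma
std = 1 / lam

# Monte Carlo check: T = gamma + X, with X exponential at rate lam
random.seed(42)
sample = [gamma + random.expovariate(lam) for _ in range(200_000)]

print(f"mean   {mean:8.2f}  simulated {statistics.fmean(sample):8.2f}")
print(f"median {median:8.2f}  simulated {statistics.median(sample):8.2f}")
print(f"std    {std:8.2f}  simulated {statistics.pstdev(sample):8.2f}")
print(f"mode   {mode:8.2f}  (the pdf is largest at t = gamma)")
```

Note that the standard deviation equals the mean only when γ = 0; a positive location parameter shifts the mean, median and mode but leaves the spread unchanged.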

The Exponential Reliability Function

The equation for the 2-parameter exponential cumulative distribution function, or cdf, is given by:

- [math]\displaystyle{ \begin{align} F(t)=Q(t)=1-{{e}^{-\lambda (t-\gamma )}} \end{align}\,\! }[/math]

Recalling that the reliability function of a distribution is simply one minus the cdf, the reliability function of the 2-parameter exponential distribution is given by:

- [math]\displaystyle{ R(t)=1-Q(t)=1-\int_{0}^{t-\gamma }f(x)dx\,\! }[/math]

- [math]\displaystyle{ R(t)=1-\int_{0}^{t-\gamma }\lambda {{e}^{-\lambda x}}dx={{e}^{-\lambda (t-\gamma )}}\,\! }[/math]

The 1-parameter exponential reliability function is given by:

- [math]\displaystyle{ R(t)={{e}^{-\lambda t}}={{e}^{-\tfrac{t}{m}}}\,\! }[/math]

The Exponential Conditional Reliability Function

The exponential conditional reliability equation gives the reliability for a mission of [math]\displaystyle{ t\,\! }[/math] duration, having already successfully accumulated [math]\displaystyle{ T\,\! }[/math] hours of operation up to the start of this new mission. The exponential conditional reliability function is:

- [math]\displaystyle{ R(t|T)=\frac{R(T+t)}{R(T)}=\frac{{{e}^{-\lambda (T+t-\gamma )}}}{{{e}^{-\lambda (T-\gamma )}}}={{e}^{-\lambda t}}\,\! }[/math]

which says that the reliability for a mission of [math]\displaystyle{ t\,\! }[/math] duration undertaken after the component or equipment has already accumulated [math]\displaystyle{ T\,\! }[/math] hours of operation from age zero is only a function of the mission duration, and not a function of the age at the beginning of the mission. This is referred to as the memoryless property.
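The memoryless property can be confirmed numerically. The Python sketch below uses hypothetical values (λ = 0.002 per hour, γ = 50 hours) and evaluates R(t|T) = R(T + t)/R(T) for several accumulated ages T. The identity R(t|T) = e^(−λt) relies on T ≥ γ, so only such ages are used.

```python
import math

lam, gamma = 0.002, 50.0   # hypothetical failure rate (1/hour) and location (hours)

def reliability(t):
    """2-parameter exponential reliability; equal to 1 up to the location parameter."""
    return 1.0 if t <= gamma else math.exp(-lam * (t - gamma))

def conditional_reliability(t, age):
    """Reliability for a mission of length t after surviving to `age` hours."""
    return reliability(age + t) / reliability(age)

mission = 100.0
for age in (50.0, 200.0, 1000.0):
    print(f"age {age:6.0f} h: R(t|T) = {conditional_reliability(mission, age):.6f}")
print(f"exp(-lambda*t)       = {math.exp(-lam * mission):.6f}")
```

Every age gives the same conditional reliability, e^(−0.2) ≈ 0.818731, which is the memoryless property in action.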

The Exponential Reliable Life Function

The reliable life, or the mission duration for a desired reliability goal, [math]\displaystyle{ {{t}_{R}}\,\! }[/math], for the 2-parameter exponential distribution (with [math]\displaystyle{ \gamma =0\,\! }[/math] for the 1-parameter case) is:

- [math]\displaystyle{ R({{t}_{R}})={{e}^{-\lambda ({{t}_{R}}-\gamma )}}\,\! }[/math]

- [math]\displaystyle{ \begin{align} \ln[R({{t}_{R}})]=-\lambda({{t}_{R}}-\gamma ) \end{align}\,\! }[/math]

or:

- [math]\displaystyle{ {{t}_{R}}=\gamma -\frac{\ln [R({{t}_{R}})]}{\lambda }\,\! }[/math]
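A minimal Python sketch of the reliable-life formula follows. The parameter values are hypothetical, and the round trip checks that evaluating the reliability function at the computed t_R returns the requested reliability.

```python
import math

def reliable_life(target_r, lam, gamma=0.0):
    """Mission time t_R at which R(t_R) equals target_r (2-parameter exponential)."""
    return gamma - math.log(target_r) / lam

def reliability(t, lam, gamma=0.0):
    """2-parameter exponential reliability function."""
    return math.exp(-lam * (t - gamma)) if t > gamma else 1.0

lam, gamma = 0.0065, 100.0          # hypothetical values: failures/hour and hours
t90 = reliable_life(0.90, lam, gamma)
print(f"t_R for R = 90%: {t90:.2f} hours")
print(f"check: R(t_R) = {reliability(t90, lam, gamma):.4f}")
```

As a sanity check, a reliability goal of e^(−1) ≈ 36.8% with γ = 0 returns t_R = 1/λ, the mean life.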

The Exponential Failure Rate Function

The exponential failure rate function is:

- [math]\displaystyle{ \lambda (t)=\frac{f(t)}{R(t)}=\frac{\lambda {{e}^{-\lambda (t-\gamma )}}}{{{e}^{-\lambda (t-\gamma )}}}=\lambda =\text{constant}\,\! }[/math]

Once again, note that a constant failure rate is characteristic of the exponential distribution, and of other distributions only in special cases (such as the Weibull distribution with [math]\displaystyle{ \beta =1\,\! }[/math]). Most other distributions have failure rates that are functions of time.

Characteristics of the Exponential Distribution

The primary trait of the exponential distribution is that it is used for modeling the behavior of items with a constant failure rate. It has a simple mathematical form, which makes it relatively easy to manipulate. Unfortunately, this fact also leads to the use of this model in situations where it is not appropriate. For example, it would not be appropriate to use the exponential distribution to model the reliability of an automobile. The constant failure rate of the exponential distribution would require the assumption that the automobile would be just as likely to experience a breakdown during the first mile as it would during the one-hundred-thousandth mile. Clearly, this is not a valid assumption. However, some inexperienced practitioners of reliability engineering and life data analysis will overlook this fact, lured by the siren-call of the exponential distribution's relatively simple mathematical models.

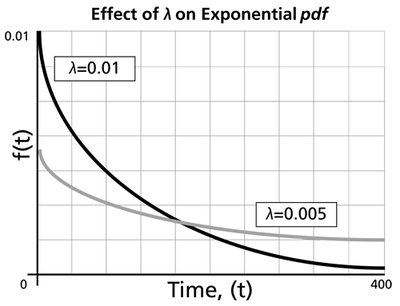

The Effect of lambda and gamma on the Exponential pdf

- The exponential pdf has no shape parameter, as it has only one shape.

- The exponential pdf is always convex and is stretched to the right as [math]\displaystyle{ \lambda \,\! }[/math] decreases in value.

- The value of the pdf is always equal to [math]\displaystyle{ \lambda \,\! }[/math] at [math]\displaystyle{ t=0\,\! }[/math] (or [math]\displaystyle{ t=\gamma \,\! }[/math]).

- The location parameter, [math]\displaystyle{ \gamma \,\! }[/math], if positive, shifts the beginning of the distribution by a distance of [math]\displaystyle{ \gamma \,\! }[/math] to the right of the origin, signifying that the chance failures start to occur only after [math]\displaystyle{ \gamma \,\! }[/math] hours of operation, and cannot occur before this time.

- The scale parameter is [math]\displaystyle{ \tfrac{1}{\lambda }=\bar{T}-\gamma =m-\gamma \,\! }[/math].

- As [math]\displaystyle{ t\to \infty \,\! }[/math], [math]\displaystyle{ f(t)\to 0\,\! }[/math].

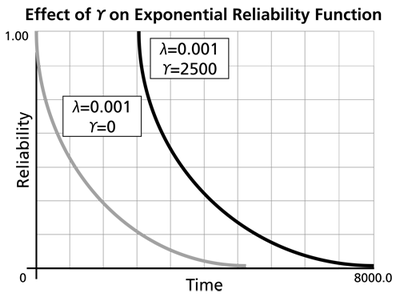

The Effect of lambda and gamma on the Exponential Reliability Function

- The 1-parameter exponential reliability function starts at the value of 100% at [math]\displaystyle{ t=0\,\! }[/math], decreases thereafter monotonically and is convex.

- The 2-parameter exponential reliability function remains at the value of 100% for [math]\displaystyle{ t=0\,\! }[/math] up to [math]\displaystyle{ t=\gamma \,\! }[/math], and decreases thereafter monotonically and is convex.

- As [math]\displaystyle{ t\to \infty \,\! }[/math], [math]\displaystyle{ R(t\to \infty )\to 0\,\! }[/math].

- The reliability for a mission duration of [math]\displaystyle{ t=m=\tfrac{1}{\lambda }\,\! }[/math], or of one MTTF duration, is always equal to [math]\displaystyle{ 0.3679\,\! }[/math] or 36.79%. This means that the reliability for a mission as long as one MTTF is relatively low, and such missions are not recommended because only 36.8% of them will be completed successfully. In other words, of the equipment undertaking such a mission, only 36.8% will survive it.

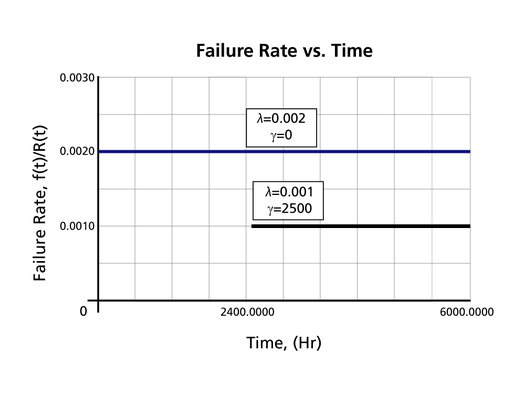

The Effect of lambda and gamma on the Failure Rate Function

- The 1-parameter exponential failure rate function is constant and starts at [math]\displaystyle{ t=0\,\! }[/math].

- The 2-parameter exponential failure rate function remains at the value of 0 from [math]\displaystyle{ t=0\,\! }[/math] up to [math]\displaystyle{ t=\gamma \,\! }[/math], and then remains at the constant value of [math]\displaystyle{ \lambda\,\! }[/math].

Exponential Distribution Examples

Grouped Data

Twenty units were reliability tested with the following results:

Table - Life Test Data

| Number of Units in Group | Time-to-Failure (hours) |
|---|---|
| 7 | 100 |
| 5 | 200 |
| 3 | 300 |
| 2 | 400 |
| 1 | 500 |
| 2 | 600 |

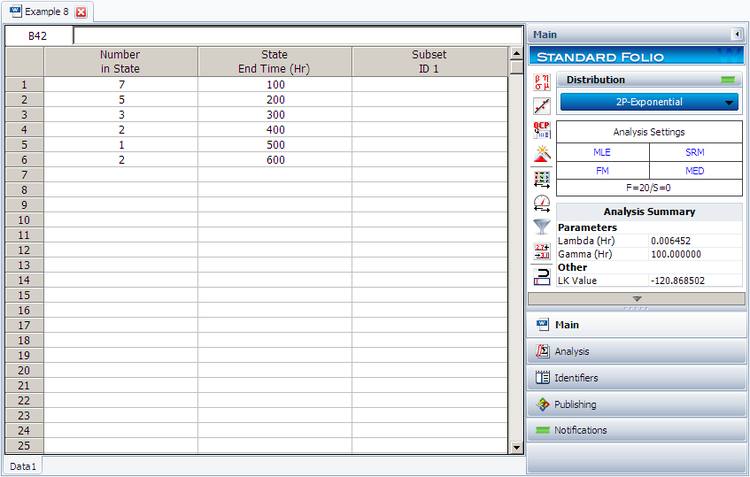

1. Assuming a 2-parameter exponential distribution, estimate the parameters by hand using the MLE analysis method.

2. Repeat the above using Weibull++. (Enter the data as grouped data to duplicate the results.)

3. Show the Probability plot for the analysis results.

4. Show the Reliability vs. Time plot for the results.

5. Show the pdf plot for the results.

6. Show the Failure Rate vs. Time plot for the results.

7. Estimate the parameters using the rank regression on Y (RRY) analysis method (and using grouped ranks).

Solution

1. For the 2-parameter exponential distribution and for [math]\displaystyle{ \hat{\gamma }=100\,\! }[/math] hours (first failure), the partial derivative of the log-likelihood function with respect to [math]\displaystyle{ \lambda\,\! }[/math] becomes:

- [math]\displaystyle{ \begin{align} \frac{\partial \Lambda }{\partial \lambda }= &\underset{i=1}{\overset{6}{\mathop \sum }}\,{N_i} \left[ \frac{1}{\lambda }-\left( {{T}_{i}}-100 \right) \right]=0\\ \Rightarrow & 7[\frac{1}{\lambda }-(100-100)]+5[\frac{1}{\lambda}-(200-100)] + \ldots +2[\frac{1}{\lambda}-(600-100)]\\ = & 0\\ \Rightarrow & \hat{\lambda}=\frac{20}{3100}=0.0065 \text{fr/hr} \end{align} \,\! }[/math]

2. Enter the data in a Weibull++ standard folio and calculate it as shown next.

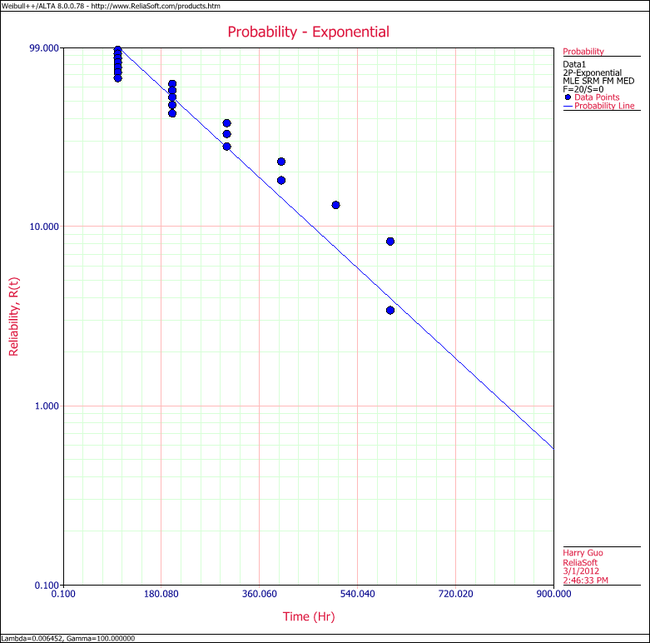

3. On the Plot page of the folio, the exponential Probability plot will appear as shown next.

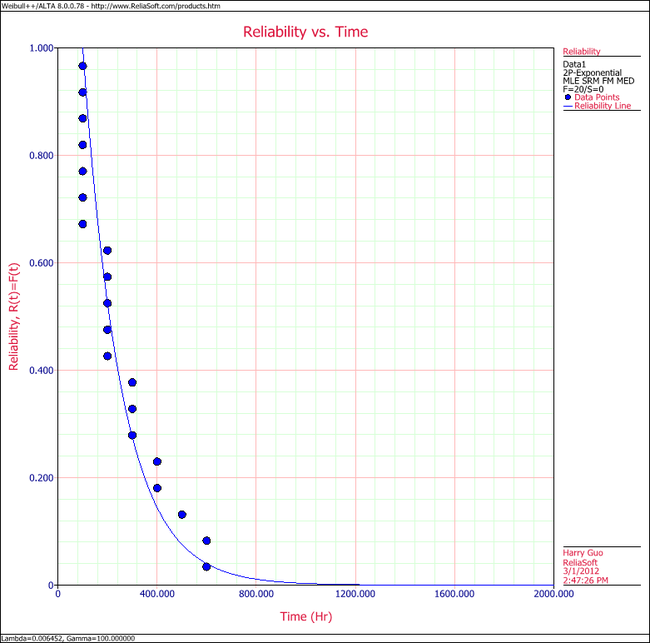

4. View the Reliability vs. Time plot.

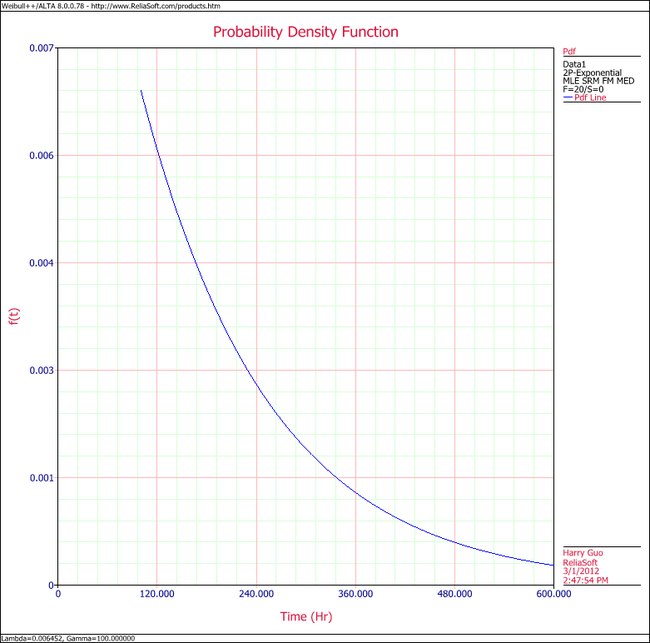

5. View the pdf plot.

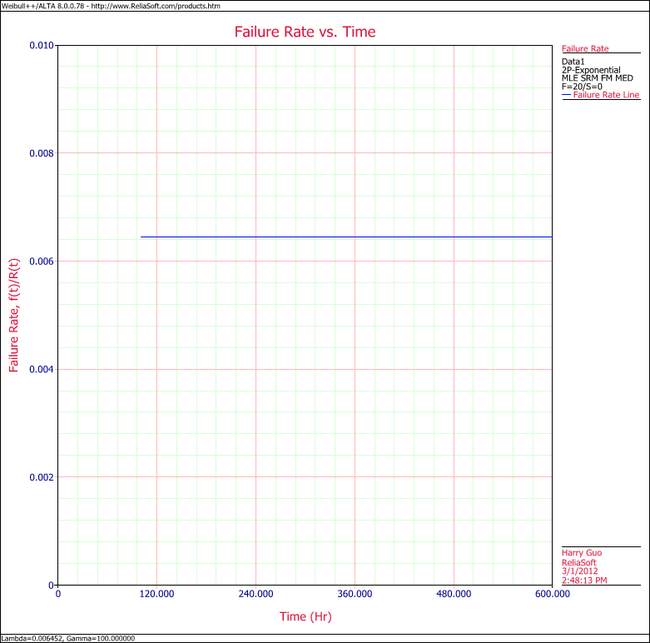

6. View the Failure Rate vs. Time plot.

Note that, as described at the beginning of this chapter, the failure rate for the exponential distribution is constant. Also note that the Failure Rate vs. Time plot does show values for times before the location parameter, [math]\displaystyle{ \gamma \,\! }[/math], at 100 hours.

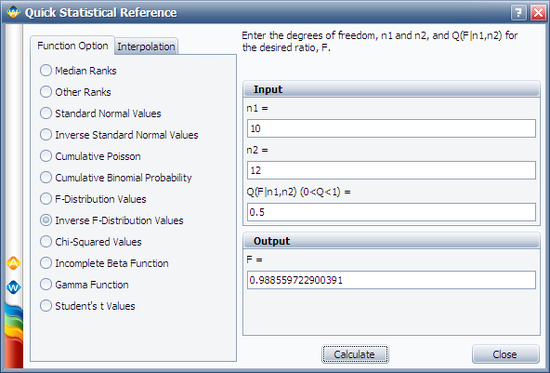

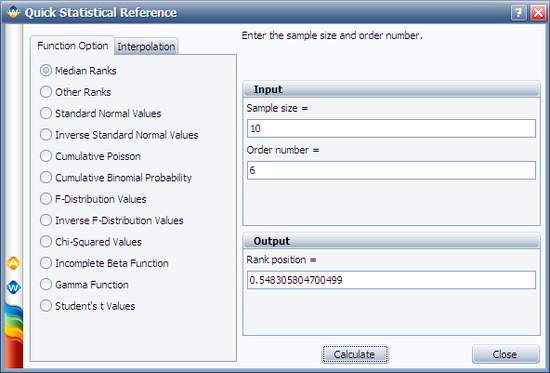

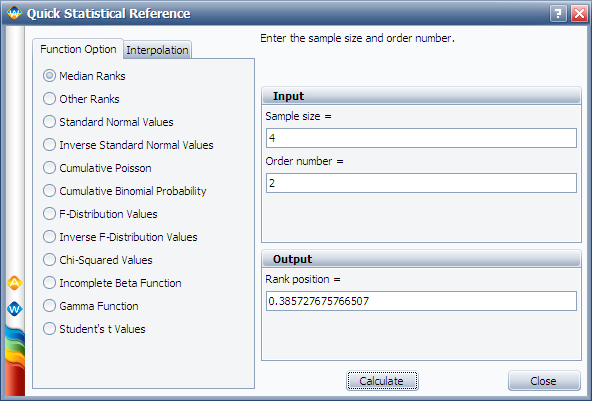

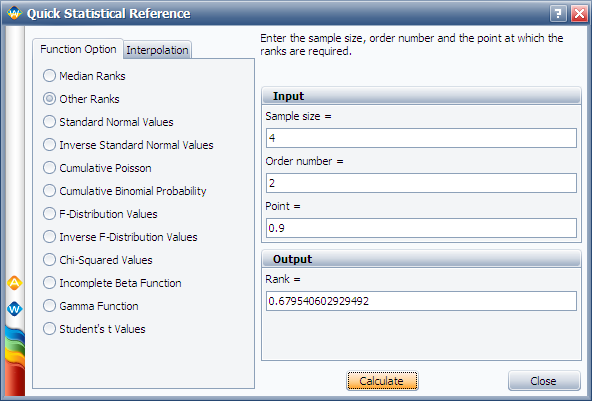

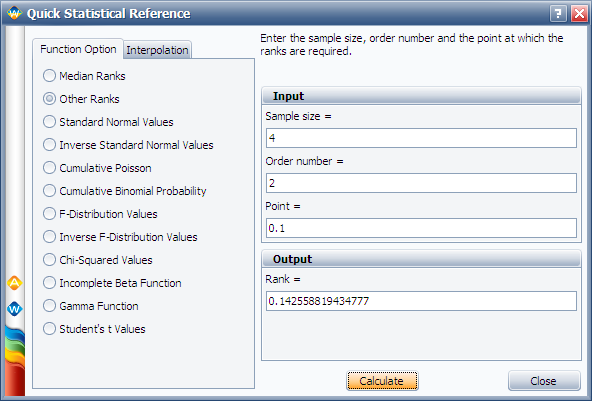

7. In the case of grouped data, one must be cautious when estimating the parameters using a rank regression method. This is because the median rank values are determined from the total number of failures observed by time [math]\displaystyle{ {{T}_{i}}\,\! }[/math], where [math]\displaystyle{ i\,\! }[/math] indicates the group number. In this example, the total number of groups is [math]\displaystyle{ N=6\,\! }[/math] and the total number of units is [math]\displaystyle{ {{N}_{T}}=20\,\! }[/math]. Thus, the [math]\displaystyle{ {{i}^{th}}\,\! }[/math] median rank value is estimated for a sample of 20 units, using as the rank the total number of units failed up to and including the [math]\displaystyle{ {{i}^{th}}\,\! }[/math] group ([math]\displaystyle{ {{N}_{{{F}_{i}}}}\,\! }[/math]). The median rank values can be found from rank tables or they can be estimated using ReliaSoft's Quick Statistical Reference tool.

For example, the median rank value of the fourth group will be the [math]\displaystyle{ {{17}^{th}}\,\! }[/math] rank out of a sample size of twenty units (or 81.945%).

The following table is then constructed.

Given the values in the table above, calculate [math]\displaystyle{ \hat{a}\,\! }[/math] and [math]\displaystyle{ \hat{b}\,\! }[/math]:

- [math]\displaystyle{ \begin{align} \hat{b}= & \frac{\underset{i=1}{\overset{6}{\mathop{\sum }}}\,{{t}_{i}}{{y}_{i}}-(\underset{i=1}{\overset{6}{\mathop{\sum }}}\,{{t}_{i}})(\underset{i=1}{\overset{6}{\mathop{\sum }}}\,{{y}_{i}})/6}{\underset{i=1}{\overset{6}{\mathop{\sum }}}\,t_{i}^{2}-{{(\underset{i=1}{\overset{6}{\mathop{\sum }}}\,{{t}_{i}})}^{2}}/6} \\ = & \frac{-4320.3362-(2100)(-9.6476)/6}{910,000-{{(2100)}^{2}}/6} \end{align}\,\! }[/math]

or:

- [math]\displaystyle{ \hat{b}=-0.005392\,\! }[/math]

and:

- [math]\displaystyle{ \hat{a}=\overline{y}-\hat{b}\overline{t}=\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}}}{N}-\hat{b}\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{t}_{i}}}{N}\,\! }[/math]

or:

- [math]\displaystyle{ \hat{a}=\frac{-9.6476}{6}-(-0.005392)\frac{2100}{6}=0.2793\,\! }[/math]

Therefore:

- [math]\displaystyle{ \hat{\lambda }=-\hat{b}=-(-0.005392)=0.005392\text{ failures/hour}\,\! }[/math]

and:

- [math]\displaystyle{ \hat{\gamma }=\frac{\hat{a}}{\hat{\lambda }}=\frac{0.2793}{0.005392}\,\! }[/math]

or:

- [math]\displaystyle{ \hat{\gamma }\simeq 51.8\text{ hours}\,\! }[/math]

Then:

- [math]\displaystyle{ f(T)=(0.005392){{e}^{-0.005392(T-51.8)}}\,\! }[/math]

Using Weibull++, the estimated parameters are:

- [math]\displaystyle{ \begin{align} \hat{\lambda }= & 0.0054\text{ failures/hour} \\ \hat{\gamma }= & 51.82\text{ hours} \end{align}\,\! }[/math]

The small differences from the Weibull++ values are due to rounding. In the application, the calculations and the rank values are carried to the [math]\displaystyle{ 15^{th}\,\! }[/math] decimal place.
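The regression arithmetic above can be checked with a short Python sketch that works directly from the column sums quoted in the example (N = 6, Σt = 2100, Σy = -9.6476, Σty = -4320.3362, Σt² = 910,000). As in the example, it assumes y = ln[1 - F(t)] = λγ - λt, so the fitted slope is -λ and the intercept is λγ. The function name is hypothetical.

```python
def rry_exponential_from_sums(n, sum_t, sum_y, sum_ty, sum_t2):
    """Rank regression on Y for the 2-parameter exponential, from column sums.

    Fits y = a + b*t by least squares, where y = ln(1 - F(t)) = lambda*gamma - lambda*t,
    so lambda = -b and gamma = a / lambda.
    """
    b = (sum_ty - sum_t * sum_y / n) / (sum_t2 - sum_t**2 / n)  # slope
    a = sum_y / n - b * sum_t / n                               # intercept: y_bar - b * t_bar
    lam = -b
    gamma = a / lam
    return a, b, lam, gamma


# Column sums quoted in the example (N = 6 groups)
a, b, lam, gamma = rry_exponential_from_sums(
    n=6, sum_t=2100.0, sum_y=-9.6476, sum_ty=-4320.3362, sum_t2=910_000.0
)
print(f"b = {b:.6f}, a = {a:.4f}")
print(f"lambda = {lam:.6f} failures/hour, gamma = {gamma:.2f} hours")
```

Carrying the slope at full precision gives a ≈ 0.2794 and γ ≈ 51.82 hours, which matches the Weibull++ result; the hand calculation's 0.2793 and 51.8 come from rounding b to -0.005392 before computing a.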

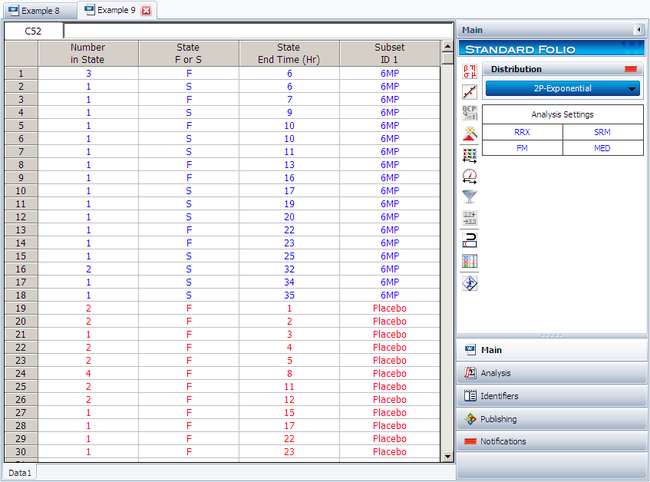

Using Auto Batch Run

A number of leukemia patients were treated with either drug 6MP or a placebo, and the times in weeks until cancer symptoms returned were recorded. Analyze each treatment separately [21, p.175].

Table - Leukemia Treatment Results

| Time (weeks) | Number of Patients | Treatment | Comments |
|---|---|---|---|
| 1 | 2 | placebo | |
| 2 | 2 | placebo | |
| 3 | 1 | placebo | |
| 4 | 2 | placebo | |
| 5 | 2 | placebo | |
| 6 | 4 | 6MP | 3 patients completed |
| 7 | 1 | 6MP | |
| 8 | 4 | placebo | |
| 9 | 1 | 6MP | Not completed |
| 10 | 2 | 6MP | 1 patient completed |
| 11 | 2 | placebo | |
| 11 | 1 | 6MP | Not completed |
| 12 | 2 | placebo | |
| 13 | 1 | 6MP | |
| 15 | 1 | placebo | |
| 16 | 1 | 6MP | |
| 17 | 1 | placebo | |
| 17 | 1 | 6MP | Not completed |
| 19 | 1 | 6MP | Not completed |
| 20 | 1 | 6MP | Not completed |
| 22 | 1 | placebo | |
| 22 | 1 | 6MP | |
| 23 | 1 | placebo | |
| 23 | 1 | 6MP | |
| 25 | 1 | 6MP | Not completed |
| 32 | 2 | 6MP | Not completed |
| 34 | 1 | 6MP | Not completed |
| 35 | 1 | 6MP | Not completed |

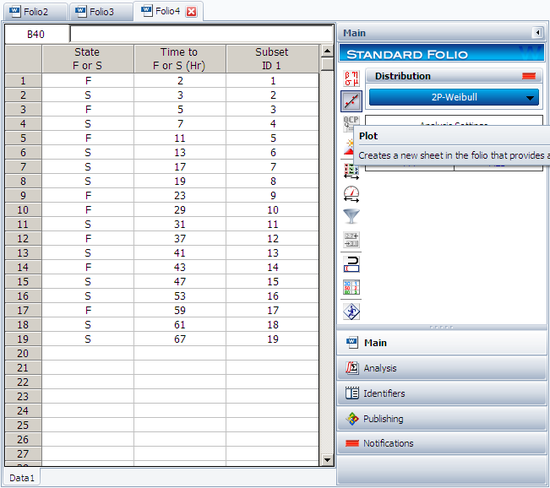

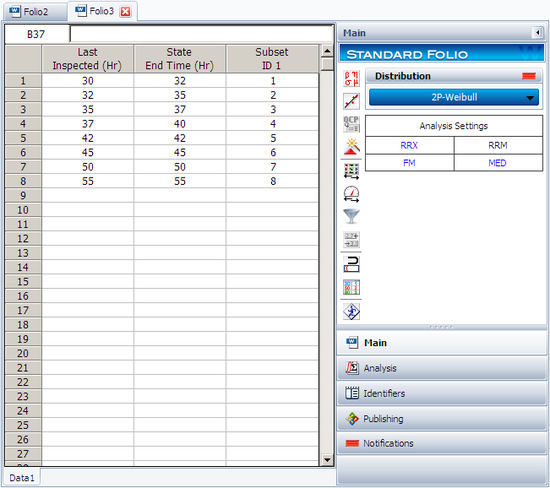

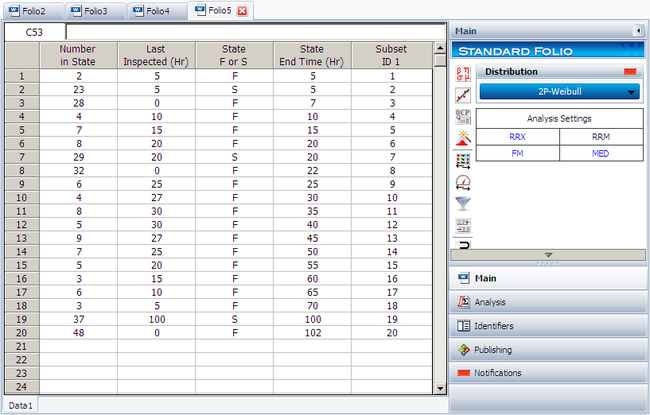

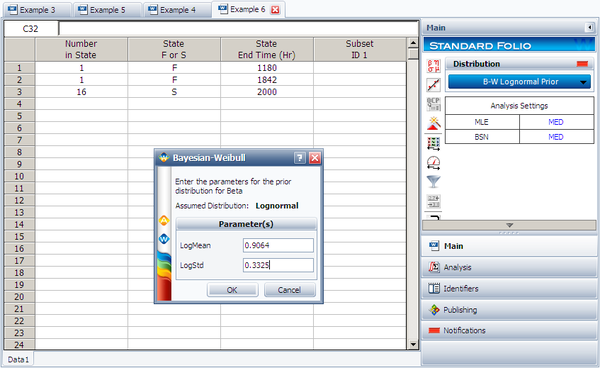

Create a new Weibull++ standard folio that is configured for grouped times-to-failure data with suspensions. In the first column, enter the number of patients. Whenever some patients in a group did not complete the test, enter the patients who completed the test separately from those who did not (e.g., if symptoms returned after 6 weeks for 4 patients and only 3 of them completed the test, enter 3 in one row and 1 in another). In the second column, enter F if the patients completed the test and S if they did not. In the third column, enter the time, and in the fourth column (Subset ID), specify whether the 6MP drug or a placebo was used.

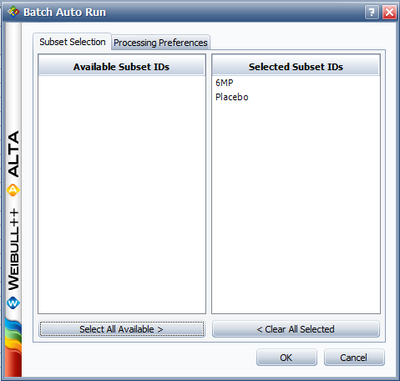

Next, open the Batch Auto Run utility and choose to separate the 6MP drug from the placebo, as shown next.

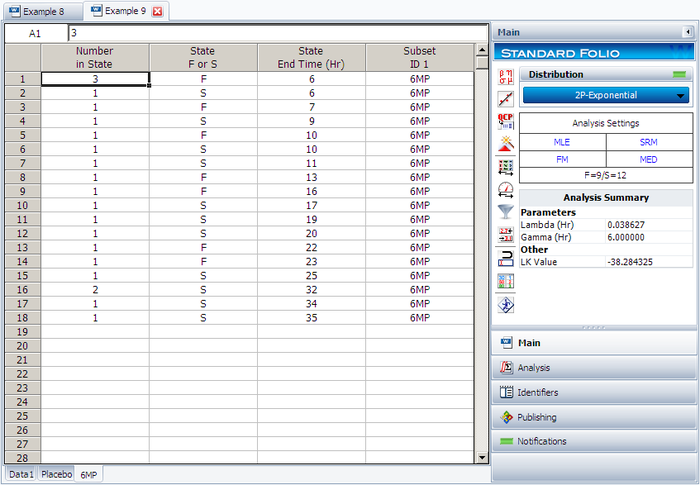

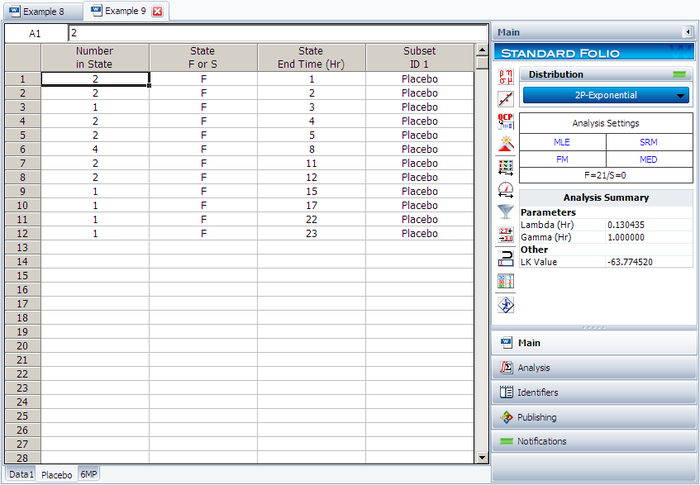

The software will create two data sheets, one for each subset ID, as shown next.

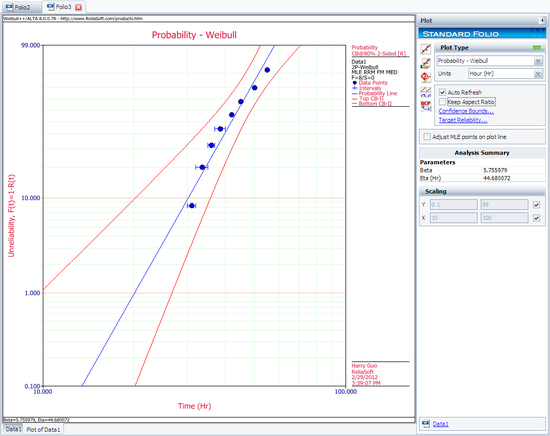

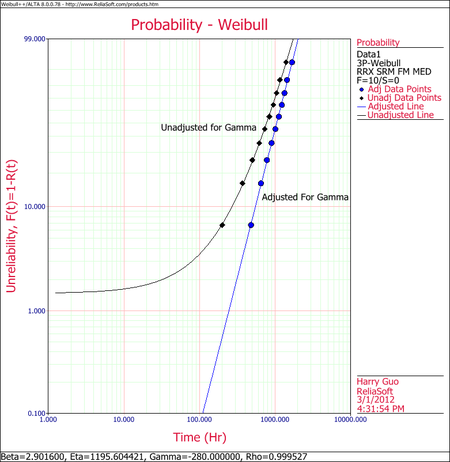

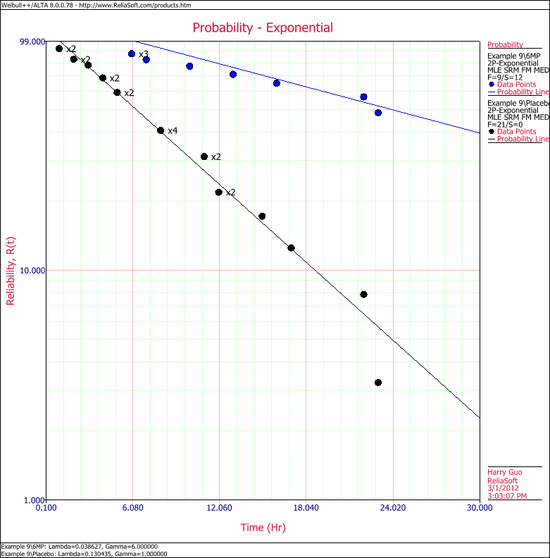

Calculate both data sheets using the 2-parameter exponential distribution and the MLE analysis method. Then insert an additional plot and set it to show the analysis results for both data sheets; the plot will appear as shown next.
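To make the effect of splitting by subset ID concrete, the following Python sketch groups the folio rows by treatment and fits each subset separately. It is an illustration under stated assumptions, not the Weibull++ algorithm: the function names are hypothetical, rows follow the F/S convention described above (F = completed, S = not completed), and γ is fixed at the earliest failure time, as in the grouped-data hand calculation, so that the MLE reduces to λ̂ = (number of failures) / Σ(t - γ̂) with suspensions contributing exposure time only. Weibull++ may estimate γ differently, so the folio results need not match these numbers.

```python
from collections import defaultdict

# Folio rows: (number of patients, "F" or "S", time in weeks, subset ID)
rows = [
    (2, "F", 1, "placebo"), (2, "F", 2, "placebo"), (1, "F", 3, "placebo"),
    (2, "F", 4, "placebo"), (2, "F", 5, "placebo"),
    (3, "F", 6, "6MP"), (1, "S", 6, "6MP"), (1, "F", 7, "6MP"),
    (4, "F", 8, "placebo"), (1, "S", 9, "6MP"),
    (1, "F", 10, "6MP"), (1, "S", 10, "6MP"),
    (2, "F", 11, "placebo"), (1, "S", 11, "6MP"), (2, "F", 12, "placebo"),
    (1, "F", 13, "6MP"), (1, "F", 15, "placebo"), (1, "F", 16, "6MP"),
    (1, "F", 17, "placebo"), (1, "S", 17, "6MP"), (1, "S", 19, "6MP"),
    (1, "S", 20, "6MP"), (1, "F", 22, "placebo"), (1, "F", 22, "6MP"),
    (1, "F", 23, "placebo"), (1, "F", 23, "6MP"), (1, "S", 25, "6MP"),
    (2, "S", 32, "6MP"), (1, "S", 34, "6MP"), (1, "S", 35, "6MP"),
]


def split_by_subset(rows):
    """Group rows by subset ID, one list per subset (like one data sheet each)."""
    sheets = defaultdict(list)
    for count, state, time, subset in rows:
        sheets[subset].append((count, state, time))
    return dict(sheets)


def exp2_mle(sheet):
    """2-parameter exponential MLE with right-censored (S) rows.

    Assumes gamma is fixed at the earliest failure time, so that
    lambda = (number of failures) / sum over all units of (t - gamma).
    """
    gamma = min(time for _, state, time in sheet if state == "F")
    failures = sum(count for count, state, _ in sheet if state == "F")
    exposure = sum(count * (time - gamma) for count, _, time in sheet)
    return failures / exposure, gamma


for subset, sheet in split_by_subset(rows).items():
    lam, gamma = exp2_mle(sheet)
    print(f"{subset}: gamma = {gamma} weeks, lambda = {lam:.4f} per week")
```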

2 Parameter Exponential Distribution RRX

The exponential distribution is a commonly used distribution in reliability engineering. Mathematically, it is a fairly simple distribution, which often leads to its use in inappropriate situations. It is, in fact, a special case of the Weibull distribution where [math]\displaystyle{ \beta =1\,\! }[/math]. The exponential distribution is used to model the behavior of units that have a constant failure rate (that is, units that do not degrade or wear out with time).

Exponential Probability Density Function

The 2-Parameter Exponential Distribution

The 2-parameter exponential pdf is given by:

- [math]\displaystyle{ f(t)=\lambda {{e}^{-\lambda (t-\gamma )}},f(t)\ge 0,\lambda \gt 0,t\ge \gamma \,\! }[/math]

where [math]\displaystyle{ \gamma \,\! }[/math] is the location parameter. Some of the characteristics of the 2-parameter exponential distribution are discussed in Kececioglu [19]:

- The location parameter, [math]\displaystyle{ \gamma \,\! }[/math], if positive, shifts the beginning of the distribution by a distance of [math]\displaystyle{ \gamma \,\! }[/math] to the right of the origin, signifying that the chance failures start to occur only after [math]\displaystyle{ \gamma \,\! }[/math] hours of operation, and cannot occur before.

- The scale parameter is [math]\displaystyle{ \tfrac{1}{\lambda }=\bar{T}-\gamma =m-\gamma \,\! }[/math].

- The exponential pdf has no shape parameter, as it has only one shape.

- The distribution starts at [math]\displaystyle{ t=\gamma \,\! }[/math] at the level of [math]\displaystyle{ f(t=\gamma )=\lambda \,\! }[/math] and decreases thereafter exponentially and monotonically as [math]\displaystyle{ t\,\! }[/math] increases beyond [math]\displaystyle{ \gamma \,\! }[/math] and is convex.

- As [math]\displaystyle{ t\to \infty \,\! }[/math], [math]\displaystyle{ f(t)\to 0\,\! }[/math].

The 1-Parameter Exponential Distribution

The 1-parameter exponential pdf is obtained by setting [math]\displaystyle{ \gamma =0\,\! }[/math], and is given by:

- [math]\displaystyle{ \begin{align}f(t)= & \lambda {{e}^{-\lambda t}}=\frac{1}{m}{{e}^{-\tfrac{1}{m}t}}, & t\ge 0, \lambda \gt 0,m\gt 0 \end{align} \,\! }[/math]

where:

- [math]\displaystyle{ \lambda \,\! }[/math] = constant failure rate, in failures per unit of measurement (e.g., failures per hour, per cycle, etc.)

- [math]\displaystyle{ \lambda =\frac{1}{m}\,\! }[/math]

- [math]\displaystyle{ m\,\! }[/math] = mean time between failures, or to failure

- [math]\displaystyle{ t\,\! }[/math] = operating time, life, or age, in hours, cycles, miles, actuations, etc.

This distribution requires the knowledge of only one parameter, [math]\displaystyle{ \lambda \,\! }[/math], for its application. Some of the characteristics of the 1-parameter exponential distribution are discussed in Kececioglu [19]:

- The location parameter, [math]\displaystyle{ \gamma \,\! }[/math], is zero.

- The scale parameter is [math]\displaystyle{ \tfrac{1}{\lambda }=m\,\! }[/math].

- As [math]\displaystyle{ \lambda \,\! }[/math] is decreased in value, the distribution is stretched out to the right, and as [math]\displaystyle{ \lambda \,\! }[/math] is increased, the distribution is pushed toward the origin.

- This distribution has no shape parameter, as it has only one shape (the exponential); its only parameter is the failure rate, [math]\displaystyle{ \lambda \,\! }[/math].

- The distribution starts at [math]\displaystyle{ t=0\,\! }[/math] at the level of [math]\displaystyle{ f(t=0)=\lambda \,\! }[/math] and decreases thereafter exponentially and monotonically as [math]\displaystyle{ t\,\! }[/math] increases, and is convex.

- As [math]\displaystyle{ t\to \infty \,\! }[/math], [math]\displaystyle{ f(t)\to 0\,\! }[/math].

- The pdf can be thought of as a special case of the Weibull pdf with [math]\displaystyle{ \gamma =0\,\! }[/math] and [math]\displaystyle{ \beta =1\,\! }[/math].

Exponential Distribution Functions

The Mean or MTTF

The mean, [math]\displaystyle{ \overline{T},\,\! }[/math] or mean time to failure (MTTF) is given by:

- [math]\displaystyle{ \begin{align} \bar{T}= & \int_{\gamma }^{\infty }t\cdot f(t)dt \\ = & \int_{\gamma }^{\infty }t\cdot \lambda \cdot {{e}^{-\lambda (t-\gamma )}}dt \\ = & \gamma +\frac{1}{\lambda }=m \end{align}\,\! }[/math]

Note that when [math]\displaystyle{ \gamma =0\,\! }[/math], the MTTF is the inverse of the exponential distribution's constant failure rate. This is only true for the exponential distribution. Most other distributions do not have a constant failure rate. Consequently, the inverse relationship between failure rate and MTTF does not hold for these other distributions.

The Median

The median, [math]\displaystyle{ \breve{T}, \,\! }[/math] is:

- [math]\displaystyle{ \breve{T}=\gamma +\frac{\ln 2}{\lambda}\approx \gamma +\frac{0.693}{\lambda} \,\! }[/math]

The Mode

The mode, [math]\displaystyle{ \tilde{T},\,\! }[/math] is:

- [math]\displaystyle{ \tilde{T}=\gamma \,\! }[/math]

The Standard Deviation

The standard deviation, [math]\displaystyle{ {\sigma }_{T}\,\! }[/math], is:

- [math]\displaystyle{ {\sigma}_{T}=\frac{1}{\lambda }=m-\gamma \,\! }[/math]
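As a numerical companion to the formulas above, the following Python sketch evaluates the mean, median, mode and standard deviation of a 2-parameter exponential distribution. The function name is hypothetical and the parameter values in the usage line (λ = 0.01, γ = 100) are arbitrary illustrative inputs. Note that the standard deviation does not depend on γ, so it equals the MTTF only when γ = 0.

```python
import math


def exp2_summary(lam: float, gamma: float = 0.0) -> dict:
    """Mean, median, mode and standard deviation of a 2-parameter exponential."""
    if lam <= 0:
        raise ValueError("lambda must be positive")
    return {
        "mean": gamma + 1.0 / lam,              # MTTF = gamma + 1/lambda
        "median": gamma + math.log(2.0) / lam,  # ln 2 is approximately 0.693
        "mode": gamma,                          # the pdf peaks at t = gamma
        "std": 1.0 / lam,                       # unaffected by the location shift gamma
    }


stats = exp2_summary(lam=0.01, gamma=100.0)
print(stats)
```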

The Exponential Reliability Function

The equation for the 2-parameter exponential cumulative distribution function, or cdf, is given by:

- [math]\displaystyle{ \begin{align} F(t)=Q(t)=1-{{e}^{-\lambda (t-\gamma )}} \end{align}\,\! }[/math]

Recalling that the reliability function of a distribution is simply one minus the cdf, the reliability function of the 2-parameter exponential distribution is given by:

- [math]\displaystyle{ R(t)=1-Q(t)=1-\int_{\gamma }^{t}f(x)dx\,\! }[/math]

- [math]\displaystyle{ R(t)=1-\int_{\gamma }^{t}\lambda {{e}^{-\lambda (x-\gamma )}}dx={{e}^{-\lambda (t-\gamma )}}\,\! }[/math]

The 1-parameter exponential reliability function is given by:

- [math]\displaystyle{ R(t)={{e}^{-\lambda t}}={{e}^{-\tfrac{t}{m}}}\,\! }[/math]
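The pdf, cdf, reliability and failure rate functions can be written directly from these equations. In this Python sketch (hypothetical function names), times before γ are handled explicitly: the pdf and failure rate are 0 and the reliability is 1 there, consistent with the characteristics of the 2-parameter form described in this chapter. The usage lines plug in the grouped-data MLE estimates from this chapter's example (λ = 20/3100, γ = 100 hours) purely as sample inputs.

```python
import math


def exp2_pdf(t: float, lam: float, gamma: float = 0.0) -> float:
    """f(t) = lambda * exp(-lambda (t - gamma)) for t >= gamma, else 0."""
    return lam * math.exp(-lam * (t - gamma)) if t >= gamma else 0.0


def exp2_reliability(t: float, lam: float, gamma: float = 0.0) -> float:
    """R(t) = exp(-lambda (t - gamma)) for t >= gamma; R(t) = 1 before gamma."""
    return math.exp(-lam * (t - gamma)) if t >= gamma else 1.0


def exp2_cdf(t: float, lam: float, gamma: float = 0.0) -> float:
    """F(t) = Q(t) = 1 - R(t)."""
    return 1.0 - exp2_reliability(t, lam, gamma)


def exp2_failure_rate(t: float, lam: float, gamma: float = 0.0) -> float:
    """f(t)/R(t) simplifies to lambda after gamma (0 before it); avoids 0/0 if R underflows."""
    return lam if t >= gamma else 0.0


lam, gamma = 20 / 3100, 100.0
for t in (50.0, 100.0, 200.0, 400.0):
    print(t, exp2_pdf(t, lam, gamma), exp2_cdf(t, lam, gamma),
          exp2_reliability(t, lam, gamma), exp2_failure_rate(t, lam, gamma))
```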

The Exponential Conditional Reliability Function

The exponential conditional reliability equation gives the reliability for a mission of [math]\displaystyle{ t\,\! }[/math] duration, having already successfully accumulated [math]\displaystyle{ T\,\! }[/math] hours of operation up to the start of this new mission. The exponential conditional reliability function is:

- [math]\displaystyle{ R(t|T)=\frac{R(T+t)}{R(T)}=\frac{{{e}^{-\lambda (T+t-\gamma )}}}{{{e}^{-\lambda (T-\gamma )}}}={{e}^{-\lambda t}}\,\! }[/math]

which says that the reliability for a mission of [math]\displaystyle{ t\,\! }[/math] duration undertaken after the component or equipment has already accumulated [math]\displaystyle{ T\,\! }[/math] hours of operation from age zero is only a function of the mission duration, and not a function of the age at the beginning of the mission. This is referred to as the memoryless property.
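The memoryless property is easy to confirm numerically. The Python sketch below (illustrative parameter values, hypothetical function names) computes R(t|T) = R(T+t)/R(T) for several starting ages T. One detail the derivation leaves implicit: for the 2-parameter form, the cancellation requires T ≥ γ, because for T < γ the unit is still in its failure-free period and R(T) = 1.

```python
import math


def reliability(t: float, lam: float, gamma: float = 0.0) -> float:
    """2-parameter exponential reliability: exp(-lambda (t - gamma)) for t >= gamma, else 1."""
    return math.exp(-lam * (t - gamma)) if t >= gamma else 1.0


def conditional_reliability(t: float, T: float, lam: float, gamma: float = 0.0) -> float:
    """R(t | T) = R(T + t) / R(T): reliability for a new mission of length t at age T."""
    return reliability(T + t, lam, gamma) / reliability(T, lam, gamma)


lam, gamma, mission = 0.002, 50.0, 100.0  # illustrative values only
for age in (50.0, 500.0, 5000.0):
    # Both columns agree once age >= gamma: the result depends only on the mission length
    print(age, conditional_reliability(mission, age, lam, gamma), math.exp(-lam * mission))
```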

The Exponential Reliable Life Function

The reliable life, or the mission duration for a desired reliability goal, [math]\displaystyle{ {{t}_{R}}\,\! }[/math], for the 2-parameter exponential distribution is:

- [math]\displaystyle{ R({{t}_{R}})={{e}^{-\lambda ({{t}_{R}}-\gamma )}}\,\! }[/math]

- [math]\displaystyle{ \begin{align} \ln[R({{t}_{R}})]=-\lambda({{t}_{R}}-\gamma ) \end{align}\,\! }[/math]

or:

- [math]\displaystyle{ {{t}_{R}}=\gamma -\frac{\ln [R({{t}_{R}})]}{\lambda }\,\! }[/math]
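The reliable life equation translates directly into code. This Python sketch (hypothetical function name) computes t_R for a target reliability; as a sample input only, it uses the grouped-data MLE estimates λ = 20/3100 failures/hour and γ = 100 hours from this chapter's example.

```python
import math


def reliable_life(target_r: float, lam: float, gamma: float = 0.0) -> float:
    """Mission time t_R at which R(t_R) equals target_r: t_R = gamma - ln(R) / lambda."""
    if not 0.0 < target_r <= 1.0:
        raise ValueError("target reliability must lie in (0, 1]")
    return gamma - math.log(target_r) / lam


lam_hat, gamma_hat = 20 / 3100, 100.0
t90 = reliable_life(0.90, lam_hat, gamma_hat)
print(f"t_R for R = 90%: {t90:.1f} hours")
```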

The Exponential Failure Rate Function

The exponential failure rate function is:

- [math]\displaystyle{ \lambda (t)=\frac{f(t)}{R(t)}=\frac{\lambda {{e}^{-\lambda (t-\gamma )}}}{{{e}^{-\lambda (t-\gamma )}}}=\lambda =\text{constant}\,\! }[/math]

Once again, note that a constant failure rate is a characteristic only of the exponential distribution and of special cases of other distributions (such as the Weibull distribution with [math]\displaystyle{ \beta =1\,\! }[/math]). Most other distributions have failure rates that are functions of time.

Characteristics of the Exponential Distribution

The primary trait of the exponential distribution is that it is used for modeling the behavior of items with a constant failure rate. Its simple mathematical form makes it easy to manipulate. Unfortunately, this simplicity also leads to the use of this model in situations where it is not appropriate. For example, it would not be appropriate to use the exponential distribution to model the reliability of an automobile. The constant failure rate of the exponential distribution would require the assumption that the automobile would be just as likely to experience a breakdown during the first mile as it would during the one-hundred-thousandth mile. Clearly, this is not a valid assumption. However, some inexperienced practitioners of reliability engineering and life data analysis will overlook this fact, lured by the siren call of the exponential distribution's relatively simple mathematical models.

The Effect of lambda and gamma on the Exponential pdf

- The exponential pdf has no shape parameter, as it has only one shape.

- The exponential pdf is always convex and is stretched to the right as [math]\displaystyle{ \lambda \,\! }[/math] decreases in value.

- The value of the pdf is always equal to [math]\displaystyle{ \lambda \,\! }[/math] at [math]\displaystyle{ t=0\,\! }[/math] (or at [math]\displaystyle{ t=\gamma \,\! }[/math] for the 2-parameter exponential).

- The location parameter, [math]\displaystyle{ \gamma \,\! }[/math], if positive, shifts the beginning of the distribution by a distance of [math]\displaystyle{ \gamma \,\! }[/math] to the right of the origin, signifying that the chance failures start to occur only after [math]\displaystyle{ \gamma \,\! }[/math] hours of operation, and cannot occur before this time.

- The scale parameter is [math]\displaystyle{ \tfrac{1}{\lambda }=\bar{T}-\gamma =m-\gamma \,\! }[/math].

- As [math]\displaystyle{ t\to \infty \,\! }[/math], [math]\displaystyle{ f(t)\to 0\,\! }[/math].

The Effect of lambda and gamma on the Exponential Reliability Function

- The 1-parameter exponential reliability function starts at the value of 100% at [math]\displaystyle{ t=0\,\! }[/math], decreases thereafter monotonically and is convex.

- The 2-parameter exponential reliability function remains at the value of 100% from [math]\displaystyle{ t=0\,\! }[/math] up to [math]\displaystyle{ t=\gamma \,\! }[/math], and decreases monotonically thereafter and is convex.

- As [math]\displaystyle{ t\to \infty \,\! }[/math], [math]\displaystyle{ R(t\to \infty )\to 0\,\! }[/math].

- The reliability for a mission duration of [math]\displaystyle{ t=m=\tfrac{1}{\lambda }\,\! }[/math], or of one MTTF duration, is always equal to [math]\displaystyle{ {{e}^{-1}}\approx 0.3679\,\! }[/math], or 36.79%. Missions as long as one MTTF are therefore not recommended, because only 36.8% of them will be completed successfully; in other words, only 36.8% of the equipment undertaking such a mission will survive it.
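A short Python check of the 36.79% figure: because the MTTF is γ + 1/λ, evaluating R(t) = e^{-λ(t-γ)} at t = MTTF always gives e^{-1}, whatever the values of λ and γ. The function name is hypothetical and the λ and γ values in the loop are arbitrary illustrations.

```python
import math


def reliability_at_mttf(lam: float, gamma: float = 0.0) -> float:
    """R(t) evaluated at t = MTTF = gamma + 1/lambda for the 2-parameter exponential."""
    mttf = gamma + 1.0 / lam
    return math.exp(-lam * (mttf - gamma))


for lam, gamma in [(1e-4, 0.0), (0.01, 0.0), (20 / 3100, 100.0)]:
    r = reliability_at_mttf(lam, gamma)
    print(f"lambda = {lam:.6g}, gamma = {gamma:g}: R(MTTF) = {r:.4f}")  # 0.3679 each time
```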

The Effect of lambda and gamma on the Failure Rate Function

- The 1-parameter exponential failure rate function is constant and starts at [math]\displaystyle{ t=0\,\! }[/math].

- The 2-parameter exponential failure rate function is 0 from [math]\displaystyle{ t=0\,\! }[/math] up to [math]\displaystyle{ t=\gamma \,\! }[/math], and then remains at the constant value of [math]\displaystyle{ \lambda\,\! }[/math].

Exponential Distribution Examples

Grouped Data

Twenty units were reliability tested, with the following results:

Table - Life Test Data

| Number of Units in Group | Time-to-Failure (hours) |
|---|---|
| 7 | 100 |
| 5 | 200 |
| 3 | 300 |
| 2 | 400 |
| 1 | 500 |
| 2 | 600 |

1. Assuming a 2-parameter exponential distribution, estimate the parameters by hand using the MLE analysis method.

2. Repeat the above using Weibull++. (Enter the data as grouped data to duplicate the results.)

3. Show the Probability plot for the analysis results.

4. Show the Reliability vs. Time plot for the results.

5. Show the pdf plot for the results.

6. Show the Failure Rate vs. Time plot for the results.

7. Estimate the parameters using the rank regression on Y (RRY) analysis method (and using grouped ranks).

Solution

1. For the 2-parameter exponential distribution and for [math]\displaystyle{ \hat{\gamma }=100\,\! }[/math] hours (the first failure time), the partial derivative of the log-likelihood function, [math]\displaystyle{ \Lambda \,\! }[/math], with respect to [math]\displaystyle{ \lambda\,\! }[/math] becomes:

- [math]\displaystyle{ \begin{align} \frac{\partial \Lambda }{\partial \lambda }= &\underset{i=1}{\overset{6}{\mathop \sum }}\,{N_i} \left[ \frac{1}{\lambda }-\left( {{T}_{i}}-100 \right) \right]=0\\ \Rightarrow & 7[\frac{1}{\lambda }-(100-100)]+5[\frac{1}{\lambda}-(200-100)] + \ldots +2[\frac{1}{\lambda}-(600-100)]\\ = & 0\\ \Rightarrow & \frac{20}{\lambda }-3100=0\\ \Rightarrow & \hat{\lambda}=\frac{20}{3100}\approx 0.0065\text{ failures/hour} \end{align} \,\! }[/math]

2. Enter the data in a Weibull++ standard folio and calculate it as shown next.

3. On the Plot page of the folio, the exponential Probability plot will appear as shown next.

4. View the Reliability vs. Time plot.

5. View the pdf plot.

6. View the Failure Rate vs. Time plot.

Note that, as described at the beginning of this chapter, the failure rate for the exponential distribution is constant. Also note that the Failure Rate vs. Time plot does show values for times before the location parameter, [math]\displaystyle{ \gamma \,\! }[/math], at 100 hours.

7. In the case of grouped data, one must be cautious when estimating the parameters using a rank regression method, because the median rank values are determined from the total number of failures observed by time [math]\displaystyle{ {{T}_{i}}\,\! }[/math], where [math]\displaystyle{ i\,\! }[/math] indicates the group number. In this example, the total number of groups is [math]\displaystyle{ N=6\,\! }[/math] and the total number of units is [math]\displaystyle{ {{N}_{T}}=20\,\! }[/math]. Thus, the median rank value for the [math]\displaystyle{ {{i}^{th}}\,\! }[/math] group is estimated for a sample size of 20 units, using as the rank the total number of units failed up to and including the [math]\displaystyle{ {{i}^{th}}\,\! }[/math] group, [math]\displaystyle{ {{N}_{{{F}_{i}}}}\,\! }[/math]. The median rank values can be found from rank tables or estimated using ReliaSoft's Quick Statistical Reference tool.

For example, by the end of the fourth group 7 + 5 + 3 + 2 = 17 units have failed, so the median rank value of the fourth group is the [math]\displaystyle{ {{17}^{th}}\,\! }[/math] rank out of a sample size of twenty units (81.945%).

The following table is then constructed.

Given the values in the table above, calculate [math]\displaystyle{ \hat{a}\,\! }[/math] and [math]\displaystyle{ \hat{b}\,\! }[/math]:

- [math]\displaystyle{ \begin{align} & \hat{b}= & \frac{\underset{i=1}{\overset{6}{\mathop{\sum }}}\,{{t}_{i}}{{y}_{i}}-(\underset{i=1}{\overset{6}{\mathop{\sum }}}\,{{t}_{i}})(\underset{i=1}{\overset{6}{\mathop{\sum }}}\,{{y}_{i}})/6}{\underset{i=1}{\overset{6}{\mathop{\sum }}}\,t_{i}^{2}-{{(\underset{i=1}{\overset{6}{\mathop{\sum }}}\,{{t}_{i}})}^{2}}/6} \\ & & \\ & \hat{b}= & \frac{-4320.3362-(2100)(-9.6476)/6}{910,000-{{(2100)}^{2}}/6} \end{align}\,\! }[/math]

or:

- [math]\displaystyle{ \hat{b}=-0.005392\,\! }[/math]

and:

- [math]\displaystyle{ \hat{a}=\overline{y}-\hat{b}\overline{t}=\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}}}{N}-\hat{b}\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{t}_{i}}}{N}\,\! }[/math]

or:

- [math]\displaystyle{ \hat{a}=\frac{-9.6476}{6}-(-0.005392)\frac{2100}{6}=0.2793\,\! }[/math]

Therefore:

- [math]\displaystyle{ \hat{\lambda }=-\hat{b}=-(-0.005392)=0.005392\text{ failures/hour}\,\! }[/math]

and:

- [math]\displaystyle{ \hat{\gamma }=\frac{\hat{a}}{\hat{\lambda }}=\frac{0.2793}{0.005392}\,\! }[/math]

or:

- [math]\displaystyle{ \hat{\gamma }\simeq 51.8\text{ hours}\,\! }[/math]

Then:

- [math]\displaystyle{ f(T)=(0.005392){{e}^{-0.005392(T-51.8)}}\,\! }[/math]

Using Weibull++, the estimated parameters are:

- [math]\displaystyle{ \begin{align} \hat{\lambda }= & 0.0054\text{ failures/hour} \\ \hat{\gamma }= & 51.82\text{ hours} \end{align}\,\! }[/math]

The small differences from the Weibull++ values are due to rounding. In the application, the calculations and the rank values are carried to the [math]\displaystyle{ 15^{th}\,\! }[/math] decimal place.
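The hand MLE calculation in step 1 of this solution can be reproduced with a few lines of Python. The sketch assumes, as step 1 does, that γ̂ is fixed at the first failure time (100 hours); setting ∂Λ/∂λ = 0 then gives λ̂ = ΣN_i / ΣN_i(T_i - γ̂). The function name is hypothetical.

```python
def exp2_mle_grouped(counts, times):
    """MLE of lambda for complete grouped data, with gamma fixed at the first failure.

    Setting d(Lambda)/d(lambda) = sum N_i [1/lambda - (T_i - gamma)] = 0 gives
    lambda = (sum N_i) / sum N_i (T_i - gamma).
    """
    gamma = min(times)
    n_failures = sum(counts)
    exposure = sum(n * (t - gamma) for n, t in zip(counts, times))
    return n_failures / exposure, gamma


counts = [7, 5, 3, 2, 1, 2]             # units failing in each group
times = [100, 200, 300, 400, 500, 600]  # time-to-failure of each group, hours
lam_hat, gamma_hat = exp2_mle_grouped(counts, times)
print(f"gamma = {gamma_hat} hours, lambda = {lam_hat:.4f} failures/hour")
```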


MLE for Exponential Distribution

The exponential distribution is a commonly used distribution in reliability engineering. Mathematically, it is a fairly simple distribution, which often leads to its use in inappropriate situations. It is, in fact, a special case of the Weibull distribution where [math]\displaystyle{ \beta =1\,\! }[/math]. The exponential distribution is used to model the behavior of units that have a constant failure rate (that is, units that do not degrade or wear out with time).

Exponential Probability Density Function

The 2-Parameter Exponential Distribution

The 2-parameter exponential pdf is given by:

- [math]\displaystyle{ f(t)=\lambda {{e}^{-\lambda (t-\gamma )}},f(t)\ge 0,\lambda \gt 0,t\ge \gamma \,\! }[/math]

where [math]\displaystyle{ \gamma \,\! }[/math] is the location parameter. Some of the characteristics of the 2-parameter exponential distribution are discussed in Kececioglu [19]:

- The location parameter, [math]\displaystyle{ \gamma \,\! }[/math], if positive, shifts the beginning of the distribution by a distance of [math]\displaystyle{ \gamma \,\! }[/math] to the right of the origin, signifying that the chance failures start to occur only after [math]\displaystyle{ \gamma \,\! }[/math] hours of operation, and cannot occur before.

- The scale parameter is [math]\displaystyle{ \tfrac{1}{\lambda }=\bar{T}-\gamma =m-\gamma \,\! }[/math].

- The exponential pdf has no shape parameter, as it has only one shape.

- The distribution starts at [math]\displaystyle{ t=\gamma \,\! }[/math] at the level of [math]\displaystyle{ f(t=\gamma )=\lambda \,\! }[/math] and decreases thereafter exponentially and monotonically as [math]\displaystyle{ t\,\! }[/math] increases beyond [math]\displaystyle{ \gamma \,\! }[/math] and is convex.

- As [math]\displaystyle{ t\to \infty \,\! }[/math], [math]\displaystyle{ f(t)\to 0\,\! }[/math].

The 1-Parameter Exponential Distribution

The 1-parameter exponential pdf is obtained by setting [math]\displaystyle{ \gamma =0\,\! }[/math], and is given by:

- [math]\displaystyle{ \begin{align}f(t)= & \lambda {{e}^{-\lambda t}}=\frac{1}{m}{{e}^{-\tfrac{1}{m}t}}, & t\ge 0, \lambda \gt 0,m\gt 0 \end{align} \,\! }[/math]

where:

- [math]\displaystyle{ \lambda \,\! }[/math] = constant failure rate, in failures per unit of measurement (e.g., failures per hour, per cycle, etc.)

- [math]\displaystyle{ \lambda =\frac{1}{m}\,\! }[/math]

- [math]\displaystyle{ m\,\! }[/math] = mean time between failures, or to failure

- [math]\displaystyle{ t\,\! }[/math] = operating time, life, or age, in hours, cycles, miles, actuations, etc.

This distribution requires the knowledge of only one parameter, [math]\displaystyle{ \lambda \,\! }[/math], for its application. Some of the characteristics of the 1-parameter exponential distribution are discussed in Kececioglu [19]:

- The location parameter, [math]\displaystyle{ \gamma \,\! }[/math], is zero.

- The scale parameter is [math]\displaystyle{ \tfrac{1}{\lambda }=m\,\! }[/math].

- As [math]\displaystyle{ \lambda \,\! }[/math] is decreased in value, the distribution is stretched out to the right, and as [math]\displaystyle{ \lambda \,\! }[/math] is increased, the distribution is pushed toward the origin.

- This distribution has no shape parameter, as it has only one shape (the exponential); its only parameter is the failure rate, [math]\displaystyle{ \lambda \,\! }[/math].

- The distribution starts at [math]\displaystyle{ t=0\,\! }[/math] at the level of [math]\displaystyle{ f(t=0)=\lambda \,\! }[/math] and decreases thereafter exponentially and monotonically as [math]\displaystyle{ t\,\! }[/math] increases, and is convex.

- As [math]\displaystyle{ t\to \infty \,\! }[/math], [math]\displaystyle{ f(t)\to 0\,\! }[/math].

- The pdf can be thought of as a special case of the Weibull pdf with [math]\displaystyle{ \gamma =0\,\! }[/math] and [math]\displaystyle{ \beta =1\,\! }[/math].

Exponential Distribution Functions

The Mean or MTTF

The mean, [math]\displaystyle{ \overline{T},\,\! }[/math] or mean time to failure (MTTF) is given by:

- [math]\displaystyle{ \begin{align} \bar{T}= & \int_{\gamma }^{\infty }t\cdot f(t)dt \\ = & \int_{\gamma }^{\infty }t\cdot \lambda \cdot {{e}^{-\lambda (t-\gamma )}}dt \\ = & \gamma +\frac{1}{\lambda }=m \end{align}\,\! }[/math]

Note that when [math]\displaystyle{ \gamma =0\,\! }[/math], the MTTF is the inverse of the exponential distribution's constant failure rate. This is only true for the exponential distribution. Most other distributions do not have a constant failure rate. Consequently, the inverse relationship between failure rate and MTTF does not hold for these other distributions.

The Median

The median, [math]\displaystyle{ \breve{T}, \,\! }[/math] is:

- [math]\displaystyle{ \breve{T}=\gamma +\frac{\ln 2}{\lambda}\approx \gamma +\frac{0.693}{\lambda} \,\! }[/math]

The Mode

The mode, [math]\displaystyle{ \tilde{T},\,\! }[/math] is:

- [math]\displaystyle{ \tilde{T}=\gamma \,\! }[/math]

The Standard Deviation

The standard deviation, [math]\displaystyle{ {\sigma }_{T}\,\! }[/math], is:

- [math]\displaystyle{ {\sigma}_{T}=\frac{1}{\lambda }=m-\gamma \,\! }[/math]

The Exponential Reliability Function

The equation for the 2-parameter exponential cumulative distribution function, or cdf, is given by:

- [math]\displaystyle{ \begin{align} F(t)=Q(t)=1-{{e}^{-\lambda (t-\gamma )}} \end{align}\,\! }[/math]

Recalling that the reliability function of a distribution is simply one minus the cdf, the reliability function of the 2-parameter exponential distribution is given by:

- [math]\displaystyle{ R(t)=1-Q(t)=1-\int_{\gamma }^{t}f(x)dx\,\! }[/math]

- [math]\displaystyle{ R(t)=1-\int_{\gamma }^{t}\lambda {{e}^{-\lambda (x-\gamma )}}dx={{e}^{-\lambda (t-\gamma )}}\,\! }[/math]

The 1-parameter exponential reliability function is given by:

- [math]\displaystyle{ R(t)={{e}^{-\lambda t}}={{e}^{-\tfrac{t}{m}}}\,\! }[/math]

The Exponential Conditional Reliability Function

The exponential conditional reliability equation gives the reliability for a mission of [math]\displaystyle{ t\,\! }[/math] duration, having already successfully accumulated [math]\displaystyle{ T\,\! }[/math] hours of operation up to the start of this new mission. The exponential conditional reliability function is:

- [math]\displaystyle{ R(t|T)=\frac{R(T+t)}{R(T)}=\frac{{{e}^{-\lambda (T+t-\gamma )}}}{{{e}^{-\lambda (T-\gamma )}}}={{e}^{-\lambda t}}\,\! }[/math]

which says that the reliability for a mission of [math]\displaystyle{ t\,\! }[/math] duration undertaken after the component or equipment has already accumulated [math]\displaystyle{ T\,\! }[/math] hours of operation from age zero is only a function of the mission duration, and not a function of the age at the beginning of the mission. This is referred to as the memoryless property.

The Exponential Reliable Life Function

The reliable life, or the mission duration for a desired reliability goal, [math]\displaystyle{ {{t}_{R}}\,\! }[/math], for the 2-parameter exponential distribution is:

- [math]\displaystyle{ R({{t}_{R}})={{e}^{-\lambda ({{t}_{R}}-\gamma )}}\,\! }[/math]

- [math]\displaystyle{ \begin{align} \ln[R({{t}_{R}})]=-\lambda({{t}_{R}}-\gamma ) \end{align}\,\! }[/math]

or:

- [math]\displaystyle{ {{t}_{R}}=\gamma -\frac{\ln [R({{t}_{R}})]}{\lambda }\,\! }[/math]

The Exponential Failure Rate Function

The exponential failure rate function is:

- [math]\displaystyle{ \lambda (t)=\frac{f(t)}{R(t)}=\frac{\lambda {{e}^{-\lambda (t-\gamma )}}}{{{e}^{-\lambda (t-\gamma )}}}=\lambda =\text{constant}\,\! }[/math]

Once again, note that a constant failure rate is a characteristic only of the exponential distribution and of special cases of other distributions (such as the Weibull distribution with [math]\displaystyle{ \beta =1\,\! }[/math]). Most other distributions have failure rates that are functions of time.

Characteristics of the Exponential Distribution

The primary trait of the exponential distribution is that it is used for modeling the behavior of items with a constant failure rate. Its simple mathematical form makes it easy to manipulate. Unfortunately, this simplicity also leads to the use of this model in situations where it is not appropriate. For example, it would not be appropriate to use the exponential distribution to model the reliability of an automobile. The constant failure rate of the exponential distribution would require the assumption that the automobile would be just as likely to experience a breakdown during the first mile as it would during the one-hundred-thousandth mile. Clearly, this is not a valid assumption. However, some inexperienced practitioners of reliability engineering and life data analysis will overlook this fact, lured by the siren call of the exponential distribution's relatively simple mathematical models.

The Effect of lambda and gamma on the Exponential pdf

- The exponential pdf has no shape parameter, as it has only one shape.

- The exponential pdf is always convex and is stretched to the right as [math]\displaystyle{ \lambda \,\! }[/math] decreases in value.

- The value of the pdf is always equal to [math]\displaystyle{ \lambda \,\! }[/math] at [math]\displaystyle{ t=0\,\! }[/math] (or at [math]\displaystyle{ t=\gamma \,\! }[/math] for the 2-parameter exponential).

- The location parameter, [math]\displaystyle{ \gamma \,\! }[/math], if positive, shifts the beginning of the distribution by a distance of [math]\displaystyle{ \gamma \,\! }[/math] to the right of the origin, signifying that the chance failures start to occur only after [math]\displaystyle{ \gamma \,\! }[/math] hours of operation, and cannot occur before this time.

- The scale parameter is [math]\displaystyle{ \tfrac{1}{\lambda }=\bar{T}-\gamma =m-\gamma \,\! }[/math].

- As [math]\displaystyle{ t\to \infty \,\! }[/math], [math]\displaystyle{ f(t)\to 0\,\! }[/math].

The Effect of lambda and gamma on the Exponential Reliability Function

- The 1-parameter exponential reliability function starts at the value of 100% at [math]\displaystyle{ t=0\,\! }[/math], decreases thereafter monotonically and is convex.

- The 2-parameter exponential reliability function remains at the value of 100% from [math]\displaystyle{ t=0\,\! }[/math] up to [math]\displaystyle{ t=\gamma \,\! }[/math], and decreases monotonically thereafter and is convex.

- As [math]\displaystyle{ t\to \infty \,\! }[/math], [math]\displaystyle{ R(t\to \infty )\to 0\,\! }[/math].

- The reliability for a mission duration of [math]\displaystyle{ t=m=\tfrac{1}{\lambda }\,\! }[/math], or of one MTTF duration, is always equal to [math]\displaystyle{ R(m)={{e}^{-1}}\approx 0.3679\,\! }[/math] or 36.79%. This means that a mission as long as one MTTF has relatively low reliability and is not recommended, because only 36.8% of such missions will be completed successfully. In other words, of the equipment undertaking such a mission, only 36.8% will survive it.
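As a quick numerical check of the last point, the sketch below evaluates the reliability at one MTTF for a few arbitrary failure rates. It assumes the 1-parameter form (so that m = 1/λ); the function name `exp_reliability` and the rate values are illustrative only.

```python
import math


def exp_reliability(t, lam, gamma=0.0):
    """Exponential reliability: 1 before gamma, exp(-lam * (t - gamma)) from gamma on."""
    if t <= gamma:
        return 1.0
    return math.exp(-lam * (t - gamma))


# Hypothetical failure rates (failures/hour); the result should not depend on them.
for lam in (0.0001, 0.002, 0.5):
    mttf = 1.0 / lam                      # 1-parameter case: m = 1/lambda
    print(f"lambda = {lam}: R(m) = {exp_reliability(mttf, lam):.4f}")
```

For the 2-parameter form the same value is reached at t = γ + 1/λ, since only the elapsed time beyond γ enters the exponent.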

The Effect of lambda and gamma on the Failure Rate Function

- The 1-parameter exponential failure rate function is constant and starts at [math]\displaystyle{ t=0\,\! }[/math].

- The 2-parameter exponential failure rate function remains at the value of 0 from [math]\displaystyle{ t=0\,\! }[/math] up to [math]\displaystyle{ t=\gamma \,\! }[/math], and then remains constant at [math]\displaystyle{ \lambda\,\! }[/math].
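The piecewise behavior described above follows from the definition of the failure rate as h(t) = f(t)/R(t). The sketch below, with hypothetical parameter values and a hypothetical helper name `exp_failure_rate`, evaluates that ratio directly rather than hard-coding λ.

```python
import math


def exp_failure_rate(t, lam, gamma=0.0):
    """Failure rate h(t) = f(t)/R(t) of the 2-parameter exponential distribution.

    Before gamma no failures can occur (f = 0, R = 1), so h(t) = 0.
    From gamma onward, the exponential terms cancel and the ratio equals lam.
    """
    if t < gamma:
        return 0.0
    pdf = lam * math.exp(-lam * (t - gamma))
    rel = math.exp(-lam * (t - gamma))
    return pdf / rel


lam, gamma = 0.0065, 100.0   # hypothetical values (failures/hour, hours)
for t in (0.0, 50.0, 100.0, 400.0, 1000.0):
    print(t, exp_failure_rate(t, lam, gamma))
```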

Exponential Distribution Examples

Grouped Data

Twenty units were reliability tested with the following results:

Table - Life Test Data

| Number of Units in Group | Time-to-Failure (hr) |
|---|---|
| 7 | 100 |
| 5 | 200 |
| 3 | 300 |
| 2 | 400 |
| 1 | 500 |
| 2 | 600 |

1. Assuming a 2-parameter exponential distribution, estimate the parameters by hand using the MLE analysis method.

2. Repeat the above using Weibull++. (Enter the data as grouped data to duplicate the results.)

3. Show the Probability plot for the analysis results.

4. Show the Reliability vs. Time plot for the results.

5. Show the pdf plot for the results.

6. Show the Failure Rate vs. Time plot for the results.

7. Estimate the parameters using the rank regression on Y (RRY) analysis method (and using grouped ranks).

Solution

1. For the 2-parameter exponential distribution, with [math]\displaystyle{ \hat{\gamma }=100\,\! }[/math] hours (the first failure time), the partial derivative of the log-likelihood function, [math]\displaystyle{ \Lambda \,\! }[/math], with respect to [math]\displaystyle{ \lambda\,\! }[/math] becomes:

- [math]\displaystyle{ \begin{align} \frac{\partial \Lambda }{\partial \lambda }= &\underset{i=1}{\overset{6}{\mathop \sum }}\,{N_i} \left[ \frac{1}{\lambda }-\left( {{T}_{i}}-100 \right) \right]=0\\ \Rightarrow & 7[\frac{1}{\lambda }-(100-100)]+5[\frac{1}{\lambda}-(200-100)] + \ldots +2[\frac{1}{\lambda}-(600-100)]\\ = & 0\\ \Rightarrow & \hat{\lambda}=\frac{20}{3100}\approx 0.0065\text{ failures/hour} \end{align} \,\! }[/math]
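The hand calculation in step 1 reduces to a ratio of two sums: setting the derivative to zero gives λ̂ = ΣN_i / ΣN_i(T_i - γ̂). A minimal Python sketch of that arithmetic, taking γ̂ as the first failure time exactly as the text does (variable names are illustrative):

```python
# Grouped life test data: (number of units in group, time-to-failure in hours)
groups = [(7, 100), (5, 200), (3, 300), (2, 400), (1, 500), (2, 600)]

# Location parameter taken as the first failure time, as in the text.
gamma_hat = min(t for _, t in groups)

# Solving sum N_i * [1/lambda - (T_i - gamma)] = 0 for lambda:
#   lambda = sum(N_i) / sum(N_i * (T_i - gamma))
total_units = sum(n for n, _ in groups)
total_shifted_time = sum(n * (t - gamma_hat) for n, t in groups)
lambda_hat = total_units / total_shifted_time

print(gamma_hat, total_units, total_shifted_time, round(lambda_hat, 4))
```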

2. Enter the data in a Weibull++ standard folio and calculate it as shown next.

3. On the Plot page of the folio, the exponential Probability plot will appear as shown next.

4. View the Reliability vs. Time plot.

5. View the pdf plot.

6. View the Failure Rate vs. Time plot.

Note that, as described at the beginning of this chapter, the failure rate for the exponential distribution is constant. Also note that the Failure Rate vs. Time plot does show values for times before the location parameter, [math]\displaystyle{ \gamma \,\! }[/math], at 100 hours.

7. In the case of grouped data, one must be cautious when estimating the parameters using a rank regression method. This is because the median rank values are determined from the total number of failures observed by time [math]\displaystyle{ {{T}_{i}}\,\! }[/math], where [math]\displaystyle{ i\,\! }[/math] indicates the group number. In this example, the total number of groups is [math]\displaystyle{ N=6\,\! }[/math] and the total number of units is [math]\displaystyle{ {{N}_{T}}=20\,\! }[/math]. Thus, the [math]\displaystyle{ {{i}^{th}}\,\! }[/math] median rank value is estimated for a sample size of 20 units, using as the rank the total number of failed units up to and including the [math]\displaystyle{ {{i}^{th}}\,\! }[/math] group, [math]\displaystyle{ {{N}_{{{F}_{i}}}}\,\! }[/math]. The median rank values can be found from rank tables or they can be estimated using ReliaSoft's Quick Statistical Reference tool.

For example, since 7 + 5 + 3 + 2 = 17 units have failed by the end of the fourth group, the median rank value of the fourth group is the [math]\displaystyle{ {{17}^{th}}\,\! }[/math] rank out of a sample size of twenty units (or 81.945%).
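Rank tables aside, the median rank of the j-th failure out of n units is the value Z that solves Σ_{k=j}^{n} C(n,k) Z^k (1 - Z)^(n-k) = 0.5. The sketch below applies that definition by bisection to the cumulative failure counts of this example. It also forms y_i = ln(1 - MR_i); that transformation is an assumption here, chosen because it is consistent with the relations λ̂ = -b̂ and γ̂ = â/λ̂ used later in this solution. The helper name `median_rank` is hypothetical.

```python
import math


def median_rank(j, n, tol=1e-12):
    """Exact median rank: Z solving sum_{k=j}^{n} C(n,k) Z^k (1-Z)^(n-k) = 0.5.

    The left-hand side (probability that at least j of n units have failed)
    increases monotonically with Z, so bisection on [0, 1] converges.
    """
    def prob_at_least_j(z):
        return sum(math.comb(n, k) * z**k * (1 - z)**(n - k) for k in range(j, n + 1))

    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if prob_at_least_j(mid) < 0.5:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


n_total = 20
groups = [(7, 100), (5, 200), (3, 300), (2, 400), (1, 500), (2, 600)]

cumulative = 0
rows = []
for n_fail, t in groups:
    cumulative += n_fail                    # N_Fi: failures observed by time T_i
    mr = median_rank(cumulative, n_total)   # median rank for that cumulative count
    y = math.log(1.0 - mr)                  # assumed y_i = ln(1 - MR_i)
    rows.append((t, cumulative, mr, y))
    print(f"{t:4d}  {cumulative:3d}  {mr:.5f}  {y:.4f}")
```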

The following table is then constructed.

Given the values in the table above, calculate [math]\displaystyle{ \hat{a}\,\! }[/math] and [math]\displaystyle{ \hat{b}\,\! }[/math]:

- [math]\displaystyle{ \begin{align} & \hat{b}= & \frac{\underset{i=1}{\overset{6}{\mathop{\sum }}}\,{{t}_{i}}{{y}_{i}}-(\underset{i=1}{\overset{6}{\mathop{\sum }}}\,{{t}_{i}})(\underset{i=1}{\overset{6}{\mathop{\sum }}}\,{{y}_{i}})/6}{\underset{i=1}{\overset{6}{\mathop{\sum }}}\,t_{i}^{2}-{{(\underset{i=1}{\overset{6}{\mathop{\sum }}}\,{{t}_{i}})}^{2}}/6} \\ & & \\ & \hat{b}= & \frac{-4320.3362-(2100)(-9.6476)/6}{910,000-{{(2100)}^{2}}/6} \end{align}\,\! }[/math]

or:

- [math]\displaystyle{ \hat{b}=-0.005392\,\! }[/math]

and:

- [math]\displaystyle{ \hat{a}=\overline{y}-\hat{b}\overline{t}=\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}}}{N}-\hat{b}\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{t}_{i}}}{N}\,\! }[/math]

or:

- [math]\displaystyle{ \hat{a}=\frac{-9.6476}{6}-(-0.005392)\frac{2100}{6}=0.2793\,\! }[/math]

Therefore:

- [math]\displaystyle{ \hat{\lambda }=-\hat{b}=-(-0.005392)=0.005392\text{ failures/hour}\,\! }[/math]

and:

- [math]\displaystyle{ \hat{\gamma }=\frac{\hat{a}}{\hat{\lambda }}=\frac{0.2793}{0.005392}\,\! }[/math]

or:

- [math]\displaystyle{ \hat{\gamma }\simeq 51.8\text{ hours}\,\! }[/math]

Then:

- [math]\displaystyle{ f(T)=(0.005392){{e}^{-0.005392(T-51.8)}}\,\! }[/math]

Using Weibull++, the estimated parameters are:

- [math]\displaystyle{ \begin{align} \hat{\lambda }= & 0.0054\text{ failures/hour} \\ \hat{\gamma }= & 51.82\text{ hours} \end{align}\,\! }[/math]

The small difference between these values and the Weibull++ results is due to rounding. In the application, the calculations and the rank values are carried out to the [math]\displaystyle{ 15^{th}\,\! }[/math] decimal place.
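The slope and intercept arithmetic above can be reproduced from the column sums quoted in the text. This sketch keeps full precision throughout instead of rounding b̂ before computing â, which makes the rounding effect mentioned above visible. Variable names are illustrative.

```python
# Column sums taken from the worked example (N = 6 groups)
n = 6
sum_t = 2100.0
sum_t2 = 910_000.0
sum_y = -9.6476
sum_ty = -4320.3362

# Least-squares slope and intercept of y = a + b*t (rank regression on Y)
b_hat = (sum_ty - sum_t * sum_y / n) / (sum_t2 - sum_t**2 / n)
a_hat = sum_y / n - b_hat * sum_t / n

# Exponential model: ln R(t) = lambda*gamma - lambda*t, so b = -lambda and a = lambda*gamma
lambda_hat = -b_hat
gamma_hat = a_hat / lambda_hat

print(f"b = {b_hat:.6f}, a = {a_hat:.4f}, lambda = {lambda_hat:.6f}, gamma = {gamma_hat:.2f}")
```

With full precision, â comes out at about 0.2794 and γ̂ at about 51.82 hours, while the slope, and therefore λ̂, stays at about 0.005392 failures/hour.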

Using Auto Batch Run

A number of leukemia patients were treated with either the drug 6MP or a placebo, and the time in weeks until cancer symptoms returned was recorded for each patient. Analyze each treatment separately [21, p.175].

Table - Leukemia Treatment Results

| Time (weeks) | Number of Patients | Treatment | Comments |
|---|---|---|---|
| 1 | 2 | placebo | |
| 2 | 2 | placebo | |
| 3 | 1 | placebo | |
| 4 | 2 | placebo | |
| 5 | 2 | placebo | |
| 6 | 4 | 6MP | 3 patients completed |
| 7 | 1 | 6MP | |
| 8 | 4 | placebo | |
| 9 | 1 | 6MP | Not completed |
| 10 | 2 | 6MP | 1 patient completed |
| 11 | 2 | placebo | |
| 11 | 1 | 6MP | Not completed |
| 12 | 2 | placebo | |
| 13 | 1 | 6MP | |
| 15 | 1 | placebo | |
| 16 | 1 | 6MP | |
| 17 | 1 | placebo | |
| 17 | 1 | 6MP | Not completed |
| 19 | 1 | 6MP | Not completed |
| 20 | 1 | 6MP | Not completed |
| 22 | 1 | placebo | |
| 22 | 1 | 6MP | |
| 23 | 1 | placebo | |
| 23 | 1 | 6MP | |
| 25 | 1 | 6MP | Not completed |
| 32 | 2 | 6MP | Not completed |
| 34 | 1 | 6MP | Not completed |
| 35 | 1 | 6MP | Not completed |

Create a new Weibull++ standard folio that's configured for grouped times-to-failure data with suspensions. In the first column, enter the number of patients. Whenever some patients did not complete the test, enter the number of patients who completed the test separately from the number of patients who did not (e.g., if 4 patients had symptoms return after 6 weeks and only 3 of them completed the test, then enter 1 in one row and 3 in another). In the second column, enter F if the patients completed the test and S if they did not. In the third column, enter the time, and in the fourth column (Subset ID), specify whether the 6MP drug or a placebo was used.

Next, open the Batch Auto Run utility and select to separate the 6MP drug from the placebo, as shown next.

The software will create two data sheets, one for each subset ID, as shown next.

Calculate both data sheets using the 2-parameter exponential distribution and the MLE analysis method, then insert an additional plot and select to show the analysis results for both data sheets on that plot, which will appear as shown next.
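The data entry and subset split described above can be mimicked outside the software. The sketch below encodes each table row as it would be entered (count, F/S, time, subset ID), groups the rows by subset, and fits a 2-parameter exponential by MLE with suspensions. Two assumptions are made: γ̂ is set to the first failure time, following the convention of the grouped-data example, and λ̂ = (number of failures) / Σ max(t - γ̂, 0) over all units. This is a textbook-style sketch, not a description of how Weibull++ performs the calculation, so its numbers may differ from the folio's results. The helper name `exp2_mle` is hypothetical.

```python
from collections import defaultdict

# Each row as entered in the folio: (number of patients, "F" or "S", time in weeks, subset ID).
# "F" = completed the test (symptoms returned); "S" = did not complete it (suspension).
rows = [
    (2, "F", 1, "placebo"), (2, "F", 2, "placebo"), (1, "F", 3, "placebo"),
    (2, "F", 4, "placebo"), (2, "F", 5, "placebo"),
    (3, "F", 6, "6MP"), (1, "S", 6, "6MP"), (1, "F", 7, "6MP"),
    (4, "F", 8, "placebo"), (1, "S", 9, "6MP"),
    (1, "F", 10, "6MP"), (1, "S", 10, "6MP"),
    (2, "F", 11, "placebo"), (1, "S", 11, "6MP"), (2, "F", 12, "placebo"),
    (1, "F", 13, "6MP"), (1, "F", 15, "placebo"), (1, "F", 16, "6MP"),
    (1, "F", 17, "placebo"), (1, "S", 17, "6MP"),
    (1, "S", 19, "6MP"), (1, "S", 20, "6MP"),
    (1, "F", 22, "placebo"), (1, "F", 22, "6MP"),
    (1, "F", 23, "placebo"), (1, "F", 23, "6MP"),
    (1, "S", 25, "6MP"), (2, "S", 32, "6MP"), (1, "S", 34, "6MP"), (1, "S", 35, "6MP"),
]

# Separate the rows by subset ID, analogous to one data sheet per subset.
sheets = defaultdict(list)
for count, state, time, subset in rows:
    sheets[subset].append((count, state, time))


def exp2_mle(sheet):
    """2-parameter exponential MLE for grouped failures (F) and suspensions (S).

    Assumption: gamma is fixed at the first failure time. Units observed before
    gamma contribute no exposure, hence max(time - gamma, 0).
    """
    gamma = min(time for _, state, time in sheet if state == "F")
    failures = sum(count for count, state, _ in sheet if state == "F")
    exposure = sum(count * max(time - gamma, 0) for count, _, time in sheet)
    return failures / exposure, gamma


for subset, sheet in sorted(sheets.items()):
    lam, gamma = exp2_mle(sheet)
    print(f"{subset}: gamma = {gamma} weeks, lambda = {lam:.4f} per week")
```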

Likelihood Ratio Bound on Lambda

The exponential distribution is a commonly used distribution in reliability engineering. Mathematically, it is a fairly simple distribution, which often leads to its use in inappropriate situations. It is, in fact, a special case of the Weibull distribution where [math]\displaystyle{ \beta =1\,\! }[/math]. The exponential distribution is used to model the behavior of units that have a constant failure rate (or units that do not degrade with time or wear out).

Exponential Probability Density Function

The 2-Parameter Exponential Distribution

The 2-parameter exponential pdf is given by:

- [math]\displaystyle{ f(t)=\lambda {{e}^{-\lambda (t-\gamma )}},f(t)\ge 0,\lambda \gt 0,t\ge \gamma \,\! }[/math]

where [math]\displaystyle{ \gamma \,\! }[/math] is the location parameter. Some of the characteristics of the 2-parameter exponential distribution are discussed in Kececioglu [19]:

- The location parameter, [math]\displaystyle{ \gamma \,\! }[/math], if positive, shifts the beginning of the distribution by a distance of [math]\displaystyle{ \gamma \,\! }[/math] to the right of the origin, signifying that the chance failures start to occur only after [math]\displaystyle{ \gamma \,\! }[/math] hours of operation, and cannot occur before.

- The scale parameter is [math]\displaystyle{ \tfrac{1}{\lambda }=\bar{t}-\gamma =m-\gamma \,\! }[/math].

- The exponential pdf has no shape parameter, as it has only one shape.

- The distribution starts at [math]\displaystyle{ t=\gamma \,\! }[/math] at the level of [math]\displaystyle{ f(t=\gamma )=\lambda \,\! }[/math] and decreases thereafter exponentially and monotonically as [math]\displaystyle{ t\,\! }[/math] increases beyond [math]\displaystyle{ \gamma \,\! }[/math] and is convex.

- As [math]\displaystyle{ t\to \infty \,\! }[/math], [math]\displaystyle{ f(t)\to 0\,\! }[/math].
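Two of the properties above, that the pdf starts at f(γ) = λ and is zero before γ, can be checked numerically along with the requirement that the pdf encloses unit area. The sketch uses hypothetical values λ = 0.01 and γ = 50, a hypothetical helper name `exp2_pdf`, and a crude midpoint-rule integral.

```python
import math


def exp2_pdf(t, lam, gamma):
    """2-parameter exponential pdf: lam*exp(-lam*(t - gamma)) for t >= gamma, else 0."""
    if t < gamma:
        return 0.0
    return lam * math.exp(-lam * (t - gamma))


lam, gamma = 0.01, 50.0   # hypothetical parameter values

# f(gamma) equals lambda, and the pdf is zero before gamma.
print(exp2_pdf(gamma, lam, gamma), exp2_pdf(gamma - 1.0, lam, gamma))

# Midpoint-rule check that the total area under the pdf is 1.
dt = 0.01
steps = 300_000            # covers gamma .. gamma + 3000; exp(-30) tail is negligible
area = sum(exp2_pdf(gamma + (i + 0.5) * dt, lam, gamma) for i in range(steps)) * dt
print(round(area, 6))
```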

The 1-Parameter Exponential Distribution

The 1-parameter exponential pdf is obtained by setting [math]\displaystyle{ \gamma =0\,\! }[/math], and is given by:

- [math]\displaystyle{ \begin{align}f(t)= & \lambda {{e}^{-\lambda t}}=\frac{1}{m}{{e}^{-\tfrac{1}{m}t}}, & t\ge 0, \lambda \gt 0,m\gt 0 \end{align} \,\! }[/math]

where:

- [math]\displaystyle{ \lambda \,\! }[/math] = constant failure rate, in failures per unit of measurement (e.g., failures per hour, per cycle, etc.)

- [math]\displaystyle{ \lambda =\frac{1}{m}\,\! }[/math]

- [math]\displaystyle{ m\,\! }[/math] = mean time between failures, or to failure

- [math]\displaystyle{ t\,\! }[/math] = operating time, life, or age, in hours, cycles, miles, actuations, etc.

This distribution requires the knowledge of only one parameter, [math]\displaystyle{ \lambda \,\! }[/math], for its application. Some of the characteristics of the 1-parameter exponential distribution are discussed in Kececioglu [19]:

- The location parameter, [math]\displaystyle{ \gamma \,\! }[/math], is zero.

- The scale parameter is [math]\displaystyle{ \tfrac{1}{\lambda }=m\,\! }[/math].

- As [math]\displaystyle{ \lambda \,\! }[/math] is decreased in value, the distribution is stretched out to the right, and as [math]\displaystyle{ \lambda \,\! }[/math] is increased, the distribution is pushed toward the origin.

- This distribution has no shape parameter, as it has only one shape (i.e., the exponential); the only parameter it has is the failure rate, [math]\displaystyle{ \lambda \,\! }[/math].

- The distribution starts at [math]\displaystyle{ t=0\,\! }[/math] at the level of [math]\displaystyle{ f(t=0)=\lambda \,\! }[/math] and decreases thereafter exponentially and monotonically as [math]\displaystyle{ t\,\! }[/math] increases, and is convex.

- As [math]\displaystyle{ t\to \infty \,\! }[/math], [math]\displaystyle{ f(t)\to 0\,\! }[/math].

- The pdf can be thought of as a special case of the Weibull pdf with [math]\displaystyle{ \gamma =0\,\! }[/math] and [math]\displaystyle{ \beta =1\,\! }[/math].
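To illustrate the last point, the sketch below evaluates a Weibull pdf with β = 1, γ = 0 and η = 1/λ next to the 1-parameter exponential pdf. Setting η = 1/λ is the identification that makes the two forms coincide; the helper names and the value of λ are illustrative.

```python
import math


def weibull3_pdf(t, beta, eta, gamma=0.0):
    """3-parameter Weibull pdf (zero for t < gamma)."""
    if t < gamma:
        return 0.0
    z = (t - gamma) / eta
    return (beta / eta) * z ** (beta - 1) * math.exp(-z ** beta)


def exp1_pdf(t, lam):
    """1-parameter exponential pdf lam*exp(-lam*t) for t >= 0."""
    return lam * math.exp(-lam * t) if t >= 0 else 0.0


lam = 0.004   # hypothetical failure rate
for t in (0.0, 10.0, 250.0, 1000.0):
    w = weibull3_pdf(t, beta=1.0, eta=1.0 / lam, gamma=0.0)
    e = exp1_pdf(t, lam)
    print(t, w, e)
```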

Exponential Distribution Functions

The Mean or MTTF

The mean, [math]\displaystyle{ \overline{T},\,\! }[/math] or mean time to failure (MTTF) is given by:

- [math]\displaystyle{ \begin{align} \bar{T}= & \int_{\gamma }^{\infty }t\cdot f(t)dt \\ = & \int_{\gamma }^{\infty }t\cdot \lambda \cdot {{e}^{-\lambda (t-\gamma )}}dt \\ = & \gamma +\frac{1}{\lambda }=m \end{align}\,\! }[/math]

Note that when [math]\displaystyle{ \gamma =0\,\! }[/math], the MTTF is the inverse of the exponential distribution's constant failure rate. This is only true for the exponential distribution. Most other distributions do not have a constant failure rate. Consequently, the inverse relationship between failure rate and MTTF does not hold for these other distributions.

The Median

The median, [math]\displaystyle{ \breve{T}, \,\! }[/math] is:

- [math]\displaystyle{ \breve{T}=\gamma +\frac{1}{\lambda}\cdot \ln 2\approx \gamma +\frac{1}{\lambda}\cdot 0.693 \,\! }[/math]

The Mode

The mode, [math]\displaystyle{ \tilde{T},\,\! }[/math] is:

- [math]\displaystyle{ \tilde{T}=\gamma \,\! }[/math]

The Standard Deviation

The standard deviation, [math]\displaystyle{ {\sigma }_{T}\,\! }[/math], is:

- [math]\displaystyle{ {\sigma}_{T}=\frac{1}{\lambda }=m\,\! }[/math]
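The closed-form mean, median, mode and standard deviation can be cross-checked by numerical integration. In this sketch the parameter values are hypothetical and the integration is a simple midpoint rule, so agreement is only to several decimal places.

```python
import math

lam, gamma = 0.02, 10.0   # hypothetical parameter values


def pdf(t):
    return lam * math.exp(-lam * (t - gamma)) if t >= gamma else 0.0


def cdf(t):
    return 1.0 - math.exp(-lam * (t - gamma)) if t >= gamma else 0.0


# Closed-form results from the text
mean = gamma + 1.0 / lam
median = gamma + math.log(2.0) / lam      # ln 2 ~= 0.693
mode = gamma
std = 1.0 / lam

# Numerical mean and variance: midpoint rule from gamma to gamma + 2000 (exp(-40) tail)
dt, steps = 0.01, 200_000
ts = [gamma + (i + 0.5) * dt for i in range(steps)]
num_mean = sum(t * pdf(t) for t in ts) * dt
num_var = sum((t - num_mean) ** 2 * pdf(t) for t in ts) * dt

print(mean, num_mean)
print(std, math.sqrt(num_var))
print(cdf(median))                        # half the probability lies below the median
print(pdf(mode) >= pdf(mode + 1.0))       # the pdf peaks at the mode, gamma
```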

The Exponential Reliability Function

The equation for the 2-parameter exponential cumulative distribution function, or cdf, is given by:

- [math]\displaystyle{ \begin{align} F(t)=Q(t)=1-{{e}^{-\lambda (t-\gamma )}} \end{align}\,\! }[/math]

Recalling that the reliability function of a distribution is simply one minus the cdf, the reliability function of the 2-parameter exponential distribution is given by:

- [math]\displaystyle{ R(t)=1-Q(t)=1-\int_{0}^{t-\gamma }f(x)dx\,\! }[/math]

- [math]\displaystyle{ R(t)=1-\int_{0}^{t-\gamma }\lambda {{e}^{-\lambda x}}dx={{e}^{-\lambda (t-\gamma )}}\,\! }[/math]

The 1-parameter exponential reliability function is given by:

- [math]\displaystyle{ R(t)={{e}^{-\lambda t}}={{e}^{-\tfrac{t}{m}}}\,\! }[/math]
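The equivalence of the integral form and the closed form of R(t) can be confirmed numerically. The sketch below uses hypothetical parameters and hypothetical helper names; the integral is evaluated with a midpoint rule in the shifted variable x = t - γ, mirroring the equation for the 2-parameter reliability function.

```python
import math

lam, gamma = 0.005, 100.0   # hypothetical parameter values


def reliability_closed_form(t):
    """R(t) = exp(-lam*(t - gamma)) for t >= gamma; R(t) = 1 before gamma."""
    return math.exp(-lam * (t - gamma)) if t >= gamma else 1.0


def reliability_by_integration(t, steps=100_000):
    """R(t) = 1 - integral_0^{t-gamma} lam*exp(-lam*x) dx, via the midpoint rule."""
    upper = t - gamma
    if upper <= 0:
        return 1.0
    dx = upper / steps
    area = sum(lam * math.exp(-lam * (i + 0.5) * dx) for i in range(steps)) * dx
    return 1.0 - area


for t in (50.0, 100.0, 300.0, 700.0):
    print(t, reliability_closed_form(t), reliability_by_integration(t))
```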

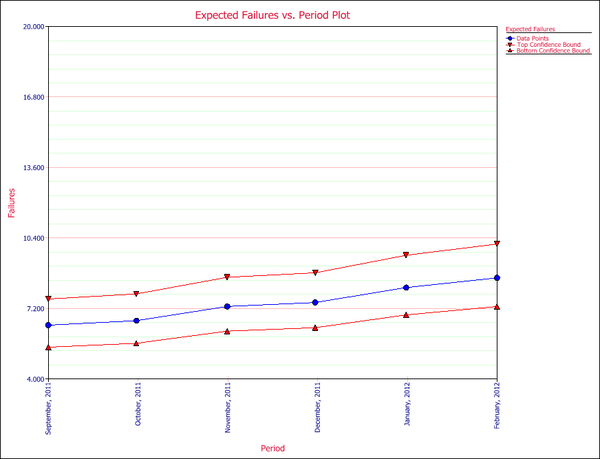

The Exponential Conditional Reliability Function