Markov Diagrams

The term “Markov Chain", invented by Russian mathematician Andrey Markov, is used across many applications to represent a stochastic process made up of a sequence of random variables representing the evolution of a system, where the future state only depends on the current state of the system as past and future states are independent. Events are “chained” or “linked” serially together though memoryless transitions from one state to another. The term memoryless is used because past events are forgotten as they are irrelevant since an event or state is only dependent on the state or event that immediately preceded it.

Concept and Methodology

The concept behind the method is that given a system of states with transitions between them the analysis will give the probability of being in a particular state at a particular time. If some of the states are considered to be unavailable states for the system, then availability/reliability analysis can be performed for the system as a whole.

Discrete Markov Chains: Limiting Probabilities

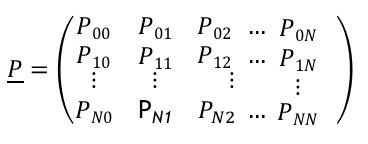

Transition Matrix

A system has finitely many states {0, 1, 2…,N} and transition from state to state is random. The matrix shows the potential inputs and outputs from one state to another to describe transitions of a Markov chain. P(X(n+1)=j│X,n=i)=Pij where 0≦Pij≦1

Markov Chain Diagram

Markov Chain State diagrams can be used to label events and transitions based upon a transition matrix.

Chapman-Kolmogorov Equation

The Chapman-Kolmogorov Equation was realized and defined independently by British mathematician Sydney Chapman and Russian mathematician Andrew Kolmogorov. It can be used to provide the transitional densities of a Markov sequence.

Let pi(n)=P(Xn=i), then

P(X(n+1)=j) = [math]\displaystyle{ \sum_{i \mathop =0}^{N}P }[/math] (Xn+1 = j|Xn = i)

so Pj(n+1) = [math]\displaystyle{ \sum_{i \mathop =0}^{N}P }[/math] (Xi(n) Pij

With vector notation [math]\displaystyle{ \underline{p} }[/math](n) = (p0(n),p1(n), ... ,pN(n)) (row vector)

[math]\displaystyle{ \underline{p} }[/math](n+1) = p(n)[math]\displaystyle{ \underline{P} }[/math] = ([math]\displaystyle{ \underline{p} }[/math](n-1)[math]\displaystyle{ \underline{P} }[/math]2 = p(0)p(n+1)

Let Pij(m) = P (Xn+m = j| Xn = i) and [math]\displaystyle{ \underline{p} }[/math](m) = Pij(m)

then [math]\displaystyle{ \underline{P} }[/math]n+m = [math]\displaystyle{ \underline{P} }[/math](n) * [math]\displaystyle{ \underline{P} }[/math](m) and [math]\displaystyle{ \underline{P} }[/math](n)= [math]\displaystyle{ \underline{P} }[/math]n

Accessible and Communicating States

State j is accessible from state i, if for some m,

Pij(m) > 0

State i communicates with state j, if j is accessible from i and also state i is accessible from j:

[math]\displaystyle{ \sum_{m \mathop =1}^{\infty}P }[/math]ij(m) and [math]\displaystyle{ \sum_{m \mathop =1}^{\infty}P }[/math]ji(m)

Markov chain is irreducible if every state i communicates with all other states and with itself.

Recurrent and Transient States

Let fi = P (starting at state i, system will return to state i) If fi = 1, then state i is recurrent , repeated infinitely often If fi < 1, then state i is transient , repeated returns have smaller and smaller probabilities.

fi = [math]\displaystyle{ \sum_{m \mathop =1}^{\infty}P }[/math]ii(m)

Markov chain is ergodic, if all states are recurrent and not periodic (there is no d>0 such that Pii(m) > 0 if and only if m is multiple of d)

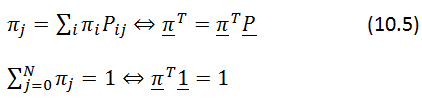

Limiting Probabilities

- Theorem:

For an irreducible, ergodic Markov chain [math]\displaystyle{ \lim_{m \to \infty}P }[/math]ij(m) = πj for all j for all j (10.4) and limit is independent of i( steady state probabilities):

0 ≦ πj≦ 1

- Method:

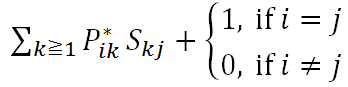

Mean Time Spent in States

Mean time spent in recurrent states = ∞

Mean time spent in transient states :

Sij = Starting at state i , expected number of time periods that state is j

Sij =

where P * contains rows and columns of transient states of matrix ▁P: S = I + P* S [math]\displaystyle{ \underline{S} }[/math] = (I-P *)-1

Continuous Markov Chains: Applications to Non-Repairable Systems

- Non-repairable component with failure rate λ

- P0(t) = P ( at time t component works)

- P1(t) = P ( at time t component is broken)

P0 (t+ ∆ t) = (1- λ ∆ t) P0 (t) +0 P1 (t) Does not fail during ∆ t times

P1(t+ ∆ t) = λ ∆ t P0 (t) + 1 P1 (t) since P (Fails in ∆ Time) =1- e- λ∆t ≈ 1- (1- [math]\displaystyle{ \tfrac{\lambda\Delta(t)}{1!}+\tfrac{(\lambda^2(\Delta(t))^2)}{2!} }[/math] - …) ≈ λ∆t if ∆t is small

Method

The method employed to solve a continuous Markov Chain problem will be a modified RK45 Runga-Kutta-Fehlberg, which is an adaptive step size Runga-Kutta method.

User Inputs

The user must provide initial probabilities for each state (must add up to exactly 1.0), and a transition probability between each state. If a transition probability is not given, it should be assumed to be zero.

Symbol Definitions

- αj,0 is the initial probability of being in state j (given by the user).

- ε is the user defined tolerance (accuracy). Default should be 1e-5 and can only get smaller.

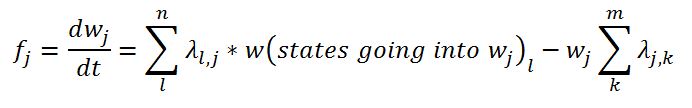

- λl,j is the transitional failure rate into state wj from state wl

- wl is the probability of being in the state associated with the λl,j’s

- λj,k is the transitional failure rate leaving state wj to state wk

- fj is the change in state probability function (for a given state wj):

fj is not a function of time as only constant failure rates will be allowed in initial version. This means that the various k’s calculated during the RK45 method are only functions of all the w’s and the constant failure rates, λ’s.

Here's the formula for the Runge-Kutta-Fehlberg method (RK45).

w0 = α

k1 = hf(ti, wi)

k2 = hf(ti+[math]\displaystyle{ \tfrac{h}{4} }[/math], wi+[math]\displaystyle{ \tfrac{k_1}{4} }[/math])

k3 = hf(ti+[math]\displaystyle{ \tfrac{3h}{8} }[/math], wi+[math]\displaystyle{ \tfrac{3}{32} }[/math]k1+[math]\displaystyle{ \tfrac{9}{32} }[/math]k2)

k4 = hf(ti+[math]\displaystyle{ \tfrac{12h}{13} }[/math], wi+[math]\displaystyle{ \tfrac{1932}{2197} }[/math]k1-[math]\displaystyle{ \tfrac{7200}{2197} }[/math]k2+[math]\displaystyle{ \tfrac{7296}{2197} }[/math]k3)

k5 = hf(ti+h wi+[math]\displaystyle{ \tfrac{439}{216} }[/math]k1-8k2+[math]\displaystyle{ \tfrac{3680}{513} }[/math]k3-[math]\displaystyle{ \tfrac{845}{4104} }[/math]k4)

k6 = hf(ti+[math]\displaystyle{ \tfrac{h}{2} }[/math] wi+[math]\displaystyle{ \tfrac{8}{27} }[/math]k1-2k2+[math]\displaystyle{ \tfrac{3544}{2565} }[/math]k3-[math]\displaystyle{ \tfrac{1859}{4104} }[/math]k4-[math]\displaystyle{ \tfrac{11}{40} }[/math]k5)

wi+1 = wi+[math]\displaystyle{ \tfrac{25}{216} }[/math]k1+[math]\displaystyle{ \tfrac{1408}{2565} }[/math]k3-[math]\displaystyle{ \tfrac{2197}{4104} }[/math]k4-[math]\displaystyle{ \tfrac{1}{5} }[/math]k5)

w'i+1 = wi+[math]\displaystyle{ \tfrac{16}{135} }[/math]k1+[math]\displaystyle{ \tfrac{6656}{12825} }[/math]k1+[math]\displaystyle{ \tfrac{28561}{56430} }[/math]k4-[math]\displaystyle{ \tfrac{9}{50} }[/math]k5-[math]\displaystyle{ \tfrac{2}{55} }[/math]k6)

R = [math]\displaystyle{ \tfrac{1}{h}| }[/math]w'i+1-wi+1|

δ = 0.84 * [math]\displaystyle{ \tfrac{\varepsilon}{R}^\tfrac{1}{4} }[/math]

if R≤ε keep w as the current step solution and move to the next step with step size δh

if R>ε recalculate the current step with step size δh The above method is for each individual state, and not for the system as a whole. The w is the equivalent of the probability of being in a particular state, where the subscript i represents the time based variation. This still has to be done for all the states in the system, which later on is represented by the subscript j (for each state).

Detailed Methodology

Generate an initial step size h from the available failure rates (1% of the smallest MTTF)

Use the RK45 method on all states simultaneously using the given h. (this means that each state must have its k1 value calculated/used together, then k2, then k3, etc).

If all calculations are within tolerance, keep results (RK4, so the w without the hat) and increase h to the smallest of the increases generated by the method. If some of the calculations are not within tolerance, decrease the step size to the smallest of the decreases generated by the method and recalculate with the new h. h should not be increased to more than double, so s should have a stipulation on it that forbids from that occurring. Be aware that s may become infinite if the difference between the RK4 and the RK5 is zero. This should be addressed as well with a catch of some sort to make s = 2 in that case. (So basically if s is calculated to be greater than 2 for any state, make it equal to 2 for that state).

Repeat steps 2 & 3 as necessary.

If there are multiple phases, then 1-4 needs to be done for each phase where the initial probability of being in a state is = the final value from the previous phase.

This methodology will provide the ability to give availability and unavailability metrics for the system, as well as point probabilities of being in a certain state. For the reliability metrics, the methodology differs in that each unavailable state is considered as a “sink”, or in other words all transitions from unavailable to available states are to be ignored. This could be calculated simultaneously with the availability using the same step sizes as generated there.

The two results that need to be stored are the time and the corresponding probability of being in the state for each state.