Repairable Systems Analysis Through Simulation: Difference between revisions

Lisa Hacker (talk | contribs) No edit summary |

|||

| (185 intermediate revisions by 9 users not shown) | |||

| Line 1: | Line 1: | ||

{{Template:bsbook|7}} | {{Template:bsbook|7}} | ||

{{TU}} | |||

Having introduced some of the basic theory and terminology for repairable systems in [[Introduction to Repairable Systems]], we will now examine the steps involved in the analysis of such complex systems. We will begin by examining system behavior through a sequence of discrete deterministic events and expand the analysis using discrete event simulation. | Having introduced some of the basic theory and terminology for repairable systems in [[Introduction to Repairable Systems]], we will now examine the steps involved in the analysis of such complex systems. We will begin by examining system behavior through a sequence of discrete deterministic events and expand the analysis using discrete event simulation. | ||

=Simple Repairs= | =Simple Repairs= | ||

==Deterministic View, Simple Series== | ==Deterministic View, Simple Series== | ||

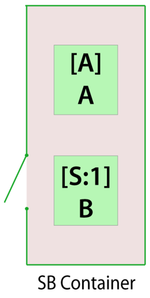

To first understand how component failures and simple repairs affect the system and to visualize the steps involved, let's begin with a very simple deterministic example with two components, | To first understand how component failures and simple repairs affect the system and to visualize the steps involved, let's begin with a very simple deterministic example with two components, <math>A\,\!</math> and <math>B\,\!</math>, in series. | ||

[[Image:i8.1.png|center| | [[Image:i8.1.png|center|200px|link=]] | ||

Component | Component <math>A\,\!</math> fails every 100 hours and component <math>B\,\!</math> fails every 120 hours. Both require 10 hours to get repaired. Furthermore, assume that the surviving component stops operating when the system fails (thus not aging). | ||

'''NOTE''': When a failure occurs in certain systems, some or all of the system's components | '''NOTE''': When a failure occurs in certain systems, some or all of the system's components | ||

may or may not continue to accumulate operating time while the system is down. For example, | may or may not continue to accumulate operating time while the system is down. For example, | ||

| Line 19: | Line 21: | ||

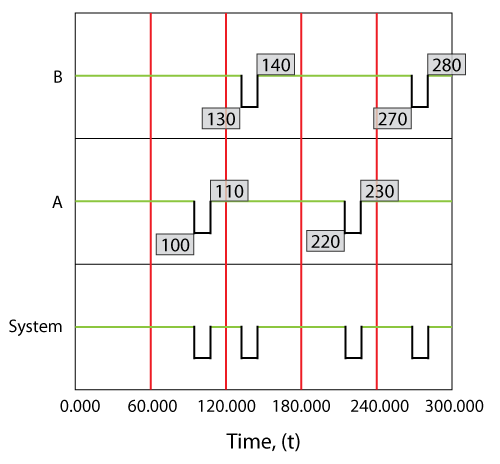

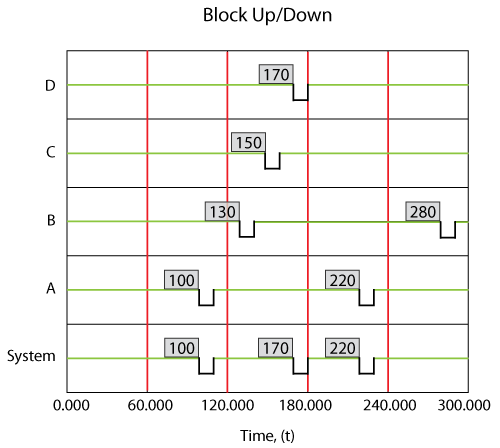

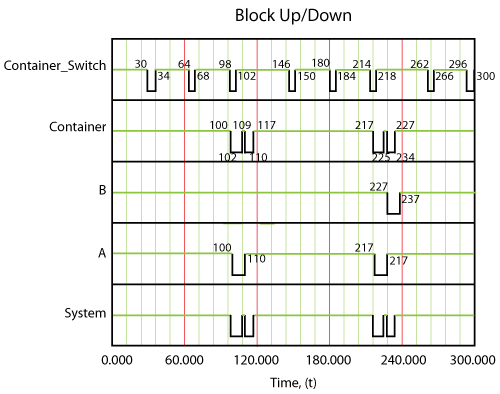

The system behavior during an operation from 0 to 300 hours would be as shown in the figure below. | The system behavior during an operation from 0 to 300 hours would be as shown in the figure below. | ||

[[Image:BS8.1.png|center|500px|Overview of system and components for a simple series system with two components. Component A fails every 100 hours and component B fails every 120 hours. Both require 10 hours to get repaired and do not age(operate through failure) when the system is in a failed state.|link=]] | |||

Specifically, component | Specifically, component <math>A\,\!</math> would fail at 100 hours, causing the system to fail. After 10 hours, component <math>A\,\!</math> would be restored and so would the system. The next event would be the failure of component <math>B\,\!</math>. We know that component <math>B\,\!</math> fails every 120 hours (or after an age of 120 hours). Since a component does not age while the system is down, component <math>B\,\!</math> would have reached an age of 120 when the clock reaches 130 hours. Thus, component <math>B\,\!</math> would fail at 130 hours and be repaired by 140 and so forth. Overall in this scenario, the system would be failed for a total of 40 hours due to four downing events (two due to <math>A\,\!</math> and two due to <math>B\,\!</math> ). The overall system availability (average or mean availability) would be <math>260/300=0.86667\,\!</math>. Point availability is the availability at a specific point time. In this deterministic case, the point availability would always be equal to 1 if the system is up at that time and equal to zero if the system is down at that time. | ||

====Operating Through System Failure==== | ====Operating Through System Failure==== | ||

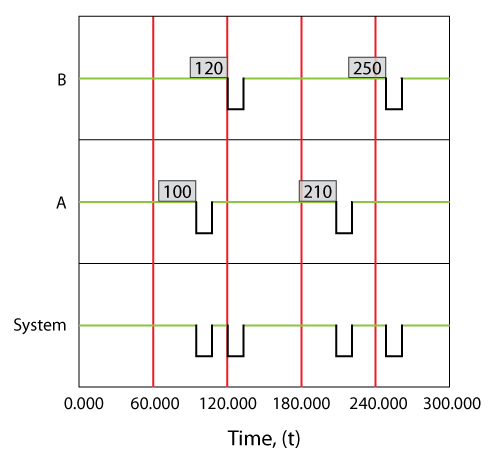

In the prior section we made the assumption that components do not age when the system is down. This assumption applies to most systems. However, under special circumstances, a unit may age even while the system is down. In such cases, the operating profile will be different from the one presented in the prior section. The figure below illustrates the case where the components operate continuously, regardless of the system status. | In the prior section we made the assumption that components do not age when the system is down. This assumption applies to most systems. However, under special circumstances, a unit may age even while the system is down. In such cases, the operating profile will be different from the one presented in the prior section. The figure below illustrates the case where the components operate continuously, regardless of the system status. | ||

[[Image:BS8.2.png|center| | [[Image:BS8.2.png|center|500px|Overview of up and down states for a simple series system with two components. Component ''A'' failes every 100 hours and component ''B'' fails every 120 hours. Both require 10 hours to get repaired and age when the system is in a failed state(operate through failure).|link=]] | ||

====Effects of Operating Through Failure==== | ====Effects of Operating Through Failure==== | ||

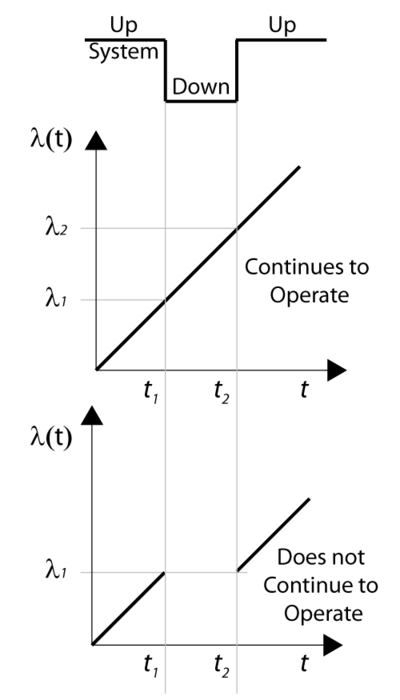

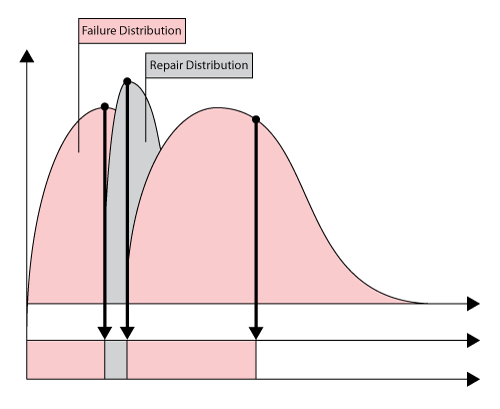

Consider a component with an increasing failure rate, as shown in the figure below. In the case that the component continues to operate through system failure, then when the system fails at | Consider a component with an increasing failure rate, as shown in the figure below. In the case that the component continues to operate through system failure, then when the system fails at <math>{{t}_{1}}\,\!</math> the surviving component's failure rate will be <math>{{\lambda }_{1}}\,\!</math>, as illustrated in figure below. When the system is restored at <math>{{t}_{2}}\,\!</math>, the component would have aged by <math>{{t}_{2}}-{{t}_{1}}\,\!</math> and its failure rate would now be <math>{{\lambda }_{2}}\,\!</math>. | ||

In the case of a component that does not operate through failure, then the surviving component would be at the same failure rate, | In the case of a component that does not operate through failure, then the surviving component would be at the same failure rate, <math>{{\lambda }_{1}},\,\!</math> when the system resumes operation. | ||

[[Image:BS8.3.png|center|400px|Illustration of a component with a linearly increasing failure rate and the effect of operation through system failure.|link=]] | |||

[[Image:BS8.3.png|center| | |||

==Deterministic View, Simple Parallel== | ==Deterministic View, Simple Parallel== | ||

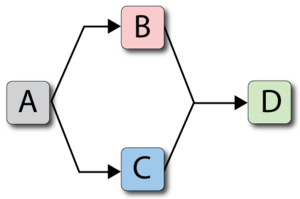

Consider the following system where | Consider the following system where <math>A\,\!</math> fails every 100, <math>B\,\!</math> every 120, <math>C\,\!</math> every 140 and <math>D\,\!</math> every 160 time units. Each takes 10 time units to restore. Furthermore, assume that components do not age when the system is down. | ||

[[Image:i8.2.png|center| | [[Image:i8.2.png|center|300px|link=]] | ||

A deterministic system view is shown in the figure below. The sequence of events is as follows:<br> | A deterministic system view is shown in the figure below. The sequence of events is as follows:<br> | ||

#At 100, | #At 100, <math>A\,\!</math> fails and is repaired by 110. The system is failed. | ||

#At 130, | #At 130, <math>B\,\!</math> fails and is repaired by 140. The system continues to operate. | ||

#At 150, | #At 150, <math>C\,\!</math> fails and is repaired by 160. The system continues to operate. | ||

#At 170, | #At 170, <math>D\,\!</math> fails and is repaired by 180. The system is failed. | ||

#At 220, | #At 220, <math>A\,\!</math> fails and is repaired by 230. The system is failed. | ||

#At 280, | #At 280, <math>B\,\!</math> fails and is repaired by 290. The system continues to operate. | ||

#End at 300. | #End at 300. | ||

[[Image:BS8.4.png|center|500px|Overview of simple redundant system with four components.|link=]] | |||

[[Image:BS8.4.png|center| | |||

====Additional Notes==== | ====Additional Notes==== | ||

It should be noted that we are dealing with these events deterministically in order to better illustrate the methodology. When dealing with deterministic events, it is possible to create a sequence of events that one would not expect to encounter probabilistically. One such example consists of two units in series that do not operate through failure but both fail at exactly 100, which is highly unlikely in a real-world scenario. In this case, the assumption is that one of the events must occur at least an infinitesimal amount of time ( <math>dt)</math> | It should be noted that we are dealing with these events deterministically in order to better illustrate the methodology. When dealing with deterministic events, it is possible to create a sequence of events that one would not expect to encounter probabilistically. One such example consists of two units in series that do not operate through failure but both fail at exactly 100, which is highly unlikely in a real-world scenario. In this case, the assumption is that one of the events must occur at least an infinitesimal amount of time ( <math>dt)\,\!</math> before the other. Probabilistically, this event is extremely rare, since both randomly generated times would have to be exactly equal to each other, to 15 decimal points. In the rare event that this happens, BlockSim would pick the unit with the lowest ID value as the first failure. BlockSim assigns a unique numerical ID when each component is created. These can be viewed by selecting the '''Show Block ID''' option in the Diagram Options window. | ||

==Deterministic Views of More Complex Systems== | ==Deterministic Views of More Complex Systems== | ||

| Line 77: | Line 68: | ||

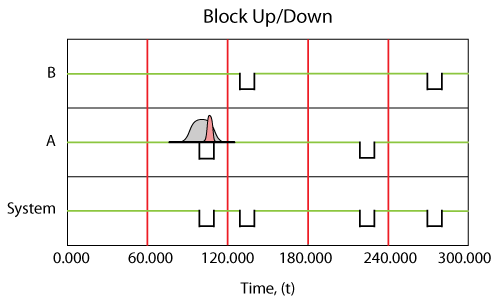

In a probabilistic case, the failures and repairs do not happen at a fixed time and for a fixed duration, but rather occur randomly and based on an underlying distribution, as shown in the following figures. | In a probabilistic case, the failures and repairs do not happen at a fixed time and for a fixed duration, but rather occur randomly and based on an underlying distribution, as shown in the following figures. | ||

[[Image:8.5.png|center|600px| A single component with a probabilistic failure time and repair duration.|link=]] | |||

[[Image:8.5.png|center| | [[Image:BS8.6.png|center|500px|A system up/down plot illustrating a probabilistic failure time and repair duration for component B.|link=]] | ||

[[Image:BS8.6.png|center| | |||

We use discrete event simulation in order to analyze (understand) the system behavior. Discrete event simulation looks at each system/component event very similarly to the way we looked at these events in the deterministic example. However, instead of using deterministic (fixed) times for each event occurrence or duration, random times are used. These random times are obtained from the underlying distribution for each event. As an example, consider an event following a 2-parameter Weibull distribution. The | We use discrete event simulation in order to analyze (understand) the system behavior. Discrete event simulation looks at each system/component event very similarly to the way we looked at these events in the deterministic example. However, instead of using deterministic (fixed) times for each event occurrence or duration, random times are used. These random times are obtained from the underlying distribution for each event. As an example, consider an event following a 2-parameter Weibull distribution. The ''cdf'' of the 2-parameter Weibull distribution is given by: | ||

::<math>F(T)=1-{{e}^{-{{\left( \tfrac{T}{\eta } \right)}^{\beta }}}}</math | ::<math>F(T)=1-{{e}^{-{{\left( \tfrac{T}{\eta } \right)}^{\beta }}}}\,\!</math> | ||

The Weibull reliability function is given by: | The Weibull reliability function is given by: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

R(T)= & 1-F(t) \\ | R(T)= & 1-F(t) \\ | ||

= & {{e}^{-{{\left( \tfrac{T}{\eta } \right)}^{\beta }}}} | = & {{e}^{-{{\left( \tfrac{T}{\eta } \right)}^{\beta }}}} | ||

\end{align}</math> | \end{align}\,\!</math> | ||

Then, to generate a random time from a Weibull distribution with a given <math>\eta \,\!</math> and <math>\beta \,\!</math>, a uniform random number from 0 to 1, <math>{{U}_{R}}[0,1]\,\!</math>, is first obtained. The random time from a Weibull distribution is then obtained from: | |||

::<math>{{T}_{R}}=\eta \cdot {{\left\{ -\ln \left[ {{U}_{R}}[0,1] \right] \right\}}^{\tfrac{1}{\beta }}}\,\!</math> | |||

To obtain a conditional time, the Weibull conditional reliability function is given by: | To obtain a conditional time, the Weibull conditional reliability function is given by: | ||

::<math>R(t|T)=\frac{R(T+t)}{R(T)}=\frac{{{e}^{-{{\left( \tfrac{T+t}{\eta } \right)}^{\beta }}}}}{{{e}^{-{{\left( \tfrac{T}{\eta } \right)}^{\beta }}}}}\,\!</math> | |||

Or: | Or: | ||

::<math>R(t|T)={{e}^{-\left[ {{\left( \tfrac{T+t}{\eta } \right)}^{\beta }}-{{\left( \tfrac{T}{\eta } \right)}^{\beta }} \right]}}\,\!</math> | |||

The random time would be the solution for <math>t\,\!</math> for <math>R(t|T)={{U}_{R}}[0,1]\,\!</math>. | |||

The random time would be the solution for | |||

To illustrate the sequence of events, assume a single block with a failure and a repair distribution. The first event, | To illustrate the sequence of events, assume a single block with a failure and a repair distribution. The first event, <math>{{E}_{{{F}_{1}}}}\,\!</math>, would be the failure of the component. Its first time-to-failure would be a random number drawn from its failure distribution, <math>{{T}_{{{F}_{1}}}}\,\!</math>. Thus, the first failure event, <math>{{E}_{{{F}_{1}}}}\,\!</math>, would be at <math>{{T}_{{{F}_{1}}}}\,\!</math>. Once failed, the next event would be the repair of the component, <math>{{E}_{{{R}_{1}}}}\,\!</math>. The time to repair the component would now be drawn from its repair distribution, <math>{{T}_{{{R}_{1}}}}\,\!</math>. The component would be restored by time <math>{{T}_{{{F}_{1}}}}+{{T}_{{{R}_{1}}}}\,\!</math>. The next event would now be the second failure of the component after the repair, <math>{{E}_{{{F}_{2}}}}\,\!</math>. This event would occur after a component operating time of <math>{{T}_{{{F}_{2}}}}\,\!</math> after the item is restored (again drawn from the failure distribution), or at <math>{{T}_{{{F}_{1}}}}+{{T}_{{{R}_{1}}}}+{{T}_{{{F}_{2}}}}\,\!</math>. This process is repeated until the end time. It is important to note that each run will yield a different sequence of events due to the probabilistic nature of the times. To arrive at the desired result, this process is repeated many times and the results from each run (simulation) are recorded. In other words, if we were to repeat this 1,000 times, we would obtain 1,000 different values for <math>{{E}_{{{F}_{1}}}}\,\!</math>, or <math>\left[ {{E}_{{{F}_{{{1}_{1}}}}}},{{E}_{{{F}_{{{1}_{2}}}}}},...,{{E}_{{{F}_{{{1}_{1,000}}}}}} \right]\,\!</math>. | ||

The average of these values, | The average of these values, <math>\left( \tfrac{1}{1000}\underset{i=1}{\overset{1,000}{\mathop{\sum }}}\,{{E}_{{{F}_{{{1}_{i}}}}}} \right)\,\!</math>, would then be the average time to the first event, <math>{{E}_{{{F}_{1}}}}\,\!</math>, or the mean time to first failure (MTTFF) for the component. Obviously, if the component were to be 100% renewed after each repair, then this value would also be the same for the second failure, etc. | ||

=General Simulation Results= | =General Simulation Results= | ||

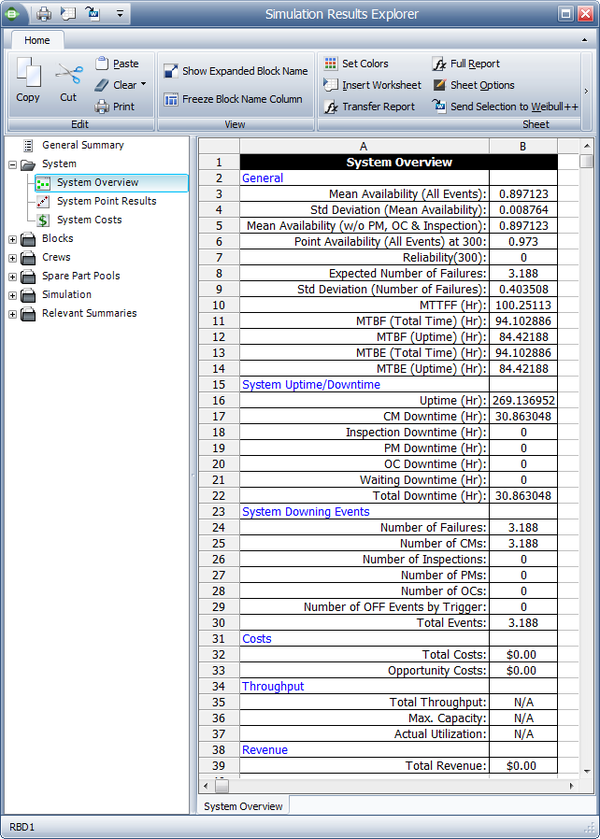

To further illustrate this, assume that components A and B in the prior example had normal failure and repair distributions with their means equal to the deterministic values used in the prior example and standard deviations of 10 and 1 respectively. That is, | To further illustrate this, assume that components A and B in the prior example had normal failure and repair distributions with their means equal to the deterministic values used in the prior example and standard deviations of 10 and 1 respectively. That is, <math>{{F}_{A}}\tilde{\ }N(100,10),\,\!</math> <math>{{F}_{B}}\tilde{\ }N(120,10),\,\!</math> <math>{{R}_{A}}={{R}_{B}}\tilde{\ }N(10,1)\,\!</math>. The settings for components C and D are not changed. Obviously, given the probabilistic nature of the example, the times to each event will vary. If one were to repeat this <math>X\,\!</math> number of times, one would arrive at the results of interest for the system and its components. Some of the results for this system and this example, over 1,000 simulations, are provided in the figure below and explained in the next sections. | ||

[[Image:r2.png|center| | [[Image:r2.png|center|600px|Summary of system results for 1,000 simulations.|link=]] | ||

The simulation settings are shown in figure below. | The simulation settings are shown in the figure below. | ||

[[Image:8.7.gif|center| | [[Image:8.7.gif|center|600px|BlockSim simulation window.|link=]] | ||

===General=== | ===General=== | ||

====Mean Availability (All Events), | ====Mean Availability (All Events), <math>{{\overline{A}}_{ALL}}\,\!</math>==== | ||

This is the mean availability due to all downing events, which can be thought of as the operational availability. It is the ratio of the system uptime divided by the total simulation time (total time). For this example: | This is the mean availability due to all downing events, which can be thought of as the operational availability. It is the ratio of the system uptime divided by the total simulation time (total time). For this example: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 139: | Line 115: | ||

= & \frac{269.137}{300} \\ | = & \frac{269.137}{300} \\ | ||

= & 0.8971 | = & 0.8971 | ||

\end{align}</math> | \end{align}\,\!</math> | ||

====Std Deviation (Mean Availability)==== | ====Std Deviation (Mean Availability)==== | ||

This is the standard deviation of the mean availability of all downing events for the system during the simulation. | This is the standard deviation of the mean availability of all downing events for the system during the simulation. | ||

====Mean Availability (w/o PM, OC & Inspection), <math>{{\overline{A}}_{CM}}\,\!</math>==== | |||

====Mean Availability (w/o PM, OC & Inspection), | |||

This is the mean availability due to failure events only and it is 0.971 for this example. Note that for this case, the mean availability without preventive maintenance, on condition maintenance and inspection is identical to the mean availability for all events. This is because no preventive maintenance actions or inspections were defined for this system. We will discuss the inclusion of these actions in later sections. | This is the mean availability due to failure events only and it is 0.971 for this example. Note that for this case, the mean availability without preventive maintenance, on condition maintenance and inspection is identical to the mean availability for all events. This is because no preventive maintenance actions or inspections were defined for this system. We will discuss the inclusion of these actions in later sections. | ||

Downtimes caused by PM and inspections are not included. However, if the PM or inspection action results in the discovery of a failure, then these times are included. As an example, consider a component that has failed but its failure is not discovered until the component is inspected. Then the downtime from the time failed to the time restored after the inspection is counted as failure downtime, since the original event that caused this was the component's failure. | Downtimes caused by PM and inspections are not included. However, if the PM or inspection action results in the discovery of a failure, then these times are included. As an example, consider a component that has failed but its failure is not discovered until the component is inspected. Then the downtime from the time failed to the time restored after the inspection is counted as failure downtime, since the original event that caused this was the component's failure. | ||

====Point Availability (All Events), | ====Point Availability (All Events), <math>A\left( t \right)\,\!</math>==== | ||

This is the probability that the system is up at time | This is the probability that the system is up at time <math>t\,\!</math>. As an example, to obtain this value at <math>t\,\!</math> = 300, a special counter would need to be used during the simulation. This counter is increased by one every time the system is up at 300 hours. Thus, the point availability at 300 would be the times the system was up at 300 divided by the number of simulations. For this example, this is 0.930, or 930 times out of the 1000 simulations the system was up at 300 hours. | ||

====Reliability (Fail Events), <math>R(t)</math>==== | ====Reliability (Fail Events), <math>R(t)\,\!</math>==== | ||

This is the probability that the system has not failed by time | This is the probability that the system has not failed by time <math>t\,\!</math>. This is similar to point availability with the major exception that it only looks at the probability that the system did not have a single failure. Other (non-failure) downing events are ignored. During the simulation, a special counter again must be used. This counter is increased by one (once in each simulation) if the system has had at least one failure up to 300 hours. Thus, the reliability at 300 would be the number of times the system did not fail up to 300 divided by the number of simulations. For this example, this is 0 because the system failed prior to 300 hours 1000 times out of the 1000 simulations. | ||

It is very important to note that this value is not always the same as the reliability computed using the analytical methods, depending on the redundancy present. The reason that it may differ is best explained by the following scenario: | It is very important to note that this value is not always the same as the reliability computed using the analytical methods, depending on the redundancy present. The reason that it may differ is best explained by the following scenario: | ||

Assume two units in parallel. The analytical system reliability, which does not account for repairs, is the probability that both units fail. In this case, when one unit goes down, it does not get repaired and the system fails after the second unit fails. In the case of repairs, however, it is possible for one of the two units to fail and get repaired before the second unit fails. Thus, when the second unit fails, the system will still be up due to the fact that the first unit was repaired. | Assume two units in parallel. The analytical system reliability, which does not account for repairs, is the probability that both units fail. In this case, when one unit goes down, it does not get repaired and the system fails after the second unit fails. In the case of repairs, however, it is possible for one of the two units to fail and get repaired before the second unit fails. Thus, when the second unit fails, the system will still be up due to the fact that the first unit was repaired. | ||

====Expected Number of Failures, <math>{{N}_{F}}\,\!</math>==== | |||

====Expected Number of Failures, | |||

This is the average number of system failures. The system failures (not downing events) for all simulations are counted and then averaged. For this case, this is 3.188, which implies that a total of 3,188 system failure events occurred over 1000 simulations. Thus, the expected number of system failures for one run is 3.188. This number includes all failures, even those that may have a duration of zero. | This is the average number of system failures. The system failures (not downing events) for all simulations are counted and then averaged. For this case, this is 3.188, which implies that a total of 3,188 system failure events occurred over 1000 simulations. Thus, the expected number of system failures for one run is 3.188. This number includes all failures, even those that may have a duration of zero. | ||

====Std Deviation (Number of Failures)==== | ====Std Deviation (Number of Failures)==== | ||

| Line 174: | Line 144: | ||

====MTTFF==== | ====MTTFF==== | ||

MTTFF is the mean time to first failure for the system. This is computed by keeping track of the time at which the first system failure occurred for each simulation. MTTFF is then the average of these times. This may or may not be identical to the MTTF obtained in the analytical solution for the same reasons as those discussed in the Point Reliability section. For this case, this is 100.2511. This is fairly obvious for this case since the mean of one of the components in series was 100 hours. | MTTFF is the mean time to first failure for the system. This is computed by keeping track of the time at which the first system failure occurred for each simulation. MTTFF is then the average of these times. This may or may not be identical to the MTTF obtained in the analytical solution for the same reasons as those discussed in the Point Reliability section. For this case, this is 100.2511. This is fairly obvious for this case since the mean of one of the components in series was 100 hours. | ||

It is important to note that for each simulation run, if a first failure time is observed, then this is recorded as the system time to first failure. If no failure is observed in the system, then the simulation end time is used as a right censored (suspended) data point. MTTFF is then computed using the total operating time until the first failure divided by the number of observed failures (constant failure rate assumption). Furthermore, and if the simulation end time is much less than the time to first failure for the system, it is also possible that all data points are right censored (i.e., no system failures were observed). In this case, the MTTFF is again computed using a constant failure rate assumption, or: | It is important to note that for each simulation run, if a first failure time is observed, then this is recorded as the system time to first failure. If no failure is observed in the system, then the simulation end time is used as a right censored (suspended) data point. MTTFF is then computed using the total operating time until the first failure divided by the number of observed failures (constant failure rate assumption). Furthermore, and if the simulation end time is much less than the time to first failure for the system, it is also possible that all data points are right censored (i.e., no system failures were observed). In this case, the MTTFF is again computed using a constant failure rate assumption, or: | ||

::<math>MTTFF=\frac{2\cdot ({{T}_{S}})\cdot N}{\chi _{0.50;2}^{2}}</math> | ::<math>MTTFF=\frac{2\cdot ({{T}_{S}})\cdot N}{\chi _{0.50;2}^{2}}\,\!</math> | ||

where <math>{{T}_{S}}\,\!</math> is the simulation end time and <math>N\,\!</math> is the number of simulations. One should be aware that this formulation may yield unrealistic (or erroneous) results if the system does not have a constant failure rate. If you are trying to obtain an accurate (realistic) estimate of this value, then your simulation end time should be set to a value that is well beyond the MTTF of the system (as computed analytically). As a general rule, the simulation end time should be at least three times larger than the MTTF of the system. | |||

====MTBF (Total Time)==== | |||

This is the mean time between failures for the system based on the total simulation time and the expected number of system failures. For this example: | |||

::<math>\begin{align} | |||

MTBF (Total Time)= & \frac{TotalTime}{{N}_{F}} \\ | |||

= & \frac{300}{3.188} \\ | |||

= & 94.102886 | |||

\end{align}\,\!</math> | |||

====MTBF (Uptime)==== | |||

This is the mean time between failures for the system, considering only the time that the system was up. This is calculated by dividing system uptime by the expected number of system failures. You can also think of this as the mean uptime. For this example: | |||

::<math>\begin{align} | |||

MTBF (Uptime)= & \frac{Uptime}{{N}_{F}} \\ | |||

= & \frac{269.136952}{3.188} \\ | |||

= & 84.42188 | |||

\end{align}\,\!</math> | |||

====MTBE (Total Time)==== | |||

< | This is the mean time between all downing events for the system, based on the total simulation time and including all system downing events. This is calculated by dividing the simulation run time by the number of downing events (<math>{{N}_{AL{{L}_{Down}}}}\,\!</math>). | ||

====MTBE (Uptime)==== | |||

his is the mean time between all downing events for the system, considering only the time that the system was up. This is calculated by dividing system uptime by the number of downing events (<math>{{N}_{AL{{L}_{Down}}}}\,\!</math>). | |||

===System Uptime/Downtime=== | ===System Uptime/Downtime=== | ||

====Uptime, | ====Uptime, <math>{{T}_{UP}}\,\!</math> ==== | ||

This is the average time the system was up and operating. This is obtained by taking the sum of the uptimes for each simulation and dividing it by the number of simulations. For this example, the uptime is 269.137. To compute the Operational Availability, | This is the average time the system was up and operating. This is obtained by taking the sum of the uptimes for each simulation and dividing it by the number of simulations. For this example, the uptime is 269.137. To compute the Operational Availability, <math>{{A}_{o}},\,\!</math> for this system, then: | ||

::<math>{{A}_{o}}=\frac{{{T}_{UP}}}{{{T}_{S}}} | ::<math>{{A}_{o}}=\frac{{{T}_{UP}}}{{{T}_{S}}}\,\!</math> | ||

====CM Downtime, | ====CM Downtime, <math>{{T}_{C{{M}_{Down}}}}\,\!</math> ==== | ||

This is the average time the system was down for corrective maintenance actions (CM) only. This is obtained by taking the sum of the CM downtimes for each simulation and dividing it by the number of simulations. For this example, this is 30.863. | This is the average time the system was down for corrective maintenance actions (CM) only. This is obtained by taking the sum of the CM downtimes for each simulation and dividing it by the number of simulations. For this example, this is 30.863. | ||

To compute the Inherent Availability, | To compute the Inherent Availability, <math>{{A}_{I}},\,\!</math> for this system over the observed time (which may or may not be steady state, depending on the length of the simulation), then: | ||

::<math>{{A}_{I}}=\frac{{{T}_{S}}-{{T}_{C{{M}_{Down}}}}}{{{T}_{S}}}\,\!</math> | |||

====Inspection Downtime ==== | |||

This is the average time the system was down due to inspections. This is obtained by taking the sum of the inspection downtimes for each simulation and dividing it by the number of simulations. For this example, this is zero because no inspections were defined. | This is the average time the system was down due to inspections. This is obtained by taking the sum of the inspection downtimes for each simulation and dividing it by the number of simulations. For this example, this is zero because no inspections were defined. | ||

====PM Downtime, | ====PM Downtime, <math>{{T}_{P{{M}_{Down}}}}\,\!</math>==== | ||

This is the average time the system was down due to preventive maintenance (PM) actions. This is obtained by taking the sum of the PM downtimes for each simulation and dividing it by the number of simulations. For this example, this is zero because no PM actions were defined. | This is the average time the system was down due to preventive maintenance (PM) actions. This is obtained by taking the sum of the PM downtimes for each simulation and dividing it by the number of simulations. For this example, this is zero because no PM actions were defined. | ||

====OC Downtime, | ====OC Downtime, <math>{{T}_{O{{C}_{Down}}}}\,\!</math>==== | ||

This is the average time the system was down due to on-condition maintenance (PM) actions. This is obtained by taking the sum of the OC downtimes for each simulation and dividing it by the number of simulations. For this example, this is zero because no OC actions were defined. | This is the average time the system was down due to on-condition maintenance (PM) actions. This is obtained by taking the sum of the OC downtimes for each simulation and dividing it by the number of simulations. For this example, this is zero because no OC actions were defined. | ||

==== | ====Waiting Downtime, <math>{{T}_{W{{ait}_{Down}}}}\,\!</math>==== | ||

< | |||

This is the downtime due to all events. | This is the amount of time that the system was down due to crew and spare part wait times or crew conflict times. For this example, this is zero because no crews or spare part pools were defined. | ||

====Total Downtime, <math>{{T}_{Down}}\,\!</math>==== | |||

This is the downtime due to all events. In general, one may look at this as the sum of the above downtimes. However, this is not always the case. It is possible to have actions that overlap each other, depending on the options and settings for the simulation. Furthermore, there are other events that can cause the system to go down that do not get counted in any of the above categories. As an example, in the case of standby redundancy with a switch delay, if the settings are to reactivate the failed component after repair, the system may be down during the switch-back action. This downtime does not fall into any of the above categories but it is counted in the total downtime. | |||

For this example, this is identical to <math>{{T}_{C{{M}_{Down}}}}\,\!</math>. | |||

===System Downing Events=== | ===System Downing Events=== | ||

System downing events are events associated with downtime. Note that events with zero duration will appear in this section only if the task properties specify that the task brings the system down or if the task properties specify that the task brings the item down and the item’s failure brings the system down. | System downing events are events associated with downtime. Note that events with zero duration will appear in this section only if the task properties specify that the task brings the system down or if the task properties specify that the task brings the item down and the item’s failure brings the system down. | ||

====Number of Failures, <math>{{N}_{{{F}_{Down}}}}\,\!</math>==== | |||

This is the average number of system downing failures. Unlike the Expected Number of Failures, <math>{{N}_{F}},\,\!</math> this number does not include failures with zero duration. For this example, this is 3.188. | |||

====Number of Failures, | |||

This is the average number of system downing failures. Unlike the Expected Number of Failures, | |||

====Number of CMs, | ====Number of CMs, <math>{{N}_{C{{M}_{Down}}}}\,\!</math>==== | ||

This is the number of corrective maintenance actions that caused the system to fail. It is obtained by taking the sum of all CM actions that caused the system to fail divided by the number of simulations. It does not include CM events of zero duration. For this example, this is 3.188. Note that this may differ from the Number of Failures, | This is the number of corrective maintenance actions that caused the system to fail. It is obtained by taking the sum of all CM actions that caused the system to fail divided by the number of simulations. It does not include CM events of zero duration. For this example, this is 3.188. Note that this may differ from the Number of Failures, <math>{{N}_{{{F}_{Down}}}}\,\!</math>. An example would be a case where the system has failed, but due to other settings for the simulation, a CM is not initiated (e.g., an inspection is needed to initiate a CM). | ||

====Number of Inspections, | ====Number of Inspections, <math>{{N}_{{{I}_{Down}}}}\,\!</math>==== | ||

This is the number of inspection actions that caused the system to fail. It is obtained by taking the sum of all inspection actions that caused the system to fail divided by the number of simulations. It does not include inspection events of zero duration. For this example, this is zero. | This is the number of inspection actions that caused the system to fail. It is obtained by taking the sum of all inspection actions that caused the system to fail divided by the number of simulations. It does not include inspection events of zero duration. For this example, this is zero. | ||

====Number of PMs, | ====Number of PMs, <math>{{N}_{P{{M}_{Down}}}}\,\!</math>==== | ||

This is the number of PM actions that caused the system to fail. It is obtained by taking the sum of all PM actions that caused the system to fail divided by the number of simulations. It does not include PM events of zero duration. For this example, this is zero. | This is the number of PM actions that caused the system to fail. It is obtained by taking the sum of all PM actions that caused the system to fail divided by the number of simulations. It does not include PM events of zero duration. For this example, this is zero. | ||

====Number of OCs, | ====Number of OCs, <math>{{N}_{O{{C}_{Down}}}}\,\!</math>==== | ||

This is the number of OC actions that caused the system to fail. It is obtained by taking the sum of all OC actions that caused the system to fail divided by the number of simulations. It does not include OC events of zero duration. For this example, this is zero. | This is the number of OC actions that caused the system to fail. It is obtained by taking the sum of all OC actions that caused the system to fail divided by the number of simulations. It does not include OC events of zero duration. For this example, this is zero. | ||

====Total Events, | ====Number of OFF Events by Trigger, <math>{{N}_{O{{FF}_{Down}}}}\,\!</math>==== | ||

This is the total number of system downing events. It also does not include events of zero duration. It is possible that this number may differ from the sum of the other listed events. As an example, consider the case where a failure does not get repaired until an inspection, but the inspection occurs after the simulation end time. In this case, the number of inspections, CMs and PMs will be zero while the number of total events will be one. | This is the total number of events where the system is turned off by state change triggers. An OFF event is not a system failure but it may be included in system reliability calculations. For this example, this is zero. | ||

====Total Events, <math>{{N}_{AL{{L}_{Down}}}}\,\!</math>==== | |||

This is the total number of system downing events. It also does not include events of zero duration. It is possible that this number may differ from the sum of the other listed events. As an example, consider the case where a failure does not get repaired until an inspection, but the inspection occurs after the simulation end time. In this case, the number of inspections, CMs and PMs will be zero while the number of total events will be one. | |||

===Costs and Throughput=== | ===Costs and Throughput=== | ||

Cost and throughput results are discussed in later sections. | Cost and throughput results are discussed in later sections. | ||

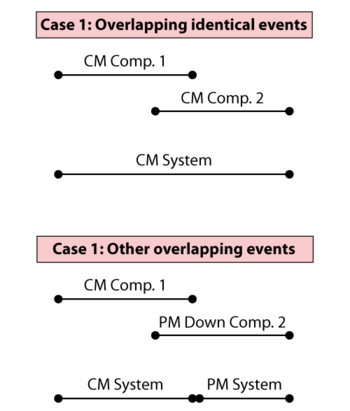

===Note About Overlapping Downing Events=== | ===Note About Overlapping Downing Events=== | ||

It is important to note that two identical system downing events (that are continuous or overlapping) may be counted and viewed differently. As shown in Case 1 of the following figure, two overlapping failure events are counted as only one event from the system perspective because the system was never restored and remained in the same down state, even though that state was caused by two different components. Thus, the number of downing events in this case is one and the duration is as shown in CM system. In the case that the events are different, as shown in Case 2 of the figure below, two events are counted, the CM and the PM. However, the downtime attributed to each event is different from the actual time of each event. In this case, the system was first down due to a CM and remained in a down state due to the CM until that action was over. However, immediately upon completion of that action, the system remained down but now due to a PM action. In this case, only the PM action portion that kept the system down is counted. | It is important to note that two identical system downing events (that are continuous or overlapping) may be counted and viewed differently. As shown in Case 1 of the following figure, two overlapping failure events are counted as only one event from the system perspective because the system was never restored and remained in the same down state, even though that state was caused by two different components. Thus, the number of downing events in this case is one and the duration is as shown in CM system. In the case that the events are different, as shown in Case 2 of the figure below, two events are counted, the CM and the PM. However, the downtime attributed to each event is different from the actual time of each event. In this case, the system was first down due to a CM and remained in a down state due to the CM until that action was over. However, immediately upon completion of that action, the system remained down but now due to a PM action. In this case, only the PM action portion that kept the system down is counted. | ||

===System Point | [[Image:8.9.png|center|350px|Duration and count of different overlapping events.|link=]] | ||

< | |||

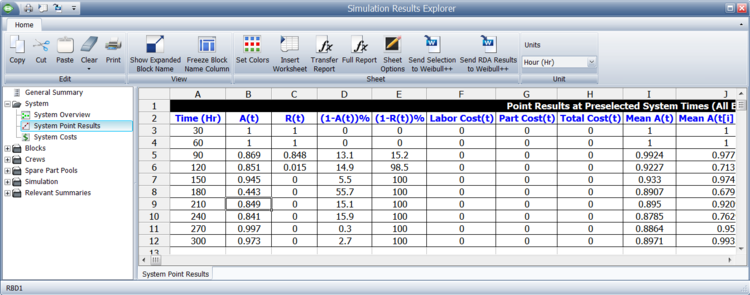

===System Point Results=== | |||

The system point results, as shown in the figure below, shows the Point Availability (All Events), <math>A\left( t \right)\,\!</math>, and Point Reliability, <math>R(t)\,\!</math>, as defined in the previous section. These are computed and returned at different points in time, based on the number of intervals selected by the user. Additionally, this window shows <math>(1-A(t))\,\!</math>, <math>(1-R(t))\,\!</math>, <math>\text{Labor Cost(t)}\,\!</math>,<math>\text{Part Cost(t)}\,\!</math>, <math>Cost(t)\,\!</math>, <math>Mean\,\!</math> <math>A(t)\,\!</math>, <math>Mean\,\!</math> <math>A({{t}_{i}}-{{t}_{i-1}})\,\!</math>, <math>System\,\!</math>, <math>Failures(t)\,\!</math>, <math>\text{System Off Events by Trigger(t)}\,\!</math> and <math>Throughput(t)\,\!</math>. | |||

[[Image:BS8.10.png|center|750px|link=]] | |||

The number of intervals shown is based on the increments set. In this figure, the number of increments set was 300, which implies that the results should be shown every hour. The results shown in this figure are for 10 increments, or shown every 30 hours. | |||

[[Image:BS8.10.png | |||

=Results by Component= | =Results by Component= | ||

Simulation results for each component can also be viewed. The figure below shows the results for component A. These results are explained in the sections that follow. | Simulation results for each component can also be viewed. The figure below shows the results for component A. These results are explained in the sections that follow. | ||

[[Image:8.11.gif|center|600px|The Block Details results for component A.|link=]] | |||

[[Image:8.11.gif|center| | |||

===General Information=== | ===General Information=== | ||

====Number of Block Downing Events, | ====Number of Block Downing Events, <math>Componen{{t}_{NDE}}\,\!</math>==== | ||

This the number of times the component went down (failed). It includes all downing events. | This the number of times the component went down (failed). It includes all downing events. | ||

====Number of System Downing Events, | ====Number of System Downing Events, <math>Componen{{t}_{NSDE}}\,\!</math>==== | ||

This is the number of times that this component's downing caused the system to be down. For component <math>A\,\!</math>, this is 2.038. Note that this value is the same in this case as the number of component failures, since the component A is reliability-wise in series with components D and components B, C. If this were not the case (e.g., if they were in a parallel configuration, like B and C), this value would be different. | |||

This is the number of times | |||

====Number of System Downing Failures, | ====Number of Failures, <math>Componen{{t}_{NF}}\,\!</math>==== | ||

This is the number of times the component failed and does not include other downing events. Note that this could also be interpreted as the number of spare parts required for CM actions for this component. For component <math>A\,\!</math>, this is 2.038. | |||

====Number of System Downing Failures, <math>Componen{{t}_{NSDF}}\,\!</math>==== | |||

This is the number of times that this component's failure caused the system to be down. Note that this may be different from the Number of System Downing Events. It only counts the failure events that downed the system and does not include zero duration system failures. | This is the number of times that this component's failure caused the system to be down. Note that this may be different from the Number of System Downing Events. It only counts the failure events that downed the system and does not include zero duration system failures. | ||

====Number of OFF events by Trigger, <math>Componen{{t}_{OFF}}\,\!</math>==== | |||

The total number of events where the block is turned off by state change triggers. An OFF event is not a failure but it may be included in system reliability calculations. | |||

==== | ====Mean Availability (All Events), <math>{{\overline{A}}_{AL{{L}_{Component}}}}\,\!</math>==== | ||

This has the same definition as for the system with the exception that this accounts only for the component. | This has the same definition as for the system with the exception that this accounts only for the component. | ||

====Mean Availability (w/o PM, OC & Inspection), | ====Mean Availability (w/o PM, OC & Inspection), <math>{{\overline{A}}_{C{{M}_{Component}}}}\,\!</math>==== | ||

The mean availability of all downing events for the block, not including preventive, on condition or inspection tasks, during the simulation. | The mean availability of all downing events for the block, not including preventive, on condition or inspection tasks, during the simulation. | ||

====Block Uptime, | ====Block Uptime, <math>{{T}_{Componen{{t}_{UP}}}}\,\!</math>==== | ||

This is tThe total amount of time that the block was up (i.e., operational) during the simulation. For component <math>A\,\!</math>, this is 279.8212. | |||

====Block Downtime, <math>{{T}_{Componen{{t}_{Down}}}}\,\!</math>==== | |||

This is the average time the component was down for any reason. For component <math>A\,\!</math>, this is 20.1788. | |||

Block Downtime shows the total amount of time that the block was down (i.e., not operational) during the simulation. | Block Downtime shows the total amount of time that the block was down (i.e., not operational) during the simulation. | ||

===Metrics=== | ===Metrics=== | ||

====RS DECI==== | ====RS DECI==== | ||

The ReliaSoft Downing Event Criticality Index for the block. This is a relative index showing the percentage of times that a downing event of the block caused the system to go down (i.e., the number of system downing events caused by the block divided by the total number of system downing events). For component | The ReliaSoft Downing Event Criticality Index for the block. This is a relative index showing the percentage of times that a downing event of the block caused the system to go down (i.e., the number of system downing events caused by the block divided by the total number of system downing events). For component <math>A\,\!</math>, this is 63.93%. This implies that 63.93% of the times that the system went down, the system failure was due to the fact that component <math>A\,\!</math> went down. This is obtained from: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

RSDECI=\frac{Componen{{t}_{NSDE}}}{{{N}_{AL{{L}_{Down}}}}} | RSDECI=\frac{Componen{{t}_{NSDE}}}{{{N}_{AL{{L}_{Down}}}}} | ||

\end{align}</math | \end{align}\,\!</math> | ||

====Mean Time Between Downing Events==== | ====Mean Time Between Downing Events==== | ||

This is the mean time between downing events of the component, which is computed from: | This is the mean time between downing events of the component, which is computed from: | ||

::<math>MTBDE=\frac{{{T}_{Componen{{t}_{UP}}}}}{Componen{{t}_{NDE}}}</math> | ::<math>MTBDE=\frac{{{T}_{Componen{{t}_{UP}}}}}{Componen{{t}_{NDE}}}\,\!</math> | ||

For component | For component <math>A\,\!</math>, this is 137.3019. | ||

====RS FCI==== | ====RS FCI==== | ||

ReliaSoft's Failure Criticality Index (RS FCI) is a relative index showing the percentage of times that a failure of this component caused a system failure. For component | ReliaSoft's Failure Criticality Index (RS FCI) is a relative index showing the percentage of times that a failure of this component caused a system failure. For component <math>A\,\!</math>, this is 63.93%. This implies that 63.93% of the times that the system failed, it was due to the fact that component <math>A\,\!</math> failed. This is obtained from: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

RSFCI=\frac{Componen{{t}_{NSDF}}+{{F}_{ZD}}}{{{N}_{F}}} | RSFCI=\frac{Componen{{t}_{NSDF}}+{{F}_{ZD}}}{{{N}_{F}}} | ||

\end{align}</math> | \end{align}\,\!</math> | ||

<math>{{F}_{ZD}}</math> | <math>{{F}_{ZD}}\,\!</math> is a special counter of system failures not included in <math>Componen{{t}_{NSDF}}\,\!</math>. This counter is not explicitly shown in the results but is maintained by the software. The reason for this counter is the fact that zero duration failures are not counted in <math>Componen{{t}_{NSDF}}\,\!</math> since they really did not down the system. However, these zero duration failures need to be included when computing RS FCI. | ||

It is important to note that for both RS DECI and RS FCI, and if overlapping events are present, the component that caused the system event gets credited with the system event. Subsequent component events that do not bring the system down (since the system is already down) do not get counted in this metric. | It is important to note that for both RS DECI and RS FCI, and if overlapping events are present, the component that caused the system event gets credited with the system event. Subsequent component events that do not bring the system down (since the system is already down) do not get counted in this metric. | ||

====MTBF, | ====MTBF, <math>MTB{{F}_{C}}\,\!</math>==== | ||

Mean time between failures is the mean (average) time between failures of this component, in real clock time. This is computed from: | Mean time between failures is the mean (average) time between failures of this component, in real clock time. This is computed from: | ||

::<math>MTB{{F}_{C}}=\frac{{{T}_{S}}-CFDowntime}{Componen{{t}_{NF}}}</math> | ::<math>MTB{{F}_{C}}=\frac{{{T}_{S}}-CFDowntime}{Componen{{t}_{NF}}}\,\!</math> | ||

<math>CFDowntime</math> | <math>CFDowntime\,\!</math> is the downtime of the component due to failures only (without PM, OC and inspection). The discussion regarding what is a failure downtime that was presented in the section explaining Mean Availability (w/o PM & Inspection) also applies here. | ||

For component | For component <math>A\,\!</math>, this is 137.3019. Note that this value could fluctuate for the same component depending on the simulation end time. As an example, consider the deterministic scenario for this component. It fails every 100 hours and takes 10 hours to repair. Thus, it would be failed at 100, repaired by 110, failed at 210 and repaired by 220. Therefore, its uptime is 280 with two failure events, MTBF = 280/2 = 140. Repeating the same scenario with an end time of 330 would yield failures at 100, 210 and 320. Thus, the uptime would be 300 with three failures, or MTBF = 300/3 = 100. Note that this is not the same as the MTTF (mean time to failure), commonly referred to as MTBF by many practitioners. | ||

====Mean Downtime per Event, | ====Mean Downtime per Event, <math>MDPE\,\!</math>==== | ||

Mean downtime per event is the average downtime for a component event. This is computed from: | Mean downtime per event is the average downtime for a component event. This is computed from: | ||

::<math>MDPE=\frac{{{T}_{Componen{{t}_{Down}}}}}{Componen{{t}_{NDE}}}</math | ::<math>MDPE=\frac{{{T}_{Componen{{t}_{Down}}}}}{Componen{{t}_{NDE}}}\,\!</math> | ||

====RS DTCI==== | ====RS DTCI==== | ||

The ReliaSoft Downtime Criticality Index for the block. This is a relative index showing the contribution of the block to the system’s downtime (i.e., the system downtime caused by the block divided by the total system downtime). | The ReliaSoft Downtime Criticality Index for the block. This is a relative index showing the contribution of the block to the system’s downtime (i.e., the system downtime caused by the block divided by the total system downtime). | ||

==== | ====RS BCCI==== | ||

The | The ReliaSoft Block Cost Criticality Index for the block. This is a relative index showing the contribution of the block to the total costs (i.e., the total block costs divided by the total costs). | ||

=== | ====Non-Waiting Time CI==== | ||

A relative index showing the contribution of repair times to the block’s total downtime. (The ratio of the time that the crew is actively working on the item to the total down time). | |||

The | |||

=== | ====Total Waiting Time CI==== | ||

A relative index showing the contribution of wait factor times to the block’s total downtime. Wait factors include crew conflict times, crew wait times and spare part wait times. (The ratio of downtime not including active repair time). | |||

====Waiting for Opportunity/Maximum Wait Time Ratio==== | |||

A relative index showing the contribution of crew conflict times. This is the ratio of the time spent waiting for the crew to respond (not including crew logistic delays) to the total wait time (not including the active repair time). | |||

====Crew/Part Wait Ratio==== | |||

The ratio of the crew and part delays. A value of 100% means that both waits are equal. A value greater than 100% indicates that the crew delay was in excess of the part delay. For example, a value of 200% would indicate that the wait for the crew is two times greater than the wait for the part. | |||

=== | ====Part/Crew Wait Ratio==== | ||

The ratio of the part and crew delays. A value of 100% means that both waits are equal. A value greater than 100% indicates that the part delay was in excess of the crew delay. For example, a value of 200% would indicate that the wait for the part is two times greater than the wait for the crew. | |||

===Downtime Summary=== | |||

====Non-Waiting Time==== | |||

Time that the block was undergoing active maintenance/inspection by a crew. If no crew is defined, then this will return zero. | |||

====Waiting for Opportunity==== | |||

The total downtime for the block due to crew conflicts (i.e., time spent waiting for a crew while the crew is busy with another task). If no crew is defined, then this will return zero. | |||

=== | ====Waiting for Crew==== | ||

The total downtime for the block due to crew wait times (i.e., time spent waiting for a crew due to logistical delay). If no crew is defined, then this will return zero. | |||

=== | ====Waiting for Parts==== | ||

The total downtime for the block due to spare part wait times. If no spare part pool is defined then this will return zero. | |||

====Other Results of Interest==== | |||

The remaining component (block) results are similar to those defined for the system with the exception that now they apply only to the component. | |||

=Subdiagrams and Multi Blocks in Simulation= | |||

= | |||

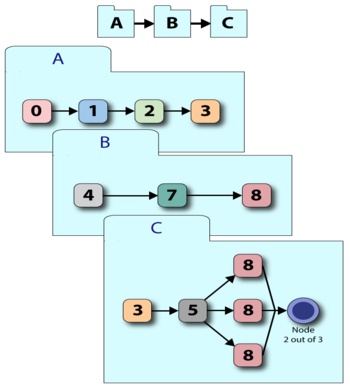

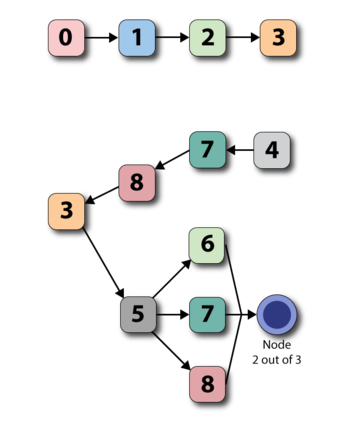

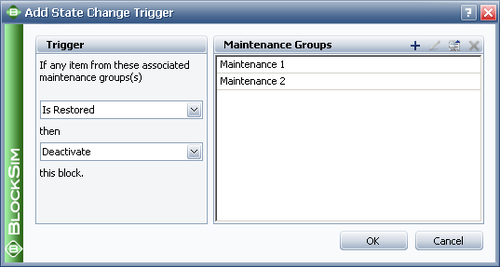

Any subdiagrams and multi blocks that may be present in the BlockSim RBD are expanded and/or merged into a single diagram before the system is simulated. As an example, consider the system shown in the figure below. | |||

[[Image:r38.png|center|350px| A system made up of three subsystems, A, B, and C.|link=]] | |||

BlockSim will internally merge the system into a single diagram before the simulation, as shown in the figure below. This means that all the failure and repair properties of the items in the subdiagrams are also considered. | BlockSim will internally merge the system into a single diagram before the simulation, as shown in the figure below. This means that all the failure and repair properties of the items in the subdiagrams are also considered. | ||

[[Image:r39.png|center|350px| The simulation engine view of the system and subdiagrams]] | [[Image:r39.png|center|350px| The simulation engine view of the system and subdiagrams|link=]] | ||

In the case of multi blocks, the blocks are also fully expanded before simulation. This means that unlike the analytical solution, the execution speed (and memory requirements) for a multi block representing ten blocks in series is identical to the representation of ten individual blocks in series. | In the case of multi blocks, the blocks are also fully expanded before simulation. This means that unlike the analytical solution, the execution speed (and memory requirements) for a multi block representing ten blocks in series is identical to the representation of ten individual blocks in series. | ||

=Containers in Simulation= | =Containers in Simulation= | ||

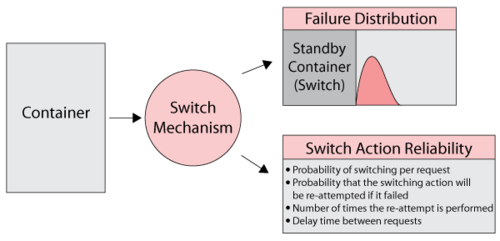

===Standby Containers=== | ===Standby Containers=== | ||

When you simulate a diagram that contains a standby container, the container acts as the switch mechanism (as shown below) in addition to defining the standby relationships and the number of active units that are required. The container's failure and repair properties are really that of the switch itself. The switch can fail with a distribution, while waiting to switch or during the switch action. Repair properties restore the switch regardless of how the switch failed. Failure of the switch itself does not bring the container down because the switch is not really needed unless called upon to switch. The container will go down if the units within the container fail or the switch is failed when a switch action is needed. The restoration time for this is based on the repair distributions of the contained units and the switch. Furthermore, the container is down during a switch process that has a delay. | |||

[[Image:8.43. | [[Image:8.43.png|center|500px| The standby container acts as the switch, thus the failure distribution of the container is the failure distribution of the switch. The container can also fail when called upon to switch.|link=]] | ||

[[Image:8_43_1_new.png|center|150px|link=]] | |||

To better illustrate this, consider the following deterministic case. | To better illustrate this, consider the following deterministic case. | ||

::#Units | ::#Units <math>A\,\!</math> and <math>B\,\!</math> are contained in a standby container. | ||

::#The standby container is the only item in the diagram, thus failure of the container is the same as failure of the system. | ::#The standby container is the only item in the diagram, thus failure of the container is the same as failure of the system. | ||

::#<math>A</math> | ::#<math>A\,\!</math> is the active unit and <math>B\,\!</math> is the standby unit. | ||

::#Unit | ::#Unit <math>A\,\!</math> fails every 100 <math>tu\,\!</math> (active) and takes 10 <math>tu\,\!</math> to repair. | ||

::#<math>B</math> | ::#<math>B\,\!</math> fails every 3 <math>tu\,\!</math> (active) and also takes 10 <math>tu\,\!</math> to repair. | ||

::#The units cannot fail while in quiescent (standby) mode. | ::#The units cannot fail while in quiescent (standby) mode. | ||

::#Furthermore, assume that the container (acting as the switch) fails every 30 | ::#Furthermore, assume that the container (acting as the switch) fails every 30 <math>tu\,\!</math> while waiting to switch and takes 4 <math>tu\,\!</math> to repair. If not failed, the container switches with 100% probability. | ||

::#The switch action takes 7 | ::#The switch action takes 7 <math>tu\,\!</math> to complete. | ||

::#After repair, unit | ::#After repair, unit <math>A\,\!</math> is always reactivated. | ||

::#The container does not operate through system failure and thus the components do not either. | ::#The container does not operate through system failure and thus the components do not either. | ||

| Line 891: | Line 409: | ||

The system event log is shown in the figure below and is as follows: | The system event log is shown in the figure below and is as follows: | ||

[[Image:BS8.44.png|center| | [[Image:BS8.44.png|center|600px| The system behavior using a standby container.|link=]] | ||

::#At 30, the switch fails and gets repaired by 34. The container switch is failed and being repaired; however, the container is up during this time. | ::#At 30, the switch fails and gets repaired by 34. The container switch is failed and being repaired; however, the container is up during this time. | ||

::#At 64, the switch fails and gets repaired by 68. The container is up during this time. | ::#At 64, the switch fails and gets repaired by 68. The container is up during this time. | ||

::#At 98, the switch fails. It will be repaired by 102. | ::#At 98, the switch fails. It will be repaired by 102. | ||

::#At 100, unit | ::#At 100, unit <math>A\,\!</math> fails. Unit <math>A\,\!</math> attempts to activate the switch to go to <math>B\,\!</math> ; however, the switch is failed. | ||

::#At 102, the switch is operational. | ::#At 102, the switch is operational. | ||

::#From 102 to 109, the switch is in the process of switching from unit | ::#From 102 to 109, the switch is in the process of switching from unit <math>A\,\!</math> to unit <math>B\,\!</math>. The container and system are down from 100 to 109. | ||

::#By 110, unit | ::#By 110, unit <math>A\,\!</math> is fixed and the system is switched back to <math>A\,\!</math> from <math>B\,\!</math>. The return switch action brings the container down for 7 <math>tu\,\!</math>, from 110 to 117. During this time, note that unit <math>B\,\!</math> has only functioned for 1 <math>tu\,\!</math>, 109 to 110. | ||

::#At 146, the switch fails and gets repaired by 150. The container is up during this time. | ::#At 146, the switch fails and gets repaired by 150. The container is up during this time. | ||

::#At 180, the switch fails and gets repaired by 184. The container is up during this time. | ::#At 180, the switch fails and gets repaired by 184. The container is up during this time. | ||

::#At 214, the switch fails and gets repaired by 218. | ::#At 214, the switch fails and gets repaired by 218. | ||

::#At 217, unit | ::#At 217, unit <math>A\,\!</math> fails. The switch is failed at this time. | ||

::#At 218, the switch is operational and the system is switched to unit | ::#At 218, the switch is operational and the system is switched to unit <math>B\,\!</math> within 7 <math>tu\,\!</math>. The container is down from 218 to 225. | ||

::#At 225, unit | ::#At 225, unit <math>B\,\!</math> takes over. After 2 <math>tu\,\!</math> of operation at 227, unit <math>B\,\!</math> fails. It will be restored by 237. | ||

::#At 227, unit | ::#At 227, unit <math>A\,\!</math> is repaired and the switchback action to unit <math>A\,\!</math> is initiated. By 234, the system is up. | ||

::#At 262, the switch fails and gets repaired by 266. The container is up during this time. | ::#At 262, the switch fails and gets repaired by 266. The container is up during this time. | ||

::#At 296, the switch fails and gets repaired by 300. The container is up during this time. | ::#At 296, the switch fails and gets repaired by 300. The container is up during this time. | ||

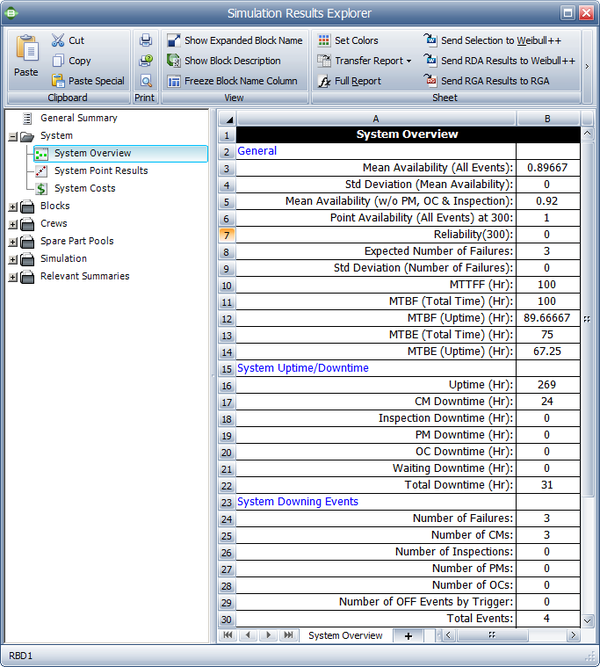

The system results are shown in the figure below and discussed next. | The system results are shown in the figure below and discussed next. | ||

[[Image:BS8.45.png | [[Image:BS8.45.png|center|600px| System overview results.|link=]] | ||

::1. System CM Downtime is 24. | ::1. System CM Downtime is 24. | ||

:::a) CM downtime includes all downtime due to failures as well as the delay in switching from a failed active unit to a standby unit. It does not include the switchback time from the standby to the restored active unit. Thus, the times from 100 to 109, 217 to 225 and 227 to 234 are included. The time to switchback, 110 to 117, is not included. | :::a) CM downtime includes all downtime due to failures as well as the delay in switching from a failed active unit to a standby unit. It does not include the switchback time from the standby to the restored active unit. Thus, the times from 100 to 109, 217 to 225 and 227 to 234 are included. The time to switchback, 110 to 117, is not included. | ||

| Line 928: | Line 445: | ||

::1) A container will only attempt to switch if there is an available non-failed item to switch to. If there is no such item, it will then switch if and when an item becomes available. The switch will cancel the action if it gets restored before an item becomes available. | ::1) A container will only attempt to switch if there is an available non-failed item to switch to. If there is no such item, it will then switch if and when an item becomes available. The switch will cancel the action if it gets restored before an item becomes available. | ||

:::a) As an example, consider the case of unit | :::a) As an example, consider the case of unit <math>A\,\!</math> failing active while unit <math>B\,\!</math> failed in a quiescent mode. If unit <math>B\,\!</math> gets restored before unit <math>A\,\!</math>, then the switch will be initiated. If unit <math>A\,\!</math> is restored before unit <math>B\,\!</math>, the switch action will not occur. | ||

::2) In cases where not all active units are required, a switch will only occur if the failed combination causes the container to fail. | ::2) In cases where not all active units are required, a switch will only occur if the failed combination causes the container to fail. | ||

:::a) For example, if | :::a) For example, if <math>A\,\!</math>, <math>B\,\!</math>, and <math>C\,\!</math> are in a container for which one unit is required to be operating and <math>A\,\!</math> and <math>B\,\!</math> are active with <math>C\,\!</math> on standby, then the failure of either <math>A\,\!</math> or <math>B\,\!</math> will not cause a switching action. The container will switch to <math>C\,\!</math> only if both <math>A\,\!</math> and <math>B\,\!</math> are failed. | ||

::3) If the container switch is failed and a switching action is required, the switching action will occur after the switch has been restored if it is still required (i.e., if the active unit is still failed). | ::3) If the container switch is failed and a switching action is required, the switching action will occur after the switch has been restored if it is still required (i.e., if the active unit is still failed). | ||

::4) If a switch fails during the delay time of the switching action based on the reliability distribution (quiescent failure mode), the action is still carried out unless a failure based on the switch probability/restarts occurs when attempting to switch. | ::4) If a switch fails during the delay time of the switching action based on the reliability distribution (quiescent failure mode), the action is still carried out unless a failure based on the switch probability/restarts occurs when attempting to switch. | ||

| Line 942: | Line 459: | ||

::9) A restart is executed every time the switch fails to switch (based on its fixed probability of switching). | ::9) A restart is executed every time the switch fails to switch (based on its fixed probability of switching). | ||

::10) If a delay is specified, restarts happen after the delay. | ::10) If a delay is specified, restarts happen after the delay. | ||

::11) If a container brings the system down, the container is responsible for the system going down (not the blocks inside the container). | ::11) If a container brings the system down, the container is responsible for the system going down (not the blocks inside the container). | ||

===Load Sharing Containers=== | ===Load Sharing Containers=== | ||

When you simulate a diagram that contains a load sharing container, the container defines the load that is shared. A load sharing container has no failure or repair distributions. The container itself is considered failed if all the blocks inside the container have failed (or <math>k\,\!</math> blocks in a <math>k\,\!</math> -out-of- <math>n\,\!</math> configuration). | |||

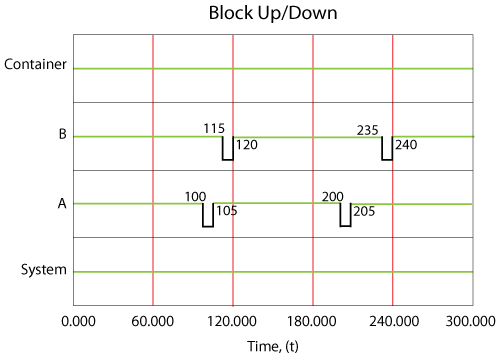

To illustrate this, consider the following container with items <math>A\,\!</math> and <math>B\,\!</math> in a load sharing redundancy. | |||

Assume that <math>A\,\!</math> fails every 100 <math>tu\,\!</math> and <math>B\,\!</math> every 120 <math>tu\,\!</math> if both items are operating and they fail in half that time if either is operating alone (i.e., the items age twice as fast when operating alone). They both get repaired in 5 <math>tu\,\!</math>. | |||

[[Image:8.46.png|center|600px| Behavior of a simple load sharing system.|link=]] | |||

[[Image:8.46.png|center| | |||

The system event log is shown in the figure above and is as follows: | The system event log is shown in the figure above and is as follows: | ||

::1. At 100, | ::1. At 100, <math>A\,\!</math> fails. It takes 5 <math>tu\,\!</math> to restore <math>A\,\!</math>. | ||

::2. From 100 to 105, | ::2. From 100 to 105, <math>B\,\!</math> is operating alone and is experiencing a higher load. | ||

::3. At 115, | ::3. At 115, <math>B\,\!</math> fails. would normally be expected to fail at 120, however: | ||

:::a) From 0 to 100, it accumulated the equivalent of 100 | :::a) From 0 to 100, it accumulated the equivalent of 100 <math>tu\,\!</math> of damage. | ||

:::b) From 100 to 105, it accumulated 10 | :::b) From 100 to 105, it accumulated 10 <math>tu\,\!</math> of damage, which is twice the damage since it was operating alone. Put another way, <math>B\,\!</math> aged by 10 <math>tu\,\!</math> over a period of 5 <math>tu\,\!</math>. | ||

:::c) At 105, | :::c) At 105, <math>A\,\!</math> is restored but <math>B\,\!</math> has only 10 <math>tu\,\!</math> of life remaining at this point. | ||

:::d) <math>B</math> | :::d) <math>B\,\!</math> fails at 115. | ||

::4. At 120, | ::4. At 120, <math>B\,\!</math> is repaired. | ||

::5. At 200, | ::5. At 200, <math>A\,\!</math> fails again. <math>A\,\!</math> would normally be expected to fail at 205; however, the failure of <math>B\,\!</math> at 115 to 120 added additional damage to <math>A\,\!</math>. In other words, the age of <math>A\,\!</math> at 115 was 10; by 120 it was 20. Thus it reached an age of 100 95 <math>tu\,\!</math> later at 200. | ||

::6. <math>A</math> | ::6. <math>A\,\!</math> is restored by 205. | ||

::7. At 235, | ::7. At 235, <math>B\,\!</math> fails. <math>B\,\!</math> would normally be expected to fail at 240; however, the failure of <math>A\,\!</math> at 200 caused the reduction. | ||

:::a) At 200, | :::a) At 200, <math>B\,\!</math> had an age of 80. | ||

:::b) By 205, | :::b) By 205, <math>B\,\!</math> had an age of 90. | ||

:::c) <math>B</math> | :::c) <math>B\,\!</math> fails 30 <math>tu\,\!</math> later at 235. | ||

::8. The system itself never failed. | ::8. The system itself never failed. | ||

| Line 979: | Line 494: | ||

:::a) If a path inside the container is down, blocks inside the container that are in that path do not continue to operate. | :::a) If a path inside the container is down, blocks inside the container that are in that path do not continue to operate. | ||

:::b) Blocks that are up do not continue to operate while the container is down. | :::b) Blocks that are up do not continue to operate while the container is down. | ||

::2. If a container brings the system down, the block that brought the container down is responsible for the system going down. (This is the opposite of standby containers.) | ::2. If a container brings the system down, the block that brought the container down is responsible for the system going down. (This is the opposite of standby containers.) | ||

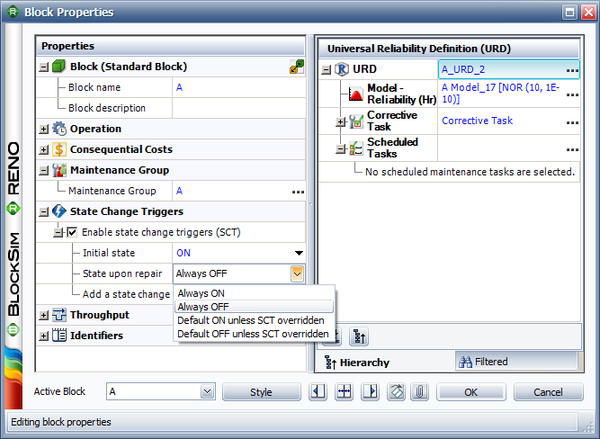

=State Change Triggers= | =State Change Triggers= | ||

| Line 988: | Line 502: | ||

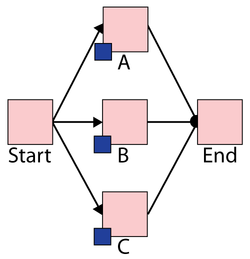

Even though the examples and explanations presented here are deterministic, the sequence of events and logic used to view the system is the same as the one that would be used during simulation. The difference is that the process would be repeated multiple times during simulation and the results presented would be the average results over the multiple runs. | Even though the examples and explanations presented here are deterministic, the sequence of events and logic used to view the system is the same as the one that would be used during simulation. The difference is that the process would be repeated multiple times during simulation and the results presented would be the average results over the multiple runs. | ||

Additionally, multiple metrics and results are presented and defined in this chapter. Many of these results can also be used to obtain additional metrics not explicitly given in BlockSim's Simulation Results Explorer. As an example, to compute mean availability with inspections but without PMs, the explicit downtimes given for each event could be used. Furthermore, all of the results given are for operating times starting at zero to a specified end time (although the components themselves could have been defined with a non-zero starting age). Results for a starting time other than zero could be obtained by running two simulations and looking at the difference in the detailed results where applicable. As an example, the difference in uptimes and downtimes can be used to determine availabilities for a specific time window. | Additionally, multiple metrics and results are presented and defined in this chapter. Many of these results can also be used to obtain additional metrics not explicitly given in BlockSim's Simulation Results Explorer. As an example, to compute mean availability with inspections but without PMs, the explicit downtimes given for each event could be used. Furthermore, all of the results given are for operating times starting at zero to a specified end time (although the components themselves could have been defined with a non-zero starting age). Results for a starting time other than zero could be obtained by running two simulations and looking at the difference in the detailed results where applicable. As an example, the difference in uptimes and downtimes can be used to determine availabilities for a specific time window. | ||

Latest revision as of 18:48, 10 March 2023

Having introduced some of the basic theory and terminology for repairable systems in Introduction to Repairable Systems, we will now examine the steps involved in the analysis of such complex systems. We will begin by examining system behavior through a sequence of discrete deterministic events and expand the analysis using discrete event simulation.

Simple Repairs

Deterministic View, Simple Series

To first understand how component failures and simple repairs affect the system and to visualize the steps involved, let's begin with a very simple deterministic example with two components, [math]\displaystyle{ A\,\! }[/math] and [math]\displaystyle{ B\,\! }[/math], in series.

Component [math]\displaystyle{ A\,\! }[/math] fails every 100 hours and component [math]\displaystyle{ B\,\! }[/math] fails every 120 hours. Both require 10 hours to get repaired. Furthermore, assume that the surviving component stops operating when the system fails (thus not aging). NOTE: When a failure occurs in certain systems, some or all of the system's components may or may not continue to accumulate operating time while the system is down. For example, consider a transmitter-satellite-receiver system. This is a series system and the probability of failure for this system is the probability that any of the subsystems fail. If the receiver fails, the satellite continues to operate even though the receiver is down. In this case, the continued aging of the components during the system inoperation must be taken into consideration, since this will affect their failure characteristics and have an impact on the overall system downtime and availability.

The system behavior during an operation from 0 to 300 hours would be as shown in the figure below.

Specifically, component [math]\displaystyle{ A\,\! }[/math] would fail at 100 hours, causing the system to fail. After 10 hours, component [math]\displaystyle{ A\,\! }[/math] would be restored and so would the system. The next event would be the failure of component [math]\displaystyle{ B\,\! }[/math]. We know that component [math]\displaystyle{ B\,\! }[/math] fails every 120 hours (or after an age of 120 hours). Since a component does not age while the system is down, component [math]\displaystyle{ B\,\! }[/math] would have reached an age of 120 when the clock reaches 130 hours. Thus, component [math]\displaystyle{ B\,\! }[/math] would fail at 130 hours and be repaired by 140 and so forth. Overall in this scenario, the system would be failed for a total of 40 hours due to four downing events (two due to [math]\displaystyle{ A\,\! }[/math] and two due to [math]\displaystyle{ B\,\! }[/math] ). The overall system availability (average or mean availability) would be [math]\displaystyle{ 260/300=0.86667\,\! }[/math]. Point availability is the availability at a specific point time. In this deterministic case, the point availability would always be equal to 1 if the system is up at that time and equal to zero if the system is down at that time.

Operating Through System Failure

In the prior section we made the assumption that components do not age when the system is down. This assumption applies to most systems. However, under special circumstances, a unit may age even while the system is down. In such cases, the operating profile will be different from the one presented in the prior section. The figure below illustrates the case where the components operate continuously, regardless of the system status.