|

|

| (111 intermediate revisions by 9 users not shown) |

| Line 1: |

Line 1: |

| {{template:RGA BOOK|13|Fielded Systems}} | | {{template:RGA BOOK|6.1|Repairable Systems Analysis}} |

| | Data from systems in the field can be analyzed in the RGA software. This type of data is called ''fielded systems data'' and is analogous to warranty data. Fielded systems can be categorized into two basic types: one-time or non-repairable systems, and reusable or repairable systems. In the latter case, under continuous operation, the system is repaired, but not replaced after each failure. For example, if a water pump in a vehicle fails, the water pump is replaced and the vehicle is repaired. |

|

| |

|

| =Fielded Systems=

| | This chapter presents repairable systems analysis, where the reliability of a system can be tracked and quantified based on data from multiple systems in the field. The next chapter will present [[Fleet Data Analysis|fleet analysis]], where data from multiple systems in the field can be collected and analyzed so that reliability metrics for the fleet as a whole can be quantified. |

| The previous chapters presented analysis methods for data obtained during developmental testing. However, data from systems in the field can also be analyzed in RGA. This type of data is called fielded systems data and is analogous to warranty data. Fielded systems can be categorized into two basic types: one-time or nonrepairable systems and reusable or repairable systems. In the latter case, under continuous operation, the system is repaired, but not replaced after each failure. For example, if a water pump in a vehicle fails, the water pump is replaced and the vehicle is repaired.

| |

| Two types of analysis are presented in this chapter. The first is repairable systems analysis where the reliability of a system can be tracked and quantified based on data from multiple systems in the field. The second is fleet analysis where data from multiple systems in the field can be collected and analyzed so that reliability metrics for the fleet as a whole can be quantified.

| |

| ==Repairable Systems Analysis==

| |

| ===Background===

| |

| Most complex systems, such as automobiles, communication systems, aircraft, printers, medical diagnostics systems, helicopters, etc., are repaired and not replaced when they fail. When these systems are fielded or subjected to a customer use environment, it is often of considerable interest to determine the reliability and other performance characteristics under these conditions. Areas of interest may include assessing the expected number of failures during the warranty period, maintaining a minimum mission reliability, evaluating the rate of wearout, determining when to replace or overhaul a system and minimizing life cycle costs. In general, a lifetime distribution, such as the Weibull distribution, cannot be used to address these issues. In order to address the reliability characteristics of complex repairable systems, a process is often used instead of a distribution. The most popular process model is the Power Law model. This model is popular for several reasons. One is that it has a very practical foundation in terms of minimal repair. This is the situation when the repair of a failed system is just enough to get the system operational again. Second, if the time to first failure follows the Weibull distribution, then each succeeding failure is governed by the Power Law model in the case of minimal repair. From this point of view, the Power Law model is an extension of the Weibull distribution.

| |

| <br>

| |

|

| |

|

| Sometimes, the Crow Extended model, which was introduced in Chapter 9 for the developmental data, is also applied for fielded repairable systems. Applying the Crow Extended model on repairable system data allows analysts to project the system MTBF after reliability-related issues are addressed during the field operation. Projections are calculated based on the mode classifications (A, BC and BD). The calculation procedure is the same as the one for the developmental data and is not repeated in this chapter. | | ==Background== |

| <br>

| | Most complex systems, such as automobiles, communication systems, aircraft, printers, medical diagnostics systems, helicopters, etc., are repaired and not replaced when they fail. When these systems are fielded or subjected to a customer use environment, it is often of considerable interest to determine the reliability and other performance characteristics under these conditions. Areas of interest may include assessing the expected number of failures during the warranty period, maintaining a minimum mission reliability, evaluating the rate of wearout, determining when to replace or overhaul a system and minimizing life cycle costs. In general, a lifetime distribution, such as the Weibull distribution, cannot be used to address these issues. In order to address the reliability characteristics of complex repairable systems, a process is often used instead of a distribution. The most popular process model is the Power Law model. This model is popular for several reasons. One is that it has a very practical foundation in terms of minimal repair, which is a situation where the repair of a failed system is just enough to get the system operational again. Second, if the time to first failure follows the Weibull distribution, then each succeeding failure is governed by the Power Law model as in the case of minimal repair. From this point of view, the Power Law model is an extension of the Weibull distribution. |

| | |

| | Sometimes, the [[Crow Extended]] model, which was introduced in a previous chapter for analyzing developmental data, is also applied for fielded repairable systems. Applying the Crow Extended model on repairable system data allows analysts to project the system MTBF after reliability-related issues are addressed during the field operation. Projections are calculated based on the mode classifications (A, BC and BD). The calculation procedure is the same as the one for the developmental data, and is not repeated in this chapter. |

|

| |

|

| ===Distribution Example=== | | ===Distribution Example=== |

| <br>

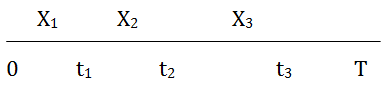

| | Visualize a socket into which a component is inserted at time 0. When the component fails, it is replaced immediately with a new one of the same kind. After each replacement, the socket is put back into an ''as good as new'' condition. Each component has a time-to-failure that is determined by the underlying distribution. It is important to note that a distribution relates to a single failure. The sequence of failures for the socket constitutes a random process called a ''renewal process''. In the illustration below, the component life is <math>{{X}_{j}}\,\!</math>, and <math>{{t}_{j}}\,\!</math> is the system time to the <math>{{j}^{th}}\,\!</math> failure. |

| Visualize a socket into which a component is inserted at time <math>0</math> . When the component fails, it is replaced immediately with a new one of the same kind. After each failure, the socket is put back into an ''as good as new'' condition. Each component has a time-to-failure that is determined by the underlying distribution. It is important to note that a distribution relates to a single failure. The sequence of failures for the socket constitutes a random process called a renewal process. In the illustration below, the component life is <math>{{X}_{j}}</math> and <math>{{t}_{j}}</math> is the system time to the <math>{{j}^{th}}</math> failure. | | |

|

| | [[File:Rga12.png|center|450px|Instantaneous Failure Intensity vs. Time plot.]] |

| Each component life <math>{{X}_{j}}</math> in the socket is governed by the same distribution <math>F(x)</math> .

| |

| <br>

| |

| A distribution, such as the Weibull, governs a single lifetime. There is only one event associated with a distribution. The distribution <math>F(x)</math> is the probability that the life of the component in the socket is less than <math>x</math> . In the illustration above, <math>{{X}_{1}}</math> is the life of the first component in the socket. <math>F(x)</math> is the probability that the first component in the socket fails in time <math>x</math> . When the first component fails, it is replaced in the socket with a new component of the same type. The probability that the life of the second component is less than <math>x</math> is given by the same distribution function, <math>F(x)</math> . For the Weibull distribution:

| |

|

| |

|

| | Each component life <math>{{X}_{j}}\,\!</math> in the socket is governed by the same distribution <math>F(x)\,\!</math>. |

|

| |

|

| ::<math>F(x)=1-{{e}^{-\lambda {{x}^{\beta }}}}</math>

| | A distribution, such as Weibull, governs a single lifetime. There is only one event associated with a distribution. The distribution <math>F(x)\,\!</math> is the probability that the life of the component in the socket is less than <math>x\,\!</math>. In the illustration above, <math>{{X}_{1}}\,\!</math> is the life of the first component in the socket. <math>F(x)\,\!</math> is the probability that the first component in the socket fails in time <math>x\,\!</math>. When the first component fails, it is replaced in the socket with a new component of the same type. The probability that the life of the second component is less than <math>x\,\!</math> is given by the same distribution function, <math>F(x)\,\!</math>. For the Weibull distribution: |

|

| |

|

| | :<math>F(x)=1-{{e}^{-\lambda {{x}^{\beta }}}}\,\!</math> |

|

| |

|

| A distribution is also characterized by its density function, such that: | | A distribution is also characterized by its density function, such that: |

|

| |

|

| | | :<math>f(x)=\frac{d}{dx}F(x)\,\!</math> |

| ::<math>f(x)=\frac{d}{dx}F(x)</math>

| |

| | |

|

| |

|

| The density function for the Weibull distribution is: | | The density function for the Weibull distribution is: |

|

| |

|

| | | :<math>f(x)=\lambda \beta {{x}^{\beta -1}}\cdot {{e}^{-\lambda {{x}^{\beta }}}}\,\!</math> |

| ::<math>f(x)=\lambda \beta {{x}^{\beta -1}}\cdot {{e}^{-\lambda {{x}^{\beta }}}}</math>

| |

| | |

|

| |

|

| In addition, an important reliability property of a distribution function is the failure rate, which is given by: | | In addition, an important reliability property of a distribution function is the failure rate, which is given by: |

|

| |

|

| | :<math>r(x)=\frac{f(x)}{1-F(x)}\,\!</math> |

|

| |

|

| ::<math>r(x)=\frac{f(x)}{1-F(x)}</math>

| | The interpretation of the failure rate is that for a small interval of time <math>\Delta x\,\!</math>, <math>r(x)\Delta x\,\!</math> is approximately the probability that a component in the socket will fail between time <math>x\,\!</math> and time <math>x+\Delta x\,\!</math>, given that the component has not failed by time <math>x\,\!</math>. For the Weibull distribution, the failure rate is given by: |

|

| |

|

| | :<math>\begin{align} |

| | r(x)=\lambda \beta {{x}^{\beta -1}} |

| | \end{align}\,\!</math> |
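The three Weibull relationships above can be checked numerically. The following is a minimal sketch, assuming the <math>\lambda, \beta\,\!</math> parameterization used in this section; the function names and the parameter values are illustrative only and do not come from the RGA software.

```python
import math

def weibull_cdf(x, lam, beta):
    """F(x) = 1 - exp(-lam * x**beta): probability that the life is less than x."""
    return 1.0 - math.exp(-lam * x ** beta)

def weibull_pdf(x, lam, beta):
    """f(x) = dF/dx = lam * beta * x**(beta - 1) * exp(-lam * x**beta)."""
    return lam * beta * x ** (beta - 1) * math.exp(-lam * x ** beta)

def weibull_failure_rate(x, lam, beta):
    """r(x) = f(x) / (1 - F(x)), which simplifies to lam * beta * x**(beta - 1)."""
    return weibull_pdf(x, lam, beta) / (1.0 - weibull_cdf(x, lam, beta))

# Illustrative (assumed) values, not taken from the text.
lam, beta, x = 0.002, 1.5, 100.0
closed_form_rate = lam * beta * x ** (beta - 1)
ratio_rate = weibull_failure_rate(x, lam, beta)
```

Both `closed_form_rate` and `ratio_rate` should agree up to floating-point rounding, which confirms that the density and the failure rate are consistent with <math>F(x)\,\!</math>.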

|

| |

|

| The interpretation of the failure rate is that for a small interval of time <math>\Delta x</math> , <math>r(x)\Delta x</math> is approximately the probability that a component in the socket will fail between time <math>x</math> and time <math>x+\Delta x</math> , given that the component has not failed by time <math>x</math> . For the Weibull distribution, the failure rate is given by:

| | It is important to note the condition that the component has not failed by time <math>x\,\!</math>. Again, a distribution deals with one lifetime of a component and does not allow for more than one failure. The socket has many failures and each failure time is individually governed by the same distribution. In other words, the failure times are independent of each other. If the failure rate is increasing, then this is indicative of component wearout. If the failure rate is decreasing, then this is indicative of infant mortality. If the failure rate is constant, then the component failures follow an exponential distribution. For the Weibull distribution, the failure rate is increasing for <math>\beta >1\,\!</math>, decreasing for <math>\beta<1\,\!</math> and constant for <math>\beta =1\,\!</math>. Each time a component in the socket is replaced, the failure rate of the new component goes back to the value at time 0. This means that the socket is as good as new after each failure and each subsequent replacement by a new component. This process is continued for the operation of the socket. |

|

| |

|

|

| |

| ::<math>r(x)=\lambda \beta {{x}^{\beta -1}}</math>

| |

|

| |

|

| |

| It is important to note the condition that the component has not failed by time <math>x</math>. Again, a distribution deals with one lifetime of a component and does not allow for more than one failure. The socket has many failures and each failure time is individually governed by the same distribution. In other words, the failure times are independent of each other. If the failure rate is increasing, then this is indicative of component wearout. If the failure rate is decreasing, then this is indicative of infant mortality. If the failure rate is constant, then the component failures follow an exponential distribution. For the Weibull distribution, the failure rate is increasing for <math>\beta >1</math>, decreasing for <math>\beta <1</math> and constant for <math>\beta =1</math>. Each time a component in the socket is replaced, the failure rate of the new component goes back to the value at time <math>0</math>. This means that the socket is as good as new after each failure and the subsequent replacement by a new component. This process is continued for the operation of the socket.

| |

| <br>

| |

| ===Process Example=== | | ===Process Example=== |

| <br>

| | Now suppose that a system consists of many components with each component in a socket. A failure in any socket constitutes a failure of the system. Each component in a socket is a renewal process governed by its respective distribution function. When the system fails due to a failure in a socket, the component is replaced and the socket is again as good as new. The system has been repaired. Because there are many other components still operating with various ages, the system is not typically put back into a like new condition after the replacement of a single component. For example, a car is not as good as new after the replacement of a failed water pump. Therefore, distribution theory does not apply to the failures of a complex system, such as a car. In general, the intervals between failures for a complex repairable system do not follow the same distribution. Distributions apply to the components that are replaced in the sockets, but not at the system level. At the system level, a distribution applies to the very first failure. There is one failure associated with a distribution. For example, the very first system failure may follow a Weibull distribution. |

| Now suppose that a system consists of many components with each component in a socket. A failure in any socket constitutes a failure of the system. Each component in a socket is a renewal process governed by its respective distribution function. When the system fails due to a failure in a socket, the component is replaced and the socket is again as good as new. The system has been repaired. Because there are many other components still operating with various ages, the system is not typically put back into a like new condition after the replacement of a single component. For example, a car is not as good as new after the replacement of a failed water pump. Therefore, distribution theory does not apply to the failures of a complex system, such as a car. In general, the intervals between failures for a complex repairable system do not follow the same distribution. Distributions apply to the components that are replaced in the sockets but not at the system level. At the system level, a distribution applies to the very first failure. There is one failure associated with a distribution. For example, the very first system failure may follow a Weibull distribution. | |

| <br>

| |

| | |

| For many systems in a real-world environment, a repair is only enough to get the system operational again. If the water pump fails on the car, the repair consists only of installing a new water pump. Similarly, if a seal leaks, the seal is replaced but no additional maintenance is done. This is the concept of minimal repair. For a system with many failure modes, the repair of a single failure mode does not greatly improve the system reliability from what it was just before the failure. Under minimal repair for a complex system with many failure modes, the system reliability after a repair is the same as it was just before the failure. In this case, the sequence of failures at the system level follows a non-homogeneous Poisson process (NHPP).

| |

| The system age when the system is first put into service is time <math>0</math>. Under the NHPP, the first failure is governed by a distribution <math>F(x)</math> with failure rate <math>r(x)</math>. Each succeeding failure is governed by the intensity function <math>u(t)</math> of the process. Let <math>t</math> be the age of the system and let <math>\Delta t</math> be a very small time increment. The probability that a system of age <math>t</math> fails between <math>t</math> and <math>t+\Delta t</math> is approximately <math>u(t)\Delta t</math>. Notice that this probability is not conditioned on not having any system failures up to time <math>t</math>, as is the case for a failure rate. The failure intensity <math>u(t)</math> for the NHPP has the same functional form as the failure rate governing the first system failure. Therefore, <math>u(t)=r(t)</math>, where <math>r(t)</math> is the failure rate for the distribution function of the first system failure. If the first system failure follows the Weibull distribution, the failure rate is:

| |

|

| |

|

| | For many systems in a real world environment, a repair may only be enough to get the system operational again. If the water pump fails on the car, the repair consists only of installing a new water pump. Similarly, if a seal leaks, the seal is replaced but no additional maintenance is done. This is the concept of ''minimal repair''. For a system with many failure modes, the repair of a single failure mode does not greatly improve the system reliability from what it was just before the failure. Under minimal repair for a complex system with many failure modes, the system reliability after a repair is the same as it was just before the failure. In this case, the sequence of failures at the system level follows a non-homogeneous Poisson process (NHPP). |

|

| |

|

| ::<math>r(x)=\lambda \beta {{x}^{\beta -1}}</math>

| | The system age when the system is first put into service is time 0. Under the NHPP, the first failure is governed by a distribution <math>F(x)\,\!</math> with failure rate <math>r(x)\,\!</math>. Each succeeding failure is governed by the intensity function <math>u(t)\,\!</math> of the process. Let <math>t\,\!</math> be the age of the system and let <math>\Delta t\,\!</math> be a very small time increment. The probability that a system of age <math>t\,\!</math> fails between <math>t\,\!</math> and <math>t+\Delta t\,\!</math> is approximately <math>u(t)\Delta t\,\!</math>. Notice that this probability is not conditioned on not having any system failures up to time <math>t\,\!</math>, as is the case for a failure rate. The failure intensity <math>u(t)\,\!</math> for the NHPP has the same functional form as the failure rate governing the first system failure. Therefore, <math>u(t)=r(t)\,\!</math>, where <math>r(t)\,\!</math> is the failure rate for the distribution function of the first system failure. If the first system failure follows the Weibull distribution, the failure rate is: |

|

| |

|

| | :<math>\begin{align} |

| | r(x)=\lambda \beta {{x}^{\beta -1}} |

| | \end{align}\,\!</math> |

|

| |

|

| Under minimal repair, the system intensity function is: | | Under minimal repair, the system intensity function is: |

|

| |

|

| | :<math>\begin{align} |

| | u(t)=\lambda \beta {{t}^{\beta -1}} |

| | \end{align}\,\!</math> |

|

| |

|

| ::<math>u(t)=\lambda \beta {{t}^{\beta -1}}</math>

| | This is the Power Law model. It can be viewed as an extension of the Weibull distribution. The Weibull distribution governs the first system failure, and the Power Law model governs each succeeding system failure. If the system has a constant failure intensity <math>u(t) = \lambda \,\!</math>, then the intervals between system failures follow an exponential distribution with failure rate <math>\lambda \,\!</math>. If the system operates for time <math>T\,\!</math>, then the expected value of the random number of failures <math>N(T)\,\!</math> over 0 to <math>T\,\!</math> is given by the Power Law mean value function. |

|

| |

|

| | :<math>\begin{align} |

| | E[N(T)]=\lambda {{T}^{\beta }} |

| | \end{align}\,\!</math> |

|

| |

|

| This is the Power Law model. It can be viewed as an extension of the Weibull distribution. The Weibull distribution governs the first system failure and the Power Law model governs each succeeding system failure. If the system has a constant failure intensity <math>u(t)=\lambda </math>, then the intervals between system failures follow an exponential distribution with failure rate <math>\lambda </math>. If the system operates for time <math>T</math>, then the expected value of the random number of failures <math>N(T)</math> over <math>0</math> to <math>T</math> is given by the Power Law mean value function.

| | Therefore, for a constant failure intensity (<math>\beta =1\,\!</math>), the probability that <math>N(T)=n\,\!</math> is given by the Poisson probability. |

|

| |

|

| | :<math>\frac{{{\left( \lambda T \right)}^{n}}{{e}^{-\lambda T}}}{n!};\text{ }n=0,1,2\ldots \,\!</math> |

|

| |

|

| ::<math>E[N(T)]=\lambda {{T}^{\beta }}</math>

| | This is referred to as a ''homogeneous Poisson process'' because there is no change in the intensity function. This is a special case of the Power Law model for <math>\beta =1\,\!</math>. The Power Law model is a generalization of the homogeneous Poisson process and allows for change in the intensity function as the repairable system ages. For the Power Law model, the failure intensity is increasing for <math>\beta >1\,\!</math> (wearout), decreasing for <math>\beta <1\,\!</math> (infant mortality) and constant for <math>\beta =1\,\!</math> (useful life). |
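The Power Law intensity, its mean value function and the homogeneous Poisson special case can be sketched as follows. This is an illustrative sketch only: the function names and the values of <math>\lambda \,\!</math> and <math>T\,\!</math> are assumptions chosen for the example, not values from the text.

```python
import math

def intensity(t, lam, beta):
    """Power Law failure intensity u(t) = lam * beta * t**(beta - 1)."""
    return lam * beta * t ** (beta - 1)

def expected_failures(T, lam, beta):
    """Power Law mean value function E[N(T)] = lam * T**beta."""
    return lam * T ** beta

def poisson_prob(n, mean):
    """P[N(T) = n] for a Poisson count with the given mean."""
    return mean ** n * math.exp(-mean) / math.factorial(n)

# Assumed illustrative values: lam = 0.003 failures per hour, T = 2000 hours.
lam, T = 0.003, 2000.0

# Homogeneous case (beta = 1): the mean number of failures is lam * T.
mean_hpp = expected_failures(T, lam, beta=1.0)
probs = [poisson_prob(n, mean_hpp) for n in range(60)]

# beta > 1 gives an increasing intensity (wearout); beta < 1 a decreasing one.
wearout = (intensity(100.0, lam, 1.5), intensity(200.0, lam, 1.5))
infant = (intensity(100.0, lam, 0.5), intensity(200.0, lam, 0.5))
```

With <math>\beta =1\,\!</math> the intensity does not depend on <math>t\,\!</math>, so the count over 0 to <math>T\,\!</math> is Poisson with mean <math>\lambda T\,\!</math>, and the probabilities in `probs` sum to essentially 1.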

|

| |

|

| | ==Power Law Model== |

| | The Power Law model is often used to analyze the reliability of complex repairable systems in the field. The system of interest may be the total system, such as a helicopter, or it may be subsystems, such as the helicopter transmission or rotor blades. When these systems are new and first put into operation, the start time is 0. As these systems are operated, they accumulate age (e.g., miles on automobiles, number of pages on copiers, flights of helicopters). When these systems fail, they are repaired and put back into service. |

|

| |

|

| Therefore, for a constant failure intensity (<math>\beta =1</math>), the probability that <math>N(T)=n</math> is given by the Poisson probability.

| | Some system types may be overhauled and some may not, depending on the maintenance policy. For example, an automobile may not be overhauled but helicopter transmissions may be overhauled after a period of time. In practice, an overhaul may not convert the system reliability back to where it was when the system was new. However, an overhaul will generally make the system more reliable. Appropriate data for the Power Law model is collected over cycles. If a system is not overhauled, then there is only one cycle and the zero time is when the system is first put into operation. If a system is overhauled, then the same serial number system may generate many cycles. Each cycle will start a new zero time, the beginning of the cycle. The age of the system is measured from the beginning of the cycle. For systems that are not overhauled, there is only one cycle and the reliability characteristics of a system as the system ages during its life are of interest. For systems that are overhauled, you are interested in the reliability characteristics of the system as it ages during its cycle. |

|

| |

|

| | For the Power Law model, a data set for a system will consist of a starting time <math>S\,\!</math>, an ending time <math>T\,\!</math> and the accumulated ages of the system during the cycle when it had failures. Assume that the data exists from the beginning of a cycle (i.e., the starting time is 0), although non-zero starting times are possible with the Power Law model. For example, suppose data has been collected for a system with 2,000 hours of operation during a cycle. The starting time is <math>S=0\,\!</math> and the ending time is <math>T=2000\,\!</math>. Over this period, failures occurred at system ages of 50.6, 840.7, 1060.5, 1186.5, 1613.6 and 1843.4 hours. These are the accumulated operating times within the cycle, and there were no failures between 1843.4 and 2000 hours. It may be of interest to determine how the systems perform as part of a fleet. For a fleet, it must be verified that the systems have the same configuration, same maintenance policy and same operational environment. In this case, a random sample must be gathered from the fleet. Each item in the sample will have a cycle starting time <math>S=0\,\!</math>, an ending time <math>T\,\!</math> for the data period and the accumulated operating times during this period when the system failed. |

|

| |

|

| ::<math>\frac{{{\left( \lambda T \right)}^{n}}{{e}^{-\lambda T}}}{n!};\text{ }n=0,1,2\ldots </math>

| | There are many ways to generate a random sample of <math>K\,\!</math> systems. One way is to generate <math>K\,\!</math> random serial numbers from the fleet. Then go to the records corresponding to the randomly selected systems. If the systems are not overhauled, then record when each system was first put into service. For example, the system may have been put into service when the odometer mileage equaled zero. Each system may have a different amount of total usage, so the ending times, <math>T\,\!</math>, may be different. If the systems are overhauled, then the records for the last completed cycle will be needed. The starting and ending times and the accumulated times to failure for the <math>K\,\!</math> systems constitute the random sample from the fleet. There is a useful and efficient method for generating a random sample for systems that are overhauled. If the overhauled systems have been in service for a considerable period of time, then each serial number system in the fleet would go through many overhaul cycles. In this case, the systems coming in for overhaul actually represent a random sample from the fleet. As <math>K\,\!</math> systems come in for overhaul, the data for the current completed cycles would be a random sample of size <math>K\,\!</math>. |

|

| |

|

| | In addition, the warranty period may be of particular interest. In this case, randomly choose <math>K\,\!</math> serial numbers for systems that have been in customer use for a period longer than the warranty period. Then check the warranty records. For each of the <math>K\,\!</math> systems that had warranty work, the ages corresponding to this service are the failure times. If a system did not have warranty work, then the number of failures recorded for that system is zero. The starting times are all equal to zero and the ending time for each of the <math>K\,\!</math> systems is equal to the warranty operating usage time (e.g., hours, copies, miles). |

|

| |

|

| This is referred to as a homogeneous Poisson process because there is no change in the intensity function. This is a special case of the Power Law model for <math>\beta =1</math>. The Power Law model is a generalization of the homogeneous Poisson process and allows for change in the intensity function as the repairable system ages. For the Power Law model, the failure intensity is increasing for <math>\beta >1</math> (wearout), decreasing for <math>\beta <1</math> (infant mortality) and constant for <math>\beta =1</math> (useful life).

| | In addition to the intensity function <math>u(t)\,\!</math> and the mean value function, which were given in the [[Repairable Systems Analysis#Process_Example|section above]], other relationships based on the Power Law are often of practical interest. For example, the probability that the system will survive to age <math>t+d\,\!</math> without failure is given by: |

| <br>

| |

|

| |

|

| ===Using the Power Law Model to Analyze Complex Repairable Systems===

| | :<math>R(t)={{e}^{-\left[ \lambda {{\left( t+d \right)}^{\beta }}-\lambda {{t}^{\beta }} \right]}}\,\!</math> |

| <br> | |

| The Power Law model is often used to analyze the reliability of complex repairable systems in the field. A system of interest may be the total system, such as a helicopter, or it may be subsystems, such as the helicopter transmission or rotor blades. When these systems are new and first put into operation, the start time is <math>0</math>. As these systems are operated, they accumulate age (e.g., miles on automobiles, number of pages on copiers, hours on helicopters). When these systems fail, they are repaired and put back into service.

| |

| <br>

| |

|

| |

|

| Some system types may be overhauled and some may not, depending on the maintenance policy. For example, an automobile may not be overhauled but helicopter transmissions may be overhauled after a period of time. In practice, an overhaul may not convert the system reliability back to where it was when the system was new.

| | This is the mission reliability for a system of age <math>t\,\!</math> and mission length <math>d\,\!</math>. |
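The mission reliability expression can be evaluated directly. The sketch below is illustrative: the function name and the numeric values are assumptions for the example, not part of the RGA software.

```python
import math

def mission_reliability(t, d, lam, beta):
    """Probability that a system of age t completes a mission of length d
    without failure: exp(-[lam * (t + d)**beta - lam * t**beta])."""
    return math.exp(-(lam * (t + d) ** beta - lam * t ** beta))

# Assumed illustrative values: mission length of 100 hours.
lam, d = 0.003, 100.0

# With beta = 1 the result does not depend on the system age t.
r_const_young = mission_reliability(0.0, d, lam, 1.0)
r_const_old = mission_reliability(500.0, d, lam, 1.0)

# With beta > 1 (wearout) an older system is less likely to complete the mission.
r_wear_young = mission_reliability(0.0, d, lam, 1.5)
r_wear_old = mission_reliability(500.0, d, lam, 1.5)
```

For <math>\beta =1\,\!</math> the expression reduces to <math>{{e}^{-\lambda d}}\,\!</math>, which is the familiar exponential reliability for a mission of length <math>d\,\!</math>.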

| However, an overhaul will generally make the system more reliable. Appropriate data for the Power Law model is collected over cycles. If a system is not overhauled, then there is only one cycle and the zero time is when the system is first put into operation. If a system is overhauled, then the same serial number system may generate many cycles. Each cycle will start a new zero time, the beginning of the cycle. The age of the system is measured from the beginning of the cycle. For systems that are not overhauled, there is only one cycle and the reliability characteristics of a system as the system ages during its life are of interest. For systems that are overhauled, you are interested in the reliability characteristics of the system as it ages during its cycle.

| |

| <br> | |

|

| |

|

| For the Power Law model, a data set for a system will consist of a starting time <math>S</math> , an ending time <math>T</math> and the accumulated ages of the system during the cycle when it had failures. Assume the data exists from the beginning of a cycle (i.e. the starting time is 0), although non-zero starting times are possible with the Power Law model. For example, suppose data has been collected for a system with 2000 hours of operation during a cycle. The starting time is <math>S=0</math> and the ending time is <math>T=2000</math> . Over this period, failures occurred at system ages of 50.6, 840.7, 1060.5, 1186.5, 1613.6 and 1843.4 hours. These are the accumulated operating times within the cycle and there were no failures between 1843.4 and 2000 hours. It may be of interest to determine how the systems perform as part of a fleet. For a fleet, it must be verified that the systems have the same configuration, same maintenance policy and same operational environment. In this case, a random sample must be gathered from the fleet. Each item in the sample will have a cycle starting time <math>S=0</math> , an ending time <math>T</math> for the data period and the accumulated operating times during this period when the system failed.

| | ===Parameter Estimation=== |

| <br> | | Suppose that the number of systems under study is <math>K\,\!</math> and the <math>{{q}^{th}}\,\!</math> system is observed continuously from time <math>{{S}_{q}}\,\!</math> to time <math>{{T}_{q}}\,\!</math>, <math>q=1,2,\ldots ,K\,\!</math>. During the period <math>[{{S}_{q}},{{T}_{q}}]\,\!</math>, let <math>{{N}_{q}}\,\!</math> be the number of failures experienced by the <math>{{q}^{th}}\,\!</math> system and let <math>{{X}_{i,q}}\,\!</math> be the age of this system at the <math>{{i}^{th}}\,\!</math> occurrence of failure, <math>i=1,2,\ldots ,{{N}_{q}}\,\!</math>. It is also possible that the times <math>{{S}_{q}}\,\!</math> and <math>{{T}_{q}}\,\!</math> may be the observed failure times for the <math>{{q}^{th}}\,\!</math> system. If <math>{{X}_{{{N}_{q}},q}}={{T}_{q}}\,\!</math>, then the data on the <math>{{q}^{th}}\,\!</math> system is said to be failure terminated, and <math>{{T}_{q}}\,\!</math> is a random variable with <math>{{N}_{q}}\,\!</math> fixed. If <math>{{X}_{{{N}_{q}},q}}<{{T}_{q}}\,\!</math>, then the data on the <math>{{q}^{th}}\,\!</math> system is said to be time terminated with <math>{{N}_{q}}\,\!</math> a random variable. The maximum likelihood estimates of <math>\lambda \,\!</math> and <math>\beta \,\!</math> are values satisfying the equations shown next. |

|

| |

|

| There are many ways to generate a random sample of <math>K</math> systems. One way is to generate <math>K</math> random serial numbers from the fleet. Then go to the records corresponding to the randomly selected systems. If the systems are not overhauled, then record when each system was first put into service. For example, the system may have been put into service when the odometer mileage equaled zero. Each system may have a different amount of total usage, so the ending times, <math>T</math> , may be different. If the systems are overhauled, then the records for the last completed cycle will be needed. The starting and ending times and the accumulated times to failure for the <math>K</math> systems constitute the random sample from the fleet. There is a useful and efficient method for generating a random sample for systems that are overhauled. If the overhauled systems have been in service for a considerable period of time, then each serial number system in the fleet would go through many overhaul cycles. In this case, the systems coming in for overhaul actually represent a random sample from the fleet. As <math>K</math> systems come in for overhaul, the data for the current completed cycles would be a random sample of size <math>K</math> .

| | :<math>\begin{align} |

| <br>

| | \widehat{\lambda }= & \frac{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,{{N}_{q}}}{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,\left( T_{q}^{\widehat{\beta }}-S_{q}^{\widehat{\beta }} \right)} \\ |

| | \widehat{\beta }= & \frac{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,{{N}_{q}}}{\widehat{\lambda }\underset{q=1}{\overset{K}{\mathop{\sum }}}\,\left[ T_{q}^{\widehat{\beta }}\ln ({{T}_{q}})-S_{q}^{\widehat{\beta }}\ln ({{S}_{q}}) \right]-\underset{q=1}{\overset{K}{\mathop{\sum }}}\,\underset{i=1}{\overset{{{N}_{q}}}{\mathop{\sum }}}\,\ln ({{X}_{i,q}})} |

| | \end{align}\,\!</math> |

|

| |

|

| In addition, the warranty period may be of particular interest. In this case, randomly choose <math>K</math> serial numbers for systems that have been in customer use for a period longer than the warranty period. Then check the warranty records. For each of the <math>K</math> systems that had warranty work, the ages corresponding to this service are the failure times. If a system did not have warranty work, then the number of failures recorded for that system is zero. The starting times are all equal to zero and the ending time for each of the <math>K</math> systems is equal to the warranty operating usage time, e.g. hours, copies, miles.

| | where <math>0\ln 0\,\!</math> is defined to be 0. In general, these equations cannot be solved explicitly for <math>\widehat{\lambda }\,\!</math> and <math>\widehat{\beta },\,\!</math> but must be solved by iterative procedures. Once <math>\widehat{\lambda }\,\!</math> and <math>\widehat{\beta }\,\!</math> have been estimated, the maximum likelihood estimate of the intensity function is given by: |

| In addition to the intensity function <math>u(t)</math> given by Eqn. (intensity) and the mean value function given by Eqn. (expected failures), other relationships based on the Power Law are often of practical interest. For example, the probability that the system will survive to age <math>t+d</math> without failure is given by:

| |

|

| |

|

| | :<math>\widehat{u}(t)=\widehat{\lambda }\widehat{\beta }{{t}^{\widehat{\beta }-1}}\,\!</math> |
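The iterative solution mentioned above can be sketched in Python. This is a minimal illustration, not the procedure used by the RGA software: the function name `power_law_mle`, the bisection bracket and the tolerance are all assumptions. It substitutes the expression for <math>\widehat{\lambda }</math> into the equation for <math>\widehat{\beta }</math> and finds the root by bisection, using the <math>0\ln 0=0</math> convention. The usage lines take the single-system data quoted earlier in this chapter (failures at 50.6 through 1843.4 hours, observed over 0 to 2000 hours).

```python
import math


def _t_pow_ln(t, beta):
    """Return t**beta * ln(t), using the convention 0*ln(0) = 0."""
    return 0.0 if t == 0 else t ** beta * math.log(t)


def power_law_mle(starts, ends, failures, lo=1e-3, hi=10.0, tol=1e-10):
    """Solve the two Power Law likelihood equations by bisection on beta.

    starts, ends: S_q and T_q for each system.
    failures: one list of failure ages X_iq per system.
    lo, hi, tol: assumed search bracket and tolerance (illustrative only).
    """
    n = sum(len(xs) for xs in failures)
    sum_ln_x = sum(math.log(x) for xs in failures for x in xs)

    def lam_of(beta):
        # First likelihood equation: lambda as a function of beta.
        return n / sum(t ** beta - s ** beta for s, t in zip(starts, ends))

    def score(beta):
        # Second likelihood equation rearranged so that its root is beta-hat.
        weighted = sum(_t_pow_ln(t, beta) - _t_pow_ln(s, beta)
                       for s, t in zip(starts, ends))
        return n / beta - lam_of(beta) * weighted + sum_ln_x

    if score(lo) * score(hi) > 0:
        raise ValueError("bracket does not contain a root; widen lo/hi")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if score(lo) * score(mid) <= 0:
            hi = mid
        else:
            lo = mid
    beta = 0.5 * (lo + hi)
    return lam_of(beta), beta


# Single system observed from S = 0 to T = 2000 hours.
ages = [50.6, 840.7, 1060.5, 1186.5, 1613.6, 1843.4]
lam_hat, beta_hat = power_law_mle([0.0], [2000.0], [ages])
```

Bisection is used here only because it needs no derivatives; any one-dimensional root finder would do, provided the bracket contains the root.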

|

| |

|

| ::<math>R(t)={{e}^{-\left[ \lambda {{\left( t+d \right)}^{\beta }}-\lambda {{t}^{\beta }} \right]}}</math>

| | If <math>{{S}_{1}}={{S}_{2}}=\ldots ={{S}_{K}}=0\,\!</math> and <math>{{T}_{1}}={{T}_{2}}=\ldots ={{T}_{K}}=T\,\!</math>, then the maximum likelihood estimates <math>\widehat{\lambda }\,\!</math> and <math>\widehat{\beta }\,\!</math> are in closed form. |

|

| |

|

| | :<math>\begin{align} |

| | \widehat{\lambda }= & \frac{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,{{N}_{q}}}{K{{T}^{\widehat{\beta }}}} \\ |

| | \widehat{\beta }= & \frac{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,{{N}_{q}}}{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,\underset{i=1}{\overset{{{N}_{q}}}{\mathop{\sum }}}\,\ln (\tfrac{T}{{{X}_{iq}}})} |

| | \end{align}\,\!</math> |

|

| |

|

| This is the mission reliability for a system of age <math>t</math> and mission length <math>d</math> .
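As a small illustration of the mission reliability formula, the sketch below evaluates it directly. The function name `mission_reliability` is hypothetical, and the parameter values are placeholders chosen to match the estimates reported in the worked example of this chapter.

```python
import math


def mission_reliability(t, d, lam, beta):
    """Probability that a system of age t completes a mission of length d
    without failure under the Power Law model."""
    expected_failures = lam * (t + d) ** beta - lam * t ** beta
    return math.exp(-expected_failures)


# Illustrative values only: lambda and beta as estimated in the example data.
r_short = mission_reliability(t=1000.0, d=10.0, lam=0.36224, beta=0.45300)
r_long = mission_reliability(t=1000.0, d=100.0, lam=0.36224, beta=0.45300)
```

For a fixed age, a longer mission gives a lower reliability because the expected number of failures over the mission grows with <math>d</math>.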

| | The following example illustrates these estimation procedures. |

| <br>

| |

|

| |

|

| ==Parameter Estimation== | | ===Power Law Model Example=== <!-- THIS SECTION HEADER IS LINKED FROM ANOTHER SECTION IN THIS DOCUMENT. IF YOU RENAME THE SECTION, YOU MUST UPDATE THE LINK(S). --> |

| <br>

| | {{:Power Law Model Parameter Estimation Example}} |

| Suppose that the number of systems under study is <math>K</math> and the <math>{{q}^{th}}</math> system is observed continuously from time <math>{{S}_{q}}</math> to time <math>{{T}_{q}}</math> , <math>q=1,2,\ldots ,K</math> . During the period <math>[{{S}_{q}},{{T}_{q}}]</math> , let <math>{{N}_{q}}</math> be the number of failures experienced by the <math>{{q}^{th}}</math> system and let <math>{{X}_{i,q}}</math> be the age of this system at the <math>{{i}^{th}}</math> occurrence of failure, <math>i=1,2,\ldots ,{{N}_{q}}</math> . It is also possible that the times <math>{{S}_{q}}</math> and <math>{{T}_{q}}</math> may be observed failure times for the <math>{{q}^{th}}</math> system. If <math>{{X}_{{{N}_{q}},q}}={{T}_{q}}</math> then the data on the <math>{{q}^{th}}</math> system is said to be failure terminated and <math>{{T}_{q}}</math> is a random variable with <math>{{N}_{q}}</math> fixed. If <math>{{X}_{{{N}_{q}},q}}<{{T}_{q}}</math> then the data on the <math>{{q}^{th}}</math> system is said to be time terminated with <math>{{N}_{q}}</math> a random variable. The maximum likelihood estimates of <math>\lambda </math> and <math>\beta </math> are values satisfying the Eqns. (lambdaPowerLaw) and (BetaPowerLaw).

| |

|

| |

|

| | ==Goodness-of-Fit Tests for Repairable System Analysis==<!-- THIS SECTION HEADER IS LINKED TO: Hypothesis Tests. IF YOU RENAME THE SECTION, YOU MUST UPDATE THE LINK. --> |

| | It is generally desirable to test the compatibility of a model and data by a statistical goodness-of-fit test. A parametric Cramér-von Mises goodness-of-fit test is used for the multiple system and repairable system Power Law model, as proposed by Crow in [[RGA_References|[17]]]. This goodness-of-fit test is appropriate whenever the start time for each system is 0 and the failure data is complete over the continuous interval <math>[0,{{T}_{q}}]\,\!</math> with no gaps in the data. The Chi-Squared test is a goodness-of-fit test that can be applied under more general circumstances. In addition, the Common Beta Hypothesis test also can be used to compare the intensity functions of the individual systems by comparing the <math>{{\beta }_{q}}\,\!</math> values of each system. Lastly, the Laplace Trend test checks for trends within the data. Due to their general application, the Common Beta Hypothesis test and the Laplace Trend test are both presented in [[Hypothesis_Tests|Appendix B]]. The Cramér-von Mises and Chi-Squared goodness-of-fit tests are illustrated next. |

|

| |

|

| ::<math>\begin{align}

| | ===Cramér-von Mises Test=== |

| & \widehat{\lambda }= & \frac{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,{{N}_{q}}}{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,\left( T_{q}^{\widehat{\beta }}-S_{q}^{\widehat{\beta }} \right)} \\

| | To illustrate the application of the Cramér-von Mises statistic for multiple systems data, suppose that <math>K\,\!</math> like systems are under study and you wish to test the hypothesis <math>{{H}_{1}}\,\!</math> that their failure times follow a non-homogeneous Poisson process. Suppose information is available for the <math>{{q}^{th}}\,\!</math> system over the interval <math>[0,{{T}_{q}}]\,\!</math>, with successive failure times <math>{{X}_{1q}}<{{X}_{2q}}<\ldots <{{X}_{{{N}_{q}}q}}\,\!</math>, <math>(q=1,2,\ldots ,\,K)\,\!</math>. The Cramér-von Mises test can be performed with the following steps: |

| & \widehat{\beta }= & \frac{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,{{N}_{q}}}{\widehat{\lambda }\underset{q=1}{\overset{K}{\mathop{\sum }}}\,\left[ T_{q}^{\widehat{\beta }}\ln ({{T}_{q}})-S_{q}^{\widehat{\beta }}\ln ({{S}_{q}}) \right]-\underset{q=1}{\overset{K}{\mathop{\sum }}}\,\underset{i=1}{\overset{{{N}_{q}}}{\mathop{\sum }}}\,\ln ({{X}_{i,q}})}

| |

| \end{align}</math> | |

|

| |

|

| | '''Step 1:''' If <math>{{X}_{{{N}_{q}}q}}={{T}_{q}}\,\!</math> (failure terminated), let <math>{{M}_{q}}={{N}_{q}}-1\,\!</math>, and if <math>{{X}_{{{N}_{q}}q}}<{{T}_{q}}\,\!</math> (time terminated), let <math>{{M}_{q}}={{N}_{q}}\,\!</math>. Then: |

|

| |

|

| where <math>0\ln 0</math> is defined to be 0. In general, these equations cannot be solved explicitly for <math>\widehat{\lambda }</math> and <math>\widehat{\beta },</math> but must be solved by iterative procedures. Once <math>\widehat{\lambda }</math> and <math>\widehat{\beta }</math> have been estimated, the maximum likelihood estimate of the intensity function is given by:

| | :<math>M=\underset{q=1}{\overset{K}{\mathop \sum }}\,{{M}_{q}}\,\!</math> |

|

| |

|

| ::<math>\widehat{u}(t)=\widehat{\lambda }\widehat{\beta }{{t}^{\widehat{\beta }-1}}</math> | | '''Step 2:''' For each system, divide each successive failure time by the corresponding end time <math>{{T}_{q}}\,\!</math>, <math>\,i=1,2,...,{{M}_{q}}.\,\!</math> Calculate the <math>M\,\!</math> values: |

|

| |

|

| If <math>{{S}_{1}}={{S}_{2}}=\ldots ={{S}_{K}}=0</math> and <math>{{T}_{1}}={{T}_{2}}=\ldots ={{T}_{K}}=T</math>, then the maximum likelihood estimates <math>\widehat{\lambda }</math> and <math>\widehat{\beta }</math> are in closed form.

| | :<math>{{Y}_{iq}}=\frac{{{X}_{iq}}}{{{T}_{q}}},i=1,2,\ldots ,{{M}_{q}},\text{ }q=1,2,\ldots ,K\,\!</math> |

|

| |

|

| ::<math>\begin{align} | | '''Step 3:''' Next calculate <math>\bar{\beta }\,\!</math>, the unbiased estimate of <math>\beta \,\!</math>, from: |

| & \widehat{\lambda }= & \frac{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,{{N}_{q}}}{K{{T}^{\widehat{\beta }}}} \\

| |

| & \widehat{\beta }= & \frac{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,{{N}_{q}}}{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,\underset{i=1}{\overset{{{N}_{q}}}{\mathop{\sum }}}\,\ln (\tfrac{T}{{{X}_{iq}}})}

| |

| \end{align}</math>

| |

|

| |

|

| | :<math>\bar{\beta }=\frac{M-1}{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,\underset{i=1}{\overset{{{M}_{q}}}{\mathop{\sum }}}\,\ln \left( \tfrac{{{T}_{q}}}{{{X}_{iq}}} \right)}\,\!</math> |

|

| |

|

| The following examples illustrate these estimation procedures.

| | '''Step 4:''' Treat the <math>{{Y}_{iq}}\,\!</math> values as one group, and order them from smallest to largest. Name these ordered values <math>{{z}_{1}},\,{{z}_{2}},\ldots ,{{z}_{M}}\,\!</math>, such that <math>{{z}_{1}}<\ \ {{z}_{2}}<\ldots <{{z}_{M}}\,\!</math>. |

| <br>

| |

| <br>

| |

| =====Example 1=====

| |

| <br>

| |

| For the data in Table 13.1, the starting time for each system is equal to <math>0</math> and the ending time for each system is 2000 hours. Calculate the maximum likelihood estimates <math>\widehat{\lambda }</math> and <math>\widehat{\beta }</math> .

| |

|

| |

|

| <br>

| | '''Step 5:''' Calculate the parametric Cramér-von Mises statistic. |

| {|system= align="center" border="1"

| |

| |-

| |

| |colspan="3" style="text-align:center"|Table 13.1 - Repairable system failure data

| |

| |-

| |

| !System 1 ( <math>{{X}_{i1}}</math> )

| |

| !System 2 ( <math>{{X}_{i2}}</math> )

| |

| !System 3 ( <math>{{X}_{i3}}</math> )

| |

| |-

| |

| |1.2|| 1.4|| 0.3

| |

| |-

| |

| |55.6|| 35.0|| 32.6

| |

| |-

| |

| |72.7|| 46.8|| 33.4

| |

| |-

| |

| |111.9|| 65.9|| 241.7

| |

| |-

| |

| |121.9|| 181.1|| 396.2

| |

| |-

| |

| |303.6|| 712.6|| 444.4

| |

| |-

| |

| |326.9|| 1005.7|| 480.8

| |

| |-

| |

| |1568.4|| 1029.9 ||588.9

| |

| |-

| |

| |1913.5|| 1675.7|| 1043.9

| |

| |-

| |

| | ||1787.5|| 1136.1

| |

| |-

| |

| | ||1867.0|| 1288.1

| |

| |-

| |

| | || ||1408.1

| |

| |-

| |

| | || ||1439.4

| |

| |-

| |

| | || ||1604.8

| |

| |-

| |

| |<math>{{N}_{1}}=9</math> || <math>{{N}_{2}}=11</math> ||<math>{{N}_{3}}=14</math>

| |

| |}

| |

|

| |

|

| <br> | | :<math>C_{M}^{2}=\frac{1}{12M}+\underset{j=1}{\overset{M}{\mathop \sum }}\,{{\left( z_{j}^{\overline{\beta }}-\frac{2j-1}{2M} \right)}^{2}}\,\!</math> |

| '''Solution'''

| |

| <br>

| |

| Since the starting time for each system is equal to zero and each system has an equivalent ending time, the general Eqns. (lambdaPowerLaw) and (BetaPowerLaw) reduce to the closed form Eqns. (sample1) and (sample2). The maximum likelihood estimates of <math>\hat{\beta }</math> and <math>\hat{\lambda }</math> are then calculated as follows:

| |

|

| |

|

| ::<math>\begin{align}

| | Critical values for the Cramér-von Mises test are presented in the [[Crow-AMSAA (NHPP)#Critical_Values|Crow-AMSAA (NHPP)]] page. |

| & \widehat{\beta }= & \frac{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,{{N}_{q}}}{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,\underset{i=1}{\overset{{{N}_{q}}}{\mathop{\sum }}}\,\ln (\tfrac{T}{{{X}_{iq}}})} \\

| |

| & = & 0.45300

| |

| \end{align}</math>

| |

|

| |

|

| | '''Step 6:''' If the calculated <math>C_{M}^{2}\,\!</math> is less than the critical value, then accept the hypothesis that the failure times for the <math>K\,\!</math> systems follow the non-homogeneous Poisson process with intensity function <math>u(t)=\lambda \beta {{t}^{\beta -1}}\,\!</math>. |

|

| |

|

| ::<math>\begin{align}

| | ===Chi-Squared Test=== |

| & \widehat{\lambda }= & \frac{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,{{N}_{q}}}{K{{T}^{\widehat{\beta }}}} \\

| | The parametric Cramér-von Mises test described above requires that the starting time, <math>{{S}_{q}}\,\!</math>, be equal to 0 for each of the <math>K\,\!</math> systems. Although not as powerful as the Cramér-von Mises test, the chi-squared test can be applied regardless of the starting times. The expected number of failures for a system over its age <math>(a,b)\,\!</math> for the chi-squared test is estimated by <math>\widehat{\lambda }{{b}^{\widehat{\beta }}}-\widehat{\lambda }{{a}^{\widehat{\beta }}}=\widehat{\theta }\,\!</math>, where <math>\widehat{\lambda }\,\!</math> and <math>\widehat{\beta }\,\!</math> are the maximum likelihood estimates. |

| & = & 0.36224

| |

| \end{align}</math> | |
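The closed-form calculation for this example can be checked with a short Python sketch. The variable names are illustrative; the data are the failure ages from Table 13.1, with a common ending time of 2000 hours and a starting time of zero for every system.

```python
import math

T = 2000.0  # common ending time for all three systems, in hours
systems = [
    [1.2, 55.6, 72.7, 111.9, 121.9, 303.6, 326.9, 1568.4, 1913.5],
    [1.4, 35.0, 46.8, 65.9, 181.1, 712.6, 1005.7, 1029.9, 1675.7,
     1787.5, 1867.0],
    [0.3, 32.6, 33.4, 241.7, 396.2, 444.4, 480.8, 588.9, 1043.9,
     1136.1, 1288.1, 1408.1, 1439.4, 1604.8],
]

K = len(systems)
N = sum(len(xs) for xs in systems)  # total number of failures (9 + 11 + 14)

# Closed-form estimates, valid because S_q = 0 and T_q = T for every system.
beta_hat = N / sum(math.log(T / x) for xs in systems for x in xs)
lam_hat = N / (K * T ** beta_hat)

print(f"beta_hat = {beta_hat:.5f}, lambda_hat = {lam_hat:.5f}")
```

The printed values should agree with the estimates given in the solution to within rounding.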

|

| |

|

| | The computed <math>{{\chi }^{2}}\,\!</math> statistic is: |

|

| |

|

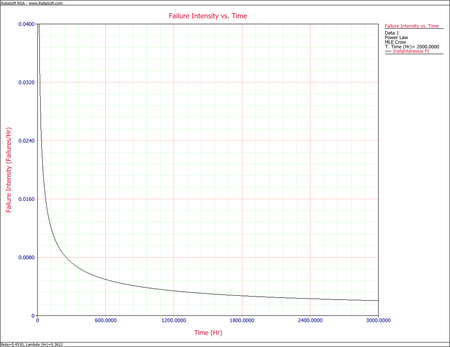

| [[Image:rga13.2.png|thumb|center|300px|Instantaneous Failure Intensity vs. Time plot.]]

| | :<math>{{\chi }^{2}}=\underset{j=1}{\overset{d}{\mathop \sum }}\,\frac{{{\left[ N(j)-\widehat{\theta }(j) \right]}^{2}}}{\widehat{\theta }(j)}\,\!</math> |

|

| |

|

| <br> | | where <math>d\,\!</math> is the total number of intervals. The random variable <math>{{\chi }^{2}}\,\!</math> is approximately chi-square distributed with <math>df=d-2\,\!</math> degrees of freedom. There must be at least three intervals, and the lengths of the intervals do not have to be equal. It is common practice to require that the expected number of failures for each interval, <math>\widehat{\theta }(j)\,\!</math>, be at least five. If <math>\chi _{0}^{2}>\chi _{\alpha /2,d-2}^{2}\,\!</math> or if <math>\chi _{0}^{2}<\chi _{1-(\alpha /2),d-2}^{2}\,\!</math>, reject the null hypothesis. |

| The system failure intensity function is then estimated by: | |

|

| |

|

| ::<math>\widehat{u}(t)=\widehat{\lambda }\widehat{\beta }{{t}^{\widehat{\beta }-1}},\text{ }t>0</math>

| | ===Cramér-von Mises Example=== |

| | For the data from [[Repairable Systems Analysis#Power_Law_Model_Example|power law model example]] given above, use the Cramér-von Mises test to examine the compatibility of the model at a significance level <math>\alpha =0.10\,\!</math> |

|

| |

|

| Figure wpp intensity is a plot of <math>\widehat{u}(t)</math> over the period (0, 3000). Clearly, the estimated failure intensity function is most representative over the range of the data and any extrapolation should be viewed with the usual caution.

| | '''Solution''' |

| | |

| ===Goodness-of-Fit Tests for Repairable System Analysis===

| |

| <br>

| |

| It is generally desirable to test the compatibility of a model and data by a statistical goodness-of-fit test. A parametric Cramér-von Mises goodness-of-fit test is used for the multiple system and repairable system Power Law model, as proposed by Crow in [17]. This goodness-of-fit test is appropriate whenever the start time for each system is 0 and the failure data is complete over the continuous interval <math>[0,{{T}_{q}}]</math> with no gaps in the data. The Chi-Squared test is a goodness-of-fit test that can be applied under more general circumstances. In addition, the Common Beta Hypothesis test also can be used to compare the intensity functions of the individual systems by comparing the <math>{{\beta }_{q}}</math> values of each system. Lastly, the Laplace Trend test checks for trends within the data. Due to their general application, the Common Beta Hypothesis test and the Laplace Trend test are both presented in Appendix B. The Cramér-von Mises and Chi-Squared goodness-of-fit tests are illustrated next.

| |

| <br>

| |

| <br>

| |

| ====Cramér-von Mises Test====

| |

| <br>

| |

| To illustrate the application of the Cramér-von Mises statistic for multiple system data, suppose that <math>K</math> like systems are under study and you wish to test the hypothesis <math>{{H}_{1}}</math> that their failure times follow a non-homogeneous Poisson process. Suppose information is available for the <math>{{q}^{th}}</math> system over the interval <math>[0,{{T}_{q}}]</math>, with successive failure times <math>{{X}_{1q}}<{{X}_{2q}}<\ldots <{{X}_{{{N}_{q}}q}}</math>, <math>(q=1,2,\ldots ,\,K)</math>. The Cramér-von Mises test can be performed with the following steps:

| |

| <br>

| |

| <br>

| |

| Step 1: If <math>{{X}_{{{N}_{q}}q}}={{T}_{q}}</math> (failure terminated), let <math>{{M}_{q}}={{N}_{q}}-1</math>, and if <math>{{X}_{{{N}_{q}}q}}<{{T}_{q}}</math> (time terminated), let <math>{{M}_{q}}={{N}_{q}}</math>. Then:

| |

| | |

| ::<math>M=\underset{q=1}{\overset{K}{\mathop \sum }}\,{{M}_{q}}</math>

| |

| | |

| Step 2: For each system divide each successive failure time by the corresponding end time <math>{{T}_{q}}</math> , <math>\,i=1,2,...,{{M}_{q}}.</math> Calculate the <math>M</math> values:

| |

| | |

| ::<math>{{Y}_{iq}}=\frac{{{X}_{iq}}}{{{T}_{q}}},i=1,2,\ldots ,{{M}_{q}},\text{ }q=1,2,\ldots ,K</math>

| |

|

| |

|

| | '''Step 1''': |

|

| |

|

| Step 3: Next calculate <math>\overline{\beta }</math> , the unbiased estimate of <math>\beta </math> , from:

| | :<math>\begin{align} |

| | {{X}_{9,1}}= & 1913.5<2000,\,\ {{M}_{1}}=9 \\ |

| | {{X}_{11,2}}= & 1867<2000,\,\ {{M}_{2}}=11 \\ |

| | {{X}_{14,3}}= & 1604.8<2000,\,\ {{M}_{3}}=14 \\ |

| | M= & \underset{q=1}{\overset{3}{\mathop \sum }}\,{{M}_{q}}=34 |

| | \end{align}\,\!</math> |

|

| |

|

| ::<math>\overline{\beta }=\frac{M-1}{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,\underset{i=1}{\overset{{{M}_{q}}}{\mathop{\sum }}}\,\ln \left( \tfrac{{{T}_{q}}}{{{X}_{iq}}} \right)}</math> | | '''Step 2''': Calculate <math>{{Y}_{iq}},\,\!</math> treat the <math>{{Y}_{iq}}\,\!</math> values as one group and order them from smallest to largest. Name these ordered values <math>{{z}_{1}},\,{{z}_{2}},\ldots ,{{z}_{M}}\,\!</math>. |

|

| |

|

| | '''Step 3''': Calculate: |

|

| |

|

| Step 4: Treat the <math>{{Y}_{iq}}</math> values as one group and order them from smallest to largest. Name these ordered values <math>{{z}_{1}},\,{{z}_{2}},\ldots ,{{z}_{M}}</math> , such that <math>{{z}_{1}}<\ \ {{z}_{2}}<\ldots <{{z}_{M}}</math> .

| | :<math>\bar{\beta }=\tfrac{M-1}{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,\underset{i=1}{\overset{{{M}_{q}}}{\mathop{\sum }}}\,\ln \left( \tfrac{{{T}_{q}}}{{{X}_{iq}}} \right)}=0.4397\,\!</math> |

| <br>

| |

| <br>

| |

| Step 5: Calculate the parametric Cramér-von Mises statistic.

| |

|

| |

|

| ::<math>C_{M}^{2}=\frac{1}{12M}+\underset{j=1}{\overset{M}{\mathop \sum }}\,{{\left( z_{j}^{\overline{\beta }}-\frac{2j-1}{2M} \right)}^{2}}</math> | | '''Step 4''': Calculate: |

|

| |

|

| | :<math>C_{M}^{2}=\tfrac{1}{12M}+\underset{j=1}{\overset{M}{\mathop{\sum }}}\,{{\left( z_{j}^{\overline{\beta }}-\tfrac{2j-1}{2M} \right)}^{2}}=0.0636\,\!</math> |

|

| |

|

| Critical values for the Cramér-von Mises test are presented in Table B.2 of Appendix B.

| | '''Step 5''': From the [[Crow-AMSAA (NHPP)#Critical_Values|table of critical values for the Cramér-von Mises test]], find the critical value (CV) for <math>M=34\,\!</math> at a significance level <math>\alpha =0.10\,\!</math>. <math>CV=0.172\,\!</math>. |

| <br>

| |

| <br>

| |

| Step 6: If the calculated <math>C_{M}^{2}</math> is less than the critical value then accept the hypothesis that the failure times for the <math>K</math> systems follow the non-homogeneous Poisson process with intensity function <math>u(t)=\lambda \beta {{t}^{\beta -1}}</math> .

| |

| <br>

| |

| <br>

| |

| =====Example 2=====

| |

| <br>

| |

| For the data from Example 1, use the Cramér-von Mises test to examine the compatibility of the model at a significance level <math>\alpha =0.10</math>

| |

| <br> | |

| <br> | |

| ''Solution''

| |

| <br>

| |

| Step 1:

| |

|

| |

|

| ::<math>\begin{align} | | '''Step 6''': Since <math>C_{M}^{2}<CV\,\!</math>, accept the hypothesis that the failure times for the <math>K=3\,\!</math> repairable systems follow the non-homogeneous Poisson process with intensity function <math>u(t)=\lambda \beta {{t}^{\beta -1}}\,\!</math>. |

| & {{X}_{9,1}}= & 1913.5<2000,\,\ {{M}_{1}}=9 \\

| |

| & {{X}_{11,2}}= & 1867<2000,\,\ {{M}_{2}}=11 \\

| |

| & {{X}_{14,3}}= & 1604.8<2000,\,\ {{M}_{3}}=14 \\

| |

| & M= & \underset{q=1}{\overset{3}{\mathop \sum }}\,{{M}_{q}}=34

| |

| \end{align}</math> | |

|

| |

|

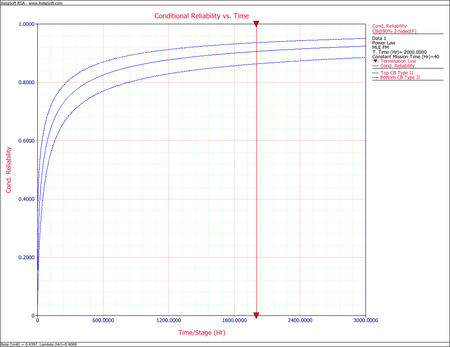

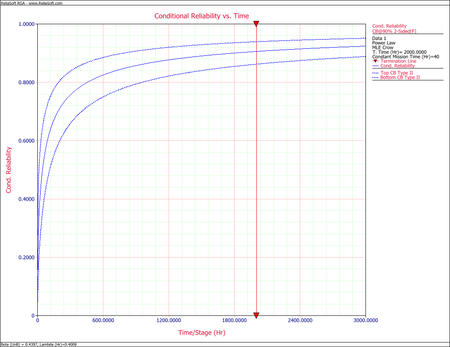

| | ==Confidence Bounds for Repairable Systems Analysis== |

| | The RGA software provides two methods to estimate the confidence bounds for repairable systems analysis. The Fisher matrix approach is based on the Fisher information matrix and is commonly employed in the reliability field. The Crow bounds were developed by Dr. Larry Crow. See [[Confidence Bounds for Repairable Systems Analysis]] for details on how these confidence bounds are calculated. |

|

| |

|

| Step 2: Calculate <math>{{Y}_{iq}},</math> treat the <math>{{Y}_{iq}}</math> values as one group and order them from smallest to largest. Name these ordered values <math>{{z}_{1}},\,{{z}_{2}},\ldots ,{{z}_{M}}</math> .

| | ===Confidence Bounds Example=== |

| <br>

| | {{:Power_Law_Model_Confidence_Bounds_Example}} |

| <br>

| |

| Step 3: Calculate <math>\overline{\beta }=\tfrac{M-1}{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,\underset{i=1}{\overset{{{M}_{q}}}{\mathop{\sum }}}\,\ln \left( \tfrac{{{T}_{q}}}{{{X}_{iq}}} \right)}=0.4397</math>

| |

| <br>

| |

| <br>

| |

| Step 4: Calculate <math>C_{M}^{2}=\tfrac{1}{12M}+\underset{j=1}{\overset{M}{\mathop{\sum }}}\,{{\left( z_{j}^{\overline{\beta }}-\tfrac{2j-1}{2M} \right)}^{2}}=0.0636</math>

| |

| <br>

| |

| <br>

| |

| Step 5: Find the critical value (CV) from Table B.2 for <math>M=34</math> at a significance level <math>\alpha =0.10</math> . <math>CV=0.172</math> .

| |

| <br>

| |

| <br>

| |

| Step 6: Since <math>C_{M}^{2}<CV</math> , accept the hypothesis that the failure times for the <math>K=3</math> repairable systems follow the non-homogeneous Poisson process with intensity function <math>u(t)=\lambda \beta {{t}^{\beta -1}}</math> .
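The six steps of the Cramér-von Mises test can be traced in a short Python sketch. The function name `cramer_von_mises` is an assumption made for illustration; the data are the three systems of Table 13.1, each observed from 0 to 2000 hours, and the critical value 0.172 is the one quoted in Step 5 of the example.

```python
import math


def cramer_von_mises(ends, failures):
    """Return (M, beta_bar, C2_M) for systems observed over [0, T_q]."""
    ys = []
    log_sum = 0.0
    for t_q, xs in zip(ends, failures):
        xs = sorted(xs)
        # Step 1: drop the last failure if the system is failure terminated.
        used = xs[:-1] if xs and xs[-1] == t_q else xs
        # Step 2: scale each failure age by the system's end time.
        ys.extend(x / t_q for x in used)
        log_sum += sum(math.log(t_q / x) for x in used)
    m = len(ys)
    # Step 3: unbiased estimate of beta.
    beta_bar = (m - 1) / log_sum
    # Step 4: pool and order the scaled values.
    z = sorted(ys)
    # Step 5: parametric Cramer-von Mises statistic.
    c2 = 1.0 / (12 * m) + sum(
        (z_j ** beta_bar - (2 * j - 1) / (2 * m)) ** 2
        for j, z_j in enumerate(z, start=1)
    )
    return m, beta_bar, c2


systems = [
    [1.2, 55.6, 72.7, 111.9, 121.9, 303.6, 326.9, 1568.4, 1913.5],
    [1.4, 35.0, 46.8, 65.9, 181.1, 712.6, 1005.7, 1029.9, 1675.7,
     1787.5, 1867.0],
    [0.3, 32.6, 33.4, 241.7, 396.2, 444.4, 480.8, 588.9, 1043.9,
     1136.1, 1288.1, 1408.1, 1439.4, 1604.8],
]
M, beta_bar, c2 = cramer_von_mises([2000.0] * 3, systems)

# Step 6: compare with the critical value for M = 34 at alpha = 0.10.
fits_power_law = c2 < 0.172
```

Note that the statistic includes the <math>\tfrac{1}{12M}</math> term; omitting it gives a smaller value that does not match the formula in Step 5.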

| |

| <br>

| |

| <br>

| |

|

| |

|

| ====Chi-Squared Test==== | | ==Economical Life Model== |

| <br>

| | One consideration in reducing the cost to maintain repairable systems is to establish an overhaul policy that will minimize the total life cost of the system. However, an overhaul policy makes sense only if <math>\beta >1\,\!</math>. It does not make sense to implement an overhaul policy if <math>\beta <1\,\!</math> since wearout is not present. If you assume that there is a point at which it is cheaper to overhaul a system than to continue repairs, what is the overhaul time that will minimize the total life cycle cost while considering repair cost and the cost of overhaul? |

| The parametric Cramér-von Mises test described above requires that the starting time, <math>{{S}_{q}}</math> , be equal to 0 for each of the <math>K</math> systems. Although not as powerful as the Cramér-von Mises test, the Chi-Squared test can be applied regardless of the starting times. The expected number of failures for a system over its age <math>(a,b)</math> for the Chi-Squared test is estimated by <math>\widehat{\lambda }{{b}^{\widehat{\beta }}}-\widehat{\lambda }{{a}^{\widehat{\beta }}}=\widehat{\theta }</math> , where <math>\widehat{\lambda }</math> and <math>\widehat{\beta }</math> are the maximum likelihood estimates.

| |

| The computed <math>{{\chi }^{2}}</math> statistic is:

| |

|

| |

|

| ::<math>{{\chi }^{2}}=\underset{j=1}{\overset{d}{\mathop \sum }}\,\frac{{{\left[ N(j)-\widehat{\theta }(j) \right]}^{2}}}{\widehat{\theta }(j)}</math>

| | Denote <math>{{C}_{1}}\,\!</math> as the average repair cost (unscheduled), <math>{{C}_{2}}\,\!</math> as the replacement or overhaul cost and <math>{{C}_{3}}\,\!</math> as the average cost of scheduled maintenance. Scheduled maintenance is performed for every <math>S\,\!</math> miles or time interval. In addition, let <math>{{N}_{1}}\,\!</math> be the number of failures in <math>[0,t]\,\!</math>, and let <math>{{N}_{2}}\,\!</math> be the number of replacements in <math>[0,t]\,\!</math>. Suppose that replacement or overhaul occurs at times <math>T\,\!</math>, <math>2T\,\!</math>, and <math>3T\,\!</math>. The problem is to select the optimum overhaul time <math>T={{T}_{0}}\,\!</math> so as to minimize the long term average system cost (unscheduled maintenance, replacement cost and scheduled maintenance). Since <math>\beta >1\,\!</math>, the average system cost is minimized when the system is overhauled (or replaced) at time <math>{{T}_{0}}\,\!</math> such that the instantaneous maintenance cost equals the average system cost. |

| | |

| where <math>d</math> is the total number of intervals. The random variable <math>{{\chi }^{2}}</math> is approximately Chi-Square distributed with <math>df=d-2</math> degrees of freedom. There must be at least three intervals, and the lengths of the intervals do not have to be equal. It is common practice to require that the expected number of failures for each interval, <math>\widehat{\theta }(j)</math>, be at least five. If <math>\chi _{0}^{2}>\chi _{\alpha /2,d-2}^{2}</math> or if <math>\chi _{0}^{2}<\chi _{1-(\alpha /2),d-2}^{2}</math>, reject the null hypothesis.
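A minimal Python sketch of the Chi-Squared statistic for a single system follows. The function names, the interval edges, the observed counts and the parameter values are all hypothetical, chosen so that every interval has an expected count of at least five. The statistic squares each difference between observed and expected counts before dividing by the expected count. The critical values would come from a chi-square table and are not computed here.

```python
def expected_failures(a, b, lam, beta):
    """Expected number of failures over the age interval (a, b)."""
    return lam * b ** beta - lam * a ** beta


def chi_squared_statistic(edges, observed, lam, beta):
    """Return (chi-squared statistic, degrees of freedom).

    edges: interval boundaries, e.g. [0, 100, 400, 900] gives 3 intervals.
    observed: number of failures N(j) seen in each interval.
    """
    if len(observed) != len(edges) - 1:
        raise ValueError("need exactly one observed count per interval")
    if len(observed) < 3:
        raise ValueError("the test requires at least three intervals")
    stat = 0.0
    for a, b, n_j in zip(edges, edges[1:], observed):
        theta_j = expected_failures(a, b, lam, beta)
        stat += (n_j - theta_j) ** 2 / theta_j
    return stat, len(observed) - 2


# Hypothetical inputs: with lambda = 1 and beta = 0.5, each interval below
# has an expected count of 10 failures.
stat, df = chi_squared_statistic([0.0, 100.0, 400.0, 900.0], [12, 9, 11],
                                 lam=1.0, beta=0.5)
```

The intervals here have unequal lengths, which the test permits.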

| |

|

| |

| | |

| ===Confidence Bounds for Repairable Systems Analysis===

| |

| ====Bounds on <math>\beta </math>====

| |

| =====Fisher Matrix Bounds=====

| |

| The parameter <math>\beta </math> must be positive, thus <math>\ln \beta </math> is approximately treated as being normally distributed.

| |

| | |

| | |

| ::<math>\frac{\ln (\widehat{\beta })-\ln (\beta )}{\sqrt{Var\left[ \ln (\widehat{\beta }) \right]}}\ \tilde{\ }\ N(0,1)</math>

| |

| | |

| | |

| ::<math>C{{B}_{\beta }}=\widehat{\beta }{{e}^{\pm {{z}_{\alpha }}\sqrt{Var(\widehat{\beta })}/\widehat{\beta }}}</math>

| |

| | |

| | |

| ::<math>\widehat{\beta }=\frac{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,{{N}_{q}}}{\widehat{\lambda }\underset{q=1}{\overset{K}{\mathop{\sum }}}\,\left[ T_{q}^{\widehat{\beta }}\ln ({{T}_{q}})-S_{q}^{\widehat{\beta }}\ln ({{S}_{q}}) \right]-\underset{q=1}{\overset{K}{\mathop{\sum }}}\,\underset{i=1}{\overset{{{N}_{q}}}{\mathop{\sum }}}\,\ln ({{X}_{i,q}})}</math>

| |

| | |

| | |

| All variances can be calculated using the Fisher Information Matrix.

| |

| <br>

| |

| <math>\Lambda </math> is the natural log-likelihood function.

| |

| | |

| | |

| ::<math>\Lambda =\underset{q=1}{\overset{K}{\mathop \sum }}\,\left[ {{N}_{q}}(\ln (\lambda )+\ln (\beta ))-\lambda (T_{q}^{\beta }-S_{q}^{\beta })+(\beta -1)\underset{i=1}{\overset{{{N}_{q}}}{\mathop \sum }}\,\ln ({{x}_{iq}}) \right]</math>

| |

| | |

| | |

| ::<math>\frac{{{\partial }^{2}}\Lambda }{\partial {{\lambda }^{2}}}=-\frac{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,{{N}_{q}}}{{{\lambda }^{2}}}</math>

| |

| | |

| | |

| ::<math>\frac{{{\partial }^{2}}\Lambda }{\partial \lambda \partial \beta }=-\underset{q=1}{\overset{K}{\mathop \sum }}\,\left[ T_{q}^{\beta }\ln ({{T}_{q}})-S_{q}^{\beta }\ln ({{S}_{q}}) \right]</math>

| |

| | |

| | |

| ::<math>\frac{{{\partial }^{2}}\Lambda }{\partial {{\beta }^{2}}}=-\frac{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,{{N}_{q}}}{{{\beta }^{2}}}-\lambda \underset{q=1}{\overset{K}{\mathop \sum }}\,\left[ T_{q}^{\beta }{{(\ln ({{T}_{q}}))}^{2}}-S_{q}^{\beta }{{(\ln ({{S}_{q}}))}^{2}} \right]</math>
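The Fisher matrix calculation for the bounds on <math>\beta </math> can be sketched in Python as follows. This is an illustration under stated assumptions, not the RGA implementation: the function name `fisher_bounds_beta` is hypothetical, the bounds are taken as two-sided so that the normal quantile is evaluated at <math>(1+CL)/2</math>, and the terms involving <math>S_{q}=0</math> are set to zero by extending the <math>0\ln 0=0</math> convention. The usage lines assume three systems observed from 0 to 2000 hours with 34 failures in total, as in the example data.

```python
import math
from statistics import NormalDist


def _pow_ln(t, beta, k):
    """Return t**beta * ln(t)**k, taken as 0 when t == 0 (assumed convention)."""
    return 0.0 if t == 0 else t ** beta * math.log(t) ** k


def fisher_bounds_beta(starts, ends, n_total, lam, beta, confidence=0.90):
    """Two-sided Fisher matrix bounds on beta: (lower, upper, Var(beta_hat))."""
    pairs = list(zip(starts, ends))
    # Fisher information entries are the negated second partial derivatives.
    f_ll = n_total / lam ** 2
    f_lb = sum(_pow_ln(t, beta, 1) - _pow_ln(s, beta, 1) for s, t in pairs)
    f_bb = n_total / beta ** 2 + lam * sum(
        _pow_ln(t, beta, 2) - _pow_ln(s, beta, 2) for s, t in pairs
    )
    # Invert the 2x2 matrix; Var(beta_hat) is the (beta, beta) entry.
    det = f_ll * f_bb - f_lb ** 2
    var_beta = f_ll / det
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    factor = math.exp(z * math.sqrt(var_beta) / beta)
    return beta / factor, beta * factor, var_beta


lower, upper, var_beta = fisher_bounds_beta(
    starts=[0.0] * 3, ends=[2000.0] * 3, n_total=34,
    lam=0.36224, beta=0.45300, confidence=0.90,
)
```

Because the bounds are built on <math>\ln \beta </math>, they are multiplicative around <math>\widehat{\beta }</math> and can never be negative.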

| |

| | |

| =====Crow Bounds=====

| |

| Calculate the conditional maximum likelihood estimate of <math>\tilde{\beta }</math> :

| |

| | |

| | |

| ::<math>\tilde{\beta }=\frac{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,{{M}_{q}}}{\underset{q=1}{\overset{K}{\mathop{\sum }}}\,\underset{i=1}{\overset{{{M}_{q}}}{\mathop{\sum }}}\,\ln \left( \tfrac{{{T}_{q}}}{{{X}_{iq}}} \right)}</math>

| |

| | |

| | |

| The Crow 2-sided <math>(1-\alpha )</math> 100-percent confidence bounds on <math>\beta </math> are:

| |

| | |

| ::<math>\begin{align}

| |

| & {{\beta }_{L}}= & \tilde{\beta }\frac{\chi _{\tfrac{\alpha }{2},2M}^{2}}{2M} \\

| |

| & {{\beta }_{U}}= & \tilde{\beta }\frac{\chi _{1-\tfrac{\alpha }{2},2M}^{2}}{2M}

| |

| \end{align}</math>
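The Crow bounds on <math>\beta </math> can be sketched in Python using only the standard library. Two assumptions are made here and should be read as such: the chi-square subscript is interpreted as a lower-tail probability (so that <math>{{\beta }_{L}}<{{\beta }_{U}}</math>), and the chi-square quantile is replaced by the Wilson-Hilferty approximation rather than an exact table value. The function names are hypothetical, and the usage lines take the single-system data quoted earlier in this chapter.

```python
import math
from statistics import NormalDist


def chi2_quantile_wh(p, k):
    """Wilson-Hilferty approximation to the chi-square quantile with
    lower-tail probability p and k degrees of freedom (approximate)."""
    z = NormalDist().inv_cdf(p)
    c = 2.0 / (9.0 * k)
    return k * (1.0 - c + z * math.sqrt(c)) ** 3


def crow_bounds_beta(ends, failures, alpha=0.10):
    """Return (beta_L, beta_tilde, beta_U) for systems observed over [0, T_q]."""
    m = 0
    log_sum = 0.0
    for t_q, xs in zip(ends, failures):
        xs = sorted(xs)
        # M_q = N_q - 1 if failure terminated, N_q if time terminated.
        used = xs[:-1] if xs and xs[-1] == t_q else xs
        m += len(used)
        log_sum += sum(math.log(t_q / x) for x in used)
    beta_tilde = m / log_sum
    lower = beta_tilde * chi2_quantile_wh(alpha / 2.0, 2 * m) / (2 * m)
    upper = beta_tilde * chi2_quantile_wh(1.0 - alpha / 2.0, 2 * m) / (2 * m)
    return lower, beta_tilde, upper


ages = [50.6, 840.7, 1060.5, 1186.5, 1613.6, 1843.4]
beta_l, beta_tilde, beta_u = crow_bounds_beta([2000.0], [ages], alpha=0.10)
```

Where an exact chi-square quantile function is available, it should replace `chi2_quantile_wh`; the approximation is used only to keep the sketch self-contained.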

| |

| | |

|

| |

| ====Bounds on <math>\lambda </math>====

| |

| =====Fisher Matrix Bounds=====

| |

| The parameter <math>\lambda </math> must be positive, thus <math>\ln \lambda </math> is approximately treated as being normally distributed. These bounds are based on:

| |

| | |

| | |

| ::<math>\frac{\ln (\widehat{\lambda })-\ln (\lambda )}{\sqrt{Var\left[ \ln (\widehat{\lambda }) \right]}}\ \tilde{\ }\ N(0,1)</math>

| |

| | |

| <br>

| |

| The approximate confidence bounds on <math>\lambda </math> are given as:

| |

| | |

| | |

| ::<math>C{{B}_{\lambda }}=\widehat{\lambda }{{e}^{\pm {{z}_{\alpha }}\sqrt{Var(\widehat{\lambda })}/\widehat{\lambda }}}</math>

| |

| | |

| | |

| where <math>\widehat{\lambda }=\tfrac{n}{T_{K}^{{\hat{\beta }}}}</math> .

| |

| The variance calculation is the same as Eqns. (var1), (var2) and (var3).
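The log-normal form of the Fisher matrix bounds can be illustrated with a short Python sketch. It assumes a two-sided interval at confidence level <math>1-\alpha </math>, so that the normal quantile is taken at <math>1-\alpha /2</math>; the source writes <math>{{z}_{\alpha }}</math> without stating the convention. The function name `lognormal_bounds` and the numbers in the example are hypothetical.

```python
import math
from statistics import NormalDist

def lognormal_bounds(estimate, variance, alpha):
    """Return (lower, upper) = estimate * exp(-/+ z * sqrt(variance) / estimate).

    Assumes a two-sided interval, so z is the standard normal quantile at
    1 - alpha/2. This convention is an assumption, not taken from the source.
    """
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    factor = math.exp(z * math.sqrt(variance) / estimate)
    return estimate / factor, estimate * factor

# Made-up values: lambda-hat = 0.4 with Var(lambda-hat) = 0.01.
lam_lower, lam_upper = lognormal_bounds(0.4, 0.01, alpha=0.10)
```

Because the bounds are multiplicative, they are always positive and are symmetric around the estimate on the log scale.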

| |

| <br>

| |

| <br>

| |

| =====Crow Bounds=====

| |

| ''Time Terminated''

| |

| <br>

| |

| The confidence bounds on <math>\lambda </math> for time terminated data are calculated using:

| |

| | |

| | |

| ::<math>\begin{align}

| |

| & {{\lambda }_{L}}= & \frac{\chi _{\tfrac{\alpha }{2},2N}^{2}}{2\cdot \underset{q=1}{\overset{K}{\mathop{\sum }}}\,T_{q}^{\beta }} \\

| |

| & {{\lambda }_{U}}= & \frac{\chi _{1-\tfrac{\alpha }{2},2N+2}^{2}}{2\cdot \underset{q=1}{\overset{K}{\mathop{\sum }}}\,T_{q}^{\beta }}

| |

| \end{align}</math>

| |

| | |

| | |

| | |

| ''Failure Terminated''

| |

| <br>

| |

| The confidence bounds on <math>\lambda </math> for failure terminated data are calculated using:

| |

| | |

| | |

| ::<math>\begin{align}

| |

| & {{\lambda }_{L}}= & \frac{\chi _{\tfrac{\alpha }{2},2N}^{2}}{2\cdot \underset{q=1}{\overset{K}{\mathop{\sum }}}\,T_{q}^{\beta }} \\

| |

| & {{\lambda }_{U}}= & \frac{\chi _{1-\tfrac{\alpha }{2},2N}^{2}}{2\cdot \underset{q=1}{\overset{K}{\mathop{\sum }}}\,T_{q}^{\beta }}

| |

| \end{align}</math>
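The only difference between the time terminated and failure terminated Crow bounds on <math>\lambda </math> is the number of degrees of freedom in the upper bound (<math>2N+2</math> versus <math>2N</math>). The following Python sketch makes that explicit. It assumes the chi-square subscript is a lower-tail probability and that <math>N</math> is the total number of failures across all systems. The function names are hypothetical, and the quantile helper relies on the degrees of freedom being even.

```python
import math

def chi2_cdf_even(x, df):
    """Chi-square CDF for even df, using the closed-form Poisson sum."""
    if x <= 0:
        return 0.0
    half = x / 2.0
    term = math.exp(-half)
    total = term
    for k in range(1, df // 2):
        term *= half / k
        total += term
    return 1.0 - total

def chi2_ppf_even(p, df):
    """Lower-tail chi-square quantile for even df, found by bisection."""
    lo, hi = 0.0, 1.0
    while chi2_cdf_even(hi, df) < p:
        hi *= 2.0
    for _ in range(200):
        mid = (lo + hi) / 2.0
        if chi2_cdf_even(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0

def crow_lambda_bounds(n_failures, end_times, beta, alpha, time_terminated=True):
    """Crow bounds on lambda; end_times holds T_q for each of the K systems."""
    denom = 2.0 * sum(t ** beta for t in end_times)
    lower = chi2_ppf_even(alpha / 2.0, 2 * n_failures) / denom
    # Time terminated data adds 2 degrees of freedom to the upper bound only.
    upper_df = 2 * n_failures + 2 if time_terminated else 2 * n_failures
    upper = chi2_ppf_even(1.0 - alpha / 2.0, upper_df) / denom
    return lower, upper

# Made-up inputs: 12 failures over three systems, beta = 0.8.
tt_bounds = crow_lambda_bounds(12, [400.0, 500.0, 450.0], 0.8, 0.10, True)
ft_bounds = crow_lambda_bounds(12, [400.0, 500.0, 450.0], 0.8, 0.10, False)
```

For the same inputs, the two cases share a lower bound, and the time terminated upper bound is the wider of the two.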

| |

| | |

| | |

| ====Bounds on Growth Rate====

| |

| Since the growth rate is equal to <math>1-\beta </math> , the confidence bounds are:

| |

| | |

| | |

| ::<math>\begin{align}

| |

| & Gr.\text{ }Rat{{e}_{L}}= & 1-{{\beta }_{U}} \\

| |

| & Gr.\text{ }Rat{{e}_{U}}= & 1-{{\beta }_{L}}

| |

| \end{align}</math>

| |

| | |

| If Fisher matrix confidence bounds are used, then <math>{{\beta }_{L}}</math> and <math>{{\beta }_{U}}</math> are obtained from Eqn. (betafc). If Crow bounds are used, then <math>{{\beta }_{L}}</math> and <math>{{\beta }_{U}}</math> are obtained from Eqn. (betacc).

| |

| <br>

| |

| <br>

| |

| ====Bounds on Cumulative MTBF====

| |

| =====Fisher Matrix Bounds=====

| |

| The cumulative MTBF, <math>{{m}_{c}}(t)</math> , must be positive, thus <math>\ln {{m}_{c}}(t)</math> is approximately treated as being normally distributed.

| |

| | |

| ::<math>\frac{\ln ({{\widehat{m}}_{c}}(t))-\ln ({{m}_{c}}(t))}{\sqrt{Var\left[ \ln ({{\widehat{m}}_{c}}(t)) \right]}}\sim N(0,1)</math>

| |

| | |

| The approximate confidence bounds on the cumulative MTBF are then estimated from:

| |

| | |

| | |

| ::<math>CB={{\widehat{m}}_{c}}(t){{e}^{\pm {{z}_{\alpha }}\sqrt{Var({{\widehat{m}}_{c}}(t))}/{{\widehat{m}}_{c}}(t)}}</math>

| |

| | |

| :where:

| |

| | |

| ::<math>{{\widehat{m}}_{c}}(t)=\frac{1}{\widehat{\lambda }}{{t}^{1-\widehat{\beta }}}</math>

| |

| | |

| | |

| ::<math>\begin{align}

| |

| & Var({{\widehat{m}}_{c}}(t))= & {{\left( \frac{\partial {{m}_{c}}(t)}{\partial \beta } \right)}^{2}}Var(\widehat{\beta })+{{\left( \frac{\partial {{m}_{c}}(t)}{\partial \lambda } \right)}^{2}}Var(\widehat{\lambda }) \\

| |

| & & +2\left( \frac{\partial {{m}_{c}}(t)}{\partial \beta } \right)\left( \frac{\partial {{m}_{c}}(t)}{\partial \lambda } \right)cov(\widehat{\beta },\widehat{\lambda })\,

| |

| \end{align}</math>

| |

| | |

| The variance calculation is the same as Eqns. (var1), (var2) and (var3).

| |

| | |

| ::<math>\begin{align}

| |

| & \frac{\partial {{m}_{c}}(t)}{\partial \beta }= & -\frac{1}{\widehat{\lambda }}{{t}^{1-\widehat{\beta }}}\ln (t) \\

| |

| & \frac{\partial {{m}_{c}}(t)}{\partial \lambda }= & -\frac{1}{{{\widehat{\lambda }}^{2}}}{{t}^{1-\widehat{\beta }}}

| |

| \end{align}</math>
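The variance propagation for the cumulative MTBF can be sketched in Python as below. The sketch assumes that <math>Var(\widehat{\beta })</math>, <math>Var(\widehat{\lambda })</math> and the covariance have already been obtained from the inverse Fisher matrix, and that a two-sided interval is wanted, so the normal quantile is taken at <math>1-\alpha /2</math>. The function name and the input values are hypothetical.

```python
import math
from statistics import NormalDist

def cumulative_mtbf_bounds(t, lam, beta, var_beta, var_lam, cov, alpha):
    """Fisher matrix bounds on m_c(t) = t**(1 - beta) / lam."""
    m_c = t ** (1.0 - beta) / lam
    # Partial derivatives evaluated at the parameter estimates.
    d_beta = -m_c * math.log(t)   # -(1/lam) * t**(1-beta) * ln(t)
    d_lam = -m_c / lam            # -(1/lam**2) * t**(1-beta)
    var_m = (d_beta ** 2 * var_beta
             + d_lam ** 2 * var_lam
             + 2.0 * d_beta * d_lam * cov)
    var_m = max(var_m, 0.0)       # guard against tiny negative round-off
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # two-sided convention (assumed)
    factor = math.exp(z * math.sqrt(var_m) / m_c)
    return m_c / factor, m_c, m_c * factor

# Made-up estimates and (co)variances, for illustration only.
mc_lower, mc_hat, mc_upper = cumulative_mtbf_bounds(
    t=1000.0, lam=0.05, beta=0.7,
    var_beta=0.01, var_lam=0.0004, cov=-0.0015, alpha=0.10)
```

A negative covariance between <math>\widehat{\beta }</math> and <math>\widehat{\lambda }</math> reduces the variance here because both partial derivatives have the same sign.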

| |

| | |

| | |

| =====Crow Bounds=====

| |

| To calculate the Crow confidence bounds on cumulative MTBF, first calculate the Crow cumulative failure intensity confidence bounds:

| |

| | |

| ::<math>C{{(t)}_{L}}=\frac{\chi _{\tfrac{\alpha }{2},2N}^{2}}{2\cdot t}</math>

| |

| | |

| | |

| ::<math>C{{(t)}_{U}}=\frac{\chi _{1-\tfrac{\alpha }{2},2N+2}^{2}}{2\cdot t}</math>

| |

| | |

| :Then

| |

| | |

| ::<math>\begin{align}

| |

| & {{[MTB{{F}_{c}}]}_{L}}= & \frac{1}{C{{(t)}_{U}}} \\

| |

| & {{[MTB{{F}_{c}}]}_{U}}= & \frac{1}{C{{(t)}_{L}}}

| |

| \end{align}</math>

| |

| | |

| | |

| ====Bounds on Instantaneous MTBF====

| |

| =====Fisher Matrix Bounds=====

| |

| The instantaneous MTBF, <math>{{m}_{i}}(t)</math> , must be positive, thus <math>\ln {{m}_{i}}(t)</math> is approximately treated as being normally distributed.

| |

| | |

| ::<math>\frac{\ln ({{\widehat{m}}_{i}}(t))-\ln ({{m}_{i}}(t))}{\sqrt{Var\left[ \ln ({{\widehat{m}}_{i}}(t)) \right]}}\sim N(0,1)</math>

| |

| | |

| | |

| The approximate confidence bounds on the instantaneous MTBF are then estimated from:

| |

| | |

| ::<math>CB={{\widehat{m}}_{i}}(t){{e}^{\pm {{z}_{\alpha }}\sqrt{Var({{\widehat{m}}_{i}}(t))}/{{\widehat{m}}_{i}}(t)}}</math>

| |

| | |

| :where:

| |

| | |

| ::<math>{{\widehat{m}}_{i}}(t)=\frac{1}{\widehat{\lambda }\widehat{\beta }{{t}^{\widehat{\beta }-1}}}</math>

| |

| | |

|

| |

| ::<math>\begin{align}

| |

| & Var({{\widehat{m}}_{i}}(t))= & {{\left( \frac{\partial {{m}_{i}}(t)}{\partial \beta } \right)}^{2}}Var(\widehat{\beta })+{{\left( \frac{\partial {{m}_{i}}(t)}{\partial \lambda } \right)}^{2}}Var(\widehat{\lambda }) \\

| |

| & & +2\left( \frac{\partial {{m}_{i}}(t)}{\partial \beta } \right)\left( \frac{\partial {{m}_{i}}(t)}{\partial \lambda } \right)cov(\widehat{\beta },\widehat{\lambda })

| |

| \end{align}</math>

| |

| | |

| | |

| The variance calculation is the same as Eqns. (var1), (var2) and (var3).

| |

| | |

| ::<math>\begin{align}

| |

| & \frac{\partial {{m}_{i}}(t)}{\partial \beta }= & -\frac{1}{\widehat{\lambda }{{\widehat{\beta }}^{2}}}{{t}^{1-\widehat{\beta }}}-\frac{1}{\widehat{\lambda }\widehat{\beta }}{{t}^{1-\widehat{\beta }}}\ln (t) \\

| |

| & \frac{\partial {{m}_{i}}(t)}{\partial \lambda }= & -\frac{1}{{{\widehat{\lambda }}^{2}}\widehat{\beta }}{{t}^{1-\widehat{\beta }}}

| |

| \end{align}</math>
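As a sanity check on the two partial derivatives of the instantaneous MTBF, the analytic expressions can be compared against central finite differences. The Python sketch below does this for one arbitrary set of parameter values; the function names and the numbers are hypothetical.

```python
import math

def instantaneous_mtbf(t, lam, beta):
    """m_i(t) = 1 / (lam * beta * t**(beta - 1))."""
    return 1.0 / (lam * beta * t ** (beta - 1.0))

def mtbf_partials(t, lam, beta):
    """Analytic partial derivatives of m_i(t) with respect to beta and lam."""
    base = t ** (1.0 - beta)
    d_beta = -base / (lam * beta ** 2) - base * math.log(t) / (lam * beta)
    d_lam = -base / (lam ** 2 * beta)
    return d_beta, d_lam

def central_difference(func, x, step=1e-6):
    """Numerical derivative of a one-argument function at x."""
    return (func(x + step) - func(x - step)) / (2.0 * step)

# Arbitrary illustrative values.
t, lam, beta = 500.0, 0.05, 0.7
d_beta, d_lam = mtbf_partials(t, lam, beta)
fd_beta = central_difference(lambda b: instantaneous_mtbf(t, lam, b), beta)
fd_lam = central_difference(lambda l: instantaneous_mtbf(t, l, beta), lam)
```

Both derivatives are negative for <math>t>1</math>, which matches the intuition that a larger <math>\beta </math> or <math>\lambda </math> means more failures and therefore a lower MTBF.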

| |

| | |

| | |

| =====Crow Bounds=====

| |

| ''Failure Terminated Data''

| |

| <br>

| |

| To calculate the bounds for failure terminated data, consider the following equation:

| |

| | |

| ::<math>G(\mu |n)=\int_{0}^{\infty }\frac{{{e}^{-x}}{{x}^{n-2}}}{(n-2)!}\underset{i=0}{\overset{n-1}{\mathop \sum }}\,\frac{1}{i!}{{\left( \frac{\mu }{x} \right)}^{i}}\exp \left( -\frac{\mu }{x} \right)\,dx</math>

| |

| | |

| | |

| Obtain the values <math>{{p}_{1}}</math> and <math>{{p}_{2}}</math> as the solutions <math>c</math> of <math>G({{n}^{2}}/c|n)=\xi </math> for <math>\xi =\tfrac{\alpha }{2}</math> and <math>\xi =1-\tfrac{\alpha }{2}</math> , respectively. If using the biased parameters, <math>\hat{\beta }</math> and <math>\hat{\lambda }</math> , then the lower and upper confidence bounds are:

| |

| | |

| | |

| ::<math>\begin{align}

| |

| & {{[MTB{{F}_{i}}]}_{L}}= & MTB{{F}_{i}}\cdot {{p}_{1}} \\

| |

| & {{[MTB{{F}_{i}}]}_{U}}= & MTB{{F}_{i}}\cdot {{p}_{2}}

| |

| \end{align}</math>

| |

| | |

| | |

| where <math>MTB{{F}_{i}}=\tfrac{1}{\hat{\lambda }\hat{\beta }{{t}^{\hat{\beta }-1}}}</math> . If using the unbiased parameters, <math>\bar{\beta }</math> and <math>\bar{\lambda }</math> , then the upper and lower confidence bounds are:

| |

| | |

| | |

| ::<math>\begin{align}

| |

| & {{[MTB{{F}_{i}}]}_{L}}= & MTB{{F}_{i}}\cdot \left( \frac{N-2}{N} \right)\cdot {{p}_{1}} \\

| |

| & {{[MTB{{F}_{i}}]}_{U}}= & MTB{{F}_{i}}\cdot \left( \frac{N-2}{N} \right)\cdot {{p}_{2}}

| |

| \end{align}</math>

| |

| | |

| | |

| where <math>MTB{{F}_{i}}=\tfrac{1}{\hat{\lambda }\hat{\beta }{{t}^{\hat{\beta }-1}}}</math> .
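Solving <math>G({{n}^{2}}/c|n)=\xi </math> has no closed form, so <math>{{p}_{1}}</math> and <math>{{p}_{2}}</math> must be found numerically. The Python sketch below is one possible approach under stated assumptions, not the method used by the software. It reads <math>G</math> as the integral over <math>x</math> from 0 to infinity of a gamma density times a Poisson cumulative probability, approximates that integral with a midpoint rule on a truncated range, and finds <math>c</math> by bisection on a log scale within an assumed bracket of <math>[{{10}^{-3}},{{10}^{3}}]</math>. All function names, the step count and the bracket are hypothetical choices.

```python
import math

def poisson_cdf(count, mean):
    """P(Poisson(mean) <= count), summed term by term."""
    term = math.exp(-mean)
    total = term
    for i in range(1, count + 1):
        term *= mean / i
        total += term
    return total

def g_function(mu, n, steps=2000):
    """Midpoint-rule approximation of G(mu | n) for n >= 2."""
    shape = n - 1
    upper = shape + 12.0 * math.sqrt(shape) + 30.0  # truncation point (assumed)
    h = upper / steps
    total = 0.0
    for j in range(steps):
        x = (j + 0.5) * h
        # Gamma density e^{-x} x^{n-2} / (n-2)!, evaluated in log space.
        log_pdf = -x + (n - 2) * math.log(x) - math.lgamma(n - 1)
        total += math.exp(log_pdf) * poisson_cdf(n - 1, mu / x)
    return total * h

def solve_p(xi, n, lo=1e-3, hi=1e3):
    """Find c with G(n**2 / c | n) = xi; G increases with c."""
    for _ in range(40):
        mid = math.sqrt(lo * hi)
        if g_function(n * n / mid, n) < xi:
            lo = mid
        else:
            hi = mid
    return math.sqrt(lo * hi)

# Illustrative case: n = 5 failures, 90% two-sided bounds (alpha = 0.10).
p1 = solve_p(0.05, 5)
p2 = solve_p(0.95, 5)
```

The bisection works because <math>G(\mu |n)</math> decreases as <math>\mu </math> grows, so <math>G({{n}^{2}}/c|n)</math> increases with <math>c</math> and <math>{{p}_{1}}<{{p}_{2}}</math>.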

| |

| <br>

| |

| <br>

| |

| ''Time Terminated Data''

| |

| <br>

| |

| To calculate the bounds for time terminated data, consider the following equation where <math>{{I}_{1}}(.)</math> is the modified Bessel function of order one:

| |

| | |

| ::<math>H(x|k)=\underset{j=1}{\overset{k}{\mathop \sum }}\,\frac{{{x}^{2j-1}}}{{{2}^{2j-1}}(j-1)!j!{{I}_{1}}(x)}</math>

| |

| | |

| | |

| Find the values <math>{{\Pi }_{1}}</math> and <math>{{\Pi }_{2}}</math> by finding the solution <math>x</math> to <math>H(x|k)=\tfrac{\alpha }{2}</math> and <math>H(x|k)=1-\tfrac{\alpha }{2}</math> in the cases corresponding to the lower and upper bounds, respectively. <br>

| |

| Calculate <math>\Pi =\tfrac{{{n}^{2}}}{4{{x}^{2}}}</math> for each case. If using the biased parameters, <math>\hat{\beta }</math> and <math>\hat{\lambda }</math> , then the upper and lower confidence bounds are:

| |

| | |

| | |

| ::<math>\begin{align}

| |

| & {{[MTB{{F}_{i}}]}_{L}}= & MTB{{F}_{i}}\cdot {{\Pi }_{1}} \\

| |

| & {{[MTB{{F}_{i}}]}_{U}}= & MTB{{F}_{i}}\cdot {{\Pi }_{2}}

| |

| \end{align}</math>

| |

| | |

| | |

| where <math>MTB{{F}_{i}}=\tfrac{1}{\hat{\lambda }\hat{\beta }{{t}^{\hat{\beta }-1}}}</math> . If using the unbiased parameters, <math>\bar{\beta }</math> and <math>\bar{\lambda }</math> , then the upper and lower confidence bounds are:

| |

| | |

| | |

| ::<math>\begin{align}

| |

| & {{[MTB{{F}_{i}}]}_{L}}= & MTB{{F}_{i}}\cdot \left( \frac{N-1}{N} \right)\cdot {{\Pi }_{1}} \\

| |

| & {{[MTB{{F}_{i}}]}_{U}}= & MTB{{F}_{i}}\cdot \left( \frac{N-1}{N} \right)\cdot {{\Pi }_{2}}

| |

| \end{align}</math>

| |

| | |

| | |

| where <math>MTB{{F}_{i}}=\tfrac{1}{\hat{\lambda }\hat{\beta }{{t}^{\hat{\beta }-1}}}</math> .

| |

| <br>

| |

| <br>

| |

| ====Bounds on Cumulative Failure Intensity====

| |

| =====Fisher Matrix Bounds=====

| |

| The cumulative failure intensity, <math>{{\lambda }_{c}}(t)</math> , must be positive, thus <math>\ln {{\lambda }_{c}}(t)</math> is approximately treated as being normally distributed.

| |

| | |

| ::<math>\frac{\ln ({{\widehat{\lambda }}_{c}}(t))-\ln ({{\lambda }_{c}}(t))}{\sqrt{Var\left[ \ln ({{\widehat{\lambda }}_{c}}(t)) \right]}}\sim N(0,1)</math>

| |

| | |

| The approximate confidence bounds on the cumulative failure intensity are then estimated using:

| |

| | |

| ::<math>CB={{\widehat{\lambda }}_{c}}(t){{e}^{\pm {{z}_{\alpha }}\sqrt{Var({{\widehat{\lambda }}_{c}}(t))}/{{\widehat{\lambda }}_{c}}(t)}}</math>

| |

| | |

| :where:

| |

| | |

| ::<math>{{\widehat{\lambda }}_{c}}(t)=\widehat{\lambda }{{t}^{\widehat{\beta }-1}}</math>

| |

| | |

| :and:

| |

| | |

| ::<math>\begin{align}

| |

| & Var({{\widehat{\lambda }}_{c}}(t))= & {{\left( \frac{\partial {{\lambda }_{c}}(t)}{\partial \beta } \right)}^{2}}Var(\widehat{\beta })+{{\left( \frac{\partial {{\lambda }_{c}}(t)}{\partial \lambda } \right)}^{2}}Var(\widehat{\lambda }) \\

| |

| & & +2\left( \frac{\partial {{\lambda }_{c}}(t)}{\partial \beta } \right)\left( \frac{\partial {{\lambda }_{c}}(t)}{\partial \lambda } \right)cov(\widehat{\beta },\widehat{\lambda })

| |

| \end{align}</math>

| |

| | |

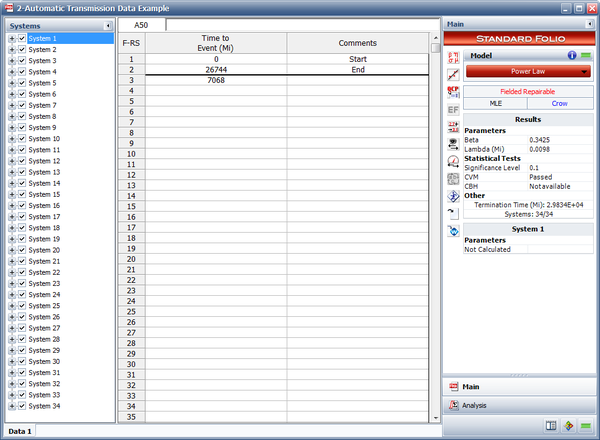

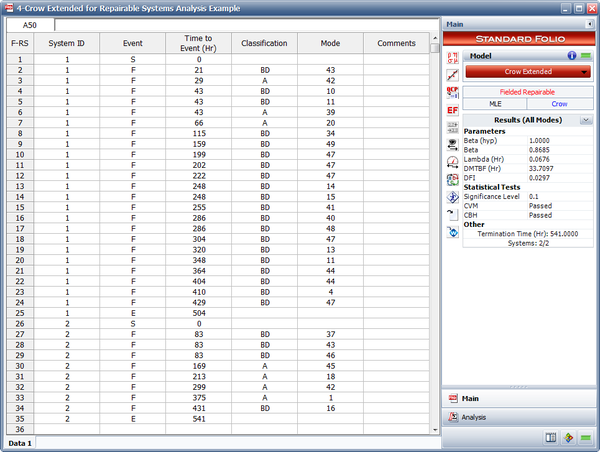

| | |