Design Evaluation and Power Study: Difference between revisions


{{Template:Doebook|11}}

In general, there are three stages in applying design of experiments (DOE) to solve a problem: designing the experiment, conducting the experiment, and analyzing the data. The first stage is critical: if the designed experiment is not efficient, you are unlikely to obtain good results. It is therefore common to evaluate a design before conducting the tests. A design evaluation often focuses on the following four properties:

<ol>
<li>'''The alias structure'''. Are main effects and two-way interactions in the experiment aliased with each other? What is the resolution of the design?</li>
<li>'''The orthogonality'''. An orthogonal design is always preferred. If a design is non-orthogonal, how are the estimated coefficients correlated?</li>
<li>'''The optimality'''. A design is called “optimal” if it can meet one or more of the following criteria:
<ul>
<li>''D''-optimality: minimize the determinant of the variance-covariance matrix.</li>
<li>''A''-optimality: minimize the trace of the variance-covariance matrix.</li>
<li>''V''-optimality: minimize the average prediction variance in the design space.</li>
</ul>
</li>
<li>'''The power (or its complement, Type II error).''' Power is the probability of detecting an effect through experiments when it is indeed active. A design with low power for main effects is not a good design.</li>
</ol>

In the following sections, we will discuss how to evaluate a design according to these four properties.

=Alias Structure=

To reduce the sample size in an experiment, we usually focus only on the main effects and lower-order interactions, while assuming that higher-order interactions are not active. For example, screening experiments are often conducted with a number of runs that barely fits the main effect-only model. However, due to the limited number of runs, the estimated main effects are often actually combinations of main effects and interaction effects. In other words, the estimated main effects are ''aliased'' with interaction effects. Because of this aliasing, the estimated main effects are said to be ''biased''. If the interaction effects are large, then the bias will be significant. Thus, it is very important to find out how all the effects in an experiment are aliased with each other. A design's alias structure is used for this purpose, and its calculation is given below.

Assume the matrix representation of the true model for an experiment is:

:<math>Y={{X}_{1}}{{\beta }_{1}}+{{X}_{2}}{{\beta }_{2}}+\varepsilon \,\!</math>

If the model used in the regression analysis is:

:<math>Y={{X}_{1}}{{\beta }_{1}}+\varepsilon \,\!</math>

then, from this experiment, the estimated <math>{{\beta }_{1}}\,\!</math> is biased. This is because the ordinary least squares estimator of <math>{{\beta }_{1}}\,\!</math> is:

:<math>{{\hat{\beta }}_{1}}={{\left( X_{1}^{'}{{X}_{1}} \right)}^{-1}}X_{1}^{'}Y\,\!</math>

As discussed in [[DOE_References|[Wu, 2000]]], the expected value of this estimator is:

:<math>\begin{align}
  & E\left( {{{\hat{\beta }}}_{1}} \right)={{\left( X_{1}^{'}{{X}_{1}} \right)}^{-1}}X_{1}^{'}E\left( Y \right) \\ 
 & ={{\left( X_{1}^{'}{{X}_{1}} \right)}^{-1}}X_{1}^{'}{{X}_{1}}{{\beta }_{1}}+{{\left( X_{1}^{'}{{X}_{1}} \right)}^{-1}}X_{1}^{'}{{X}_{2}}{{\beta }_{2}} \\ 
 & ={{\beta }_{1}}+A{{\beta }_{2}}  
\end{align}\,\!</math>

where <math>A={{\left( X_{1}^{'}{{X}_{1}} \right)}^{-1}}X_{1}^{'}{{X}_{2}}\,\!</math> is called the ''alias matrix'' of the design. For example, for a three-factor screening experiment with four runs, the design matrix is:

:{| style="text-align:center;" cellpadding="2" border="1" align="center"
|-
| A || B || C
|-
| -1 || -1 || 1
|-
| 1 || -1 || -1
|-
| -1 || 1 || -1
|-
| 1 || 1 || 1
|}

If we assume the ''true'' model is:

:<math>Y={{\beta }_{0}}+{{\beta }_{1}}A+{{\beta }_{2}}B+{{\beta }_{3}}C+{{\beta }_{12}}AB+{{\beta }_{13}}AC+{{\beta }_{23}}BC+{{\beta }_{123}}ABC+\varepsilon \,\!</math>

and the ''used'' model (i.e., the model used in the experiment data analysis) is:

:<math>Y={{\beta }_{0}}+{{\beta }_{1}}A+{{\beta }_{2}}B+{{\beta }_{3}}C+\varepsilon \,\!</math>

then <math>{{X}_{1}}=[I\text{ }A\text{ }B\text{ }C]\,\!</math> and <math>{{X}_{2}}=[AB\text{ }AC\text{ }BC\text{ }ABC]\,\!</math>. The alias matrix ''A'' is calculated as:

:{| style="text-align:center;" cellpadding="2" border="1" align="center"
|-
| || AB|| AC|| BC|| ABC
|-
|I|| 0|| 0|| 0|| 1
|-
|A|| 0|| 0|| 1|| 0
|-
|B|| 0|| 1|| 0|| 0
|-
|C|| 1|| 0|| 0|| 0
|}

Sometimes, we also put the terms of <math>{{X}_{1}}\,\!</math> in the above matrix. Then the ''A'' matrix becomes:

:{| style="text-align:center;" cellpadding="2" border="1" align="center"
|-
| || I|| A|| B|| C|| AB|| AC|| BC|| ABC
|-
|I|| 1|| 0|| 0|| 0|| 0|| 0|| 0|| 1
|-
|A|| 0|| 1|| 0|| 0|| 0|| 0|| 1|| 0
|-
|B|| 0|| 0|| 1|| 0|| 0|| 1|| 0|| 0
|-
|C|| 0|| 0|| 0|| 1|| 1|| 0|| 0|| 0
|}

For the terms included in the ''used'' model, the alias structure is:

:<math>\begin{align}
  & [I]=I+ABC \\ 
 & [A]=A+BC \\ 
 & [B]=B+AC \\ 
 & [C]=C+AB \\ 
\end{align}\,\!</math>

From the alias structure and the definition of resolution, we know this is a resolution III design. The estimated main effects are aliased with two-way interactions. For example, ''A'' is aliased with ''BC''. If, based on engineering knowledge, the experimenter suspects that some of the interactions are important, then this design is unacceptable since it cannot distinguish the main effect from important interaction effects.

For a designed experiment, it is better to check its alias structure before conducting the experiment to determine whether the important effects can be clearly estimated.
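The alias matrix calculation above can be sketched numerically. The following is a minimal illustration, assuming NumPy is available; the variable names (<code>design</code>, <code>X1</code>, <code>X2</code>, <code>alias</code>) are chosen here for illustration and are not part of any DOE software.

```python
import numpy as np

# Four-run design from the example above (columns A, B, C).
design = np.array([
    [-1, -1,  1],
    [ 1, -1, -1],
    [-1,  1, -1],
    [ 1,  1,  1],
], dtype=float)
A, B, C = design.T

# X1 holds the terms in the used model: I, A, B, C.
X1 = np.column_stack([np.ones(len(design)), A, B, C])
# X2 holds the terms left out of the used model: AB, AC, BC, ABC.
X2 = np.column_stack([A * B, A * C, B * C, A * B * C])

# Alias matrix (X1'X1)^{-1} X1'X2, obtained with a linear solve
# instead of forming the inverse explicitly.
alias = np.linalg.solve(X1.T @ X1, X1.T @ X2)

row_names = ["I", "A", "B", "C"]
col_names = ["AB", "AC", "BC", "ABC"]
for name, row in zip(row_names, alias):
    aliased = [c for c, v in zip(col_names, row) if abs(v) > 1e-12]
    print(f"[{name}] = {name} + " + " + ".join(aliased))
```

Each printed line lists a term of the used model together with the omitted terms whose alias matrix entries are non-zero, which is one way to read off the alias structure of a candidate design before running it.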

=Orthogonality=

Orthogonality is a model-related property. For example, for a main effect-only model, if the coefficients estimated through ordinary least squares are all uncorrelated, then the experiment is an ''orthogonal design for main effects''. An orthogonal design has the minimal variance for the estimated model coefficients. Determining whether a design is orthogonal is very simple. Consider the following model:

:<math>Y=X\beta +\varepsilon \,\!</math>

The variance and covariance matrix for the model coefficients is:

:<math>Var\left( {\hat{\beta }} \right)=\sigma _{\varepsilon }^{2}{{\left( {{X}^{'}}X \right)}^{-1}}\,\!</math>

where <math>\sigma _{\varepsilon }^{2}\,\!</math> is the variance of the error. When all the factors in the model are quantitative factors or all the factors have 2 levels, <math>Var\left( {\hat{\beta }} \right)\,\!</math> is a regular symmetric matrix. Its diagonal elements are the variances of the model coefficients, and its off-diagonal elements are the covariances among these coefficients. When some of the factors are qualitative factors with more than 2 levels, <math>Var\left( {\hat{\beta }} \right)\,\!</math> is a block symmetric matrix. The block elements on the diagonal represent the variance and covariance matrix of the qualitative factors, and the off-diagonal elements are the covariances among all the coefficients.

Therefore, to check if a design is orthogonal for a given model, we only need to check the matrix <math>{{\left( {{X}^{'}}X \right)}^{-1}}\,\!</math>. For the example used in the previous section, if we assume the main effect-only model is used, then <math>{{\left( {{X}^{'}}X \right)}^{-1}}\,\!</math> is:

:{| style="text-align:center;" cellpadding="2" border="1" align="center"
|-
| || I|| A|| B|| C
|-
|I|| 0.25|| 0|| 0|| 0
|-
|A|| 0|| 0.25|| 0|| 0
|-
|B|| 0|| 0|| 0.25|| 0
|-
|C|| 0|| 0|| 0|| 0.25
|}

Since all the off-diagonal elements are 0, the design is an orthogonal design for main effects. For an orthogonal design, it is also true that the diagonal elements are 1/''n'', where ''n'' is the number of total runs.

When there are qualitative factors with more than 2 levels in the model, <math>{{\left( {{X}^{'}}X \right)}^{-1}}\,\!</math> will be a block symmetric matrix. For example, assume we have the following design matrix.

:{| style="text-align:center;" cellpadding="2" border="1" align="center" | |||

!Run Order | !Run Order | ||

!A | !A | ||

| Line 198: | Line 211: | ||

Factor B has 3 levels, so 2 indicator variables are used in the regression model. The <math>{{\left( {{X}^{'}}X \right)}^{-1}}\,\!</math> matrix for a model with main effects and the interaction is:

:{| style="text-align:center;" cellpadding="2" border="1" align="center" | |||

|- | |- | ||

| || I|| A|| B[1] ||B[2]|| AB[1]|| AB[2] | | || I|| A|| B[1] ||B[2]|| AB[1]|| AB[2] | ||

| Line 224: | Line 236: | ||

For an orthogonal design for a given model, all the coefficients in the model can be estimated independently. Dropping one or more terms from the model will not affect the estimation of other coefficients and their variances. If a design is not orthogonal, it means some of the terms in the model are correlated. If the correlation is strong, then the statistical test results for these terms may not be accurate.

The variance inflation factor (VIF) is used to examine the correlation of one term with other terms. The VIF is commonly used to diagnose multicollinearity in regression analysis. As a rule of thumb, a VIF greater than 10 indicates a strong correlation between some of the terms. The VIF can be calculated simply by:

:<math>VI{{F}_{i}}=\frac{n}{{{\sigma }^{2}}}\operatorname{var}\left( {{{\hat{\beta }}}_{i}} \right)\,\!</math>

For more detailed discussion on VIF, please see [[Multiple_Linear_Regression_Analysis#Multicollinearity|Multiple Linear Regression Analysis]].
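As a rough illustration of the orthogonality check and the VIF formula above, the sketch below reuses the four-run design from the alias structure example with a main effect-only model. It assumes NumPy and factors coded as -1/+1; the variable names are illustrative only.

```python
import numpy as np

# Four-run design (columns A, B, C) with a main effect-only model.
design = np.array([
    [-1, -1,  1],
    [ 1, -1, -1],
    [-1,  1, -1],
    [ 1,  1,  1],
], dtype=float)
n = design.shape[0]
X = np.column_stack([np.ones(n), design])  # columns I, A, B, C

xtx_inv = np.linalg.inv(X.T @ X)

# The design is orthogonal for this model if every off-diagonal
# element of (X'X)^{-1} is zero.
off_diag = xtx_inv - np.diag(np.diag(xtx_inv))
is_orthogonal = np.allclose(off_diag, 0.0)

# var(beta_i) = sigma^2 * [(X'X)^{-1}]_ii, so the VIF formula above
# reduces to n * [(X'X)^{-1}]_ii and sigma^2 cancels out.
vif = n * np.diag(xtx_inv)

print("orthogonal:", is_orthogonal)
print("VIF per term (I, A, B, C):", vif)
```

Because the error variance cancels, the VIF can be evaluated from the design matrix alone, before any response data are collected.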

=Optimality=

An orthogonal design is always ideal. However, due to constraints on sample size and cost, it is sometimes not possible. If this is the case, we want to get a design that is as orthogonal as possible. The so-called ''D''-efficiency is used to measure the orthogonality of a two level factorial design. It is defined as:

:''D''-efficiency<math>={{\left( \frac{\left| X'X \right|}{{{n}^{p}}} \right)}^{1/p}}\,\!</math>

where ''p'' is the number of coefficients in the model and ''n'' is the total sample size. ''D'' represents the determinant.

<math>X'X\,\!</math> is the ''information matrix'' of a design. When you compare two different screening designs, the one with a larger determinant of <math>X'X\,\!</math> is usually better. ''D-efficiency'' can be used for comparing two designs. Other alphabetic optimal criteria are also used in design evaluation. If a model and the number of runs are given, an optimal design can be found using computer algorithms for one of the following optimality criteria:

*''D''-optimality: maximize the determinant of the information matrix <math>X'X\,\!</math>. This is the same as minimizing the determinant of the variance-covariance matrix <math>{{\left( X'X \right)}^{-1}}\,\!</math>.
*''A''-optimality: minimize the trace of the variance-covariance matrix <math>{{\left( X'X \right)}^{-1}}\,\!</math>. The trace of a matrix is the sum of all its diagonal elements.
*''V''-optimality (or ''I''-optimality): minimize the average prediction variance within the design space.

The determinant of <math>X'X\,\!</math> and the trace of <math>{{\left( X'X \right)}^{-1}}\,\!</math> are given in the design evaluation in the DOE folio. ''V''-optimality is not yet included.
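The ''D''-efficiency and the trace criterion can be sketched as follows. This is a minimal illustration assuming NumPy; the function names <code>d_efficiency</code> and <code>a_criterion</code> are hypothetical helpers, and the "perturbed" design is an invented example (the last run of the four-run design with factor C flipped) used only to show a non-orthogonal case.

```python
import numpy as np

def d_efficiency(X):
    """(|X'X| / n^p)^(1/p) for a model matrix X with n rows and p columns."""
    n, p = X.shape
    sign, logdet = np.linalg.slogdet(X.T @ X)
    if sign <= 0:
        return 0.0  # singular information matrix
    return float(np.exp(logdet / p) / n)

def a_criterion(X):
    """Trace of (X'X)^{-1}; smaller is better under A-optimality."""
    return float(np.trace(np.linalg.inv(X.T @ X)))

def model_matrix(design):
    """Main effect-only model: an intercept column plus the factor columns."""
    return np.column_stack([np.ones(len(design)), design])

# Orthogonal four-run design from the alias structure example.
orthogonal = np.array([[-1, -1, 1], [1, -1, -1], [-1, 1, -1], [1, 1, 1]], dtype=float)
# Hypothetical perturbed design: factor C flipped in the last run.
perturbed = np.array([[-1, -1, 1], [1, -1, -1], [-1, 1, -1], [1, 1, -1]], dtype=float)

for label, d in [("orthogonal", orthogonal), ("perturbed", perturbed)]:
    X = model_matrix(d)
    print(label, "D-efficiency:", d_efficiency(X), "trace:", a_criterion(X))
```

Comparing the two designs this way shows the intended use of the criteria: the orthogonal design reaches the maximum ''D''-efficiency of 1, while the perturbed design scores lower and has a larger trace.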

=Power Study=

Power calculation is another very important topic in design evaluation. When designs are balanced, calculating the power (which, you will recall, is the probability of detecting an effect when that effect is active) is straightforward. However, for unbalanced designs, the calculation can be very complicated. We will discuss methods for calculating the power for a given effect for both balanced and unbalanced designs.

==Power Study for Single Factor Designs (One-Way ANOVA)==

Power is related to Type II error in hypothesis testing and is commonly used in statistical process control (SPC). Assume that at the normal condition, the output of a process follows a normal distribution with a mean of 10 and a standard deviation of 1.2. If the 3-sigma control limits are used and the sample size is 5, the control limits (assuming a normal distribution) for the X-bar chart are:

:<math>\begin{align}
  & UCL=\bar{x}+3\frac{\sigma }{\sqrt{n}}=10+3\frac{1.2}{\sqrt{5}}=11.61 \\ 
 & LCL=\bar{x}-3\frac{\sigma }{\sqrt{n}}=10-3\frac{1.2}{\sqrt{5}}=8.39 \\ 
\end{align}\,\!</math>

If a calculated mean value from a sampling group is outside of the control limits, then the process is said to be out of control. However, since the mean value is from a random process following a normal distribution with a mean of 10 and standard deviation of <math>{\sigma }/{\sqrt{n}}\;\,\!</math>, even when the process is under control, the sample mean still can be out of the control limits and cause a false alarm. The probability of causing a false alarm is called ''Type I error'' (or significance level or risk level). For this example, it is:

:<math>\text{Type I Err}=2\times \left( 1-\Phi \left( 3 \right) \right)=0.0027\,\!</math>

Similarly, if the process mean has shifted to a new value that means the process is indeed out of control (e.g., 12), applying the above control chart, the sample mean can still be within the control limits, resulting in a failure to detect the shift. The probability of causing a misdetection is called ''Type II error''. For this example, it is:

:<math>\begin{align}
  & \text{Type II Err}=\Pr \left\{ LCL<\bar{x}<UCL|\mu =12 \right\} \\ 
 & =\Phi \left( \frac{UCL-12}{{\sigma }/{\sqrt{n}}\;} \right)-\Phi \left( \frac{LCL-12}{{\sigma }/{\sqrt{n}}\;} \right) \\ 
 & =\Phi \left( -0.72672 \right)-\Phi \left( -6.72684 \right) \\ 
 & =0.2337  
\end{align}\,\!</math>

Power is defined as 1-Type II error. In this case, it is 0.766302. From this example, we can see that Type I and Type II errors are affected by sample size. Increasing sample size can reduce both errors. Engineers usually determine the sample size of a test based on the power requirement for a given effect. This is called the ''Power and Sample Size'' issue in design of experiments.
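The control chart numbers above can be reproduced with a short sketch using only the Python standard library. The function name <code>type_ii_error</code> is a hypothetical helper introduced here; the inputs (mean 10, standard deviation 1.2, sample size 5, shifted mean 12) come from the example.

```python
from math import sqrt
from statistics import NormalDist

mu0, sigma, n = 10.0, 1.2, 5
se = sigma / sqrt(n)          # standard deviation of the sample mean

ucl = mu0 + 3 * se            # upper 3-sigma control limit
lcl = mu0 - 3 * se            # lower 3-sigma control limit

# Type I error: chance that an in-control sample mean falls outside the limits.
type_i = 2 * (1 - NormalDist().cdf(3))

def type_ii_error(shifted_mean):
    """Chance that the sample mean stays inside the limits after a mean shift."""
    sampling = NormalDist(mu=shifted_mean, sigma=se)
    return sampling.cdf(ucl) - sampling.cdf(lcl)

beta = type_ii_error(12.0)
power = 1 - beta

print(f"UCL={ucl:.2f}, LCL={lcl:.2f}")
print(f"Type I error={type_i:.4f}, Type II error={beta:.4f}, power={power:.4f}")
```

Calling <code>type_ii_error</code> with other shifted means gives the misdetection probability for each shift, which is the usual way to see how sensitive the chart is to shifts of different sizes.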

===Power Calculation for Comparing Two Means===

For one factor design, or one-way ANOVA, the simplest case is to design an experiment to compare the mean values at two different levels of a factor. Like the above control chart example, the calculated mean value at each level (in control and out of control) is a random variable. If the two means are different, we want to have a good chance to detect it. The difference of the two means is called the ''effect'' of this factor. For example, to compare the strength of a similar rope from two different manufacturers, 5 samples from each manufacturer are taken and tested. The test results (in newtons) are given below.

:{| style="text-align:center;" cellpadding="2" border="1" align="center" | |||

|- | |- | ||

|M1|| M2 | |M1|| M2 | ||

| Line 325: | Line 323: | ||

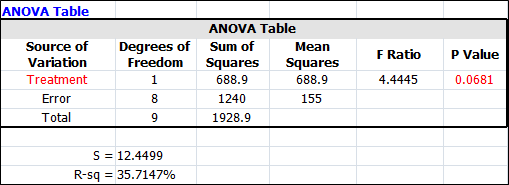

For this data, the ANOVA results are: | For this data, the ANOVA results are: | ||

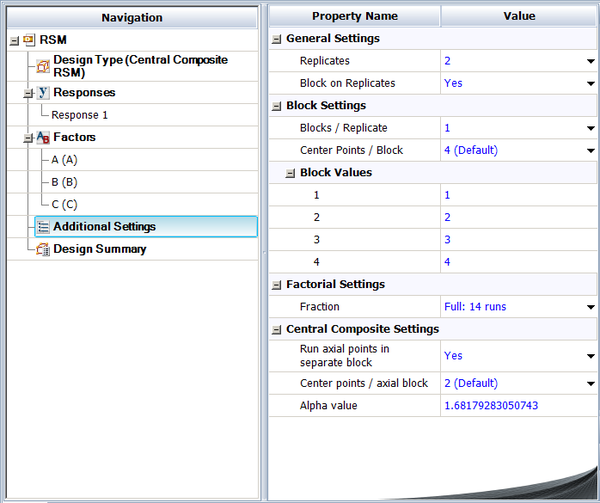

: | ::[[Image: DesignEvaluation_1.png|center|509px|link=]] | ||

The standard deviation of the error is 12.4499 as shown in the above screenshot. | |||

and the ''t''-test results are: | |||

:{| style="text-align:center;" cellpadding="2" border="1" | :{| style="text-align:center;" cellpadding="2" border="1" align="center" | ||

!bgcolor=#DDDDDD colspan="7" |Mean Comparisons | !bgcolor=#DDDDDD colspan="7" |Mean Comparisons | ||

|- | |- | ||

| Line 347: | Line 337: | ||

|} | |} | ||

Since the ''p'' value is 0.0681, there is no significant difference between these two vendors at a significance level of 0.05 (since 0.0681 > 0.05). However, since the samples are randomly taken from the two populations, if the true difference between the two vendors is 30, what is the power of detecting this amount of difference from this test?

To answer this question, first, from the significance level of 0.05, let’s calculate the critical limits for the ''t''-test. They are:

:<math>\begin{align}
  & L=t_{0.025,v=8}^{-1}=-2.306 \\ 
 & U=t_{0.975,v=8}^{-1}=2.306 \\ 
\end{align}\,\!</math>

Define the mean of each vendor as <math>{{\mu }_{i}}\,\!</math> and <math>d={{\mu }_{1}}-{{\mu }_{2}}\,\!</math>. Then the difference between the estimated sample means is:

:<math>\hat{d}={{\hat{\mu }}_{1}}-{{\hat{\mu }}_{2}}\,\!</math>

Under the null hypothesis (the two vendors are the same), the ''t'' statistic is:

:<math>{{t}_{0}}=\frac{{{{\hat{\mu }}}_{1}}-{{{\hat{\mu }}}_{2}}}{\sqrt{\frac{{{\sigma }^{2}}}{{{n}_{1}}}+\frac{{{\sigma }^{2}}}{{{n}_{2}}}}}\,\!</math>

Under the alternative hypothesis when the true difference is 30, the calculated ''t'' statistic is from a non-central ''t'' distribution with non-centrality parameter <math>\delta \,\!</math> of:

:<math>\delta =\frac{30}{\sqrt{\frac{{{\sigma }^{2}}}{{{n}_{1}}}+\frac{{{\sigma }^{2}}}{{{n}_{2}}}}}=3.81004\,\!</math>

The Type II error is <math>\Pr \left\{ L<{{t}_{0}}<U|d=30 \right\}=0.08609\,\!</math>. So the power is 1-0.08609 = 0.91391.

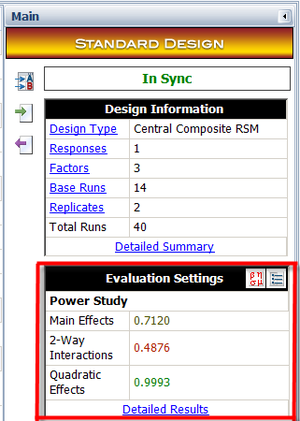

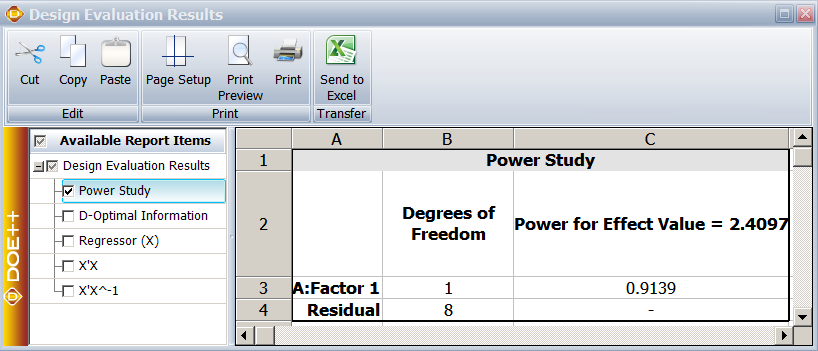

In a DOE folio, the '''''Effect''''' for the power calculation is entered as a multiple of the standard deviation of the error. So an effect of 30 is <math>30/S=30/12.4499=2.409658\,\!</math> standard deviations. This information is illustrated below.

[[Image: DesignEvluation_2.png|center|317px]]

and the calculated power for this effect is:

::[[Image: DesignEvaluation_3.png|center|818px]]

As we know, the square of a ''t''-distributed variable with ''v'' degrees of freedom follows an ''F'' distribution with 1 and ''v'' degrees of freedom. The above ANOVA table uses the ''F'' distribution and the above "mean comparison" table uses the ''t'' distribution to calculate the ''p'' value. The ANOVA table is especially useful when conducting multiple level comparisons. We will illustrate how to use the ''F'' distribution to calculate the power for this example.

At a significance level of 0.05, the critical value for the ''F'' distribution is:

:<math>U=f_{0.05,v1=1,v2=8}^{-1}=5.317655\,\!</math>

Under the alternative hypothesis when the true difference of these 2 vendors is 30, the calculated ''f'' statistic is from a non-central ''F'' distribution with non-centrality parameter <math>\phi ={{\delta }^{2}}=14.5161\,\!</math>.

The Type II error is <math>\Pr \left\{ f<U|d=30 \right\}={{F}_{v1=1,v2=8,\phi =14.5161}}\left( f<U \right)=0.08609\,\!</math>. So the power is 1-0.08609 = 0.91391. This is the same as the value we calculated using the non-central ''t'' distribution.
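The two power calculations above can be checked side by side with a short sketch. It assumes SciPy is available and uses its non-central ''t'' and non-central ''F'' distributions (<code>scipy.stats.nct</code> and <code>scipy.stats.ncf</code>); the variable names are illustrative, and the inputs (error standard deviation 12.4499, 5 samples per vendor, true difference 30, significance level 0.05) come from the example.

```python
from math import sqrt
from scipy import stats

sigma = 12.4499        # standard deviation of the error from the ANOVA table
n1 = n2 = 5            # samples per vendor
effect = 30.0          # assumed true difference between the two vendors
alpha = 0.05
df_error = n1 + n2 - 2

# Non-centrality parameter of the t statistic under the alternative.
delta = effect / sqrt(sigma**2 / n1 + sigma**2 / n2)

# Power from the non-central t distribution (two-sided test).
t_upper = stats.t.ppf(1 - alpha / 2, df_error)
t_lower = -t_upper
type_ii_t = (stats.nct.cdf(t_upper, df_error, delta)
             - stats.nct.cdf(t_lower, df_error, delta))
power_t = 1 - type_ii_t

# Power from the non-central F distribution with phi = delta^2.
f_crit = stats.f.ppf(1 - alpha, 1, df_error)
type_ii_f = stats.ncf.cdf(f_crit, 1, df_error, delta**2)
power_f = 1 - type_ii_f

print(f"delta={delta:.5f}, t critical={t_upper:.3f}, F critical={f_crit:.6f}")
print(f"power (t)={power_t:.5f}, power (F)={power_f:.5f}")
```

The two routes should agree because the event that the ''t'' statistic falls between the lower and upper limits is the same as the event that its square stays below the ''F'' critical value.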

===Power Calculation for Comparing Multiple Means: Balanced Designs===

When a factor has only two levels, as in the above example, there is only one effect of this factor, which is the difference of the means at these two levels. However, when there are multiple levels, there are multiple paired comparisons. For example, if there are ''r'' levels for a factor, there are <math>\binom{r}{2}\,\!</math> paired comparisons. In this case, what is the power of detecting a given difference among these comparisons?

''In a DOE folio, the power for a multiple level factor is defined as follows: given the largest difference among all the level means is'' <math>\Delta \,\!</math>'', power is the smallest probability of detecting this difference at a given significance level.''

For example, if a factor has 4 levels and <math>\Delta \,\!</math> is 3, there are many scenarios in which the largest difference among all the level means will be 3. The following table gives 4 possible scenarios.

:{| style="text-align:center;" cellpadding="2" border="1" | :{| style="text-align:center;" cellpadding="2" border="1" align="center" | ||

|- | |- | ||

|Case|| ''M1''|| ''Μ2''|| ''M3''|| ''M4'' | |Case|| ''M1''|| ''Μ2''|| ''M3''|| ''M4'' | ||

| Line 430: | Line 429: | ||

For all 4 cases, the largest difference among the means is the same: 3. The probability of detecting <math>\Delta =3\,\!</math> (individual power) can be calculated using the method in the previous section for each case. It has been proven in [[DOE References|[Kutner et al., 2005; Guo et al., 2012]]] that when the experiment is balanced, case 4 gives the lowest probability of detecting a given amount of effect. Therefore, the individual power calculated for case 4 is also the power for this experiment. In case 4, all but two factor level means are at the grand mean, and the two remaining factor level means are equally spaced around the grand mean. Is this a general pattern? Can the conclusion from this example be applied to general cases of balanced design?

To answer these questions, let’s illustrate the power calculation mathematically. In one factor design or one-way ANOVA, a level is also traditionally called a ''treatment''. The following linear regression model is used to model the data:

:<math>{{Y}_{ij}}={{\beta }_{0}}+{{\beta }_{1}}{{X}_{ij1}}+{{\beta }_{2}}{{X}_{ij2}}+...+{{\beta }_{r-1}}{{X}_{ij,r-1}}+{{\varepsilon }_{ij}}\,\!</math>

where <math>{{Y}_{ij}}\,\!</math> is the <math>j\,\!</math>th observation at the <math>i\,\!</math>th treatment and

:<math>{{X}_{ijk}}=\left\{ \begin{align}
  & 1\text{ if the observation is from treatment }k \\ 
 & -1\text{ if the observation is from treatment }r \\ 
 & 0\text{ otherwise} \\ 
\end{align} \right.\text{ for }k=1,...,r-1\,\!</math>

'''First, let’s define the problem of power calculation.'''

The power calculation of an experiment can be mathematically defined as:

:<math>\begin{align}
  & \min \text{ }P\{{{f}_{critical}}<F\left( 1-\alpha ;r-1,{{n}_{T}}-r \right)|\phi \} \\ 
 & \text{subject to} \\ 
 & \underset{i\ne j}{\mathop{\max }}\,\left( |{{\mu }_{i}}-{{\mu }_{j}}| \right)=\Delta ,\text{ }i,j=1,...,r\text{ } \\ 
\end{align}\,\!</math>

where <math>r\,\!</math> is the number of levels, <math>{{n}_{T}}\,\!</math> is the total number of samples, α is the significance level of the hypothesis test, and <math>{{f}_{critical}}\,\!</math> is the critical value. The minimum of the objective function in the above optimization problem is the power. The above optimization is the same as minimizing <math>\phi \,\!</math>, the non-centrality parameter, since all the other variables in the non-central ''F'' distribution are fixed.

'''Second, let’s relate the level means with the regression coefficients. ''' | |||

Using the regression model, the mean response at the ith factor level is: | Using the regression model, the mean response at the ith factor level is: | ||

| Line 481: | Line 482: | ||

& {{\mu }_{i}}={{\beta }_{0}}+{{\beta }_{i}}\text{ for }i<r \\ | & {{\mu }_{i}}={{\beta }_{0}}+{{\beta }_{i}}\text{ for }i<r \\ | ||

& {{\mu }_{r}}={{\beta }_{0}}-\sum\limits_{i=1}^{r-1}{{{\beta }_{i}}\text{ for }i=r} \\ | & {{\mu }_{r}}={{\beta }_{0}}-\sum\limits_{i=1}^{r-1}{{{\beta }_{i}}\text{ for }i=r} \\ | ||

\end{align} \right.</math> | \end{align} \right.\,\!</math> | ||

The difference of level means can also be defined using the | The difference of level means can also be defined using the | ||

<math>\beta </math> values. For example, let | <math>\beta \,\!</math> values. For example, let | ||

<math>{{\Delta }_{ij}}={{\mu }_{i}}-{{\mu }_{j}}</math>, then: | <math>{{\Delta }_{ij}}={{\mu }_{i}}-{{\mu }_{j}}\,\!</math>, then: | ||

| Line 491: | Line 493: | ||

& \left| {{\beta }_{i}}-{{\beta }_{j}}\text{ } \right|\text{ }i<j,\text{ }j\ne r \\ | & \left| {{\beta }_{i}}-{{\beta }_{j}}\text{ } \right|\text{ }i<j,\text{ }j\ne r \\ | ||

& \left| 2{{\beta }_{i}}+\sum\limits_{l\ne i}^{{}}{{{\beta }_{l}}} \right|\text{ }j=r \\ | & \left| 2{{\beta }_{i}}+\sum\limits_{l\ne i}^{{}}{{{\beta }_{l}}} \right|\text{ }j=r \\ | ||

\end{align} \right.</math> | \end{align} \right.\,\!</math> | ||

Using | Using | ||

<math>\beta </math>, the non-centrality parameter | <math>\beta \,\!</math>, the non-centrality parameter | ||

<math>\phi </math> | <math>\phi \,\!</math> | ||

can be calculated as: | can be calculated as: | ||

:<math>\phi =\beta \Sigma _{\beta }^{-1}{{\beta }^{T}}</math> | :<math>\phi =\beta \Sigma _{\beta }^{-1}{{\beta }^{T}}\,\!</math> | ||

where <math>\beta =\left( {{\beta }_{1}},{{\beta }_{2}},...,{{\beta }_{r-1}} \right)</math> | where <math>\beta =\left( {{\beta }_{1}},{{\beta }_{2}},...,{{\beta }_{r-1}} \right)\,\!</math> | ||

and | and | ||

<math>{{\Sigma }_{\beta }}</math> | <math>{{\Sigma }_{\beta }}\,\!</math> | ||

is the variance and covariance matrix for | is the variance and covariance matrix for | ||

<math>\beta </math>. When the design is balanced, we know: | <math>\beta \,\!</math>. When the design is balanced, we know: | ||

:<math>\Sigma _{\beta }^{-1}=\frac{1}{{{\sigma }^{2}}}X_{\beta }^{T}{{X}_{\beta }}=\frac{1}{{{\sigma }^{2}}}\left( \begin{matrix} | :<math>\Sigma _{\beta }^{-1}=\frac{1}{{{\sigma }^{2}}}X_{\beta }^{T}{{X}_{\beta }}=\frac{1}{{{\sigma }^{2}}}\left( \begin{matrix} | ||

| Line 514: | Line 517: | ||

... & ... & ... & ... \\ | ... & ... & ... & ... \\ | ||

n & n & ... & 2n \\ | n & n & ... & 2n \\ | ||

\end{matrix} \right)</math> | \end{matrix} \right)\,\!</math> | ||

where n is the sample size at each level. | where n is the sample size at each level. | ||

Third, let’s solve the optimization problem for balanced designs. | |||

'''Third, let’s solve the optimization problem for balanced designs.''' | |||

The power is calculated when | The power is calculated when | ||

<math>\phi </math> | <math>\phi \,\!</math> | ||

is at its minimum. Therefore, for balanced designs, the optimization issue becomes: | is at its minimum. Therefore, for balanced designs, the optimization issue becomes: | ||

:<math>\min \text{ }\phi \text{=}\frac{\text{2}n}{{{\sigma }^{2}}}\left[ \sum\limits_{i=1}^{r-1}{\beta _{i}^{2}}+\sum\limits_{1\le i<j\le r-1}^{{}}{{{\beta }_{i}}{{\beta }_{j}}} \right]</math> | :<math>\min \text{ }\phi \text{=}\frac{\text{2}n}{{{\sigma }^{2}}}\left[ \sum\limits_{i=1}^{r-1}{\beta _{i}^{2}}+\sum\limits_{1\le i<j\le r-1}^{{}}{{{\beta }_{i}}{{\beta }_{j}}} \right]\,\!</math> | ||

subject to | :<math>subject\text{ }to\text{ }\,\!</math> | ||

:<math>\begin{align} | :<math>\begin{align} | ||

& \max \left\{ \underset{i<j,j\ne r}{\mathop{|{{\mu }_{i}}-{{\mu }_{j}}|}}\,,\text{ }\underset{i<r,}{\mathop{\text{ }\!\!|\!\!\text{ }{{\mu }_{i}}-{{\mu }_{r}}\text{ }\!\!|\!\!\text{ }}}\, \right\} \\ | & \max \left\{ \underset{i<j,j\ne r}{\mathop{|{{\mu }_{i}}-{{\mu }_{j}}|}}\,,\text{ }\underset{i<r,}{\mathop{\text{ }\!\!|\!\!\text{ }{{\mu }_{i}}-{{\mu }_{r}}\text{ }\!\!|\!\!\text{ }}}\, \right\} \\ | ||

& =\max \left\{ \underset{i<j,j\ne r}{\mathop{|{{\beta }_{i}}-{{\beta }_{j}}|}}\,,\text{ }\!\!|\!\!\text{ }2{{\beta }_{i}}+\sum\limits_{l\ne i}^{{}}{{{\beta }_{l}}}\text{ }\!\!|\!\!\text{ } \right\}=\Delta \\ | & =\max \left\{ \underset{i<j,j\ne r}{\mathop{|{{\beta }_{i}}-{{\beta }_{j}}|}}\,,\text{ }\!\!|\!\!\text{ }2{{\beta }_{i}}+\sum\limits_{l\ne i}^{{}}{{{\beta }_{l}}}\text{ }\!\!|\!\!\text{ } \right\}=\Delta \\ | ||

\end{align}</math> | \end{align}\,\!</math> | ||

The two equations in the constraint represent two cases. Without loss of generality, | The two equations in the constraint represent two cases. Without loss of generality, <math>\Delta \,\!</math> | ||

is set to 1 in the following discussion. | is set to 1 in the following discussion. | ||

Case 1: | |||

'''Case 1''': | |||

, that is, the last level of the factor does not appear in the difference of level means. | <math>\Delta ={{\mu }_{k}}-{{\mu }_{l}}\,\!</math>, that is, the last level of the factor does not appear in the difference of level means. | ||

For example, let | For example, let | ||

<math>{{\Delta }_{kl}}={{\mu }_{k}}-{{\mu }_{l}}={{\beta }_{k}}-{{\beta }_{l}}=1</math>. | <math>{{\Delta }_{kl}}={{\mu }_{k}}-{{\mu }_{l}}={{\beta }_{k}}-{{\beta }_{l}}=1\,\!</math>. | ||

<math>k,l\ne r</math>. The optimal solution is | <math>k,l\ne r\,\!</math>. The optimal solution is | ||

<math>{{\beta }_{k}}=0.5</math>, | <math>{{\beta }_{k}}=0.5\,\!</math>, | ||

<math>{{\beta }_{l}}=-0.5</math>, | <math>{{\beta }_{l}}=-0.5\,\!</math>, | ||

<math>{{\beta }_{i}}=0</math> | <math>{{\beta }_{i}}=0\,\!</math> | ||

for | for | ||

<math>i\ne k,l</math>. This result means that at the optimal solution, | <math>i\ne k,l\,\!</math>. This result means that at the optimal solution, | ||

<math>{{\mu }_{k}}=0.5</math>, | <math>{{\mu }_{k}}=0.5\,\!</math>, | ||

<math>{{\mu }_{l}}=-0.5</math>, | <math>{{\mu }_{l}}=-0.5\,\!</math>, | ||

<math>{{\mu }_{i}}=0</math>, | <math>{{\mu }_{i}}=0\,\!</math>, | ||

<math>i\ne k,l</math>. | <math>i\ne k,l\,\!</math>. | ||

'''Case 2''': The last level of the factor appears in the largest difference of level means, <math>\Delta =1\,\!</math>.

For example, let <math>{{\Delta }_{kr}}={{\mu }_{k}}-{{\mu }_{r}}=2{{\beta }_{k}}+\sum\limits_{l\ne k}^{{}}{{{\beta }_{l}}}=1\,\!</math>.

The optimal solution is | The optimal solution is | ||

<math>{{\beta }_{k}}=0.5</math>, | <math>{{\beta }_{k}}=0.5\,\!</math>, | ||

<math>{{\beta }_{i}}=0</math> | <math>{{\beta }_{i}}=0\,\!</math> | ||

for <math>i\ne k</math>. This result means that at the optimal solution, <math>{{\mu }_{k}}=0.5</math>, | for <math>i\ne k\,\!</math>. This result means that at the optimal solution, <math>{{\mu }_{k}}=0.5\,\!</math>, | ||

<math>{{\mu }_{r}}=-0.5</math>, and | <math>{{\mu }_{r}}=-0.5\,\!</math>, and | ||

<math>{{\mu }_{i}}=0</math>, | <math>{{\mu }_{i}}=0\,\!</math>, | ||

<math>i\ne k,r</math>. | <math>i\ne k,r\,\!</math>. | ||

The proof for Case 1 and Case 2 is given in [Guo IEEM2012]. The results for Case 1 and Case 2 show that when one of the level means (adjusted by the grand mean) is <math>\Delta/2 \,\!</math>, another level mean is -<math>\Delta/2 \,\!</math> and the remaining level means are 0, the calculated power is the smallest among all possible scenarios. This result agrees with the observation for the 4-case example given at the beginning of this section.

Let’s use the above optimization method to solve the example given in the previous section. In that example, the factor has 2 levels; the sample size is 5 at each level; the estimated | Let’s use the above optimization method to solve the example given in the previous section. In that example, the factor has 2 levels; the sample size is 5 at each level; the estimated | ||

<math>{{\sigma }^{2}}=155</math>; and | <math>{{\sigma }^{2}}=155\,\!</math>; and | ||

<math>\Delta =30</math>. The regression model is: | <math>\Delta =30\,\!</math>. The regression model is: | ||

:<math>{{Y}_{ij}}={{\beta }_{0}}+{{\beta }_{1}}{{X}_{ij1}}+{{\varepsilon }_{ij}}</math> | :<math>{{Y}_{ij}}={{\beta }_{0}}+{{\beta }_{1}}{{X}_{ij1}}+{{\varepsilon }_{ij}}\,\!</math> | ||

Since the sample size is 5, | Since the sample size is 5, | ||

<math>\Sigma _{\beta }^{-1}=\frac{2n}{{{\sigma }^{2}}}=\frac{10}{155}=0.064516</math>. From the above discussion, we know that when | <math>\Sigma _{\beta }^{-1}=\frac{2n}{{{\sigma }^{2}}}=\frac{10}{155}=0.064516\,\!</math>. From the above discussion, we know that when | ||

<math>{{\beta }_{1}}=0.5\Delta </math>, we get the minimal non-centrality parameter | <math>{{\beta }_{1}}=0.5\Delta \,\!</math>, we get the minimal non-centrality parameter | ||

<math>\phi ={{\beta }_{1}}\Sigma _{\beta }^{-1}{{\beta }_{1}}=14.51613</math>. This value is the same as what we got in the previous section using the non-central t and F distributions. Therefore, the method discussed in this section is a general method and can be used for cases with 2 level and multiple level factors. The previous non-central t and F distribution method is only for cases with 2 level factors. | <math>\phi ={{\beta }_{1}}\Sigma _{\beta }^{-1}{{\beta }_{1}}=14.51613\,\!</math>. This value is the same as what we got in the previous section using the non-central t and F distributions. Therefore, the method discussed in this section is a general method and can be used for cases with 2 level and multiple level factors. The previous non-central t and F distribution method is only for cases with 2 level factors. | ||
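The arithmetic for this 2 level example can be reproduced with a few lines of Python. This is only a minimal sketch: the function name <code>noncentrality_two_level</code> and its argument names are illustrative and are not part of the DOE folio.

```python
def noncentrality_two_level(n_per_level, sigma_sq, delta):
    """Non-centrality parameter for a balanced 2 level factor.

    With effect coding there is a single coefficient beta1, its inverse
    variance is 2n / sigma^2, and the worst case is beta1 = delta / 2.
    """
    inv_var_beta = 2.0 * n_per_level / sigma_sq  # Sigma_beta^{-1} (a scalar here)
    beta1 = 0.5 * delta                          # coefficient that minimizes phi
    return beta1 * inv_var_beta * beta1


# Values from the example: n = 5 per level, sigma^2 = 155, delta = 30
phi = noncentrality_two_level(n_per_level=5, sigma_sq=155.0, delta=30.0)
print(round(phi, 5))  # the text reports 14.51613
```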

A 4 level balanced design example | |||

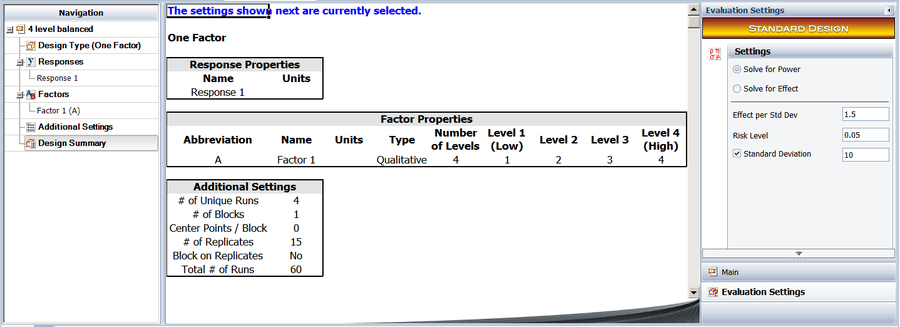

'''A 4 level balanced design example''' | |||

Assume an engineer wants to compare the performance of 4 different materials. Each material is a level of the factor. The sample size for each level is 15 and the standard deviation | Assume an engineer wants to compare the performance of 4 different materials. Each material is a level of the factor. The sample size for each level is 15 and the standard deviation | ||

<math>\sigma </math> | <math>\sigma \,\!</math> | ||

is 10. The engineer wants to calculate the power of this experiment when the largest difference among the materials is 15. If the power is less than 80%, he also wants to know what the sample size should be in order to obtain a power of 80%. Assume the significance level is 5%. | is 10. The engineer wants to calculate the power of this experiment when the largest difference among the materials is 15. If the power is less than 80%, he also wants to know what the sample size should be in order to obtain a power of 80%. Assume the significance level is 5%. | ||

Step 1: Build the linear regression model. Since there are 4 levels, we need 3 indicator variables. The model is: | '''Step 1''': Build the linear regression model. Since there are 4 levels, we need 3 indicator variables. The model is: | ||

:<math>{{Y}_{ij}}={{\beta }_{0}}+{{\beta }_{1}}{{X}_{ij1}}+{{\beta }_{2}}{{X}_{ij2}}+{{\beta }_{3}}{{X}_{ij3}}+{{\varepsilon }_{ij}}</math> | :<math>{{Y}_{ij}}={{\beta }_{0}}+{{\beta }_{1}}{{X}_{ij1}}+{{\beta }_{2}}{{X}_{ij2}}+{{\beta }_{3}}{{X}_{ij3}}+{{\varepsilon }_{ij}}\,\!</math> | ||

Step 2: Since the sample size is 15 and <math>\sigma </math> | '''Step 2''': Since the sample size is 15 and <math>\sigma \,\!</math> | ||

is 10: | is 10: | ||

| Line 611: | Line 616: | ||

0.15 & 0.30 & 0.15 \\ | 0.15 & 0.30 & 0.15 \\ | ||

0.15 & 0.15 & 0.30 \\ | 0.15 & 0.15 & 0.30 \\ | ||

\end{matrix} \right)</math> | \end{matrix} \right)\,\!</math> | ||

Step 3: Since there are 4 levels, there are 6 paired comparisons. For each comparison, the optimal | '''Step 3''': Since there are 4 levels, there are 6 paired comparisons. For each comparison, the optimal | ||

<math>\beta </math> | <math>\beta \,\!</math> | ||

is: | is: | ||

:{| style="text-align:center;" cellpadding="2" border="1" | |||

:{| style="text-align:center;" cellpadding="2" border="1" align="center" | |||

|- | |- | ||

|ID|| Paired Comparison|| beta1|| beta2|| beta3 | |ID|| Paired Comparison|| beta1|| beta2|| beta3 | ||

| Line 636: | Line 642: | ||

Step 4: Calculate the non-centrality parameter for each of the 6 solutions: | '''Step 4''': Calculate the non-centrality parameter for each of the 6 solutions: | ||

| Line 646: | Line 652: | ||

{} & {} & {} & {} & 16.875 & 8.4375 \\ | {} & {} & {} & {} & 16.875 & 8.4375 \\ | ||

{} & {} & {} & {} & {} & 16.875 \\ | {} & {} & {} & {} & {} & 16.875 \\ | ||

\end{matrix} \right)</math> | \end{matrix} \right)\,\!</math> | ||

The diagonal elements are the non-centrality parameter from each paired comparison. Denoting them as <math>{{\phi }_{i}}\,\!</math>, the power should be calculated using <math>\phi =\min \left( {{\phi }_{i}} \right)\,\!</math>. Since the design is balanced, we see here that all the <math>{{\phi }_{i}}\,\!</math> are the same. | |||
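Steps 2 to 4 can be checked numerically with the sketch below. It assumes the effect coding used in this section (so the inverse covariance matrix has <math>2n/{{\sigma }^{2}}</math> on the diagonal and <math>n/{{\sigma }^{2}}</math> elsewhere) and takes the worst-case <math>\beta </math> vectors from Case 1 and Case 2, scaled by <math>\Delta =15</math>. All function names are illustrative.

```python
from itertools import combinations


def balanced_inverse_covariance(r, n, sigma):
    """Sigma_beta^{-1} for a balanced r-level factor: 2n on the diagonal, n elsewhere."""
    size = r - 1
    return [[(2.0 * n if i == j else float(n)) / sigma ** 2 for j in range(size)]
            for i in range(size)]


def worst_case_beta(r, k, l, delta):
    """Optimal beta when levels k < l (1-indexed) carry the largest difference delta."""
    beta = [0.0] * (r - 1)
    beta[k - 1] = 0.5 * delta
    if l != r:                      # Case 1: the last level is not involved
        beta[l - 1] = -0.5 * delta
    return beta                     # Case 2 (l == r): only beta_k is non-zero


def quadratic_form(beta, s_inv):
    """phi = beta * Sigma_beta^{-1} * beta^T."""
    size = len(beta)
    return sum(beta[i] * s_inv[i][j] * beta[j]
               for i in range(size) for j in range(size))


r, n, sigma, delta = 4, 15, 10.0, 15.0
s_inv = balanced_inverse_covariance(r, n, sigma)
phis = {(k, l): quadratic_form(worst_case_beta(r, k, l, delta), s_inv)
        for k, l in combinations(range(1, r + 1), 2)}

for pair, value in phis.items():
    print(pair, round(value, 4))    # each of the 6 comparisons gives the same phi
```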

'''Step 5''': Calculate the critical F value. | |||

:<math>{{f}_{critical}}=F_{3,56}^{-1}(0.05)=2.7694\,\!</math> | |||

: | '''Step 6''': Calculate the power for this design using the non-central F distribution. | ||

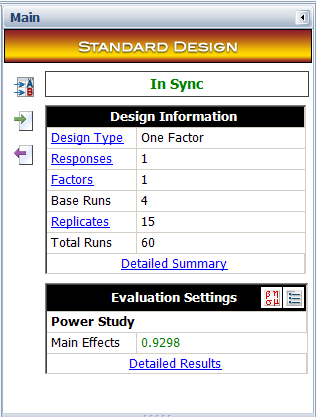

:<math>Power=1-F_{^{3,56}}^{{}}\left( {{f}_{critical}}|\phi =16.875 \right)=0.9298\,\!</math> | |||

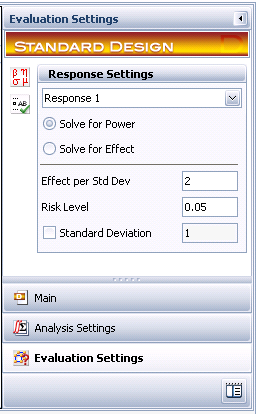

Since the power is greater than 80%, the sample size of 15 is sufficient. Otherwise, the sample size should be increased in order to achieve the desired power requirement. The settings and results in the DOE folio are given below. | |||
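The power in Step 6 is one minus the non-central F distribution function evaluated at the critical value. The sketch below evaluates it with the Python standard library only, writing the non-central F distribution as a Poisson-weighted sum of regularized incomplete beta functions. This is one common way to compute it and is not necessarily how the DOE folio does it; the helper names are illustrative, and the critical value is taken from Step 5 rather than recomputed.

```python
from math import exp, lgamma, log


def _beta_cf(a, b, x, max_iter=300, eps=1e-14):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        for aa in (m * (b - m) * x / ((qam + m2) * (a + m2)),
                   -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            step = d * c
            h *= step
        if abs(step - 1.0) < eps:
            break
    return h


def reg_inc_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = exp(lgamma(a + b) - lgamma(a) - lgamma(b)
                + a * log(x) + b * log(1.0 - x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, x) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - x) / b


def noncentral_f_cdf(f, d1, d2, phi, terms=200):
    """P(F <= f) for a non-central F with (d1, d2) d.f. and non-centrality phi > 0."""
    x = d1 * f / (d1 * f + d2)
    half = 0.5 * phi
    total = 0.0
    for j in range(terms):
        weight = exp(-half + j * log(half) - lgamma(j + 1))  # Poisson(j; phi/2)
        total += weight * reg_inc_beta(0.5 * d1 + j, 0.5 * d2, x)
    return total


def power(f_critical, d1, d2, phi):
    """Power = 1 - F_{d1,d2}(f_critical | phi)."""
    return 1.0 - noncentral_f_cdf(f_critical, d1, d2, phi)


# 4 level balanced example: f_critical = 2.7694, d.f. = (3, 56), phi = 16.875
print(round(power(2.7694, 3, 56, 16.875), 4))  # the text reports 0.9298
```

Because the function takes the critical value as an input, finding the sample size for a target power would also require the inverse of the central F distribution, which this sketch does not implement.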

[[Image:DesignEvluation_4.png|center|900px|Design evaluation settings.]] | |||

[[Image:DesignEvluation_5.png|center|316px|Design evaluation summary of results.]] | |||

===Power Calculation for Comparing Multiple Means: Unbalanced Designs=== | |||

If the design is not balanced, the non-centrality parameter does not have the simple expression of <math>\phi \text{=}\frac{\text{2}n}{{{\sigma }^{2}}}\left[ \sum\limits_{i=1}^{r-1}{\beta _{i}^{2}}+\sum\limits_{1\le i<j\le r-1}^{{}}{{{\beta }_{i}}{{\beta }_{j}}} \right]\,\!</math>, since <math>\Sigma _{\beta }^{-1}\,\!</math> will not have the simpler format seen in balanced designs. The optimization thus becomes more complicated. For each paired comparison, we need to solve an optimization problem by assuming this comparison has the largest difference. For example, assuming the ith comparison <math>\Delta =\underset{i<j}{\mathop{|{{\mu }_{i}}-{{\mu }_{j}}|}}\,\,\!</math> | |||

has the largest difference, we need to solve the following problem: | |||

:<math>\min \text{ }{{\phi }_{i}}=\beta \Sigma _{\beta }^{-1}{{\beta }^{T}}\,\!</math>

:<math>\text{subject to}\,\!</math>

:<math>\Delta =\underset{i<j}{\mathop{|{{\mu }_{i}}-{{\mu }_{j}}|}}\,\,\!</math>

:<math>\text{and}\,\!</math>

:<math>\left\{ \text{ }\underset{i<k,k\ne j}{\mathop{\text{ }\!\!|\!\!\text{ }{{\mu }_{i}}-{{\mu }_{k}}\text{ }\!\!|\!\!\text{ }}}\, \right\}\le \Delta \,\!</math>

In total, we need to solve <math>\left( \begin{matrix}
r \\
2 \\
\end{matrix} \right)\,\!</math> optimization problems and use the smallest <math>{{\phi }_{i}}\,\!</math> among all the solutions to calculate the power of the experiment. Clearly, the calculation will be very expensive.

In a DOE folio, instead of calculating the exact solution, we use the optimal <math>\beta \,\!</math> for a balanced design to calculate the approximated power for an unbalanced design. It can be seen that the optimal <math>\beta \,\!</math> for a balanced design also satisfies all the constraints for an unbalanced design. Therefore, the approximated power is never lower than the unknown true power when the design is unbalanced. | |||


: | '''A 3-level unbalanced design example: exact solution''' | ||

and | Assume an engineer wants to compare the performance of three different materials. 4 samples are available for material A, 5 samples for material B and 13 samples for material C. The responses of different materials follow a normal distribution with a standard deviation of | ||

<math>\sigma =1\,\!</math>. The engineer is required to calculate the power of detecting a difference of 1 | |||

<math>\sigma \,\!</math> | |||

among all the level means at a significance level of 0.05. | |||

From the design matrix of the test, <math>{{X}^{T}}X\,\!</math> and <math>\Sigma _{\beta }^{-1}\,\!</math> are calculated as: | |||

are calculated as: | |||

| Line 720: | Line 718: | ||

-9 & 17 & 13 \\ | -9 & 17 & 13 \\ | ||

-8 & 13 & 18 \\ | -8 & 13 & 18 \\ | ||

\end{matrix} \right)</math>, | \end{matrix} \right)\,\!</math>, | ||

:<math>\Sigma _{\beta }^{-1}=\left( \begin{matrix} | :<math>\Sigma _{\beta }^{-1}=\left( \begin{matrix} | ||

13.31818 & 9.727273 \\ | 13.31818 & 9.727273 \\ | ||

9.727273 & 15.09091 \\ | 9.727273 & 15.09091 \\ | ||

\end{matrix} \right)</math> | \end{matrix} \right)\,\!</math> | ||

There are 3 paired comparisons: <math>{{\mu }_{1}}-{{\mu }_{2}}\,\!</math>, <math>{{\mu }_{1}}-{{\mu }_{3}}\,\!</math> and <math>{{\mu }_{2}}-{{\mu }_{3}}\,\!</math>.

If the first comparison <math>{{\mu }_{1}}-{{\mu }_{2}}\,\!</math> | |||

has the largest level mean difference of 1 | has the largest level mean difference of 1 | ||

<math>\sigma </math>, then the optimization problem becomes: | <math>\sigma \,\!</math>, then the optimization problem becomes: | ||

| Line 747: | Line 737: | ||

& \min \text{ }{{\phi }_{1}}=\beta \Sigma _{\beta }^{-1}{{\beta }^{T}} \\ | & \min \text{ }{{\phi }_{1}}=\beta \Sigma _{\beta }^{-1}{{\beta }^{T}} \\ | ||

& subject\text{ }to\text{ }{{\beta }_{1}}-{{\beta }_{2}}=1;\text{ }\left| \text{2}{{\beta }_{1}}+{{\beta }_{2}} \right|\le 1;\text{ }\left| \text{2}{{\beta }_{2}}+{{\beta }_{1}} \right|\le 1 \\ | & subject\text{ }to\text{ }{{\beta }_{1}}-{{\beta }_{2}}=1;\text{ }\left| \text{2}{{\beta }_{1}}+{{\beta }_{2}} \right|\le 1;\text{ }\left| \text{2}{{\beta }_{2}}+{{\beta }_{1}} \right|\le 1 \\ | ||

\end{align}</math> | \end{align}\,\!</math> | ||

The optimal solution is <math>{{\beta }_{1}}=0.51852;\text{ }{{\beta }_{2}}=-0.48148\,\!</math>, and the optimal <math>{{\phi }_{1}}=2.22222\,\!</math>.

If the second comparison <math>{{\mu }_{1}}-{{\mu }_{3}}\,\!</math> has the largest level mean difference, then the optimization is similar to the above problem. The optimal solution is <math>{{\beta }_{1}}=0.588235\,\!</math>; <math>{{\beta }_{2}}=-0.17647\,\!</math>, and the optimal <math>{{\phi }_{2}}=3.058824\,\!</math>.

If the third comparison <math>{{\mu }_{2}}-{{\mu }_{3}}\,\!</math> has the largest level mean difference, then the optimal solution is <math>{{\beta }_{1}}=-0.14815\,\!</math>; <math>{{\beta }_{2}}=0.57407\,\!</math>, and the optimal <math>{{\phi }_{3}}=3.61111\,\!</math>.

From the definition of power, we know that the power of a design should be calculated using the smallest non-centrality parameter of all possible outcomes. In this example, it is | From the definition of power, we know that the power of a design should be calculated using the smallest non-centrality parameter of all possible outcomes. In this example, it is | ||

<math>\phi =\min \left( {{\phi }_{i}} \right)=2.22222</math>. Since the significance level is 0.05, the critical value for the F test is | <math>\phi =\min \left( {{\phi }_{i}} \right)=2.22222\,\!</math>. Since the significance level is 0.05, the critical value for the F test is | ||

<math>{{f}_{critical}}=F_{2,19}^{-1}(0.05)=3.52189</math>. The power for this example is: | <math>{{f}_{critical}}=F_{2,19}^{-1}(0.05)=3.52189\,\!</math>. The power for this example is: | ||

:<math>Power=1-{{F}_{2,19}}\left( {{f}_{critical}}|\phi =2.22222 \right)=0.2161</math> | :<math>Power=1-{{F}_{2,19}}\left( {{f}_{critical}}|\phi =2.22222 \right)=0.2161\,\!</math> | ||
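The three optimization problems in this example are small enough to solve in closed form. The sketch below is one possible approach and is not necessarily the method used in the DOE folio: it minimizes <math>\beta \Sigma _{\beta }^{-1}{{\beta }^{T}}</math> under the equality constraint alone (a Lagrange multiplier solution) and then verifies that the other two differences do not exceed <math>\Delta </math>. If that check failed, a general constrained solver would be needed. The function names are illustrative.

```python
def inverse_covariance(n1, n2, n3, sigma=1.0):
    """Sigma_beta^{-1} for (beta1, beta2) in a 3 level effect-coded model.

    Level 3 is coded -1 in both indicator columns. The intercept is removed
    from X^T X with a Schur complement.
    """
    n_total = n1 + n2 + n3
    s01, s02 = n1 - n3, n2 - n3                  # intercept x X1, intercept x X2
    s11, s12, s22 = n1 + n3, n3, n2 + n3         # X1 x X1, X1 x X2, X2 x X2
    a = (s11 - s01 * s01 / n_total) / sigma ** 2
    b = (s12 - s01 * s02 / n_total) / sigma ** 2
    d = (s22 - s02 * s02 / n_total) / sigma ** 2
    return [[a, b], [b, d]]


# Each level mean difference written as c1*beta1 + c2*beta2
CONTRASTS = {"mu1-mu2": (1.0, -1.0), "mu1-mu3": (2.0, 1.0), "mu2-mu3": (1.0, 2.0)}


def solve_equality_constrained(s_inv, c):
    """Minimize beta S beta^T subject to c . beta = 1; returns (beta, phi)."""
    (a, b), (_, d) = s_inv
    det = a * d - b * b
    u = [(d * c[0] - b * c[1]) / det, (-b * c[0] + a * c[1]) / det]  # S^{-1} c
    scale = c[0] * u[0] + c[1] * u[1]                                # c S^{-1} c^T
    return [u[0] / scale, u[1] / scale], 1.0 / scale


def exact_noncentrality(n1, n2, n3, sigma=1.0, delta=1.0, tol=1e-9):
    s_inv = inverse_covariance(n1, n2, n3, sigma)
    results = {}
    for name, c in CONTRASTS.items():
        beta, phi = solve_equality_constrained(s_inv, c)
        beta = [delta * value for value in beta]
        phi *= delta ** 2
        # The remaining differences must not exceed delta in absolute value
        for other in CONTRASTS.values():
            if abs(other[0] * beta[0] + other[1] * beta[1]) > delta + tol:
                raise ValueError("inequality constraint active for " + name)
        results[name] = (beta, phi)
    return results


results = exact_noncentrality(4, 5, 13)
phi_min = min(phi for _, phi in results.values())
for name, (beta, phi) in results.items():
    print(name, [round(value, 5) for value in beta], round(phi, 5))
```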

A 3-level unbalanced design example: approximated solution | '''A 3-level unbalanced design example: approximated solution''' | ||

For the above example, we can get the approximated power by using the optimal | For the above example, we can get the approximated power by using the optimal | ||

<math>\beta </math> | <math>\beta \,\!</math> | ||

of a balanced design. If the design is balanced, the optimal solution will be: | of a balanced design. If the design is balanced, the optimal solution will be: | ||

:{| style="text-align:center;" cellpadding="2" border="1" | |||

:{| style="text-align:center;" cellpadding="2" border="1" align="center" | |||

|- | |- | ||

|Solution ID|| Paired Comparison|| β1|| β2 | |Solution ID|| Paired Comparison|| β1|| β2 | ||

| Line 804: | Line 790: | ||

0.5 & 0 \\ | 0.5 & 0 \\ | ||

0 & 0.5 \\ | 0 & 0.5 \\ | ||

\end{matrix} \right)</math> | \end{matrix} \right)\,\!</math> | ||

Since the design is unbalanced, use | Since the design is unbalanced, use | ||

<math>\Sigma _{\beta }^{-1}</math> | <math>\Sigma _{\beta }^{-1}\,\!</math> | ||

from the above example to get: | from the above example to get: | ||

| Line 816: | Line 802: | ||

0.897727 & \text{3}.\text{329545} & \text{2}.\text{431818} \\ | 0.897727 & \text{3}.\text{329545} & \text{2}.\text{431818} \\ | ||

-1.34091 & \text{2}.\text{431818} & \text{3}.\text{772727} \\ | -1.34091 & \text{2}.\text{431818} & \text{3}.\text{772727} \\ | ||

\end{matrix} \right)</math> | \end{matrix} \right)\,\!</math> | ||

The smallest | The smallest | ||

<math>{{\phi }_{i}}</math> | <math>{{\phi }_{i}}\,\!</math> | ||

is 2.238636. For this example, it is very close to the exact solution 2.22222 given in the previous calculation. The approximated power is: | is 2.238636. For this example, it is very close to the exact solution 2.22222 given in the previous calculation. The approximated power is: | ||

:<math>Power=1-{{F}_{2,19}}\left( {{f}_{critical}}|\phi =2.238636 \right)=0.2174</math> | :<math>Power=1-{{F}_{2,19}}\left( {{f}_{critical}}|\phi =2.238636 \right)=0.2174\,\!</math> | ||

| Line 831: | Line 817: | ||

In practical cases, the above method can be applied to quickly check the power of a design. If the calculated power cannot meet the required value, the true power definitely will not meet the requirement, since the calculated power using this procedure is always equal to (for balanced designs) or larger than (for unbalanced designs) the true value. | In practical cases, the above method can be applied to quickly check the power of a design. If the calculated power cannot meet the required value, the true power definitely will not meet the requirement, since the calculated power using this procedure is always equal to (for balanced designs) or larger than (for unbalanced designs) the true value. | ||
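The approximated non-centrality parameters can be reproduced as follows. The sketch hard-codes <math>\Sigma _{\beta }^{-1}</math> from the unbalanced example and uses the balanced-design optimal <math>\beta </math> vectors for <math>\Delta =1</math>; the variable names are illustrative.

```python
# Sigma_beta^{-1} from the 3 level unbalanced example (sample sizes 4, 5, 13; sigma = 1)
S_INV = [[13.31818, 9.727273],
         [9.727273, 15.09091]]

# Optimal beta vectors of a balanced design for each paired comparison (delta = 1)
BALANCED_BETAS = {
    "mu1-mu2": (0.5, -0.5),
    "mu1-mu3": (0.5, 0.0),
    "mu2-mu3": (0.0, 0.5),
}


def quadratic_form(beta, s_inv):
    """phi = beta * Sigma_beta^{-1} * beta^T."""
    return sum(beta[i] * s_inv[i][j] * beta[j]
               for i in range(len(beta)) for j in range(len(beta)))


approx_phis = {name: quadratic_form(beta, S_INV)
               for name, beta in BALANCED_BETAS.items()}
phi_approx = min(approx_phis.values())

for name, value in approx_phis.items():
    print(name, round(value, 6))
print("smallest:", round(phi_approx, 6))  # the text reports 2.238636
```

Since these <math>\beta </math> vectors are feasible but not optimal for the unbalanced problem, the smallest value obtained here cannot be below the exact minimum of 2.22222.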

The result in DOE | The result in the DOE folio for this example is given as: | ||

:{| style="text-align:center;" cellpadding="2" border="1" | |||

:{| style="text-align:center;" cellpadding="2" border="1" align="center" | |||

|- | |- | ||

!bgcolor= #DDDDDD colspan="3" | Power Study | !bgcolor= #DDDDDD colspan="3" | Power Study | ||

| Line 844: | Line 831: | ||

|} | |} | ||

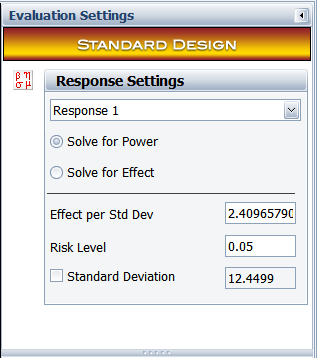

=Power Study for 2 Level Factorial Designs= | ==Power Study for 2 Level Factorial Designs== | ||

For 2 level factorial designs, each factor (effect) has only one coefficient. The linear regression model is: | For 2 level factorial designs, each factor (effect) has only one coefficient. The linear regression model is: | ||

:<math>{{Y}_{i}}={{\beta }_{0}}+{{\beta }_{1}}{{X}_{i,1}}+{{\beta }_{2}}{{X}_{i,2}}+{{\beta }_{3}}{{X}_{i,3}}+...+{{\beta }_{12}}{{X}_{i,1}}{{X}_{i,2}}+...+{{\varepsilon }_{i}}</math> | :<math>{{Y}_{i}}={{\beta }_{0}}+{{\beta }_{1}}{{X}_{i,1}}+{{\beta }_{2}}{{X}_{i,2}}+{{\beta }_{3}}{{X}_{i,3}}+...+{{\beta }_{12}}{{X}_{i,1}}{{X}_{i,2}}+...+{{\varepsilon }_{i}}\,\!</math> | ||

The model can include main effect terms and interaction effect terms. Each | The model can include main effect terms and interaction effect terms. Each | ||

<math>{{X}_{i}}</math> | <math>{{X}_{i}}\,\!</math> | ||

can be -1 (the low level) or +1 (the high level). The effect of a main effect term is defined as the difference of the mean value of Y at | can be -1 (the low level) or +1 (the high level). The effect of a main effect term is defined as the difference of the mean value of Y at | ||

<math>{{X}_{i}}=+1</math> | <math>{{X}_{i}}=+1\,\!</math> | ||

and | and | ||

<math>{{X}_{i}}=-1</math> | <math>{{X}_{i}}=-1\,\!</math> | ||

. Please notice that all the factor values here are coded values. For example, the effect of | . Please notice that all the factor values here are coded values. For example, the effect of | ||

<math>{{X}_{1}}</math> | <math>{{X}_{1}}\,\!</math> | ||

is defined by: | is defined by: | ||

:<math>Y\left( {{X}_{1}}=1 \right)-Y\left( {{X}_{1}}=-1 \right)=2{{\beta }_{1}}</math> | :<math>Y\left( {{X}_{1}}=1 \right)-Y\left( {{X}_{1}}=-1 \right)=2{{\beta }_{1}}\,\!</math> | ||

Similarly, the effect of an interaction term is also defined as the difference of the mean values of Y at the interaction terms of +1 and -1. For example, the effect of | Similarly, the effect of an interaction term is also defined as the difference of the mean values of Y at the interaction terms of +1 and -1. For example, the effect of | ||

<math>{{X}_{1}}{{X}_{2}}</math> | <math>{{X}_{1}}{{X}_{2}}\,\!</math> | ||

is: | is: | ||

:<math>Y\left( {{X}_{1}}{{X}_{2}}=1 \right)-Y\left( {{X}_{1}}{{X}_{2}}=-1 \right)=2{{\beta }_{12}}</math> | :<math>Y\left( {{X}_{1}}{{X}_{2}}=1 \right)-Y\left( {{X}_{1}}{{X}_{2}}=-1 \right)=2{{\beta }_{12}}\,\!</math> | ||

Therefore, if the effect of a term that we want to calculate the power for is | Therefore, if the effect of a term that we want to calculate the power for is | ||

<math>{{\Delta }_{i}}</math>, then the corresponding coefficient | <math>{{\Delta }_{i}}\,\!</math>, then the corresponding coefficient | ||

<math>{{\beta }_{i}}</math> | <math>{{\beta }_{i}}\,\!</math> | ||

must be | must be | ||

<math>{{\Delta }_{i}}/2</math> | <math>{{\Delta }_{i}}/2\,\!</math> | ||

. Therefore, the non-centrality parameter for each term in the model for a 2 level factorial design can be calculated as | . Therefore, the non-centrality parameter for each term in the model for a 2 level factorial design can be calculated as | ||

:<math>{{\phi }_{i}}=\frac{\beta _{i}^{2}}{Var({{\beta }_{i}})}=\frac{\Delta _{i}^{2}}{4Var({{\beta }_{i}})}</math> | :<math>{{\phi }_{i}}=\frac{\beta _{i}^{2}}{Var({{\beta }_{i}})}=\frac{\Delta _{i}^{2}}{4Var({{\beta }_{i}})}\,\!</math> | ||

Once | Once | ||

<math>{{\phi }_{i}}</math> | <math>{{\phi }_{i}}\,\!</math> | ||

is calculated, we can use it to calculate the power. If the design is balanced, the power of terms with the same order will be the same. In other words, all the main effects have the same power and all the k-way (k=2, 3, 4, …) interactions have the same power. | is calculated, we can use it to calculate the power. If the design is balanced, the power of terms with the same order will be the same. In other words, all the main effects have the same power and all the k-way (k=2, 3, 4, …) interactions have the same power. | ||

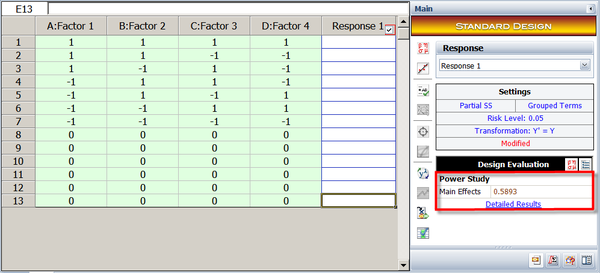

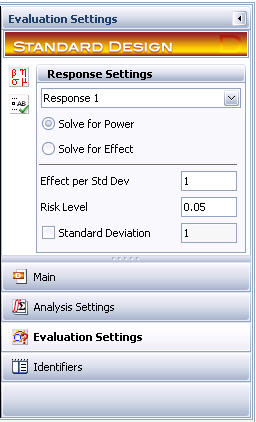

'''Example:''' Due to constraints on sample size and cost, an engineer can run only the following 13 tests for a 4-factor design:

:{| style="Width: 60%" cellpadding="2" border="1" align="center" text-align="center" | |||

|- | |- | ||

|Run|| A|| B|| C|| D | |Run|| A|| B|| C|| D | ||

| Line 924: | Line 912: | ||

Before running the tests, he wants to evaluate the power for each main effect. Assume the effect size of interest for the power calculation is 2<math>\sigma \,\!</math> and the significance level is 0.05.

Step 1: Calculate the variance and covariance matrix for the model coefficients. | '''Step 1''': Calculate the variance and covariance matrix for the model coefficients. | ||

The main effect-only model is: | The main effect-only model is: | ||

:<math>{{Y}_{i}}={{\beta }_{0}}+{{\beta }_{1}}{{A}_{i}}+{{\beta }_{2}}{{B}_{i}}+{{\beta }_{3}}{{C}_{i}}+{{\beta }_{4}}{{D}_{i}}+{{\varepsilon }_{i}}</math> | :<math>{{Y}_{i}}={{\beta }_{0}}+{{\beta }_{1}}{{A}_{i}}+{{\beta }_{2}}{{B}_{i}}+{{\beta }_{3}}{{C}_{i}}+{{\beta }_{4}}{{D}_{i}}+{{\varepsilon }_{i}}\,\!</math> | ||

| Line 937: | Line 925: | ||

:<math>Var(\beta )={{\sigma }^{2}}{{\left( X'X \right)}^{-1}}</math> | :<math>Var(\beta )={{\sigma }^{2}}{{\left( X'X \right)}^{-1}}\,\!</math> | ||

The value for <math>{{\left( X'X \right)}^{-1}}\,\!</math> is | |||

: | ::::{| style="text-align:center;" cellpadding="2" border="1" align="center" | ||

:{| style="text-align:center;" cellpadding="2" border="1" | |||

|- | |- | ||

| || beta0|| beta1 ||beta2 ||beta3|| beta4 | | || beta0|| beta1 ||beta2 ||beta3|| beta4 | ||

| Line 962: | Line 948: | ||

The diagonal elements are the variances for the coefficients. | The diagonal elements are the variances for the coefficients. | ||

Step 2: Calculate the non-centrality parameter for each term. In this example, all the main effect terms have the same variance, so they have the same non-centrality parameter value. | '''Step 2''': Calculate the non-centrality parameter for each term. In this example, all the main effect terms have the same variance, so they have the same non-centrality parameter value. | ||

:<math>{{\phi }_{i}}=\frac{\Delta _{i}^{2}}{4Var({{\beta }_{i}})}=\frac{1}{0.161458}=6.19355</math> | :<math>{{\phi }_{i}}=\frac{\Delta _{i}^{2}}{4Var({{\beta }_{i}})}=\frac{1}{0.161458}=6.19355\,\!</math> | ||

Step 3: Calculate the critical value for the F test. It is: | '''Step 3''': Calculate the critical value for the F test. It is: | ||

:<math>{{f}_{critical}}=F_{1,8}^{-1}(0.05)=5.31766</math> | :<math>{{f}_{critical}}=F_{1,8}^{-1}(0.05)=5.31766\,\!</math> | ||

Step 4: Calculate the power for each main effect term. For this example, the power is the same for all of them: | '''Step 4''': Calculate the power for each main effect term. For this example, the power is the same for all of them: | ||

:<math>Power=1-{{F}_{1,8}}\left( {{f}_{critical}}|\phi =6.19355 \right)=0.58926</math> | :<math>Power=1-{{F}_{1,8}}\left( {{f}_{critical}}|\phi =6.19355 \right)=0.58926\,\!</math> | ||

The settings and results in DOE | The settings and results in the DOE folio are given below. | ||

[[Image:design_eval3.png|center|256px|Evaluation settings.]] | |||

[[Image:DesignEvluation_6.png.png|center|600px|Evaluation results.]] | |||

In general, the calculated power for each term will be different for unbalanced designs. However, the above procedure can be applied to both balanced and unbalanced 2 level factorial designs. | In general, the calculated power for each term will be different for unbalanced designs. However, the above procedure can be applied to both balanced and unbalanced 2 level factorial designs. | ||

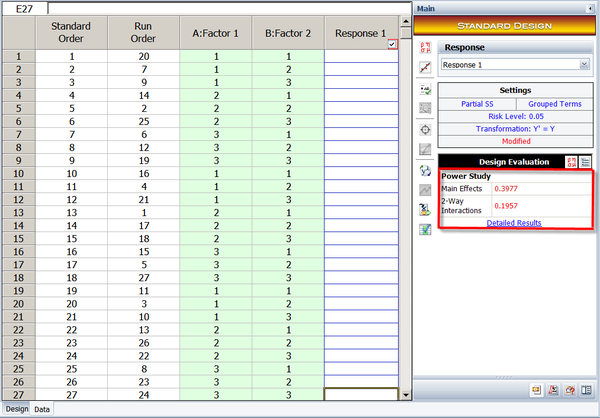

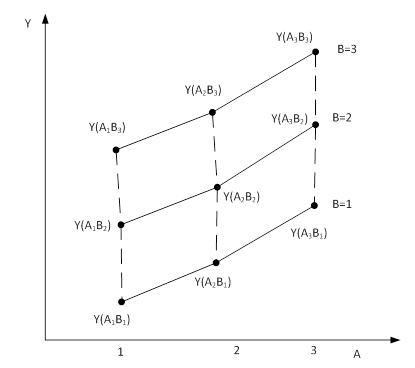

=Power Study for General Level Factorial Designs= | ==Power Study for General Level Factorial Designs== | ||

For a quantitative factor X with more than 2 levels, its effect is defined as: | For a quantitative factor X with more than 2 levels, its effect is defined as: | ||

:<math>Y\left( {{X}_{i}}=1 \right)-Y\left( {{X}_{i}}=-1 \right)=2{{\beta }_{i}}</math> | :<math>Y\left( {{X}_{i}}=1 \right)-Y\left( {{X}_{i}}=-1 \right)=2{{\beta }_{i}}\,\!</math> | ||

| Line 1,002: | Line 994: | ||

& Y={{\beta }_{0}}+{{\beta }_{1}}A[1]+{{\beta }_{2}}A[2]+{{\beta }_{3}}B[1]+{{\beta }_{4}}B[2]+{{\beta }_{11}}A[1]B[1] \\ | & Y={{\beta }_{0}}+{{\beta }_{1}}A[1]+{{\beta }_{2}}A[2]+{{\beta }_{3}}B[1]+{{\beta }_{4}}B[2]+{{\beta }_{11}}A[1]B[1] \\ | ||

& +{{\beta }_{12}}A[1]B[2]+{{\beta }_{21}}A[2]B[1]+{{\beta }_{22}}A[2]B[2] | & +{{\beta }_{12}}A[1]B[2]+{{\beta }_{21}}A[2]B[1]+{{\beta }_{22}}A[2]B[2] | ||

\end{align}</math> | \end{align}\,\!</math> | ||

:{| style="text-align:center;" cellpadding="2" border="1" | There are 2 regression terms for each main effect, and 4 regression terms for the interaction effect. We will use the above equation to explain how the power for the main effects and interaction effects is calculated in the DOE folio. The following balanced design is used for the calculation: | ||

:{| style="width: 60%; text-align:center;" cellpadding="2" border="1" align="center" | |||

|- | |- | ||

|Run|| A|| B|| Run|| A|| B | |Run|| A|| B|| Run|| A|| B | ||

| Line 1,039: | Line 1,033: | ||

|} | |} | ||

===Power Study for Main Effects=== | |||

==Power Study for Main Effects== | |||

Let’s use factor A to show how the power is defined and calculated for the main effects. For the above design, if we ignore factor B, then it becomes a 1 factor design with 9 samples at each level. Therefore, the same linear regression model and power calculation method as discussed for 1 factor designs can be used to calculate the power for the main effects for this multiple level factorial design. | Let’s use factor A to show how the power is defined and calculated for the main effects. For the above design, if we ignore factor B, then it becomes a 1 factor design with 9 samples at each level. Therefore, the same linear regression model and power calculation method as discussed for 1 factor designs can be used to calculate the power for the main effects for this multiple level factorial design. | ||

Since A has 3 levels, it has 3 paired comparisons: | Since A has 3 levels, it has 3 paired comparisons: | ||

<math>{{\Delta }_{12}}={{\mu }_{1}}-{{\mu }_{2}}</math>; | <math>{{\Delta }_{12}}={{\mu }_{1}}-{{\mu }_{2}}\,\!</math>; | ||

<math>{{\Delta }_{13}}={{\mu }_{1}}-{{\mu }_{3}}</math> | <math>{{\Delta }_{13}}={{\mu }_{1}}-{{\mu }_{3}}\,\!</math> | ||

and | and | ||

<math>{{\Delta }_{23}}={{\mu }_{2}}-{{\mu }_{3}}</math>. | <math>{{\Delta }_{23}}={{\mu }_{2}}-{{\mu }_{3}}\,\!</math>. | ||

<math>{{\mu }_{i}}</math> | <math>{{\mu }_{i}}\,\!</math> | ||

is the average of the responses at the ith level. However, these three contrasts are not independent, since | is the average of the responses at the ith level. However, these three contrasts are not independent, since | ||

<math>{{\Delta }_{12}}={{\Delta }_{13}}-{{\Delta }_{23}}</math>. We are interested in the largest difference among all the contrasts. Let | <math>{{\Delta }_{12}}={{\Delta }_{13}}-{{\Delta }_{23}}\,\!</math>. We are interested in the largest difference among all the contrasts. Let | ||

<math>\Delta =\max ({{\Delta }_{ij}})</math>. Power is defined as the probability of detecting a given | <math>\Delta =\max ({{\Delta }_{ij}})\,\!</math>. Power is defined as the probability of detecting a given | ||

<math>\Delta </math> in an experiment. Using the linear regression equation, we get: | <math>\Delta \,\!</math> in an experiment. Using the linear regression equation, we get: | ||

:<math>{{\Delta }_{12}}={{\beta }_{1}}-{{\beta }_{2}};\text{ }{{\Delta }_{13}}=2{{\beta }_{1}}+{{\beta }_{2}};\text{ }{{\Delta }_{23}}=2{{\beta }_{2}}+{{\beta }_{1}}\,\!</math> | |||

Just as for the 1 factor design, we know the optimal solutions are: | Just as for the 1 factor design, we know the optimal solutions are: | ||

<math>{{\beta }_{1}}=0.5\Delta ,{{\beta }_{2}}=-0.5\Delta </math> | <math>{{\beta }_{1}}=0.5\Delta ,{{\beta }_{2}}=-0.5\Delta \,\!</math> | ||

when | when | ||

<math>{{\Delta }_{12}}</math> | <math>{{\Delta }_{12}}\,\!</math> | ||

is the largest difference | is the largest difference | ||

<math>\Delta </math>; | <math>\Delta \,\!</math>; | ||

<math>{{\beta }_{1}}=0.5\Delta ,{{\beta }_{2}}=0</math> | <math>{{\beta }_{1}}=0.5\Delta ,{{\beta }_{2}}=0\,\!</math> | ||

when | when | ||

<math>{{\Delta }_{13}}</math> | <math>{{\Delta }_{13}}\,\!</math> | ||

is the largest difference | is the largest difference | ||

<math>\Delta </math> | <math>\Delta \,\!</math> | ||

and | and | ||

<math>{{\beta }_{1}}=0,{{\beta }_{2}}=0.5\Delta </math> | <math>{{\beta }_{1}}=0,{{\beta }_{2}}=0.5\Delta \,\!</math> | ||

when | when | ||

<math>{{\Delta }_{23}}</math> | <math>{{\Delta }_{23}}\,\!</math> | ||

is the largest difference | is the largest difference | ||

<math>\Delta </math>. For each of the solutions, a non-centrality parameter can be calculated using | <math>\Delta \,\!</math>. For each of the solutions, a non-centrality parameter can be calculated using | ||

<math>\text{ }{{\phi }_{i}}=\beta \Sigma _{\beta }^{-1}{{\beta }^{T}}</math>. Here | <math>\text{ }{{\phi }_{i}}=\beta \Sigma _{\beta }^{-1}{{\beta }^{T}}\,\!</math>. Here | ||

<math>\beta =\left( {{\beta }_{1}},{{\beta }_{2}} \right)</math>, and | <math>\beta =\left( {{\beta }_{1}},{{\beta }_{2}} \right)\,\!</math>, and | ||

<math>\Sigma _{\beta }^{-1}</math> | <math>\Sigma _{\beta }^{-1}\,\!</math> | ||

is the inverse of the variance and covariance matrix obtained from the linear regression model when all the terms are included. | is the inverse of the variance and covariance matrix obtained from the linear regression model when all the terms are included. | ||

For this example, we have the coefficient matrix for the optimal solution: | For this example, we have the coefficient matrix for the optimal solution: | ||

:<math>B=\Delta \left( \begin{matrix} | :<math>B=\Delta \left( \begin{matrix} | ||

| Line 1,082: | Line 1,078: | ||

0.5 & 0 \\ | 0.5 & 0 \\ | ||

0 & 0.5 \\ | 0 & 0.5 \\ | ||

\end{matrix} \right)</math> | \end{matrix} \right)\,\!</math> | ||

The standard variance matrix | The standard variance matrix | ||

<math>{{\left( X'X \right)}^{-1}}</math> | <math>{{\left( X'X \right)}^{-1}}\,\!</math> | ||

for all the coefficients is: | for all the coefficients is: | ||

:{| style="text-align:center;" cellpadding="2" border="1" | |||

:{| style="text-align:center;" cellpadding="2" border="1" align="center" | |||

|- | |- | ||

|I|| A[1]|| A[2]|| B[1]|| B[2]|| A[1]B[1]|| A[1]B[2]|| A[2]B[1]|| A[2]B[2] | |I|| A[1]|| A[2]|| B[1]|| B[2]|| A[1]B[1]|| A[1]B[2]|| A[2]B[1]|| A[2]B[2] | ||

| Line 1,115: | Line 1,113: | ||

From the above table, we know the variance and covariance matrix | From the above table, we know the variance and covariance matrix | ||

<math>\Sigma _{\beta }^{{}}</math> | <math>\Sigma _{\beta }^{{}}\,\!</math> | ||

of A is: | of A is: | ||

| Line 1,122: | Line 1,120: | ||

0.0741 & -0.0370 \\ | 0.0741 & -0.0370 \\ | ||

-0.0370 & 0.0741 \\ | -0.0370 & 0.0741 \\ | ||

\end{matrix} \right)</math> | \end{matrix} \right)\,\!</math> | ||

Its inverse | Its inverse | ||

<math>\Sigma _{\beta }^{-1}</math> | <math>\Sigma _{\beta }^{-1}\,\!</math> | ||

for factor A is: | for factor A is: | ||

| Line 1,135: | Line 1,133: | ||

18 & 9 \\ | 18 & 9 \\ | ||

9 & 18 \\ | 9 & 18 \\ | ||

\end{matrix} \right)</math> | \end{matrix} \right)\,\!</math> | ||
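The two matrices for factor A can be checked by building the regression matrix directly. This is a sketch under stated assumptions: the balanced design is taken to be a 3 x 3 full factorial with 3 replicates (27 runs, which matches 9 samples per level and 18 error degrees of freedom), and each 3-level factor uses effect coding with level 3 coded as (-1, -1), which is the coding implied by <math>{{\Delta }_{13}}=2{{\beta }_{1}}+{{\beta }_{2}}\,\!</math>. The names below are illustrative.

```python
import itertools
import numpy as np

# Effect coding for a 3-level factor: level -> (X[1], X[2]).
# Assumed coding; level 3 is the reference level coded (-1, -1).
CODE = {1: (1, 0), 2: (0, 1), 3: (-1, -1)}
REPLICATES = 3  # assumed: 9 cells x 3 replicates = 27 runs

rows = []
for a, b in itertools.product((1, 2, 3), repeat=2):
    a1, a2 = CODE[a]
    b1, b2 = CODE[b]
    # Columns: I, A[1], A[2], B[1], B[2], A[1]B[1], A[1]B[2], A[2]B[1], A[2]B[2]
    row = [1, a1, a2, b1, b2, a1 * b1, a1 * b2, a2 * b1, a2 * b2]
    rows.extend([row] * REPLICATES)
X = np.array(rows, dtype=float)

# Standard variance matrix (X'X)^-1, i.e. Var(beta) with sigma = 1
cov = np.linalg.inv(X.T @ X)

# Block for the two A terms, and its inverse
sigma_A = cov[1:3, 1:3]
sigma_A_inv = np.linalg.inv(sigma_A)

print(np.round(sigma_A, 4))
print(np.round(sigma_A_inv, 4))
```

Under these assumptions the A block equals 2/27 on the diagonal and -1/27 off the diagonal, which rounds to the 0.0741 and -0.0370 shown above.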

Assuming that the | Assuming that the | ||

<math>\Delta </math> | <math>\Delta \,\!</math> | ||

we are interested in is | |||

<math>\sigma </math>, then the calculated non-centrality parameters are: | <math>\sigma \,\!</math>, then the calculated non-centrality parameters are: | ||

:<math>\Phi =</math> | :<math>\Phi = \beta \Sigma _{\beta }^{-1}\beta '{{\Delta }^{2}}\,\!</math> | ||

= | = | ||

4.5 2.25 -2.25 | |||

2.25 4.5 2.25 | :{|style="text-align:center; left-margin: 100px;" cellpadding="2" border="1" | ||

-2.25 2.25 4.5 | | 4.5 || 2.25 || -2.25 | ||

|- | |||

| 2.25 || 4.5 || 2.25 | |||

|- | |||

| -2.25 || 2.25 || 4.5 | |||

|- | |||

|} | |||

The power is calculated using the smallest value on the diagonal of the above matrix. Since the design is balanced, all 3 non-centrality parameters are the same in this example (i.e., they are 4.5). | The power is calculated using the smallest value on the diagonal of the above matrix. Since the design is balanced, all 3 non-centrality parameters are the same in this example (i.e., they are 4.5). | ||
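The matrix of non-centrality parameters can be verified with a few lines of NumPy. This sketch assumes <math>\Delta =\sigma \,\!</math> (so both are set to 1) and takes the rows of the coefficient matrix from the three optimal solutions listed earlier; the variable names are illustrative.

```python
import numpy as np

# Rows are the optimal (beta_1, beta_2) for Delta_12, Delta_13, Delta_23,
# each expressed as a multiple of Delta.
B = np.array([
    [0.5, -0.5],
    [0.5,  0.0],
    [0.0,  0.5],
])

# Inverse variance-covariance matrix for factor A (sigma = 1)
sigma_inv = np.array([
    [18.0,  9.0],
    [ 9.0, 18.0],
])

delta = 1.0  # Delta in units of sigma

# Phi = Delta^2 * B * Sigma^-1 * B'
Phi = delta**2 * B @ sigma_inv @ B.T

# The power calculation uses the smallest diagonal element
phi_min = Phi.diagonal().min()

print(Phi)
print(phi_min)
```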

| Line 1,155: | Line 1,161: | ||

:<math>{{f}_{critical}}=F_{2,18}^{-1}(0.05)=3.55456</math> | :<math>{{f}_{critical}}=F_{2,18}^{-1}(0.05)=3.55456\,\!</math> | ||

Note that for the F distribution, the first degree of freedom is 2 (the number of terms for factor A in the regression model) and the second degree of freedom is 18 (the degrees of freedom of error). | Note that for the F distribution, the first degree of freedom is 2 (the number of terms for factor A in the regression model) and the second degree of freedom is 18 (the degrees of freedom of error). | ||

The power for main effect A is: | The power for main effect A is: | ||

:<math>Power=1-{{F}_{2,18}}\left( {{f}_{critical}}|\phi =4.5 \right)=0.397729</math> | :<math>Power=1-{{F}_{2,18}}\left( {{f}_{critical}}|\phi =4.5 \right)=0.397729\,\!</math> | ||

[[Image:design_eval5.png|center|256px|Evaluation settings.]] | |||

[[Image:DesignEvluation_7.png|center|600px|Evaluation results.]] | |||

If the | If the | ||

<math>\Delta </math> | <math>\Delta \,\!</math> | ||

we are interested in is 2 | we are interested in is 2 | ||

<math>\sigma </math>, then the non-centrality parameter will be 18. The power for main effect A is: | <math>\sigma \,\!</math>, then the non-centrality parameter will be 18. The power for main effect A is: | ||

<math>Power=1-{{F}_{2,18}}\left( {{f}_{critical}}|\phi =18 \right)=0.9457</math> | <math>Power=1-{{F}_{2,18}}\left( {{f}_{critical}}|\phi =18 \right)=0.9457\,\!</math> | ||

The power is greater for a larger | The power is greater for a larger | ||

<math>\Delta </math>. The above calculation can also be used for unbalanced designs to obtain an approximate power. | <math>\Delta \,\!</math>. The above calculation can also be used for unbalanced designs to obtain an approximate power. | ||
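The two power values for main effect A can be reproduced as follows. This is a minimal sketch, assuming SciPy is available and that <code>scipy.stats.ncf</code> uses the same non-centrality parameter as <math>\phi \,\!</math> in the text; the helper <code>power_main_effect_a</code> is a hypothetical name introduced only for illustration.

```python
from scipy import stats

alpha = 0.05
dfn, dfd = 2, 18       # 2 regression terms for A, 18 error degrees of freedom
PHI_AT_ONE_SIGMA = 4.5 # smallest diagonal element of Phi when Delta = sigma

# Critical value: upper 5% point of the central F(2, 18)
f_crit = stats.f.ppf(1 - alpha, dfn, dfd)

def power_main_effect_a(delta_over_sigma):
    """Power to detect a largest difference Delta, given in units of sigma."""
    # The non-centrality parameter scales with Delta squared
    phi = PHI_AT_ONE_SIGMA * delta_over_sigma**2
    return stats.ncf.sf(f_crit, dfn, dfd, phi)

for d in (1.0, 2.0):
    print(f"Delta = {d} sigma -> power = {power_main_effect_a(d):.4f}")
```

Because the non-centrality parameter grows with the square of <math>\Delta \,\!</math>, doubling the difference of interest raises it from 4.5 to 18.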

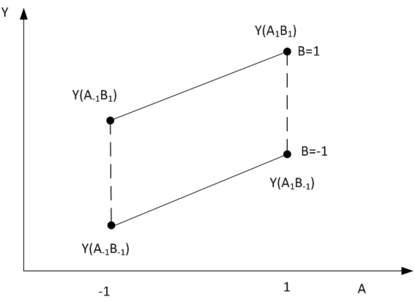

==Power Study for Interaction Effects== | ===Power Study for Interaction Effects=== | ||

First, we need to define what an “interaction effect” is. From the discussion for 2 level factorial designs, we know the interaction effect AB is defined by: | First, we need to define what an “interaction effect” is. From the discussion for 2 level factorial designs, we know the interaction effect AB is defined by: | ||

:<math>Y\left( AB=1 \right)-Y\left( AB=-1 \right)=2{{\beta }_{12}}</math> | |||

:<math>Y\left( AB=1 \right)-Y\left( AB=-1 \right)=2{{\beta }_{12}}\,\!</math> | |||

It is the difference between the average response at AB=1 and AB=-1. The above equation also can be written as: | It is the difference between the average response at AB=1 and AB=-1. The above equation also can be written as: | ||

:<math>\frac{Y\left( {{A}_{1}}{{B}_{1}} \right)+Y\left( {{A}_{-1}}{{B}_{-1}} \right)}{2}-\frac{Y\left( {{A}_{-1}}{{B}_{1}} \right)+Y\left( {{A}_{1}}{{B}_{-1}} \right)}{2}</math> | :<math>\frac{Y\left( {{A}_{1}}{{B}_{1}} \right)+Y\left( {{A}_{-1}}{{B}_{-1}} \right)}{2}-\frac{Y\left( {{A}_{-1}}{{B}_{1}} \right)+Y\left( {{A}_{1}}{{B}_{-1}} \right)}{2}\,\!</math> | ||

or: | or: | ||

:<math>\begin{align} | :<math>\begin{align} | ||

& \frac{Y\left( {{A}_{1}}{{B}_{1}} \right)-Y\left( {{A}_{1}}{{B}_{-1}} \right)}{2}-\frac{Y\left( {{A}_{-1}}{{B}_{1}} \right)-Y\left( {{A}_{-1}}{{B}_{-1}} \right)}{2} \\ | & \frac{Y\left( {{A}_{1}}{{B}_{1}} \right)-Y\left( {{A}_{1}}{{B}_{-1}} \right)}{2}-\frac{Y\left( {{A}_{-1}}{{B}_{1}} \right)-Y\left( {{A}_{-1}}{{B}_{-1}} \right)}{2} \\ | ||

& =\frac{\text{Effect of B at A=1}}{2}-\frac{\text{Effect of B at A=-1}}{2} \\ | & =\frac{\text{Effect of B at A=1}}{2}-\frac{\text{Effect of B at A=-1}}{2} \\ | ||

\end{align}</math> | \end{align}\,\!</math> | ||
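The equivalence of the two forms above can be illustrated with a small numeric check. The four cell means below are made-up values used only for illustration, and the function names are hypothetical.

```python
def interaction_from_products(y):
    """Average response at AB = 1 minus average response at AB = -1."""
    return (y[(1, 1)] + y[(-1, -1)]) / 2 - (y[(-1, 1)] + y[(1, -1)]) / 2

def interaction_from_conditional_effects(y):
    """Half the effect of B at A = 1 minus half the effect of B at A = -1."""
    effect_b_at_a_high = y[(1, 1)] - y[(1, -1)]
    effect_b_at_a_low = y[(-1, 1)] - y[(-1, -1)]
    return effect_b_at_a_high / 2 - effect_b_at_a_low / 2

# Hypothetical cell means, keyed by the coded levels (A, B)
cell_means = {(1, 1): 20.0, (1, -1): 12.0, (-1, 1): 11.0, (-1, -1): 9.0}

ab_effect = interaction_from_products(cell_means)
ab_effect_alt = interaction_from_conditional_effects(cell_means)
print(ab_effect, ab_effect_alt)  # both forms give 3.0 for these values
```

For these values the interaction effect is 3.0, so the corresponding coefficient <math>{{\beta }_{12}}\,\!</math> would be 1.5.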