Crow-AMSAA (NHPP)

Revision as of 20:36, 18 December 2012

Dr. Larry H. Crow [17] noted that the Duane Model could be stochastically represented as a Weibull process, which allows statistical procedures to be used when applying this model to reliability growth. This statistical extension became what is known as the Crow-AMSAA (NHPP) model. The method was first developed at the U.S. Army Materiel Systems Analysis Activity (AMSAA). It is frequently used on systems when usage is measured on a continuous scale. It can also be applied to the analysis of one-shot items when there is high reliability and a large number of trials.

Test programs are generally conducted on a phase by phase basis. The Crow-AMSAA model is designed for tracking the reliability within a test phase and not across test phases. A development testing program may consist of several separate test phases. If corrective actions are introduced during a particular test phase, then this type of testing and the associated data are appropriate for analysis by the Crow-AMSAA model. The model analyzes the reliability growth progress within each test phase and can aid in determining the following:

- Reliability of the configuration currently on test

- Reliability of the configuration on test at the end of the test phase

- Expected reliability if the test time for the phase is extended

- Growth rate

- Confidence intervals

- Applicable goodness-of-fit tests

Crow-AMSAA (NHPP) Model

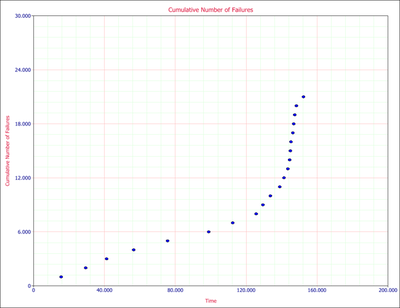

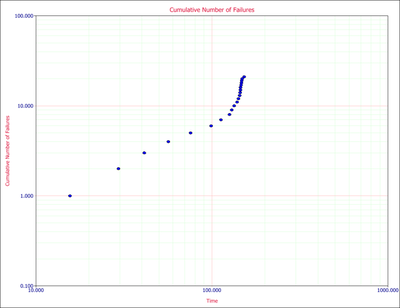

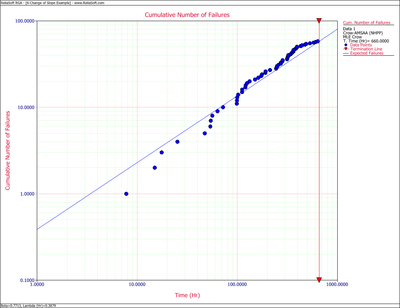

The reliability growth pattern for the Crow-AMSAA model is exactly the same pattern as for the Duane postulate, that is, the cumulative number of failures is linear when plotted on ln-ln scale. Unlike the Duane postulate, the Crow-AMSAA model is statistically based. Under the Duane postulate, the failure rate is linear on ln-ln scale. However, for the Crow-AMSAA model statistical structure, the failure intensity of the underlying non-homogeneous Poisson process (NHPP) is linear when plotted on ln-ln scale.

Let [math]\displaystyle{ N(t)\,\! }[/math] be the cumulative number of failures observed in cumulative test time [math]\displaystyle{ t\,\! }[/math], and let [math]\displaystyle{ \rho (t)\,\! }[/math] be the failure intensity for the Crow-AMSAA model. Under the NHPP model, [math]\displaystyle{ \rho (t)\Delta t\,\! }[/math] is approximately the probability of a failure occurring over the interval [math]\displaystyle{ [t,t+\Delta t]\,\! }[/math] for small [math]\displaystyle{ \Delta t\,\! }[/math]. In addition, the expected number of failures experienced over the test interval [math]\displaystyle{ [0,T]\,\! }[/math] under the Crow-AMSAA model is given by:

- [math]\displaystyle{ E[N(T)]=\int_{0}^{T}\rho (t)dt\,\! }[/math]

The Crow-AMSAA model assumes that [math]\displaystyle{ \rho (T)\,\! }[/math] may be approximated by the Weibull failure rate function:

- [math]\displaystyle{ \rho (T)=\frac{\beta }{{{\eta }^{\beta }}}{{T}^{\beta -1}}\,\! }[/math]

Therefore, if [math]\displaystyle{ \lambda =\tfrac{1}{{{\eta }^{\beta }}},\,\! }[/math] the intensity function, [math]\displaystyle{ \rho (T),\,\! }[/math] or the instantaneous failure intensity, [math]\displaystyle{ {{\lambda }_{i}}(T)\,\! }[/math], is defined as:

- [math]\displaystyle{ {{\lambda }_{i}}(T)=\lambda \beta {{T}^{\beta -1}},\text{with }T\gt 0,\text{ }\lambda \gt 0\text{ and }\beta \gt 0\,\! }[/math]

In the special case of exponential failure times, there is no growth and the failure intensity, [math]\displaystyle{ \rho (t)\,\! }[/math], is equal to [math]\displaystyle{ \lambda \,\! }[/math]. In this case, the expected number of failures is given by:

- [math]\displaystyle{ \begin{align} E[N(T)]= & \int_{0}^{T}\rho (t)dt \\ = & \lambda T \end{align}\,\! }[/math]

Under the general reliability growth case, for the plot to be linear on ln-ln scale, the expected number of failures must be equal to:

- [math]\displaystyle{ \begin{align} E[N(T)]= & \int_{0}^{T}\rho (t)dt \\ = & \lambda {{T}^{\beta }} \end{align}\,\! }[/math]

To put a statistical structure on the reliability growth process, consider again the special case of no growth. In this case the number of failures, [math]\displaystyle{ N(T),\,\! }[/math] experienced during the testing over [math]\displaystyle{ [0,T]\,\! }[/math] is random. The number of failures, [math]\displaystyle{ N(T),\,\! }[/math] is said to follow the homogeneous (constant) Poisson process with mean [math]\displaystyle{ \lambda T\,\! }[/math], and its probability distribution is given by:

- [math]\displaystyle{ \underset{}{\overset{}{\mathop{\Pr }}}\,[N(T)=n]=\frac{{{(\lambda T)}^{n}}{{e}^{-\lambda T}}}{n!};\text{ }n=0,1,2,\ldots \,\! }[/math]

The Crow-AMSAA model generalizes this no growth case to allow for reliability growth due to corrective actions. This generalization keeps the Poisson distribution for the number of failures but allows for the expected number of failures, [math]\displaystyle{ E[N(T)],\,\! }[/math] to be linear when plotted on ln-ln scale. The Crow-AMSAA model lets [math]\displaystyle{ E[N(T)]=\lambda {{T}^{\beta }}\,\! }[/math]. The probability that the number of failures, [math]\displaystyle{ N(T),\,\! }[/math] will be equal to [math]\displaystyle{ n\,\! }[/math] under growth is then given by the Poisson distribution:

- [math]\displaystyle{ \underset{}{\overset{}{\mathop{\Pr }}}\,[N(T)=n]=\frac{{{(\lambda {{T}^{\beta }})}^{n}}{{e}^{-\lambda {{T}^{\beta }}}}}{n!};\text{ }n=0,1,2,\ldots \,\! }[/math]

This is the general growth situation, and the number of failures, [math]\displaystyle{ N(T)\,\! }[/math], follows a non-homogeneous Poisson process. The exponential, "no growth" homogeneous Poisson process is a special case of the non-homogeneous Crow-AMSAA model. This is reflected in the Crow-AMSAA model parameter where [math]\displaystyle{ \beta =1\,\! }[/math].
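The Poisson probabilities above can be evaluated directly. The following is a minimal Python sketch; the function name and the parameter values are illustrative assumptions, not values taken from this article.

```python
import math

def prob_n_failures(n, lam, beta, T):
    """P[N(T) = n] for a Poisson count with mean lam * T**beta."""
    mean = lam * T ** beta
    return mean ** n * math.exp(-mean) / math.factorial(n)

# beta = 1 reduces to the homogeneous (no growth) case with mean lam * T.
p_no_growth = prob_n_failures(2, lam=0.01, beta=1.0, T=100.0)

# beta < 1 gives the growth case; lam and beta here are illustrative only.
p_growth = prob_n_failures(2, lam=0.4, beta=0.6, T=100.0)

# The probabilities over n = 0, 1, 2, ... sum to 1 (truncated at n = 59).
total = sum(prob_n_failures(k, lam=0.4, beta=0.6, T=100.0) for k in range(60))

print(p_no_growth, p_growth, total)
```

Setting `beta=1.0` reproduces the homogeneous Poisson formula, which is one quick way to check an implementation of the general case.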

The cumulative failure rate, [math]\displaystyle{ {{\lambda }_{c}}\,\! }[/math], is:

- [math]\displaystyle{ \begin{align} {{\lambda }_{c}}=\lambda {{T}^{\beta -1}} \end{align}\,\! }[/math]

The cumulative [math]\displaystyle{ MTB{{F}_{c}}\,\! }[/math] is:

- [math]\displaystyle{ MTB{{F}_{c}}=\frac{1}{\lambda }{{T}^{1-\beta }}\,\! }[/math]

As mentioned above, the local pattern for reliability growth within a test phase is the same as the growth pattern observed by Duane. The Duane [math]\displaystyle{ MTB{{F}_{c}}\,\! }[/math] is equal to:

- [math]\displaystyle{ MTB{{F}_{{{c}_{DUANE}}}}=b{{T}^{\alpha }}\,\! }[/math]

And the Duane cumulative failure rate, [math]\displaystyle{ {{\lambda }_{c}}\,\! }[/math], is:

- [math]\displaystyle{ {{\lambda }_{{{c}_{DUANE}}}}=\frac{1}{b}{{T}^{-\alpha }}\,\! }[/math]

Thus a relationship between Crow-AMSAA parameters and Duane parameters can be developed, such that:

- [math]\displaystyle{ \begin{align} {{b}_{DUANE}}= & \frac{1}{{{\lambda }_{AMSAA}}} \\ {{\alpha }_{DUANE}}= & 1-{{\beta }_{AMSAA}} \end{align}\,\! }[/math]

Note that these relationships are not absolute. They change according to how the parameters (slopes, intercepts, etc.) are defined when the analysis of the data is performed. For the exponential case, [math]\displaystyle{ \beta =1\,\! }[/math], then [math]\displaystyle{ {{\lambda }_{i}}(T)=\lambda \,\! }[/math], a constant. For [math]\displaystyle{ \beta \gt 1\,\! }[/math], [math]\displaystyle{ {{\lambda }_{i}}(T)\,\! }[/math] is increasing. This indicates a deterioration in system reliability. For [math]\displaystyle{ \beta \lt 1\,\! }[/math], [math]\displaystyle{ {{\lambda }_{i}}(T)\,\! }[/math] is decreasing. This is indicative of reliability growth. Note that the model assumes a Poisson process with the Weibull intensity function, not the Weibull distribution. Therefore, statistical procedures for the Weibull distribution do not apply for this model. The parameter [math]\displaystyle{ \lambda \,\! }[/math] is called a scale parameter because it depends upon the unit of measurement chosen for [math]\displaystyle{ T\,\! }[/math], while [math]\displaystyle{ \beta \,\! }[/math] is the shape parameter that characterizes the shape of the graph of the intensity function.

The total number of failures, [math]\displaystyle{ N(T)\,\! }[/math], is a random variable with Poisson distribution and mean [math]\displaystyle{ \theta (T)=\lambda {{T}^{\beta }}\,\! }[/math]. Therefore, the probability that exactly [math]\displaystyle{ n\,\! }[/math] failures occur by time [math]\displaystyle{ T\,\! }[/math] is:

- [math]\displaystyle{ P[N(T)=n]=\frac{{{[\theta (T)]}^{n}}{{e}^{-\theta (T)}}}{n!}\,\! }[/math]

The number of failures occurring in the interval from [math]\displaystyle{ {{T}_{1}}\,\! }[/math] to [math]\displaystyle{ {{T}_{2}}\,\! }[/math] is a random variable having a Poisson distribution with mean:

- [math]\displaystyle{ \theta ({{T}_{2}})-\theta ({{T}_{1}})=\lambda (T_{2}^{\beta }-T_{1}^{\beta })\,\! }[/math]

The number of failures in any interval is statistically independent of the number of failures in any interval that does not overlap the first interval. At time [math]\displaystyle{ {{T}_{0}}\,\! }[/math], the failure intensity is [math]\displaystyle{ {{\lambda }_{i}}({{T}_{0}})=\lambda \beta T_{0}^{\beta -1}\,\! }[/math]. If improvements are not made to the system after time [math]\displaystyle{ {{T}_{0}}\,\! }[/math], it is assumed that failures would continue to occur at the constant rate [math]\displaystyle{ {{\lambda }_{i}}({{T}_{0}})=\lambda \beta T_{0}^{\beta -1}\,\! }[/math]. Future failures would then follow an exponential distribution with mean [math]\displaystyle{ m({{T}_{0}})=\tfrac{1}{\lambda \beta T_{0}^{\beta -1}}\,\! }[/math]. The instantaneous MTBF of the system at time [math]\displaystyle{ T\,\! }[/math] is:

- [math]\displaystyle{ m(T)=\frac{1}{\lambda \beta {{T}^{\beta -1}}}\,\! }[/math]
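The model quantities defined in this section are simple power-law functions of [math]\displaystyle{ T\,\! }[/math]. A short Python sketch follows; the function names are assumptions chosen for illustration, and the parameter values are the estimates from the example later in this article.

```python
def cumulative_failure_intensity(T, lam, beta):
    """lambda_c(T) = lam * T**(beta - 1)."""
    return lam * T ** (beta - 1)

def instantaneous_failure_intensity(T, lam, beta):
    """lambda_i(T) = lam * beta * T**(beta - 1)."""
    return lam * beta * T ** (beta - 1)

def cumulative_mtbf(T, lam, beta):
    """MTBF_c(T) = T**(1 - beta) / lam."""
    return 1.0 / cumulative_failure_intensity(T, lam, beta)

def instantaneous_mtbf(T, lam, beta):
    """m(T) = 1 / (lam * beta * T**(beta - 1))."""
    return 1.0 / instantaneous_failure_intensity(T, lam, beta)

def expected_failures_between(T1, T2, lam, beta):
    """Mean number of failures in [T1, T2]: lam * (T2**beta - T1**beta)."""
    return lam * (T2 ** beta - T1 ** beta)

lam, beta, T = 0.4239, 0.6142, 620.0
print(instantaneous_failure_intensity(T, lam, beta))  # failures per hour
print(instantaneous_mtbf(T, lam, beta))               # hours
print(expected_failures_between(0.0, T, lam, beta))   # close to the 22 observed failures
```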

Note About Applicability

The Duane and Crow-AMSAA models are the most frequently used reliability growth models. Their relationship comes from the fact that both make use of the underlying observed linear relationship between the logarithm of cumulative MTBF and the logarithm of cumulative test time. However, the Duane model does not provide a capability to test whether the change in MTBF observed over time is significantly different from what might be seen due to random error between phases. The Crow-AMSAA model allows for such assessments. Also, the Crow-AMSAA model allows for development of hypothesis testing procedures to determine growth presence in the data (where [math]\displaystyle{ \beta \lt 1\,\! }[/math] indicates that there is growth in MTBF, [math]\displaystyle{ \beta =1\,\! }[/math] indicates a constant MTBF and [math]\displaystyle{ \beta \gt 1\,\! }[/math] indicates a decreasing MTBF). Additionally, the Crow-AMSAA model views the process of reliability growth as probabilistic, while the Duane model views the process as deterministic.

Parameter Estimation

Maximum Likelihood Estimators

The probability density function (pdf) of the [math]\displaystyle{ {{i}^{th}}\,\! }[/math] event given that the [math]\displaystyle{ {{(i-1)}^{th}}\,\! }[/math] event occurred at [math]\displaystyle{ {{T}_{i-1}}\,\! }[/math] is:

- [math]\displaystyle{ f({{T}_{i}}|{{T}_{i-1}})=\frac{\beta }{\eta }{{\left( \frac{{{T}_{i}}}{\eta } \right)}^{\beta -1}}\cdot {{e}^{-\tfrac{1}{{{\eta }^{\beta }}}\left( T_{i}^{\beta }-T_{i-1}^{\beta } \right)}}\,\! }[/math]

The likelihood function is:

- [math]\displaystyle{ L={{\lambda }^{n}}{{\beta }^{n}}{{e}^{-\lambda {{T}^{*\beta }}}}\underset{i=1}{\overset{n}{\mathop \prod }}\,T_{i}^{\beta -1}\,\! }[/math]

where [math]\displaystyle{ {{T}^{*}}\,\! }[/math] is the termination time and is given by:

- [math]\displaystyle{ {{T}^{*}}=\left\{ \begin{matrix} {{T}_{n}}\text{ if the test is failure terminated} \\ T\gt {{T}_{n}}\text{ if the test is time terminated} \\ \end{matrix} \right\}\,\! }[/math]

Taking the natural log on both sides:

- [math]\displaystyle{ \Lambda =n\ln \lambda +n\ln \beta -\lambda {{T}^{*\beta }}+(\beta -1)\underset{i=1}{\overset{n}{\mathop \sum }}\,\ln {{T}_{i}}\,\! }[/math]

And differentiating with respect to [math]\displaystyle{ \lambda \,\! }[/math] yields:

- [math]\displaystyle{ \frac{\partial \Lambda }{\partial \lambda }=\frac{n}{\lambda }-{{T}^{*\beta }}\,\! }[/math]

Set equal to zero and solve for [math]\displaystyle{ \lambda \,\! }[/math] :

- [math]\displaystyle{ \widehat{\lambda }=\frac{n}{{{T}^{*\beta }}}\,\! }[/math]

Now differentiate with respect to [math]\displaystyle{ \beta \,\! }[/math] :

- [math]\displaystyle{ \frac{\partial \Lambda }{\partial \beta }=\frac{n}{\beta }-\lambda {{T}^{*\beta }}\ln {{T}^{*}}+\underset{i=1}{\overset{n}{\mathop \sum }}\,\ln {{T}_{i}}\,\! }[/math]

Set equal to zero and solve for [math]\displaystyle{ \beta \,\! }[/math] :

- [math]\displaystyle{ \widehat{\beta }=\frac{n}{n\ln {{T}^{*}}-\underset{i=1}{\overset{n}{\mathop{\sum }}}\,\ln {{T}_{i}}}\,\! }[/math]

Biasing and Unbiasing of Beta

The equation above returns the biased estimate of [math]\displaystyle{ \beta \,\! }[/math]. The unbiased estimate of [math]\displaystyle{ \beta \,\! }[/math] can be calculated by using the following relationships. For time terminated data (meaning that the test ends after a specified test time):

- [math]\displaystyle{ \bar{\beta }=\frac{N-1}{N}\hat{\beta }\,\! }[/math]

For failure terminated data (meaning that the test ends after a specified number of failures):

- [math]\displaystyle{ \bar{\beta }=\frac{N-2}{N}\hat{\beta }\,\! }[/math]
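As a small illustration, the two unbiasing factors can be wrapped in one helper. This is a sketch only; the function name and the boolean flag are assumptions made for the example.

```python
def unbiased_beta(beta_hat, n, failure_terminated):
    """Apply the unbiasing factor to the MLE of beta.

    (n - 2) / n for failure terminated data,
    (n - 1) / n for time terminated data.
    """
    factor = (n - 2) / n if failure_terminated else (n - 1) / n
    return factor * beta_hat

# Illustrative input: beta_hat = 0.6142 with n = 22 failures.
print(unbiased_beta(0.6142, 22, failure_terminated=True))
print(unbiased_beta(0.6142, 22, failure_terminated=False))
```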

Crow-AMSAA Model Example

Two prototypes of a system were tested simultaneously with design changes incorporated during the test. The following table presents the data collected over the entire test. Find the Crow-AMSAA parameters and the intensity function using maximum likelihood estimators.

| Failure Number | Failed Unit | Test Time Unit 1 (hours) | Test Time Unit 2 (hours) | Total Test Time (hours) | [math]\displaystyle{ \ln{(T)}\,\! }[/math] |

|---|---|---|---|---|---|

| 1 | 1 | 1.0 | 1.7 | 2.7 | 0.99325 |

| 2 | 1 | 7.3 | 3.0 | 10.3 | 2.33214 |

| 3 | 2 | 8.7 | 3.8 | 12.5 | 2.52573 |

| 4 | 2 | 23.3 | 7.3 | 30.6 | 3.42100 |

| 5 | 2 | 46.4 | 10.6 | 57.0 | 4.04305 |

| 6 | 1 | 50.1 | 11.2 | 61.3 | 4.11578 |

| 7 | 1 | 57.8 | 22.2 | 80.0 | 4.38203 |

| 8 | 2 | 82.1 | 27.4 | 109.5 | 4.69592 |

| 9 | 2 | 86.6 | 38.4 | 125.0 | 4.82831 |

| 10 | 1 | 87.0 | 41.6 | 128.6 | 4.85671 |

| 11 | 2 | 98.7 | 45.1 | 143.8 | 4.96842 |

| 12 | 1 | 102.2 | 65.7 | 167.9 | 5.12337 |

| 13 | 1 | 139.2 | 90.0 | 229.2 | 5.43459 |

| 14 | 1 | 166.6 | 130.1 | 296.7 | 5.69272 |

| 15 | 2 | 180.8 | 139.8 | 320.6 | 5.77019 |

| 16 | 1 | 181.3 | 146.9 | 328.2 | 5.79362 |

| 17 | 2 | 207.9 | 158.3 | 366.2 | 5.90318 |

| 18 | 2 | 209.8 | 186.9 | 396.7 | 5.98318 |

| 19 | 2 | 226.9 | 194.2 | 421.1 | 6.04287 |

| 20 | 1 | 232.2 | 206.0 | 438.2 | 6.08268 |

| 21 | 2 | 267.5 | 233.7 | 501.2 | 6.21701 |

| 22 | 2 | 330.1 | 289.9 | 620.0 | 6.42972 |

Solution

For the failure terminated test, [math]\displaystyle{ {\beta}\,\! }[/math] is:

- [math]\displaystyle{ \begin{align} \widehat{\beta }&=\frac{n}{n\ln {{T}^{*}}-\underset{i=1}{\overset{n}{\mathop{\sum }}}\,\ln {{T}_{i}}} \\ &=\frac{22}{22\ln 620-\underset{i=1}{\overset{22}{\mathop{\sum }}}\,\ln {{T}_{i}}} \\ \end{align}\,\! }[/math]

- where:

- [math]\displaystyle{ \underset{i=1}{\overset{22}{\mathop \sum }}\,\ln {{T}_{i}}=105.6355\,\! }[/math]

- Then:

- [math]\displaystyle{ \widehat{\beta }=\frac{22}{22\ln 620-105.6355}=0.6142\,\! }[/math]

And for [math]\displaystyle{ {\lambda}\,\! }[/math] :

- [math]\displaystyle{ \begin{align} \widehat{\lambda }&=\frac{n}{{{T}^{*\beta }}} \\ & =\frac{22}{{{620}^{0.6142}}}=0.4239 \\ \end{align}\,\! }[/math]

Therefore, [math]\displaystyle{ {{\lambda }_{i}}(T)\,\! }[/math] becomes:

- [math]\displaystyle{ \begin{align} {{\widehat{\lambda }}_{i}}(T)= & 0.4239\cdot 0.6142\cdot {{620}^{-0.3858}} \\ = & 0.0217906\frac{\text{failures}}{\text{hr}} \end{align}\,\! }[/math]
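The closed-form estimators can be checked numerically against the table. The sketch below is a minimal Python version, assuming the total test times from the table are used as the cumulative failure times; the function name is hypothetical.

```python
import math

# Total test time (hours) at each failure, taken from the example table.
times = [2.7, 10.3, 12.5, 30.6, 57.0, 61.3, 80.0, 109.5, 125.0, 128.6,
         143.8, 167.9, 229.2, 296.7, 320.6, 328.2, 366.2, 396.7, 421.1,
         438.2, 501.2, 620.0]

def crow_amsaa_mle(failure_times, termination_time):
    """Closed-form MLEs of beta and lambda for individual failure times."""
    n = len(failure_times)
    sum_log = sum(math.log(t) for t in failure_times)
    beta = n / (n * math.log(termination_time) - sum_log)
    lam = n / termination_time ** beta
    return beta, lam

# Failure terminated test: T* equals the last failure time.
t_end = times[-1]
beta_hat, lam_hat = crow_amsaa_mle(times, t_end)
intensity_at_end = lam_hat * beta_hat * t_end ** (beta_hat - 1)

print(round(beta_hat, 4), round(lam_hat, 4), round(intensity_at_end, 5))
```

Small differences in the last digit relative to the hand calculation can arise because the hand calculation rounds [math]\displaystyle{ \widehat{\beta }\,\! }[/math] before computing [math]\displaystyle{ \widehat{\lambda }\,\! }[/math].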

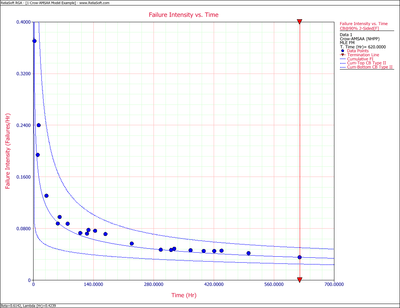

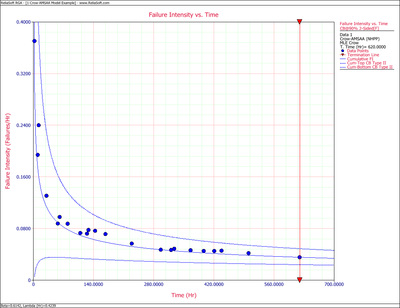

The next figure shows the plot of the failure rate. If no further changes are made, the estimated MTBF is [math]\displaystyle{ \tfrac{1}{0.0217906}\,\! }[/math] or 46 hours.

Confidence Bounds

The RGA software provides two methods to estimate the confidence bounds for the Crow-AMSAA (NHPP) model when applied to developmental testing data. The Fisher Matrix approach is based on the Fisher Information Matrix and is commonly employed in the reliability field. The Crow bounds were developed by Dr. Larry Crow. See the Crow-AMSAA Confidence Bounds chapter for details on how the confidence bounds are calculated.

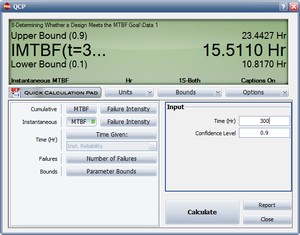

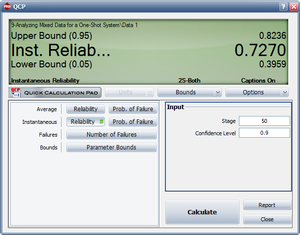

Confidence Bounds Example

Calculate the 90% 2-sided confidence bounds on the cumulative and instantaneous failure intensity for the data from the Crow-AMSAA Model Example given above.

Solution

Fisher Matrix Bounds

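Using [math]\displaystyle{ \widehat{\beta }\,\! }[/math] and [math]\displaystyle{ \widehat{\lambda }\,\! }[/math] estimated in the Crow-AMSAA example, the second partial derivatives of the log-likelihood function, [math]\displaystyle{ \Lambda \,\! }[/math], which are needed for the Fisher Matrix bounds, are: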

- [math]\displaystyle{ \begin{align} \frac{{{\partial }^{2}}\Lambda }{\partial {{\lambda }^{2}}} = & -\frac{22}{{{0.4239}^{2}}}=-122.43 \\ \frac{{{\partial }^{2}}\Lambda }{\partial {{\beta }^{2}}} = & -\frac{22}{{{0.6142}^{2}}}-0.4239\cdot {{620}^{0.6142}}{{(\ln 620)}^{2}}=-967.68 \\ \frac{{{\partial }^{2}}\Lambda }{\partial \lambda \partial \beta } = & -{{620}^{0.6142}}\ln 620=-333.64 \end{align}\,\! }[/math]

The Fisher Matrix then becomes:

- [math]\displaystyle{ \begin{align} \begin{bmatrix}122.43 & 333.64\\ 333.64 & 967.68\end{bmatrix}^{-1} & = \begin{bmatrix}Var(\hat{\lambda}) & Cov(\hat{\beta},\hat{\lambda})\\ Cov(\hat{\beta},\hat{\lambda}) & Var(\hat{\beta})\end{bmatrix} \\ & = \begin{bmatrix} 0.13519969 & -0.046614609\\ -0.046614609 & 0.017105343 \end{bmatrix} \end{align}\,\! }[/math]
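The matrix inversion can be reproduced with a few lines of Python. This is a sketch that plugs the rounded estimates from the example into the second partial derivatives and inverts the resulting 2x2 matrix by hand; the variable names are illustrative.

```python
import math

n, lam, beta, T = 22, 0.4239, 0.6142, 620.0

# Second partial derivatives of the log-likelihood at the estimates.
d2_lam = -n / lam ** 2
d2_beta = -n / beta ** 2 - lam * T ** beta * math.log(T) ** 2
d2_cross = -(T ** beta) * math.log(T)

# Fisher matrix entries are the negatives of the second partials.
a, b, d = -d2_lam, -d2_cross, -d2_beta

# Inverse of the symmetric matrix [[a, b], [b, d]].
det = a * d - b * b
var_lam = d / det
var_beta = a / det
cov_beta_lam = -b / det

print(var_lam, var_beta, cov_beta_lam)
```

Because the determinant is a difference of two large, nearly equal numbers, the variances are sensitive to how many digits of [math]\displaystyle{ \widehat{\beta }\,\! }[/math] and [math]\displaystyle{ \widehat{\lambda }\,\! }[/math] are carried.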

For [math]\displaystyle{ T=620\,\! }[/math] hours, the partial derivatives of the cumulative and instantaneous failure intensities are:

- [math]\displaystyle{ \begin{align} \frac{\partial {{\lambda }_{c}}(T)}{\partial \beta }= & \widehat{\lambda }{{T}^{\widehat{\beta }-1}}\ln (T) \\ = & 0.4239\cdot {{620}^{-0.3858}}\ln 620 \\ = & 0.22811336 \\ \frac{\partial {{\lambda }_{c}}(T)}{\partial \lambda }= & {{T}^{\widehat{\beta }-1}} \\ = & {{620}^{-0.3858}} \\ = & 0.083694185 \end{align}\,\! }[/math]

- [math]\displaystyle{ \begin{align} \frac{\partial {{\lambda }_{i}}(T)}{\partial \beta }= & \widehat{\lambda }{{T}^{\widehat{\beta }-1}}+\widehat{\lambda }\widehat{\beta }{{T}^{\widehat{\beta }-1}}\ln T \\ = & 0.4239\cdot {{620}^{-0.3858}}+0.4239\cdot 0.6142\cdot {{620}^{-0.3858}}\ln 620 \\ = & 0.17558519 \end{align}\,\! }[/math]

- [math]\displaystyle{ \begin{align} \frac{\partial {{\lambda }_{i}}(T)}{\partial \lambda }= & \widehat{\beta }{{T}^{\widehat{\beta }-1}} \\ = & 0.6142\cdot {{620}^{-0.3858}} \\ = & 0.051404969 \end{align}\,\! }[/math]

Therefore, the variances become:

- [math]\displaystyle{ \begin{align} Var(\hat{\lambda_{c}}(T)) & = 0.22811336^{2}\cdot 0.017105343\ + 0.083694185^{2} \cdot 0.13519969\ -2\cdot 0.22811336\cdot 0.083694185\cdot 0.046614609 \\ & = 0.00005721408 \\ Var(\hat{\lambda_{i}}(T)) & = 0.17558519^{2}\cdot 0.017105343\ + 0.051404969^{2}\cdot 0.13519969\ -2\cdot 0.17558519\cdot 0.051404969\cdot 0.046614609 \\ &= 0.0000431393 \end{align}\,\! }[/math]

The cumulative and instantaneous failure intensities at [math]\displaystyle{ T=620\,\! }[/math] hours are:

- [math]\displaystyle{ \begin{align} {{\lambda }_{c}}(T)= & 0.03548 \\ {{\lambda }_{i}}(T)= & 0.02179 \end{align}\,\! }[/math]

So, at the 90% confidence level and for [math]\displaystyle{ T=620\,\! }[/math] hours, the Fisher Matrix confidence bounds for the cumulative failure intensity are:

- [math]\displaystyle{ \begin{align} {{[{{\lambda }_{c}}(T)]}_{L}}= & 0.02499 \\ {{[{{\lambda }_{c}}(T)]}_{U}}= & 0.05039 \end{align}\,\! }[/math]

The confidence bounds for the instantaneous failure intensity are:

- [math]\displaystyle{ \begin{align} {{[{{\lambda }_{i}}(T)]}_{L}}= & 0.01327 \\ {{[{{\lambda }_{i}}(T)]}_{U}}= & 0.03579 \end{align}\,\! }[/math]
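The bounds above can be sketched in Python as follows. The sketch assumes the bounds take the form of the estimate multiplied or divided by [math]\displaystyle{ {{e}^{z\sqrt{Var}/\text{estimate}}}\,\! }[/math], which reproduces the numbers quoted here, and uses the standard normal quantile for a 90% 2-sided interval. Helper names are hypothetical.

```python
import math
from statistics import NormalDist

lam, beta, T = 0.4239, 0.6142, 620.0
var_lam, var_beta, cov = 0.13519969, 0.017105343, -0.046614609
z = NormalDist().inv_cdf(0.95)  # about 1.645 for 90% 2-sided bounds

def delta_variance(d_beta, d_lam):
    """Variance of a function of (beta, lambda) from its partial derivatives."""
    return d_beta ** 2 * var_beta + d_lam ** 2 * var_lam + 2 * d_beta * d_lam * cov

def log_normal_bounds(estimate, variance):
    """Lower and upper bounds that keep a positive quantity positive."""
    w = math.exp(z * math.sqrt(variance) / estimate)
    return estimate / w, estimate * w

power = T ** (beta - 1)
lam_c = lam * power
lam_i = lam * beta * power

var_c = delta_variance(lam * power * math.log(T), power)
var_i = delta_variance(lam * power + lam * beta * power * math.log(T), beta * power)

lam_c_low, lam_c_up = log_normal_bounds(lam_c, var_c)
lam_i_low, lam_i_up = log_normal_bounds(lam_i, var_i)
print(lam_c_low, lam_c_up, lam_i_low, lam_i_up)
```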

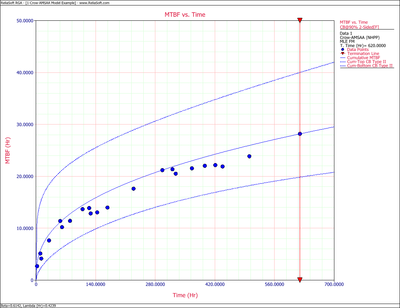

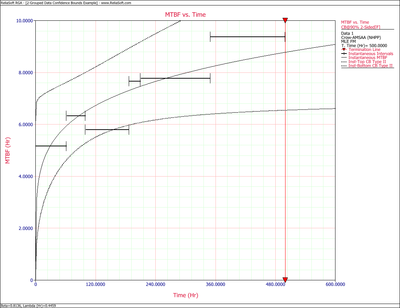

The following figures display plots of the Fisher Matrix confidence bounds for the cumulative and instantaneous failure intensity, respectively.

Crow Bounds

The Crow confidence bounds for the cumulative failure intensity at the 90% confidence level and for [math]\displaystyle{ T=620\,\! }[/math] hours are:

- [math]\displaystyle{ \begin{align} {{[{{\lambda }_{c}}(T)]}_{L}} = & \frac{\chi _{\tfrac{\alpha }{2},2N}^{2}}{2\cdot T} \\ = & \frac{29.787476}{2\cdot 620} \\ = & 0.02402 \\ {{[{{\lambda }_{c}}(T)]}_{U}} = & \frac{\chi _{1-\tfrac{\alpha }{2},2N}^{2}}{2\cdot T} \\ = & \frac{60.4810}{2\cdot 620} \\ = & 0.04878 \end{align}\,\! }[/math]

The Crow confidence bounds for the instantaneous failure intensity at the 90% confidence level and for [math]\displaystyle{ T=620\,\! }[/math] hours are:

- [math]\displaystyle{ \begin{align} {{[{{\lambda }_{i}}(T)]}_{L}} = & \frac{1}{{{[MTB{{F}_{i}}]}_{U}}} \\ = & \frac{1}{MTB{{F}_{i}}\cdot {{\Pi }_{2}}} \\ = & 0.01179 \end{align}\,\! }[/math]

- [math]\displaystyle{ \begin{align} {{[{{\lambda }_{i}}(T)]}_{U}}= & \frac{1}{{{[MTB{{F}_{i}}]}_{L}}} \\ = & \frac{1}{MTB{{F}_{i}}\cdot {{\Pi }_{1}}} \\ = & 0.03253 \end{align}\,\! }[/math]

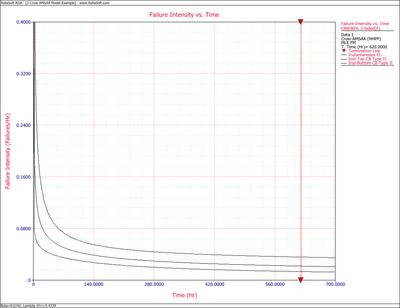

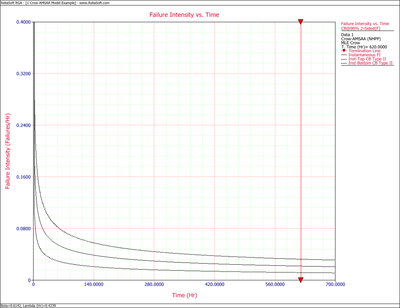

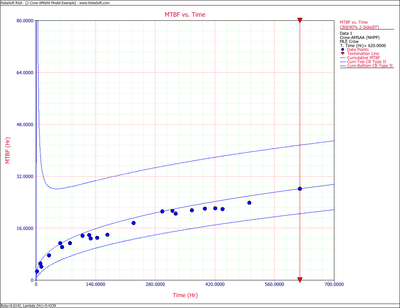

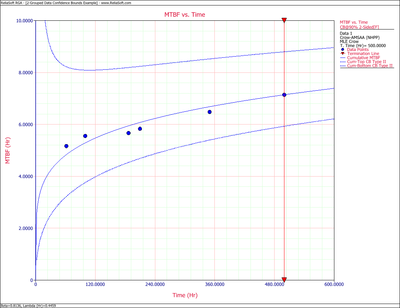

The following figures display plots of the Crow confidence bounds for the cumulative and instantaneous failure intensity, respectively.

Another Confidence Bounds Example

Calculate the confidence bounds on the cumulative and instantaneous MTBF for the data from the Crow-AMSAA Model Example given above.

Solution

Fisher Matrix Bounds

From the previous example:

- [math]\displaystyle{ \begin{align} Var(\widehat{\lambda }) = & 0.13519969 \\ Var(\widehat{\beta }) = & 0.017105343 \\ Cov(\widehat{\beta },\widehat{\lambda }) = & -0.046614609 \end{align}\,\! }[/math]

And for [math]\displaystyle{ T=620\,\! }[/math] hours, the partial derivatives of the cumulative and instantaneous MTBF are:

- [math]\displaystyle{ \begin{align} \frac{\partial {{m}_{c}}(T)}{\partial \beta }= & -\frac{1}{\widehat{\lambda }}{{T}^{1-\widehat{\beta }}}\ln T \\ = & -\frac{1}{0.4239}{{620}^{0.3858}}\ln 620 \\ = & -181.23135 \\ \frac{\partial {{m}_{c}}(T)}{\partial \lambda } = & -\frac{1}{{{\widehat{\lambda }}^{2}}}{{T}^{1-\widehat{\beta }}} \\ = & -\frac{1}{{{0.4239}^{2}}}{{620}^{0.3858}} \\ = & -66.493299 \\ \frac{\partial {{m}_{i}}(T)}{\partial \beta } = & -\frac{1}{\widehat{\lambda }{{\widehat{\beta }}^{2}}}{{T}^{1-\widehat{\beta }}}-\frac{1}{\widehat{\lambda }\widehat{\beta }}{{T}^{1-\widehat{\beta }}}\ln T \\ = & -\frac{1}{0.4239\cdot {{0.6142}^{2}}}{{620}^{0.3858}}-\frac{1}{0.4239\cdot 0.6142}{{620}^{0.3858}}\ln 620 \\ = & -369.78634 \\ \frac{\partial {{m}_{i}}(T)}{\partial \lambda } = & -\frac{1}{{{\widehat{\lambda }}^{2}}\widehat{\beta }}{{T}^{1-\widehat{\beta }}} \\ = & -\frac{1}{{{0.4239}^{2}}\cdot 0.6142}\cdot {{620}^{0.3858}} \\ = & -108.26001 \end{align}\,\! }[/math]

Therefore, the variances become:

- [math]\displaystyle{ \begin{align} Var({{\widehat{m}}_{c}}(T)) = & {{\left( -181.23135 \right)}^{2}}\cdot 0.017105343+{{\left( -66.493299 \right)}^{2}}\cdot 0.13519969 \\ & -2\cdot \left( -181.23135 \right)\cdot \left( -66.493299 \right)\cdot 0.046614609 \\ = & 36.113376 \end{align}\,\! }[/math]

- [math]\displaystyle{ \begin{align} Var({{\widehat{m}}_{i}}(T)) = & {{\left( -369.78634 \right)}^{2}}\cdot 0.017105343+{{\left( -108.26001 \right)}^{2}}\cdot 0.13519969 \\ & -2\cdot \left( -369.78634 \right)\cdot \left( -108.26001 \right)\cdot 0.046614609 \\ = & 191.33709 \end{align}\,\! }[/math]

So, at the 90% confidence level and [math]\displaystyle{ T=620\,\! }[/math] hours, the Fisher Matrix confidence bounds are:

- [math]\displaystyle{ \begin{align} {{[{{m}_{c}}(T)]}_{L}} = & {{{\hat{m}}}_{c}}(T){{e}^{-{{z}_{\alpha /2}}\sqrt{Var({{{\hat{m}}}_{c}}(T))}/{{{\hat{m}}}_{c}}(T)}} \\ = & 19.84581 \\ {{[{{m}_{c}}(T)]}_{U}} = & {{{\hat{m}}}_{c}}(T){{e}^{{{z}_{\alpha /2}}\sqrt{Var({{{\hat{m}}}_{c}}(T))}/{{{\hat{m}}}_{c}}(T)}} \\ = & 40.01927 \end{align}\,\! }[/math]

- [math]\displaystyle{ \begin{align} {{[{{m}_{i}}(T)]}_{L}} = & {{{\hat{m}}}_{i}}(T){{e}^{-{{z}_{\alpha /2}}\sqrt{Var({{{\hat{m}}}_{i}}(T))}/{{{\hat{m}}}_{i}}(T)}} \\ = & 27.94261 \\ {{[{{m}_{i}}(T)]}_{U}} = & {{{\hat{m}}}_{i}}(T){{e}^{{{z}_{\alpha /2}}\sqrt{Var({{{\hat{m}}}_{i}}(T))}/{{{\hat{m}}}_{i}}(T)}} \\ = & 75.34193 \end{align}\,\! }[/math]
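For the MTBF, the same steps can be checked numerically using the partial derivatives of [math]\displaystyle{ {{m}_{c}}(T)\,\! }[/math] and [math]\displaystyle{ {{m}_{i}}(T)\,\! }[/math]. The Python sketch below uses the rounded estimates and the variances from the previous example; the variable names are illustrative assumptions.

```python
import math
from statistics import NormalDist

lam, beta, T = 0.4239, 0.6142, 620.0
var_lam, var_beta, cov = 0.13519969, 0.017105343, -0.046614609
z = NormalDist().inv_cdf(0.95)  # 90% 2-sided

m_c = T ** (1 - beta) / lam   # cumulative MTBF
m_i = m_c / beta              # instantaneous MTBF

# Partial derivatives with respect to beta and lambda.
dmc_dbeta, dmc_dlam = -m_c * math.log(T), -m_c / lam
dmi_dbeta = -m_i / beta - m_i * math.log(T)
dmi_dlam = -m_i / lam

def variance(d_beta, d_lam):
    return d_beta ** 2 * var_beta + d_lam ** 2 * var_lam + 2 * d_beta * d_lam * cov

var_mc = variance(dmc_dbeta, dmc_dlam)
var_mi = variance(dmi_dbeta, dmi_dlam)

w_c = math.exp(z * math.sqrt(var_mc) / m_c)
w_i = math.exp(z * math.sqrt(var_mi) / m_i)
mc_low, mc_up = m_c / w_c, m_c * w_c
mi_low, mi_up = m_i / w_i, m_i * w_i
print(mc_low, mc_up, mi_low, mi_up)
```

The lower bound always uses the negative exponent and the upper bound the positive one, so the estimate lies between them.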

The following two figures show plots of the Fisher Matrix confidence bounds for the cumulative and instantaneous MTBFs.

Crow Bounds

The Crow confidence bounds for the cumulative MTBF and the instantaneous MTBF at the 90% confidence level and for [math]\displaystyle{ T=620\,\! }[/math] hours are:

- [math]\displaystyle{ \begin{align} {{[{{m}_{c}}(T)]}_{L}} = & \frac{1}{{{[{{\lambda }_{c}}(T)]}_{U}}} \\ = & 20.5023 \\ {{[{{m}_{c}}(T)]}_{U}} = & \frac{1}{{{[{{\lambda }_{c}}(T)]}_{L}}} \\ = & 41.6282 \end{align}\,\! }[/math]

- [math]\displaystyle{ \begin{align} {{[MTB{{F}_{i}}]}_{L}} = & MTB{{F}_{i}}\cdot {{\Pi }_{1}} \\ = & 30.7445 \\ {{[MTB{{F}_{i}}]}_{U}} = & MTB{{F}_{i}}\cdot {{\Pi }_{2}} \\ = & 84.7972 \end{align}\,\! }[/math]

The figures below show plots of the Crow confidence bounds for the cumulative and instantaneous MTBF.

Confidence bounds can also be obtained on the parameters [math]\displaystyle{ \widehat{\beta }\,\! }[/math] and [math]\displaystyle{ \widehat{\lambda }\,\! }[/math]. For Fisher Matrix confidence bounds:

- [math]\displaystyle{ \begin{align} {{\beta }_{L}} = & \hat{\beta }{{e}^{-{{z}_{\alpha /2}}\sqrt{Var(\hat{\beta })}/\hat{\beta }}} \\ = & 0.4325 \\ {{\beta }_{U}} = & \hat{\beta }{{e}^{{{z}_{\alpha /2}}\sqrt{Var(\hat{\beta })}/\hat{\beta }}} \\ = & 0.8722 \end{align}\,\! }[/math]

- and:

- [math]\displaystyle{ \begin{align} {{\lambda }_{L}} = & \hat{\lambda }{{e}^{-{{z}_{\alpha /2}}\sqrt{Var(\hat{\lambda })}/\hat{\lambda }}} \\ = & 0.1016 \\ {{\lambda }_{U}} = & \hat{\lambda }{{e}^{{{z}_{\alpha /2}}\sqrt{Var(\hat{\lambda })}/\hat{\lambda }}} \\ = & 1.7691 \end{align}\,\! }[/math]

For Crow confidence bounds:

- [math]\displaystyle{ \begin{align} {{\beta }_{L}}= & 0.4527 \\ {{\beta }_{U}}= & 0.9350 \end{align}\,\! }[/math]

- and:

- [math]\displaystyle{ \begin{align} {{\lambda }_{L}}= & 0.2870 \\ {{\lambda }_{U}}= & 0.5827 \end{align}\,\! }[/math]

Grouped Data

For analyzing grouped data, we follow the same logic described previously for the Duane model. Linearizing the [math]\displaystyle{ E[N(T)]\,\! }[/math] equation from the Crow-AMSAA (NHPP) Model section above gives:

- [math]\displaystyle{ \begin{align} \ln [E(N(T))]=\ln \lambda +\beta \ln T \end{align}\,\! }[/math]

According to Crow [9], the likelihood function for the grouped data case, (where [math]\displaystyle{ {{n}_{1}},\,\! }[/math] [math]\displaystyle{ {{n}_{2}},\,\! }[/math] [math]\displaystyle{ {{n}_{3}},\ldots ,\,\! }[/math] [math]\displaystyle{ {{n}_{k}}\,\! }[/math] failures are observed and [math]\displaystyle{ k\,\! }[/math] is the number of groups), is:

- [math]\displaystyle{ \underset{i=1}{\overset{k}{\mathop \prod }}\,\underset{}{\overset{}{\mathop{\Pr }}}\,({{N}_{i}}={{n}_{i}})=\underset{i=1}{\overset{k}{\mathop \prod }}\,\frac{{{(\lambda T_{i}^{\beta }-\lambda T_{i-1}^{\beta })}^{{{n}_{i}}}}\cdot {{e}^{-(\lambda T_{i}^{\beta }-\lambda T_{i-1}^{\beta })}}}{{{n}_{i}}!}\,\! }[/math]

And the MLE of [math]\displaystyle{ \lambda \,\! }[/math] based on this relationship is:

- [math]\displaystyle{ \widehat{\lambda }=\frac{n}{T_{k}^{\widehat{\beta }}}\,\! }[/math]

where [math]\displaystyle{ n \,\! }[/math] is the total number of failures from all the groups.

The estimate of [math]\displaystyle{ \beta \,\! }[/math] is the value [math]\displaystyle{ \widehat{\beta }\,\! }[/math] that satisfies:

- [math]\displaystyle{ \underset{i=1}{\overset{k}{\mathop \sum }}\,{{n}_{i}}\left[ \frac{T_{i}^{\widehat{\beta }}\ln {{T}_{i}}-T_{i-1}^{\widehat{\beta }}\ln {{T}_{i-1}}}{T_{i}^{\widehat{\beta }}-T_{i-1}^{\widehat{\beta }}}-\ln {{T}_{k}} \right]=0\,\! }[/math]

See the Crow-AMSAA Confidence Bounds for details on how confidence bounds for grouped data are calculated.

Grouped Data Example

Consider the grouped failure times data given in the following table. Solve for the Crow-AMSAA parameters using MLE.

| Run Number | Cumulative Failures | End Time (hours) | [math]\displaystyle{ \ln{(T_i)}\,\! }[/math] | [math]\displaystyle{ {(\ln{T_i})^2}\,\! }[/math] | [math]\displaystyle{ \ln{(\theta_i)}\,\! }[/math] | [math]\displaystyle{ \ln{(T_i)}\cdot\ln{(\theta_i)}\,\! }[/math] |

|---|---|---|---|---|---|---|

| 1 | 2 | 200 | 5.298 | 28.072 | 0.693 | 3.673 |

| 2 | 3 | 400 | 5.991 | 35.898 | 1.099 | 6.582 |

| 3 | 4 | 600 | 6.397 | 40.921 | 1.386 | 8.868 |

| 4 | 11 | 3000 | 8.006 | 64.102 | 2.398 | 19.198 |

| Sum = | | | 25.693 | 168.992 | 5.576 | 38.321 |

Solution

Using RGA, the value of [math]\displaystyle{ \widehat{\beta }\,\! }[/math], which must be solved numerically, is 0.6315. Using this value, the estimator of [math]\displaystyle{ \lambda \,\! }[/math] is:

- [math]\displaystyle{ \begin{align} \widehat{\lambda } = & \frac{11}{3,{{000}^{0.6315}}} \\ = & 0.0701 \end{align}\,\! }[/math]

Therefore, the intensity function becomes:

- [math]\displaystyle{ \widehat{\rho }(T)=0.0701\cdot 0.6315\cdot {{T}^{-0.3685}}\,\! }[/math]
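The numerical solution for [math]\displaystyle{ \widehat{\beta }\,\! }[/math] does not require RGA specifically. The sketch below solves the grouped-data equation by bisection in Python. It is not the method RGA uses internally, which is not described here. It assumes that [math]\displaystyle{ {{T}_{0}}=0\,\! }[/math] and that the term [math]\displaystyle{ T_{0}^{\beta }\ln {{T}_{0}}\,\! }[/math] is taken as zero; the function names and the search bracket are also assumptions.

```python
import math

def grouped_beta_equation(beta, end_times, failures):
    """Left-hand side of the grouped-data MLE equation for beta."""
    log_t_k = math.log(end_times[-1])
    total, prev = 0.0, 0.0
    for t_i, n_i in zip(end_times, failures):
        upper = t_i ** beta * math.log(t_i)
        lower = prev ** beta * math.log(prev) if prev > 0 else 0.0
        total += n_i * ((upper - lower) / (t_i ** beta - prev ** beta) - log_t_k)
        prev = t_i
    return total

def solve_grouped_mle(end_times, failures, lo=0.01, hi=5.0, tol=1e-10):
    """Bisection on beta, then the closed-form estimate of lambda."""
    f_lo = grouped_beta_equation(lo, end_times, failures)
    if f_lo * grouped_beta_equation(hi, end_times, failures) > 0:
        raise ValueError("no sign change in the search bracket")
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        f_mid = grouped_beta_equation(mid, end_times, failures)
        if f_lo * f_mid <= 0:
            hi = mid
        else:
            lo, f_lo = mid, f_mid
        if hi - lo < tol:
            break
    beta = 0.5 * (lo + hi)
    lam = sum(failures) / end_times[-1] ** beta
    return beta, lam

# Cumulative failures 2, 3, 4, 11 become per-interval counts 2, 1, 1, 7.
end_times = [200.0, 400.0, 600.0, 3000.0]
failures = [2, 1, 1, 7]
beta_hat, lam_hat = solve_grouped_mle(end_times, failures)
print(round(beta_hat, 4), round(lam_hat, 4))
```

Bisection is used here because it only needs a sign change over the bracket; a Newton-type method would converge faster but requires the derivative of the equation.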

Grouped Data Confidence Bounds Example

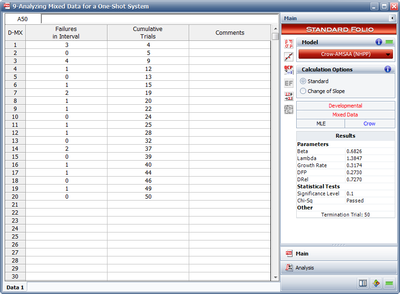

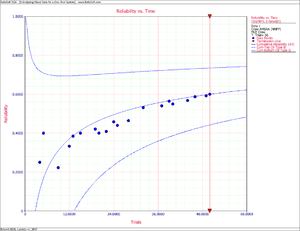

A new helicopter system is under development. System failure data has been collected on five helicopters during the final test phase. The actual failure times cannot be determined since the failures are not discovered until after the helicopters are brought into the maintenance area. However, total flying hours are known when the helicopters are brought in for service, and every 2 weeks each helicopter undergoes a thorough inspection to uncover any failures that may have occurred since the last inspection. Therefore, the cumulative total number of flight hours and the cumulative total number of failures for the 5 helicopters are known for each 2-week period. The total number of flight hours from the test phase is 500, which was accrued over a period of 12 weeks (six 2-week intervals). For each 2-week interval, the total number of flight hours and total number of failures for the 5 helicopters were recorded. The grouped data set is displayed in the following table.

| Interval | Interval (flight hours) | Failures in Interval |

|---|---|---|

| 1 | 0 - 62 | 12 |

| 2 | 62 - 100 | 6 |

| 3 | 100 - 187 | 15 |

| 4 | 187 - 210 | 3 |

| 5 | 210 - 350 | 18 |

| 6 | 350 - 500 | 16 |

- 1) Estimate the parameters of the Crow-AMSAA model using maximum likelihood estimation.

- 2) Calculate the confidence bounds on the cumulative and instantaneous MTBF using the Fisher Matrix and Crow methods.

Solution

- 1) Using RGA, the value of [math]\displaystyle{ \widehat{\beta }\,\! }[/math], which must be solved numerically, is 0.81361. Using this value, [math]\displaystyle{ \widehat{\lambda }\,\! }[/math] is:

- [math]\displaystyle{ \widehat{\lambda }=0.44585\,\! }[/math]

The grouped Fisher Matrix confidence bounds can be obtained on the parameters [math]\displaystyle{ \widehat{\beta }\,\! }[/math] and [math]\displaystyle{ \widehat{\lambda }\,\! }[/math] at the 90% confidence level by:

- [math]\displaystyle{ \begin{align} {{\beta }_{L}} = & \hat{\beta }{{e}^{{{z}_{\alpha }}\sqrt{Var(\hat{\beta })}/\hat{\beta }}} \\ = & 0.6546 \\ {{\beta }_{U}} = & \hat{\beta }{{e}^{-{{z}_{\alpha }}\sqrt{Var(\hat{\beta })}/\hat{\beta }}} \\ = & 1.0112 \end{align}\,\! }[/math]

- and:

- [math]\displaystyle{ \begin{align} {{\lambda }_{L}} = & \hat{\lambda }{{e}^{-{{z}_{\alpha }}\sqrt{Var(\hat{\lambda })}/\hat{\lambda }}} \\ = & 0.14594 \\ {{\lambda }_{U}} = & \hat{\lambda }{{e}^{{{z}_{\alpha }}\sqrt{Var(\hat{\lambda })}/\hat{\lambda }}} \\ = & 1.36207 \end{align}\,\! }[/math]
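Bounds of this exponential form are symmetric about the point estimate on a logarithmic scale, so the geometric mean of each lower/upper pair should reproduce the estimate. The short check below uses only the values quoted in this example; the helper names are hypothetical.

```python
import math

# Estimates and 90% Fisher Matrix bounds quoted in this example.
beta_hat, beta_lo, beta_hi = 0.81361, 0.6546, 1.0112
lam_hat, lam_lo, lam_hi = 0.44585, 0.14594, 1.36207


def geometric_mean(lower, upper):
    """Geometric mean of a pair of bounds."""
    return math.sqrt(lower * upper)


def log_half_width(lower, upper):
    """Recover w = z * sqrt(Var) / estimate from a pair of bounds."""
    return 0.5 * math.log(upper / lower)


print(geometric_mean(beta_lo, beta_hi), log_half_width(beta_lo, beta_hi))
print(geometric_mean(lam_lo, lam_hi), log_half_width(lam_lo, lam_hi))
```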

Crow confidence bounds can also be obtained on the parameters [math]\displaystyle{ \widehat{\beta }\,\! }[/math] and [math]\displaystyle{ \widehat{\lambda }\,\! }[/math] at the 90% confidence level, as:

- [math]\displaystyle{ \begin{align} {{\beta }_{L}} = & \hat{\beta }(1-S) \\ = & 0.63552 \\ {{\beta }_{U}} = & \hat{\beta }(1+S) \\ = & 0.99170 \end{align}\,\! }[/math]

- and:

- [math]\displaystyle{ \begin{align} {{\lambda }_{L}} = & \frac{\chi _{\tfrac{\alpha }{2},2N}^{2}}{2\cdot T_{k}^{\beta }} \\ = & 0.36197 \\ {{\lambda }_{U}} = & \frac{\chi _{1-\tfrac{\alpha }{2},2N+2}^{2}}{2\cdot T_{k}^{\beta }} \\ = & 0.53697 \end{align}\,\! }[/math]

- 2) The Fisher Matrix confidence bounds for the cumulative MTBF and the instantaneous MTBF at the 90% 2-sided confidence level and for [math]\displaystyle{ T=500\,\! }[/math] hours are:

- [math]\displaystyle{ \begin{align} {{[{{m}_{c}}(T)]}_{L}} = & {{{\hat{m}}}_{c}}(t){{e}^{-{{z}_{\alpha /2}}\sqrt{Var({{{\hat{m}}}_{c}}(t))}/{{{\hat{m}}}_{c}}(t)}} \\ = & 5.8680 \\ {{[{{m}_{c}}(T)]}_{U}} = & {{{\hat{m}}}_{c}}(t){{e}^{{{z}_{\alpha /2}}\sqrt{Var({{{\hat{m}}}_{c}}(t))}/{{{\hat{m}}}_{c}}(t)}} \\ = & 8.6947 \end{align}\,\! }[/math]

- and:

- [math]\displaystyle{ \begin{align} {{[MTB{{F}_{i}}]}_{L}} = & {{{\hat{m}}}_{i}}(t){{e}^{-{{z}_{\alpha /2}}\sqrt{Var({{{\hat{m}}}_{i}}(t))}/{{{\hat{m}}}_{i}}(t)}} \\ = & 6.6483 \\ {{[MTB{{F}_{i}}]}_{U}} = & {{{\hat{m}}}_{i}}(t){{e}^{{{z}_{\alpha /2}}\sqrt{Var({{{\hat{m}}}_{i}}(t))}/{{{\hat{m}}}_{i}}(t)}} \\ = & 11.5932 \end{align}\,\! }[/math]
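For orientation, the point estimates that these bounds surround can be computed directly. The sketch below assumes the usual power-law forms for the Crow-AMSAA model, with cumulative MTBF equal to 1/(λ·T^(β-1)) and instantaneous MTBF equal to 1/(λ·β·T^(β-1)); the function names are hypothetical.

```python
# Parameter estimates quoted earlier in this example.
beta_hat = 0.81361
lam_hat = 0.44585
T = 500.0  # total test time in flight hours


def cumulative_mtbf(t, lam, beta):
    """Cumulative MTBF under the assumed power-law model."""
    return 1.0 / (lam * t ** (beta - 1.0))


def instantaneous_mtbf(t, lam, beta):
    """Instantaneous MTBF under the assumed power-law model."""
    return 1.0 / (lam * beta * t ** (beta - 1.0))


m_c = cumulative_mtbf(T, lam_hat, beta_hat)
m_i = instantaneous_mtbf(T, lam_hat, beta_hat)
print(round(m_c, 3), round(m_i, 3))
```

The cumulative value should be close to 500 hours divided by 70 failures, and both estimates should fall inside their respective bounds.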

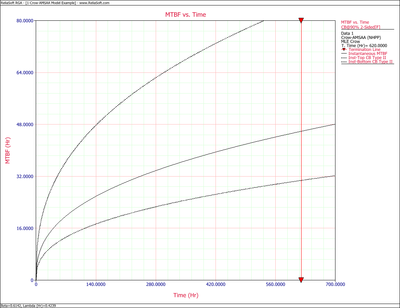

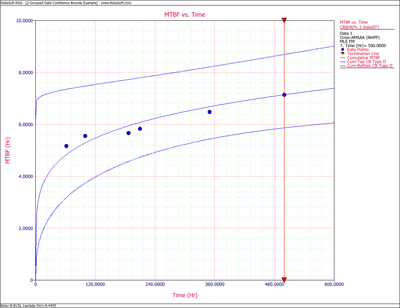

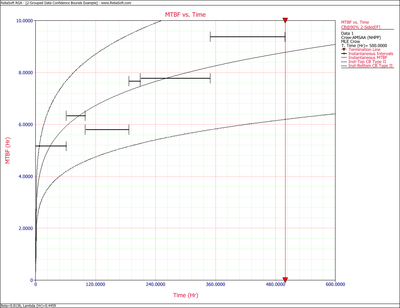

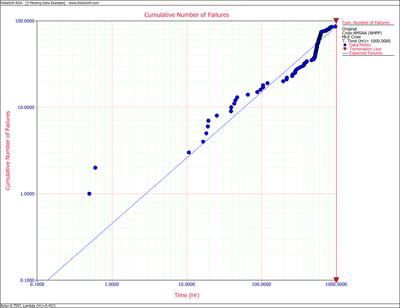

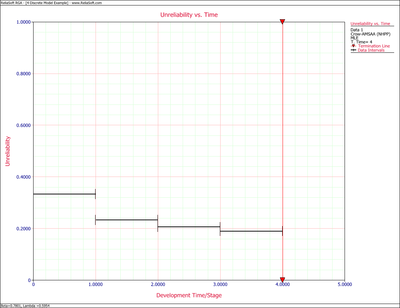

The next two figures show plots of the Fisher Matrix confidence bounds for the cumulative and instantaneous MTBF.

The Crow confidence bounds for the cumulative and instantaneous MTBF at the 90% 2-sided confidence level and for [math]\displaystyle{ T = 500\,\! }[/math] hours are:

- [math]\displaystyle{ \begin{align} {{[{{m}_{c}}(T)]}_{L}} = & \frac{1}{C{{(t)}_{U}}} \\ = & 5.85449 \\ {{[{{m}_{c}}(T)]}_{U}} = & \frac{1}{C{{(t)}_{L}}} \\ = & 8.79822 \end{align}\,\! }[/math]

- and:

- [math]\displaystyle{ \begin{align} {{[MTB{{F}_{i}}]}_{L}} = & {{\widehat{m}}_{i}}(1-W) \\ = & 6.19623 \\ {{[MTB{{F}_{i}}]}_{U}} = & {{\widehat{m}}_{i}}(1+W) \\ = & 11.36223 \end{align}\,\! }[/math]

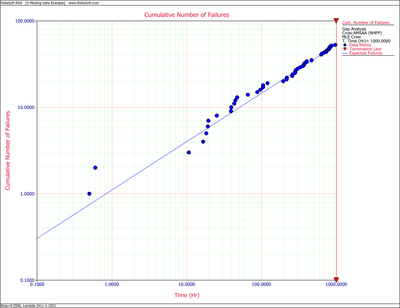

The next two figures show plots of the Crow confidence bounds for the cumulative and instantaneous MTBF.

Goodness-of-Fit Tests

The term parameter estimation refers to the process of using sample data (in reliability engineering, usually times-to-failure or success data) to estimate the parameters of the selected distribution. Several parameter estimation methods are available. This section presents an overview of the available methods used in life data analysis. More specifically, we start with the relatively simple method of Probability Plotting and continue with the more sophisticated methods of Rank Regression (or Least Squares), Maximum Likelihood Estimation and Bayesian Estimation Methods.

Probability Plotting

The least mathematically intensive method for parameter estimation is the method of probability plotting. As the term implies, probability plotting involves a physical plot of the data on specially constructed probability plotting paper. This method is easily implemented by hand, given that one can obtain the appropriate probability plotting paper.

The method of probability plotting takes the cdf of the distribution and attempts to linearize it by employing a specially constructed paper. The following sections illustrate the steps in this method using the 2-parameter Weibull distribution as an example. This includes:

- Linearize the unreliability function

- Construct the probability plotting paper

- Determine the X and Y positions of the plot points

The plot can then be used to read any particular time or reliability/unreliability value of interest.

Linearizing the Unreliability Function

In the case of the 2-parameter Weibull, the cdf (also the unreliability [math]\displaystyle{ Q(t)\,\! }[/math]) is given by:

- [math]\displaystyle{ F(t)=Q(t)=1-{e^{-\left(\tfrac{t}{\eta}\right)^{\beta}}}\,\! }[/math]

This function can then be linearized (i.e., put in the common form [math]\displaystyle{ y = mx + b\,\! }[/math]) as follows:

- [math]\displaystyle{ \begin{align} Q(t)= & 1-{e^{-\left(\tfrac{t}{\eta}\right)^{\beta}}} \\ \ln (1-Q(t))= & \ln \left[ {e^{-\left(\tfrac{t}{\eta}\right)^{\beta}}} \right] \\ \ln (1-Q(t))=& -\left(\tfrac{t}{\eta}\right)^{\beta} \\ \ln ( -\ln (1-Q(t)))= & \beta \left(\ln \left( \frac{t}{\eta }\right)\right) \\ \ln \left( \ln \left( \frac{1}{1-Q(t)}\right) \right) = & \beta\ln{ t} -\beta\ln{\eta} \\ \end{align}\,\! }[/math]

Then by setting:

- [math]\displaystyle{ y=\ln \left( \ln \left( \frac{1}{1-Q(t)} \right) \right)\,\! }[/math]

and:

- [math]\displaystyle{ x=\ln \left( t \right)\,\! }[/math]

the equation can then be rewritten as:

- [math]\displaystyle{ y=\beta x-\beta \ln \left( \eta \right)\,\! }[/math]

which is now a linear equation with a slope of:

- [math]\displaystyle{ \begin{align} m = \beta \end{align}\,\! }[/math]

and an intercept of:

- [math]\displaystyle{ b=-\beta \cdot \ln(\eta)\,\! }[/math]
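The linearization can be checked numerically. The sketch below uses hypothetical parameter values (β = 1.5, η = 1000) chosen only for illustration; it transforms a few points on the Weibull cdf and recovers the slope and intercept from two of them.

```python
import math


def weibull_unreliability(t, beta, eta):
    """2-parameter Weibull cdf, Q(t)."""
    return 1.0 - math.exp(-((t / eta) ** beta))


def to_plot_coords(t, q):
    """Map (t, Q) to the linearized coordinates (x, y)."""
    return math.log(t), math.log(math.log(1.0 / (1.0 - q)))


beta, eta = 1.5, 1000.0  # hypothetical values for illustration only
times = [100.0, 400.0, 1000.0, 2500.0]
points = [to_plot_coords(t, weibull_unreliability(t, beta, eta)) for t in times]

# Any two transformed points determine the line y = m*x + b.
(x0, y0), (x1, y1) = points[0], points[-1]
slope = (y1 - y0) / (x1 - x0)
intercept = y0 - slope * x0
print(slope, intercept, -beta * math.log(eta))
```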

Constructing the Paper

The next task is to construct the Weibull probability plotting paper with the appropriate y and x axes. The x-axis transformation is simply logarithmic. The y-axis is a bit more complex, requiring a double log reciprocal transformation, or:

- [math]\displaystyle{ y=\ln \left(\ln \left( \frac{1}{1-Q(t)} \right) \right)\,\! }[/math]

where [math]\displaystyle{ Q(t)\,\! }[/math] is the unreliability.

Such papers have been created by different vendors and are called probability plotting papers. ReliaSoft's reliability engineering resource website at www.weibull.com has different plotting papers available for download.

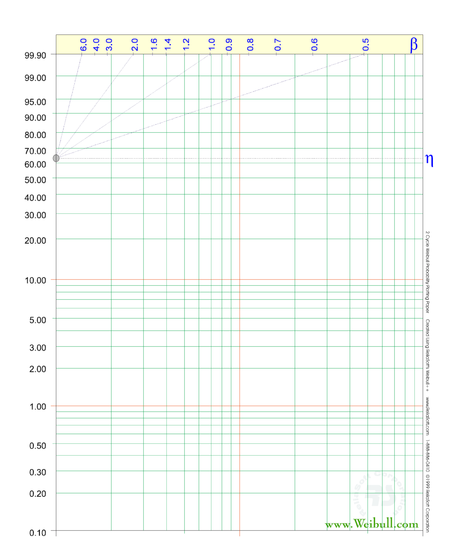

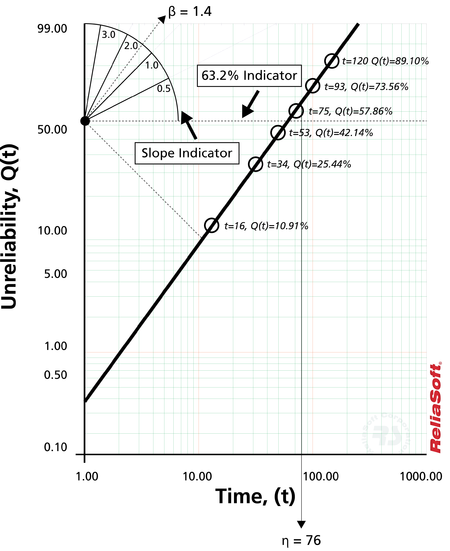

To illustrate, consider the following probability plot on a slightly different type of Weibull probability paper.

This paper is constructed based on the mentioned y and x transformations, where the y-axis represents unreliability and the x-axis represents time. Both of these values must be known for each time-to-failure point we want to plot.

Then, given the [math]\displaystyle{ y\,\! }[/math] and [math]\displaystyle{ x\,\! }[/math] value for each point, the points can easily be put on the plot. Once the points have been placed on the plot, the best possible straight line is drawn through these points. Once the line has been drawn, the slope of the line can be obtained (some probability papers include a slope indicator to simplify this calculation). This is the parameter [math]\displaystyle{ \beta\,\! }[/math], which is the value of the slope. To determine the scale parameter, [math]\displaystyle{ \eta\,\! }[/math] (also called the characteristic life), one reads the time from the x-axis corresponding to [math]\displaystyle{ Q(t)=63.2%\,\! }[/math].

Note that at:

- [math]\displaystyle{ \begin{align} Q(t=\eta)= & 1-{{e}^{-{{\left( \tfrac{t}{\eta } \right)}^{\beta }}}} \\ = & 1-{{e}^{-1}} \\ = & 0.632 \\ = & 63.2% \end{align}\,\! }[/math]

Thus, if we enter the y axis at [math]\displaystyle{ Q(t)=63.2%\,\! }[/math], the corresponding value of [math]\displaystyle{ t\,\! }[/math] will be equal to [math]\displaystyle{ \eta\,\! }[/math]. Thus, using this simple methodology, the parameters of the Weibull distribution can be estimated.

Determining the X and Y Position of the Plot Points

The points on the plot represent our data or, more specifically, our times-to-failure data. If, for example, we tested four units that failed at 10, 20, 30 and 40 hours, then we would use these times as our x values or time values.

Determining the appropriate y plotting positions, or the unreliability values, is a little more complex. To determine the y plotting positions, we must first determine a value indicating the corresponding unreliability for that failure. In other words, we need to obtain the cumulative percent failed for each time-to-failure. For example, the cumulative percent failed by 10 hours may be 25%, by 20 hours 50%, and so forth. This is a simple method illustrating the idea. The problem with this simple method is the fact that the 100% point is not defined on most probability plots; thus, an alternative and more robust approach must be used. The most widely used method of determining this value is the method of obtaining the median rank for each failure, as discussed next.

Median Ranks

The Median Ranks method is used to obtain an estimate of the unreliability for each failure. The median rank is the value that the true probability of failure, [math]\displaystyle{ Q({{T}_{j}})\,\! }[/math], should have at the [math]\displaystyle{ {{j}^{th}}\,\! }[/math] failure out of a sample of [math]\displaystyle{ N\,\! }[/math] units at the 50% confidence level.

The rank can be found for any percentage point, [math]\displaystyle{ P\,\! }[/math], greater than zero and less than one, by solving the cumulative binomial equation for [math]\displaystyle{ Z\,\! }[/math]. This represents the rank, or unreliability estimate, for the [math]\displaystyle{ {{j}^{th}}\,\! }[/math] failure in the following equation for the cumulative binomial:

- [math]\displaystyle{ P=\underset{k=j}{\overset{N}{\mathop \sum }}\,\left( \begin{matrix} N \\ k \\ \end{matrix} \right){{Z}^{k}}{{\left( 1-Z \right)}^{N-k}}\,\! }[/math]

where [math]\displaystyle{ N\,\! }[/math] is the sample size and [math]\displaystyle{ j\,\! }[/math] the order number.

The median rank is obtained by solving this equation for [math]\displaystyle{ Z\,\! }[/math] at [math]\displaystyle{ P = 0.50\,\! }[/math]:

- [math]\displaystyle{ 0.50=\underset{k=j}{\overset{N}{\mathop \sum }}\,\left( \begin{matrix} N \\ k \\ \end{matrix} \right){{Z}^{k}}{{\left( 1-Z \right)}^{N-k}}\,\! }[/math]

For example, if [math]\displaystyle{ N=4\,\! }[/math] and we have four failures, we would solve the median rank equation for the value of [math]\displaystyle{ Z\,\! }[/math] four times, once for each failure with [math]\displaystyle{ j= 1, 2, 3 \text{ and }4\,\! }[/math]. This result can then be used as the unreliability estimate for each failure or the [math]\displaystyle{ y\,\! }[/math] plotting position. (See also The Weibull Distribution for a step-by-step example of this method.) The solution of the cumulative binomial equation for [math]\displaystyle{ Z\,\! }[/math] requires the use of numerical methods.
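One possible numerical approach is bisection, since the cumulative binomial sum increases monotonically with Z. The sketch below is an illustration with hypothetical function names; it solves for the ranks at P = 0.50 with N = 4 and integer order numbers only.

```python
from math import comb


def cumulative_binomial(z, j, n):
    """Sum over k = j..n of C(n, k) * z**k * (1 - z)**(n - k)."""
    return sum(comb(n, k) * z ** k * (1.0 - z) ** (n - k) for k in range(j, n + 1))


def rank(j, n, p=0.50, tol=1e-12):
    """Solve cumulative_binomial(Z, j, n) = p for Z by bisection on [0, 1]."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if cumulative_binomial(mid, j, n) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


median_ranks = [rank(j, 4) for j in range(1, 5)]
print([round(100 * mr, 2) for mr in median_ranks])
```

For the first and last order numbers the equation has a closed form (1 - 0.5^(1/N) and 0.5^(1/N)), which gives a convenient check on the solver.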

Beta and F Distributions Approach

A more straightforward and easier method of estimating median ranks is by applying two transformations to the cumulative binomial equation, first to the beta distribution and then to the F distribution, resulting in [12, 13]:

- [math]\displaystyle{ \begin{array}{*{35}{l}} MR & = & \tfrac{1}{1+\tfrac{N-j+1}{j}{{F}_{0.50;m;n}}} \\ m & = & 2(N-j+1) \\ n & = & 2j \\ \end{array}\,\! }[/math]

where [math]\displaystyle{ {{F}_{0.50;m;n}}\,\! }[/math] denotes the [math]\displaystyle{ F\,\! }[/math] distribution at the 0.50 point, with [math]\displaystyle{ m\,\! }[/math] and [math]\displaystyle{ n\,\! }[/math] degrees of freedom, for failure [math]\displaystyle{ j\,\! }[/math] out of [math]\displaystyle{ N\,\! }[/math] units.

Benard's Approximation for Median Ranks

Another quick, and less accurate, approximation of the median ranks is also given by:

- [math]\displaystyle{ MR = \frac{{j - 0.3}}{{N + 0.4}}\,\! }[/math]

This approximation of the median ranks is also known as Benard's approximation.
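As a quick illustration, Benard's approximation can be compared with the exact median ranks of the first and last failures, which have the closed forms 1 - 0.5^(1/N) and 0.5^(1/N). The function name below is hypothetical.

```python
def benard(j, n):
    """Benard's approximation to the median rank of failure j out of n."""
    return (j - 0.3) / (n + 0.4)


n = 4
approx = [benard(j, n) for j in range(1, n + 1)]

# Exact median ranks are available in closed form for j = 1 and j = n.
exact_first = 1 - 0.5 ** (1 / n)
exact_last = 0.5 ** (1 / n)
print(approx, exact_first, exact_last)
```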

Kaplan-Meier

The Kaplan-Meier estimator (also known as the product limit estimator) is used as an alternative to the median ranks method for calculating the estimates of the unreliability for probability plotting purposes. The equation of the estimator is given by:

- [math]\displaystyle{ \widehat{F}({{t}_{i}})=1-\underset{j=1}{\overset{i}{\mathop \prod }}\,\frac{{{n}_{j}}-{{r}_{j}}}{{{n}_{j}}},\text{ }i=1,...,m\,\! }[/math]

where:

- [math]\displaystyle{ \begin{align} m = & {\text{total number of data points}} \\ n = & {\text{the total number of units}} \\ {n_i} = & n - \sum_{j = 0}^{i - 1}{s_j} - \sum_{j = 0}^{i - 1}{r_j}, \text{i = 1,...,m }\\ {r_j} = & {\text{ number of failures in the }}{j^{th}}{\text{ data group, and}} \\ {s_j} = & {\text{number of surviving units in the }}{j^{th}}{\text{ data group}} \\ \end{align} \,\! }[/math]
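The estimator can be sketched as a running product over the data groups. The example below is illustrative only: the function name is hypothetical, and the input is a made-up sequence of five single-unit groups alternating failure and suspension.

```python
def kaplan_meier_unreliability(groups, n):
    """Return the unreliability estimate after each data group.

    groups: list of (r_j, s_j) pairs in time order, where r_j is the number
    of failures and s_j the number of suspensions in group j.
    n: total number of units on test.
    """
    at_risk = n          # n_j, the units operating just before group j
    survival = 1.0       # running product of (n_j - r_j) / n_j
    estimates = []
    for r, s in groups:
        survival *= (at_risk - r) / at_risk
        estimates.append(1.0 - survival)
        at_risk -= r + s
    return estimates


# Hypothetical data: failure, suspension, failure, suspension, failure.
groups = [(1, 0), (0, 1), (1, 0), (0, 1), (1, 0)]
f_hat = kaplan_meier_unreliability(groups, n=5)
print(f_hat)
```

Groups that contain only suspensions leave the estimate unchanged but reduce the number of units at risk for later groups.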

Probability Plotting Example

This same methodology can be applied to other distributions with cdf equations that can be linearized. Different probability papers exist for each distribution, because different distributions have different cdf equations. ReliaSoft's software tools automatically create these plots for you. Special scales on these plots allow you to derive the parameter estimates directly from the plots, similar to the way [math]\displaystyle{ \beta\,\! }[/math] and [math]\displaystyle{ \eta\,\! }[/math] were obtained from the Weibull probability plot. The following example demonstrates the method again, this time using the 1-parameter exponential distribution.

Let's assume six identical units are reliability tested at the same application and operation stress levels. All of these units fail during the test after operating for the following times (in hours): 96, 257, 498, 763, 1051 and 1744.

The steps for using the probability plotting method to determine the parameters of the exponential pdf representing the data are as follows:

Rank the times-to-failure in ascending order as shown next.

Obtain their median rank plotting positions. Median rank positions are used instead of other ranking methods because median ranks are at a specific confidence level (50%).

The times-to-failure, with their corresponding median ranks, are shown next:

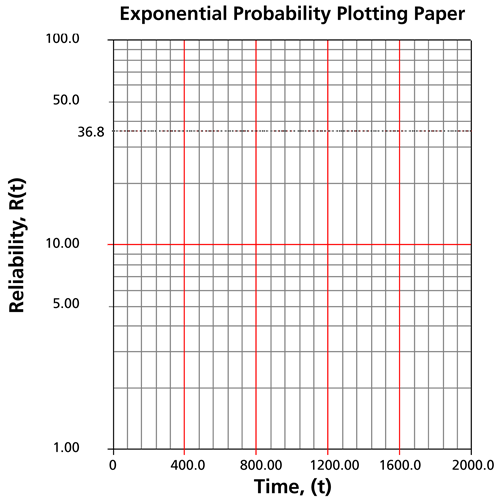

On an exponential probability paper, plot the times on the x-axis and their corresponding rank value on the y-axis. The next figure displays an example of an exponential probability paper. The paper is simply a log-linear paper.

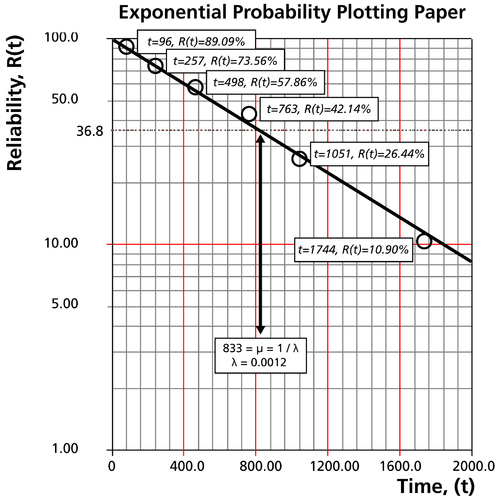

Draw the best possible straight line that goes through the [math]\displaystyle{ t=0\,\! }[/math] and [math]\displaystyle{ R(t)=100%\,\! }[/math] point and through the plotted points (as shown in the plot below).

At the [math]\displaystyle{ Q(t)=63.2%\,\! }[/math] or [math]\displaystyle{ R(t)=36.8%\,\! }[/math] ordinate point, draw a straight horizontal line until this line intersects the fitted straight line. Draw a vertical line through this intersection until it crosses the abscissa. The value at the intersection of the abscissa is the estimate of the mean. For this case, [math]\displaystyle{ \widehat{\mu }=833\,\! }[/math] hours, which means that [math]\displaystyle{ \lambda =\tfrac{1}{\mu }=0.0012\,\! }[/math] failures per hour. (This is always at 63.2% because [math]\displaystyle{ Q(T=\mu )=1-{{e}^{-\tfrac{\mu }{\mu }}}=1-{{e}^{-1}}=0.632=63.2%\,\! }[/math].)

Now any reliability value for any mission time [math]\displaystyle{ t\,\! }[/math] can be obtained. For example, the reliability for a mission of 15 hours, or any other time, can now be obtained either from the plot or analytically.

To obtain the value from the plot, draw a vertical line from the abscissa, at [math]\displaystyle{ t=15\,\! }[/math] hours, to the fitted line. Draw a horizontal line from this intersection to the ordinate and read [math]\displaystyle{ R(t)\,\! }[/math]. In this case, [math]\displaystyle{ R(t=15)=98.15%\,\! }[/math]. This can also be obtained analytically, from the exponential reliability function.
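The analytical route can be sketched as follows, using the mean estimated from the plot. The function name is hypothetical, and a value computed this way may differ slightly from one read off a hand-drawn line.

```python
import math

mu_hat = 833.0            # mean life in hours, as read from the plot
lam_hat = 1.0 / mu_hat    # failure rate in failures per hour


def reliability(t, mu):
    """Exponential reliability function R(t) = exp(-t / mu)."""
    return math.exp(-t / mu)


r_15 = reliability(15.0, mu_hat)
q_at_mu = 1.0 - reliability(mu_hat, mu_hat)  # unreliability at t = mu
print(round(lam_hat, 4), round(r_15, 4), round(q_at_mu, 3))
```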

Comments on the Probability Plotting Method

Besides the most obvious drawback to probability plotting, which is the amount of effort required, manual probability plotting is not always consistent in the results. Two people plotting a straight line through a set of points will not always draw this line the same way, and thus will come up with slightly different results. This method was used primarily before the widespread use of computers that could easily perform the calculations for more complicated parameter estimation methods, such as the least squares and maximum likelihood methods.

Least Squares (Rank Regression)

Using the idea of probability plotting, regression analysis mathematically fits the best straight line to a set of points, in an attempt to estimate the parameters. Essentially, this is a mathematically based version of the probability plotting method discussed previously.

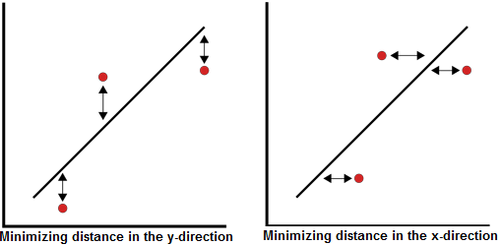

The method of linear least squares is used for all regression analysis performed by Weibull++, except for the cases of the 3-parameter Weibull, mixed Weibull, gamma and generalized gamma distributions, where a non-linear regression technique is employed. The terms linear regression and least squares are used synonymously in this reference. In Weibull++, the term rank regression is used instead of least squares, or linear regression, because the regression is performed on the rank values, more specifically, the median rank values (represented on the y-axis). The method of least squares requires that a straight line be fitted to a set of data points, such that the sum of the squares of the distance of the points to the fitted line is minimized. This minimization can be performed in either the vertical or horizontal direction. If the regression is on [math]\displaystyle{ X\,\! }[/math], then the line is fitted so that the horizontal deviations from the points to the line are minimized. If the regression is on Y, then this means that the distance of the vertical deviations from the points to the line is minimized. This is illustrated in the following figure.

Rank Regression on Y

Assume that a set of data pairs [math]\displaystyle{ ({{x}_{1}},{{y}_{1}})\,\! }[/math], [math]\displaystyle{ ({{x}_{2}},{{y}_{2}})\,\! }[/math],..., [math]\displaystyle{ ({{x}_{N}},{{y}_{N}})\,\! }[/math] were obtained and plotted, and that the [math]\displaystyle{ x\,\! }[/math]-values are known exactly. Then, according to the least squares principle, which minimizes the vertical distance between the data points and the straight line fitted to the data, the best fitting straight line to these data is the straight line [math]\displaystyle{ y=\hat{a}+\hat{b}x\,\! }[/math] (where the recently introduced ([math]\displaystyle{ \hat{ }\,\! }[/math]) symbol indicates that this value is an estimate) such that:

- [math]\displaystyle{ \sum\limits_{i=1}^{N}{{{\left( \hat{a}+\hat{b}{{x}_{i}}-{{y}_{i}} \right)}^{2}}=\min \sum\limits_{i=1}^{N}{{{\left( a+b{{x}_{i}}-{{y}_{i}} \right)}^{2}}}}\,\! }[/math]

and where [math]\displaystyle{ \hat{a}\,\! }[/math] and [math]\displaystyle{ \hat b\,\! }[/math] are the least squares estimates of [math]\displaystyle{ a\,\! }[/math] and [math]\displaystyle{ b\,\! }[/math], and [math]\displaystyle{ N\,\! }[/math] is the number of data points. These equations are minimized by estimates of [math]\displaystyle{ \widehat a\,\! }[/math] and [math]\displaystyle{ \widehat{b}\,\! }[/math] such that:

- [math]\displaystyle{ \hat{a}=\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}}}{N}-\hat{b}\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}}}{N}=\bar{y}-\hat{b}\bar{x}\,\! }[/math]

and:

- [math]\displaystyle{ \hat{b}=\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}}{{y}_{i}}-\tfrac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}}\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}}}{N}}{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,x_{i}^{2}-\tfrac{{{\left( \underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}} \right)}^{2}}}{N}}\,\! }[/math]
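These two closed-form expressions translate directly into code. The sketch below uses a hypothetical function name and a small made-up data set; it is meant only to show the arithmetic.

```python
def rank_regression_on_y(xs, ys):
    """Least squares fit of y = a + b*x, minimizing vertical deviations."""
    n = len(xs)
    sum_x, sum_y = sum(xs), sum(ys)
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_xx = sum(x * x for x in xs)
    b = (sum_xy - sum_x * sum_y / n) / (sum_xx - sum_x ** 2 / n)
    a = sum_y / n - b * sum_x / n
    return a, b


# Made-up points lying close to a straight line.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]
a_hat, b_hat = rank_regression_on_y(xs, ys)
print(a_hat, b_hat)
```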

Rank Regression on X

Assume that a set of data pairs [math]\displaystyle{ ({{x}_{1}},{{y}_{1}})\,\! }[/math], [math]\displaystyle{ ({{x}_{2}},{{y}_{2}})\,\! }[/math],..., [math]\displaystyle{ ({{x}_{N}},{{y}_{N}})\,\! }[/math] were obtained and plotted, and that the y-values are known exactly. The same least squares principle is applied, but this time, minimizing the horizontal distance between the data points and the straight line fitted to the data. The best fitting straight line to these data is the straight line [math]\displaystyle{ x=\widehat{a}+\widehat{b}y\,\! }[/math] such that:

- [math]\displaystyle{ \underset{i=1}{\overset{N}{\mathop \sum }}\,{{(\widehat{a}+\widehat{b}{{y}_{i}}-{{x}_{i}})}^{2}}=min(a,b)\underset{i=1}{\overset{N}{\mathop \sum }}\,{{(a+b{{y}_{i}}-{{x}_{i}})}^{2}}\,\! }[/math]

Again, [math]\displaystyle{ \widehat{a}\,\! }[/math] and [math]\displaystyle{ \widehat b\,\! }[/math] are the least squares estimates of [math]\displaystyle{ a\,\! }[/math] and [math]\displaystyle{ b\,\! }[/math], and [math]\displaystyle{ N\,\! }[/math] is the number of data points. These equations are minimized by estimates of [math]\displaystyle{ \widehat a\,\! }[/math] and [math]\displaystyle{ \widehat{b}\,\! }[/math] such that:

- [math]\displaystyle{ \hat{a}=\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}}}{N}-\hat{b}\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}}}{N}=\bar{x}-\hat{b}\bar{y}\,\! }[/math]

- and:

- [math]\displaystyle{ \widehat{b}=\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}}{{y}_{i}}-\tfrac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}}\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}}}{N}}{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,y_{i}^{2}-\tfrac{{{\left( \underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}} \right)}^{2}}}{N}}\,\! }[/math]
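The regression on X differs only in which variable is treated as the response. The sketch below, with a hypothetical function name and made-up data, fits x = a + b·y and then expresses the same line in terms of y for comparison.

```python
def rank_regression_on_x(xs, ys):
    """Least squares fit of x = a + b*y, minimizing horizontal deviations."""
    n = len(xs)
    sum_x, sum_y = sum(xs), sum(ys)
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_yy = sum(y * y for y in ys)
    b = (sum_xy - sum_x * sum_y / n) / (sum_yy - sum_y ** 2 / n)
    a = sum_x / n - b * sum_y / n
    return a, b


# Made-up points lying close to a straight line.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]
a_hat, b_hat = rank_regression_on_x(xs, ys)

# The same line written as y = intercept + slope * x.
slope_in_y = 1.0 / b_hat
intercept_in_y = -a_hat / b_hat
print(a_hat, b_hat, slope_in_y, intercept_in_y)
```

Unless the points are perfectly collinear, the slope obtained this way generally differs from the one given by regression on Y.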

The corresponding relations for determining the parameters for specific distributions (i.e., Weibull, exponential, etc.), are presented in the chapters covering that distribution.

Correlation Coefficient

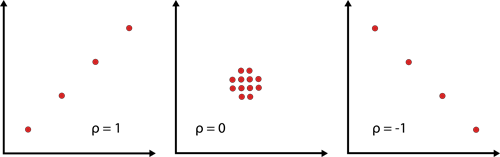

The correlation coefficient is a measure of how well the linear regression model fits the data and is usually denoted by [math]\displaystyle{ \rho\,\! }[/math]. In the case of life data analysis, it is a measure for the strength of the linear relation (correlation) between the median ranks and the data. The population correlation coefficient is defined as follows:

- [math]\displaystyle{ \rho =\frac{{{\sigma }_{xy}}}{{{\sigma }_{x}}{{\sigma }_{y}}}\,\! }[/math]

where [math]\displaystyle{ {{\sigma}_{xy}} = \,\! }[/math] covariance of [math]\displaystyle{ x\,\! }[/math] and [math]\displaystyle{ y\,\! }[/math], [math]\displaystyle{ {{\sigma}_{x}} = \,\! }[/math] standard deviation of [math]\displaystyle{ x\,\! }[/math], and [math]\displaystyle{ {{\sigma}_{y}} = \,\! }[/math] standard deviation of [math]\displaystyle{ y\,\! }[/math].

The estimator of [math]\displaystyle{ \rho\,\! }[/math] is the sample correlation coefficient, [math]\displaystyle{ \hat{\rho }\,\! }[/math], given by:

- [math]\displaystyle{ \hat{\rho }=\frac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}}{{y}_{i}}-\tfrac{\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}}\underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}}}{N}}{\sqrt{\left( \underset{i=1}{\overset{N}{\mathop{\sum }}}\,x_{i}^{2}-\tfrac{{{\left( \underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{x}_{i}} \right)}^{2}}}{N} \right)\left( \underset{i=1}{\overset{N}{\mathop{\sum }}}\,y_{i}^{2}-\tfrac{{{\left( \underset{i=1}{\overset{N}{\mathop{\sum }}}\,{{y}_{i}} \right)}^{2}}}{N} \right)}}\,\! }[/math]

The range of [math]\displaystyle{ \hat \rho \,\! }[/math] is [math]\displaystyle{ -1\le \hat{\rho }\le 1\,\! }[/math].

The closer the value is to [math]\displaystyle{ \pm 1\,\! }[/math], the better the linear fit. Note that +1 indicates a perfect fit (the paired values ([math]\displaystyle{ {{x}_{i}},{{y}_{i}}\,\! }[/math]) lie on a straight line) with a positive slope, while -1 indicates a perfect fit with a negative slope. A correlation coefficient value of zero would indicate that the data are randomly scattered and have no pattern or correlation in relation to the regression line model.
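The sample correlation coefficient can be computed from the same sums used in the regression formulas. The sketch below uses a hypothetical function name and made-up data.

```python
import math


def sample_correlation(xs, ys):
    """Sample correlation coefficient of the paired values (x_i, y_i)."""
    n = len(xs)
    sum_x, sum_y = sum(xs), sum(ys)
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_xx = sum(x * x for x in xs)
    sum_yy = sum(y * y for y in ys)
    numerator = sum_xy - sum_x * sum_y / n
    denominator = math.sqrt((sum_xx - sum_x ** 2 / n) * (sum_yy - sum_y ** 2 / n))
    return numerator / denominator


xs = [1.0, 2.0, 3.0, 4.0]
rho_noisy = sample_correlation(xs, [2.1, 3.9, 6.2, 7.8])   # nearly linear
rho_up = sample_correlation(xs, [2.0, 4.0, 6.0, 8.0])      # exact, positive slope
rho_down = sample_correlation(xs, [8.0, 6.0, 4.0, 2.0])    # exact, negative slope
print(rho_noisy, rho_up, rho_down)
```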

Comments on the Least Squares Method

The least squares estimation method is quite good for functions that can be linearized. For these distributions, the calculations are relatively easy and straightforward, having closed-form solutions that can readily yield an answer without having to resort to numerical techniques or tables. Furthermore, this technique provides a good measure of the goodness-of-fit of the chosen distribution in the correlation coefficient. Least squares is generally best used with data sets containing complete data, that is, data consisting only of single times-to-failure with no censored or interval data. (See Life Data Classification for information about the different data types, including complete, left censored, right censored (or suspended) and interval data.)


Rank Methods for Censored Data

All available data should be considered in the analysis of times-to-failure data. This includes the case when a particular unit in a sample has been removed from the test prior to failure. An item, or unit, which is removed from a reliability test prior to failure, or a unit which is in the field and is still operating at the time the reliability of these units is to be determined, is called a suspended item or right censored observation or right censored data point. Suspended items analysis would also be considered when:

- We need to make an analysis of the available results before test completion.

- The failure modes which are occurring are different than those anticipated and such units are withdrawn from the test.

- We need to analyze a single mode and the actual data set comprises multiple modes.

- A warranty analysis is to be made of all units in the field (non-failed and failed units). The non-failed units are considered to be suspended items (or right censored).

This section describes the rank methods that are used in both probability plotting and least squares (rank regression) to handle censored data. This includes:

- The rank adjustment method for right censored (suspension) data.

- ReliaSoft's alternative ranking method for censored data including left censored, right censored, and interval data.

Rank Adjustment Method for Right Censored Data

When using the probability plotting or least squares (rank regression) method for data sets where some of the units did not fail, or were suspended, we need to adjust their probability of failure, or unreliability. As discussed before, estimates of the unreliability for complete data are obtained using the median ranks approach. The following methodology illustrates how adjusted median ranks are computed to account for right censored data. To better illustrate the methodology, consider the following example in Kececioglu [20] where five items are tested resulting in three failures and two suspensions.

| Item Number (Position) | Failure (F) or Suspension (S) | Life of Item (hr) |
|---|---|---|
| 1 | [math]\displaystyle{ {{F}_{1}}\,\! }[/math] | 5,100 |
| 2 | [math]\displaystyle{ {{S}_{1}}\,\! }[/math] | 9,500 |
| 3 | [math]\displaystyle{ {{F}_{2}}\,\! }[/math] | 15,000 |
| 4 | [math]\displaystyle{ {{S}_{2}}\,\! }[/math] | 22,000 |
| 5 | [math]\displaystyle{ {{F}_{3}}\,\! }[/math] | 40,000 |

The methodology for plotting suspended items involves adjusting the rank positions and plotting the data based on new positions, determined by the location of the suspensions. If we consider these five units, the following methodology would be used: The first item must be the first failure; hence, it is assigned failure order number [math]\displaystyle{ j = 1\,\! }[/math]. The actual failure order number (or position) of the second failure, [math]\displaystyle{ {{F}_{2}}\,\! }[/math] is in doubt. It could either be in position 2 or in position 3. Had [math]\displaystyle{ {{S}_{1}}\,\! }[/math] not been withdrawn from the test at 9,500 hours, it could have operated successfully past 15,000 hours, thus placing [math]\displaystyle{ {{F}_{2}}\,\! }[/math] in position 2. Alternatively, [math]\displaystyle{ {{S}_{1}}\,\! }[/math] could also have failed before 15,000 hours, thus placing [math]\displaystyle{ {{F}_{2}}\,\! }[/math] in position 3. In this case, the failure order number for [math]\displaystyle{ {{F}_{2}}\,\! }[/math] will be some number between 2 and 3. To determine this number, consider the following:

We can find the number of ways the second failure can occur in either order number 2 (position 2) or order number 3 (position 3). The possible ways are listed next.

[math]\displaystyle{ {{F}_{2}}\,\! }[/math] in Position 2 (ways 1 to 6) OR [math]\displaystyle{ {{F}_{2}}\,\! }[/math] in Position 3 (ways 1 and 2). Each column lists one possible order, from first to last:

| Position 2: 1 | Position 2: 2 | Position 2: 3 | Position 2: 4 | Position 2: 5 | Position 2: 6 | Position 3: 1 | Position 3: 2 |
|---|---|---|---|---|---|---|---|
| [math]\displaystyle{ {{F}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{1}}\,\! }[/math] |
| [math]\displaystyle{ {{F}_{2}}\,\! }[/math] | [math]\displaystyle{ {{F}_{2}}\,\! }[/math] | [math]\displaystyle{ {{F}_{2}}\,\! }[/math] | [math]\displaystyle{ {{F}_{2}}\,\! }[/math] | [math]\displaystyle{ {{F}_{2}}\,\! }[/math] | [math]\displaystyle{ {{F}_{2}}\,\! }[/math] | [math]\displaystyle{ {{S}_{1}}\,\! }[/math] | [math]\displaystyle{ {{S}_{1}}\,\! }[/math] |
| [math]\displaystyle{ {{S}_{1}}\,\! }[/math] | [math]\displaystyle{ {{S}_{2}}\,\! }[/math] | [math]\displaystyle{ {{F}_{3}}\,\! }[/math] | [math]\displaystyle{ {{S}_{1}}\,\! }[/math] | [math]\displaystyle{ {{S}_{2}}\,\! }[/math] | [math]\displaystyle{ {{F}_{3}}\,\! }[/math] | [math]\displaystyle{ {{F}_{2}}\,\! }[/math] | [math]\displaystyle{ {{F}_{2}}\,\! }[/math] |
| [math]\displaystyle{ {{S}_{2}}\,\! }[/math] | [math]\displaystyle{ {{S}_{1}}\,\! }[/math] | [math]\displaystyle{ {{S}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{3}}\,\! }[/math] | [math]\displaystyle{ {{F}_{3}}\,\! }[/math] | [math]\displaystyle{ {{S}_{2}}\,\! }[/math] | [math]\displaystyle{ {{S}_{2}}\,\! }[/math] | [math]\displaystyle{ {{F}_{3}}\,\! }[/math] |
| [math]\displaystyle{ {{F}_{3}}\,\! }[/math] | [math]\displaystyle{ {{F}_{3}}\,\! }[/math] | [math]\displaystyle{ {{S}_{2}}\,\! }[/math] | [math]\displaystyle{ {{S}_{2}}\,\! }[/math] | [math]\displaystyle{ {{S}_{1}}\,\! }[/math] | [math]\displaystyle{ {{S}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{3}}\,\! }[/math] | [math]\displaystyle{ {{S}_{2}}\,\! }[/math] |

It can be seen that [math]\displaystyle{ {{F}_{2}}\,\! }[/math] can occur in the second position six ways and in the third position two ways. The most probable position is the average position over these eight possible ways, or the mean order number (MON), given by:

- [math]\displaystyle{ {{F}_{2}}=MO{{N}_{2}}=\frac{(6\times 2)+(2\times 3)}{6+2}=2.25\,\! }[/math]

Using the same logic on the third failure, it can be located in position numbers 3, 4 and 5 in the possible ways listed next.

[math]\displaystyle{ {{F}_{3}}\,\! }[/math] in Position 3 (ways 1 and 2) OR [math]\displaystyle{ {{F}_{3}}\,\! }[/math] in Position 4 (ways 1 to 3) OR [math]\displaystyle{ {{F}_{3}}\,\! }[/math] in Position 5 (ways 1 to 3). Each column lists one possible order, from first to last:

| Position 3: 1 | Position 3: 2 | Position 4: 1 | Position 4: 2 | Position 4: 3 | Position 5: 1 | Position 5: 2 | Position 5: 3 |
|---|---|---|---|---|---|---|---|
| [math]\displaystyle{ {{F}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{1}}\,\! }[/math] |
| [math]\displaystyle{ {{F}_{2}}\,\! }[/math] | [math]\displaystyle{ {{F}_{2}}\,\! }[/math] | [math]\displaystyle{ {{S}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{2}}\,\! }[/math] | [math]\displaystyle{ {{F}_{2}}\,\! }[/math] | [math]\displaystyle{ {{S}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{2}}\,\! }[/math] | [math]\displaystyle{ {{F}_{2}}\,\! }[/math] |
| [math]\displaystyle{ {{F}_{3}}\,\! }[/math] | [math]\displaystyle{ {{F}_{3}}\,\! }[/math] | [math]\displaystyle{ {{F}_{2}}\,\! }[/math] | [math]\displaystyle{ {{S}_{1}}\,\! }[/math] | [math]\displaystyle{ {{S}_{2}}\,\! }[/math] | [math]\displaystyle{ {{F}_{2}}\,\! }[/math] | [math]\displaystyle{ {{S}_{1}}\,\! }[/math] | [math]\displaystyle{ {{S}_{2}}\,\! }[/math] |
| [math]\displaystyle{ {{S}_{1}}\,\! }[/math] | [math]\displaystyle{ {{S}_{2}}\,\! }[/math] | [math]\displaystyle{ {{F}_{3}}\,\! }[/math] | [math]\displaystyle{ {{F}_{3}}\,\! }[/math] | [math]\displaystyle{ {{F}_{3}}\,\! }[/math] | [math]\displaystyle{ {{S}_{2}}\,\! }[/math] | [math]\displaystyle{ {{S}_{2}}\,\! }[/math] | [math]\displaystyle{ {{S}_{1}}\,\! }[/math] |
| [math]\displaystyle{ {{S}_{2}}\,\! }[/math] | [math]\displaystyle{ {{S}_{1}}\,\! }[/math] | [math]\displaystyle{ {{S}_{2}}\,\! }[/math] | [math]\displaystyle{ {{S}_{2}}\,\! }[/math] | [math]\displaystyle{ {{S}_{1}}\,\! }[/math] | [math]\displaystyle{ {{F}_{3}}\,\! }[/math] | [math]\displaystyle{ {{F}_{3}}\,\! }[/math] | [math]\displaystyle{ {{F}_{3}}\,\! }[/math] |

Then, the mean order number for the third failure (item 5) is:

- [math]\displaystyle{ MO{{N}_{3}}=\frac{(2\times 3)+(3\times 4)+(3\times 5)}{2+3+3}=4.125\,\! }[/math]
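The counting behind these mean order numbers can be checked by brute force. The sketch below is only an illustration: it assumes the observed test order is F1, S1, F2, S2, F3 (inferred from the worked numbers, since 6 + 2 = 8 and 2 + 3 + 3 = 8 arrangements), and the helper names `is_feasible` and `mean_order_number` are hypothetical. An arrangement is treated as feasible when every observed failure precedes all items observed after it, because a suspended item could only have failed after its suspension time.

```python
from itertools import permutations

# Assumed observed order of the five items (inferred from the worked example).
ITEMS = ["F1", "S1", "F2", "S2", "F3"]


def is_feasible(order):
    """Return True if a hypothetical failure ordering is consistent with the test."""
    pos = {item: i for i, item in enumerate(order)}
    for i, earlier in enumerate(ITEMS):
        if not earlier.startswith("F"):
            continue  # a suspension places no constraint on later items
        # An observed failure must come before every item observed after it.
        if any(pos[earlier] > pos[later] for later in ITEMS[i + 1:]):
            return False
    return True


FEASIBLE = [order for order in permutations(ITEMS) if is_feasible(order)]


def mean_order_number(failure):
    """Average 1-based position of a failure over all feasible orderings."""
    positions = [order.index(failure) + 1 for order in FEASIBLE]
    return sum(positions) / len(positions)


for name in ("F1", "F2", "F3"):
    print(name, mean_order_number(name))
```

Under these assumptions the enumeration finds eight feasible arrangements, and the averages agree with the hand-counted values of 2.25 and 4.125.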

Once the mean order number for each failure has been established, we obtain the median rank positions for these failures at their mean order number. Specifically, we obtain the median rank of the order numbers 1, 2.25 and 4.125 out of a sample size of 5, as given next.

| Plotting Positions for the Failures (Sample Size=5) | ||

|---|---|---|

| Failure Number | MON | Median Rank Position(%) |

| 1:[math]\displaystyle{ {{F}_{1}}\,\! }[/math] | 1 | 13% |

| 2:[math]\displaystyle{ {{F}_{2}}\,\! }[/math] | 2.25 | 36% |

| 3:[math]\displaystyle{ {{F}_{3}}\,\! }[/math] | 4.125 | 71% |
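The percentages in this table can be approximated without solving the median rank equation exactly. The sketch below uses Benard's approximation, (MON - 0.3)/(N + 0.4), which is a substitute for the exact median rank calculation and not necessarily the method used to produce the table. The function name `benard_median_rank` is a hypothetical helper.

```python
def benard_median_rank(order_number, sample_size):
    """Benard's approximation to the median rank; accepts non-integer order numbers."""
    return (order_number - 0.3) / (sample_size + 0.4)


# Mean order numbers from the example, with a sample size of 5.
mons = {"F1": 1.0, "F2": 2.25, "F3": 4.125}
ranks = {name: benard_median_rank(mon, 5) for name, mon in mons.items()}

for name, rank in ranks.items():
    print(f"{name}: MON = {mons[name]}, median rank ~ {rank:.1%}")
```

Rounded to whole percentages, the approximation gives the same plotting positions as the table.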

Once the median rank values have been obtained, the probability plotting analysis is identical to that presented before. As you might have noticed, this methodology is rather laborious. Other techniques and shortcuts have been developed over the years to streamline this procedure. For more details on this method, see Kececioglu [20]. Here, we will introduce one of these methods. This method calculates MON using an increment, I, which is defined by:

- [math]\displaystyle{ {{I}_{i}}=\frac{N+1-PMON}{1+NIBPSS} }[/math]

Where

- N= the sample size, or total number of items in the test

- PMON = previous mean order number

- NIBPSS = the number of items beyond the present suspended set. It is the number of units (failures and suspensions) whose times are at or beyond the current failure time, including the current failure itself.

- i = the ith failure item

MON is given as:

- [math]\displaystyle{ MO{{N}_{i}}=MO{{N}_{i-1}}+{{I}_{i}} }[/math]

Let's recalculate the previous example using this method.

For F1:

- [math]\displaystyle{ MO{{N}_{1}}=MO{{N}_{0}}+{{I}_{1}}=0+\frac{5+1-0}{1+5}=1 }[/math]

For F2:

- [math]\displaystyle{ MO{{N}_{2}}=MO{{N}_{1}}+{{I}_{2}}=1+\frac{5+1-1}{1+3}=2.25 }[/math]

For F3:

- [math]\displaystyle{ MO{{N}_{3}}=MO{{N}_{2}}+{{I}_{3}}=2.25+\frac{5+1-2.25}{1+1}=4.125 }[/math]

The MON obtained for each failure item via this method is the same as that from the first method, so the median rank values will also be the same.

For grouped data, the increment [math]\displaystyle{ {{I}_{i}} }[/math] at each failure group is multiplied by the number of failures in that group.
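The increment method is straightforward to script. The following is a minimal sketch for ungrouped data, assuming the items are already sorted by time and coded as "F" (failure) or "S" (suspension). The function name `mean_order_numbers` is hypothetical, and NIBPSS is counted as the number of items from the current failure onward, which matches the worked example.

```python
def mean_order_numbers(states):
    """Compute the MON of each failure from time-ordered 'F'/'S' state codes."""
    n = len(states)          # N: total number of items on test
    previous_mon = 0.0       # PMON, with MON_0 = 0
    mons = []
    for index, state in enumerate(states):
        if state != "F":
            continue         # suspensions only affect later increments
        items_beyond = n - index  # NIBPSS: items at or beyond this failure
        increment = (n + 1 - previous_mon) / (1 + items_beyond)
        previous_mon += increment
        mons.append(previous_mon)
    return mons


# Assumed order of the example data: F1, S1, F2, S2, F3.
example_mons = mean_order_numbers(["F", "S", "F", "S", "F"])
print(example_mons)
```

For the example this yields 1, 2.25 and 4.125. Because only the state sequence enters the calculation, two data sets with the same sequence always produce the same mean order numbers.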

Shortfalls of the Rank Adjustment Method

Even though the rank adjustment method is the most widely used method for analyzing data with suspended items, we would like to point out the following shortcoming. As you may have noticed, only the position of each suspension relative to the failures is taken into account, and not the exact time-to-suspension. For example, this methodology would yield exactly the same results for the next two cases.

| Case 1 | Case 2 | ||||

|---|---|---|---|---|---|

| Item Number | State* "F" or "S" | Life of item, hr | Item Number | State* "F" or "S" | Life of item, hr |

| 1 | [math]\displaystyle{ {{F}_{1}}\,\! }[/math] | 1,000 | 1 | [math]\displaystyle{ {{F}_{1}}\,\! }[/math] | 1,000 |

| 2 | [math]\displaystyle{ {{S}_{1}}\,\! }[/math] | 1,100 | 2 | [math]\displaystyle{ {{S}_{1}}\,\! }[/math] | 9,700 |

| 3 | [math]\displaystyle{ {{S}_{2}}\,\! }[/math] | 1,200 | 3 | [math]\displaystyle{ {{S}_{2}}\,\! }[/math] | 9,800 |

| 4 | [math]\displaystyle{ {{S}_{3}}\,\! }[/math] | 1,300 | 4 | [math]\displaystyle{ {{S}_{3}}\,\! }[/math] | 9,900 |

| 5 | [math]\displaystyle{ {{F}_{2}}\,\! }[/math] | 10,000 | 5 | [math]\displaystyle{ {{F}_{2}}\,\! }[/math] | 10,000 |

| * F - Failed, S - Suspended | * F - Failed, S - Suspended | ||||

This shortfall is significant when the number of failures is small and the number of suspensions is large and not spread uniformly between failures, as with these data. In cases like this, it is highly recommended to use maximum likelihood estimation (MLE) to estimate the parameters instead of using least squares, because MLE does not look at ranks or plotting positions, but rather considers each unique time-to-failure or suspension. For the data given above, the results are as follows. The estimated parameters using the method just described are the same for both cases (1 and 2):

- [math]\displaystyle{ \begin{array}{*{35}{l}} \widehat{\beta }= & \text{0}\text{.81} \\ \widehat{\eta }= & \text{11,400 hr} \\ \end{array} \,\! }[/math]

However, the MLE results for Case 1 are:

- [math]\displaystyle{ \begin{array}{*{35}{l}} \widehat{\beta }= & \text{1.33} \\ \widehat{\eta }= & \text{6,920 hr} \\ \end{array}\,\! }[/math]

And the MLE results for Case 2 are:

- [math]\displaystyle{ \begin{array}{*{35}{l}} \widehat{\beta }= & \text{0}\text{.93} \\ \widehat{\eta }= & \text{21,300 hr} \\ \end{array}\,\! }[/math]

As we can see, there is a sizable difference in the results of the two sets calculated using MLE and the results using regression with the SRM. The results for both cases are identical when using the regression estimation technique with SRM, as SRM considers only the positions of the suspensions. The MLE results are quite different for the two cases, with the second case having a much larger value of [math]\displaystyle{ \eta \,\! }[/math], which is due to the higher values of the suspension times in Case 2. This is because the maximum likelihood technique, unlike rank regression with SRM, considers the values of the suspensions when estimating the parameters. This is illustrated in the discussion of MLE given below.

One alternative to improve the regression method is to use the following ReliaSoft Ranking Method (RRM) to calculate the rank. RRM does consider the effect of the censoring time.

ReliaSoft's Ranking Method (RRM) for Interval Censored Data

When analyzing interval data, it is commonplace to assume that the actual failure time occurred at the midpoint of the interval. To be more conservative, you can use the starting point of the interval; to be most optimistic, you can use the end point. Weibull++ allows you to employ ReliaSoft's ranking method (RRM) when analyzing interval data. Using an iterative process, this ranking method is an improvement over the standard ranking method (SRM).

When analyzing left or right censored data, RRM also considers the effect of the actual censoring time. Therefore, the resulting rank will be more accurate than that of the SRM, where only the position, not the exact time, of censoring is used.

For more details on this method see ReliaSoft's Ranking Method.

Maximum Likelihood Estimation (MLE)

From a statistical point of view, the method of maximum likelihood estimation is, with some exceptions, considered to be the most robust of the parameter estimation techniques discussed here. The method presented in this section is for complete data (i.e., data consisting only of times-to-failure). The analyses for right censored (suspension) data and for interval or left censored data are discussed in the following sections.

The basic idea behind MLE is to obtain the most likely values of the parameters, for a given distribution, that will best describe the data. As an example, consider the following data (-3, 0, 4) and assume that you are trying to estimate the mean of the data. Now, if you have to choose the most likely value for the mean from -5, 1 and 10, which one would you choose? In this case, the most likely value is 1 (given your limit on choices). Similarly, under MLE, one determines the most likely values for the parameters of the assumed distribution. It is mathematically formulated as follows.
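The choice among the three candidate means can be made concrete by scoring each with a log-likelihood. The sketch below assumes a normal distribution with a fixed standard deviation of 1; the text does not specify a distribution, so this is an illustrative assumption, and `normal_log_likelihood` is a hypothetical helper.

```python
import math


def normal_log_likelihood(data, mean, sigma=1.0):
    """Log-likelihood of the data under a normal pdf (sigma fixed by assumption)."""
    return sum(
        -0.5 * math.log(2.0 * math.pi * sigma ** 2)
        - (x - mean) ** 2 / (2.0 * sigma ** 2)
        for x in data
    )


data = [-3, 0, 4]
candidates = [-5, 1, 10]

# Pick the candidate mean with the largest log-likelihood.
best_mean = max(candidates, key=lambda m: normal_log_likelihood(data, m))
print(best_mean)
```

With these assumptions the candidate 1 has the largest log-likelihood, since it has the smallest sum of squared deviations from the data (26, versus 110 for -5 and 305 for 10).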

If [math]\displaystyle{ x\,\! }[/math] is a continuous random variable with pdf:

- [math]\displaystyle{ \begin{align} & f(x;{{\theta }_{1}},{{\theta }_{2}},...,{{\theta }_{k}}) \\ \end{align} \,\! }[/math]

where [math]\displaystyle{ {{\theta}_{1}},{{\theta}_{2}},...,{{\theta}_{k}}\,\! }[/math] are [math]\displaystyle{ k\,\! }[/math] unknown parameters that need to be estimated from R independent observations, [math]\displaystyle{ {{x}_{1}},{{x}_{2}},\cdots ,{{x}_{R}}\,\! }[/math], which in life data analysis correspond to failure times. The likelihood function is given by:

- [math]\displaystyle{ L({{\theta }_{1}},{{\theta }_{2}},...,{{\theta }_{k}}|{{x}_{1}},{{x}_{2}},...,{{x}_{R}})=L=\underset{i=1}{\overset{R}{\mathop \prod }}\,f({{x}_{i}};{{\theta }_{1}},{{\theta }_{2}},...,{{\theta }_{k}}) \,\! }[/math]

- [math]\displaystyle{ i = 1,2,...,R\,\! }[/math]

The logarithmic likelihood function is given by:

- [math]\displaystyle{ \Lambda = \ln L =\sum_{i = 1}^R \ln f({x_i};{\theta _1},{\theta _2},...,{\theta _k})\,\! }[/math]

The maximum likelihood estimators (or parameter values) of [math]\displaystyle{ {{\theta}_{1}},{{\theta}_{2}},...,{{\theta}_{k}}\,\! }[/math] are obtained by maximizing [math]\displaystyle{ L\,\! }[/math] or [math]\displaystyle{ \Lambda\,\! }[/math].

By maximizing [math]\displaystyle{ \Lambda\,\! }[/math], which is much easier to work with than [math]\displaystyle{ L\,\! }[/math], the maximum likelihood estimators (MLE) of [math]\displaystyle{ {{\theta}_{1}},{{\theta}_{2}},...,{{\theta}_{k}}\,\! }[/math] are the simultaneous solutions of [math]\displaystyle{ k\,\! }[/math] equations such that:

- [math]\displaystyle{ \frac{\partial \Lambda }{\partial {{\theta }_{j}}}=0,\quad j=1,2,...,k\,\! }[/math]
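As a short worked illustration, consider the one-parameter exponential distribution. It is chosen here only because it has a closed-form solution, and the symbol [math]\displaystyle{ \lambda \,\! }[/math] is introduced just for this example. With pdf:

- [math]\displaystyle{ f(x;\lambda )=\lambda {{e}^{-\lambda x}}\,\! }[/math]

the log-likelihood function for [math]\displaystyle{ R\,\! }[/math] observed failure times is:

- [math]\displaystyle{ \Lambda =\sum_{i=1}^{R}\ln \left( \lambda {{e}^{-\lambda {{x}_{i}}}} \right)=R\ln \lambda -\lambda \sum_{i=1}^{R}{{x}_{i}}\,\! }[/math]

Setting the single partial derivative to zero gives the estimator:

- [math]\displaystyle{ \frac{\partial \Lambda }{\partial \lambda }=\frac{R}{\lambda }-\sum_{i=1}^{R}{{x}_{i}}=0\quad \Rightarrow \quad \widehat{\lambda }=\frac{R}{\sum_{i=1}^{R}{{x}_{i}}}\,\! }[/math]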

Even though it is common practice to plot the MLE solutions using median ranks (points are plotted according to median ranks and the line according to the MLE solutions), this is not completely representative. As can be seen from the equations above, the MLE method is independent of any kind of ranks. For this reason, the MLE solution often appears not to track the data on the probability plot. This is perfectly acceptable because the two methods are independent of each other, and in no way suggests that the solution is wrong.

MLE for Right Censored Data

When performing maximum likelihood analysis on data with suspended items, the likelihood function needs to be expanded to take into account the suspended items. The overall estimation technique does not change, but another term is added to the likelihood function to account for the suspended items. Beyond that, the method of solving for the parameter estimates remains the same. For example, consider a distribution where [math]\displaystyle{ x\,\! }[/math] is a continuous random variable with pdf and cdf:

- [math]\displaystyle{ \begin{align} & f(x;{{\theta }_{1}},{{\theta }_{2}},...,{{\theta }_{k}}) \\ & F(x;{{\theta }_{1}},{{\theta }_{2}},...,{{\theta }_{k}}) \end{align} \,\! }[/math]

where [math]\displaystyle{ {{\theta}_{1}},{{\theta}_{2}},...,{{\theta}_{k}}\,\! }[/math] are the unknown parameters which need to be estimated from [math]\displaystyle{ R\,\! }[/math] observed failures at [math]\displaystyle{ {{T}_{1}},{{T}_{2}},...,{{T}_{R}}\,\! }[/math], and [math]\displaystyle{ M\,\! }[/math] observed suspensions at [math]\displaystyle{ {{S}_{1}},{{S}_{2}},...,{{S}_{M}}\,\! }[/math] then the likelihood function is formulated as follows:

- [math]\displaystyle{ \begin{align} L({{\theta }_{1}},...,{{\theta }_{k}}|{{T}_{1}},...,{{T}_{R}},{{S}_{1}},...,{{S}_{M}})= & \underset{i=1}{\overset{R}{\mathop \prod }}\,f({{T}_{i}};{{\theta }_{1}},{{\theta }_{2}},...,{{\theta }_{k}}) \\ & \cdot \underset{j=1}{\overset{M}{\mathop \prod }}\,[1-F({{S}_{j}};{{\theta }_{1}},{{\theta }_{2}},...,{{\theta }_{k}})] \end{align}\,\! }[/math]

The parameters are solved by maximizing this equation. In most cases, no closed-form solution exists for this maximum or for the parameters. Solutions specific to each distribution utilizing MLE are presented in Appendix D.
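To illustrate how the suspension times enter the solution, the sketch below maximizes this likelihood numerically for a two-parameter Weibull distribution with shape [math]\displaystyle{ \beta \,\! }[/math] and scale [math]\displaystyle{ \eta \,\! }[/math]. This is a minimal sketch, not the Weibull++ implementation. It assumes at least one failure, eliminates [math]\displaystyle{ \eta \,\! }[/math] analytically, and solves the remaining equation in [math]\displaystyle{ \beta \,\! }[/math] by bisection on an assumed bracket of 0.001 to 50. The function name `weibull_mle` is hypothetical.

```python
import math


def weibull_mle(failures, suspensions):
    """Weibull MLE (beta, eta) for exact failure times and right-censored times."""
    times = list(failures) + list(suspensions)
    t_max = max(times)
    scaled = [t / t_max for t in times]   # rescale so that powers stay <= 1
    r = len(failures)
    mean_log_fail = sum(math.log(t / t_max) for t in failures) / r

    def score(beta):
        # d(Lambda)/d(beta) = 0 after substituting eta^beta = sum(t^beta) / r
        powers = [s ** beta for s in scaled]
        weighted = sum(p * math.log(s) for p, s in zip(powers, scaled))
        return weighted / sum(powers) - 1.0 / beta - mean_log_fail

    lo, hi = 1e-3, 50.0                   # assumed bracket for beta
    for _ in range(200):                  # score() is increasing in beta
        mid = 0.5 * (lo + hi)
        if score(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    beta = 0.5 * (lo + hi)
    eta = t_max * (sum(s ** beta for s in scaled) / r) ** (1.0 / beta)
    return beta, eta


# Case 1 and Case 2 from the rank adjustment discussion (times in hours).
beta1, eta1 = weibull_mle([1000, 10000], [1100, 1200, 1300])
beta2, eta2 = weibull_mle([1000, 10000], [9700, 9800, 9900])
print(round(beta1, 2), round(eta1), round(beta2, 2), round(eta2))
```

Both cases share the same failure times, so the difference between the two estimates comes entirely from the suspension terms in the likelihood, which rank-based methods ignore.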

MLE for Interval and Left Censored Data

The inclusion of left and interval censored data in an MLE solution for parameter estimates involves adding a term to the likelihood equation to account for the data types in question. When using interval data, it is assumed that the failures occurred in an interval; i.e., in the interval from time [math]\displaystyle{ A\,\! }[/math] to time [math]\displaystyle{ B\,\! }[/math] (or from time 0 to time [math]\displaystyle{ B\,\! }[/math] if left censored), where [math]\displaystyle{ A \lt B\,\! }[/math]. In the case of interval data, and given [math]\displaystyle{ P\,\! }[/math] interval observations, the likelihood function is modified by multiplying the likelihood function with an additional term as follows:

- [math]\displaystyle{ \begin{align} L({{\theta }_{1}},{{\theta }_{2}},...,{{\theta }_{k}}|{{x}_{1}},{{x}_{2}},...,{{x}_{P}})= & \underset{i=1}{\overset{P}{\mathop \prod }}\,\{F({{x}_{i}};{{\theta }_{1}},{{\theta }_{2}},...,{{\theta }_{k}}) \\ & \ \ -F({{x}_{i-1}};{{\theta }_{1}},{{\theta }_{2}},...,{{\theta }_{k}})\} \end{align}\,\! }[/math]

Note that if only interval data are present, this term will represent the entire likelihood function for the MLE solution. The next section gives a formulation of the complete likelihood function for all possible censoring schemes.

The Complete Likelihood Function