Basic Statistical Background


Revision as of 22:16, 30 September 2011

Statistical Background

This section provides a brief elementary introduction to the most common and fundamental statistical equations and definitions used in reliability engineering and life data analysis.

Random Variables

In general, most problems in reliability engineering deal with quantitative measures, such as the time-to-failure of a component, or whether the component fails or does not fail. In judging a component to be defective or non-defective, only two outcomes are possible. We can then denote a random variable X as representative of these possible outcomes (i.e. defective or non-defective). In this case, X is a random variable that can only take on these values.

In the case of times-to-failure, our random variable X can take on the time-to-failure (or time to an event of interest) of the product or component and can be in a range from 0 to infinity (since we do not know the exact time a priori).

In the first case, where the random variable can take on only two discrete values (let's say defective = 0 and non-defective = 1), the variable is said to be a discrete random variable. In the second case, our product can be found failed at any time after time 0, e.g. at 12.4 hours or at 100.12 miles and so forth, thus X can take on any value in this range. In this case, our random variable X is said to be a continuous random variable.

The Probability Density and Cumulative Distribution Functions

Designations

From probability and statistics, given a continuous random variable, we denote:

- The probability density function, pdf, as f(x).

- The cumulative distribution function, cdf, as F(x).

The pdf and cdf give a complete description of the probability distribution of a random variable.

Definitions

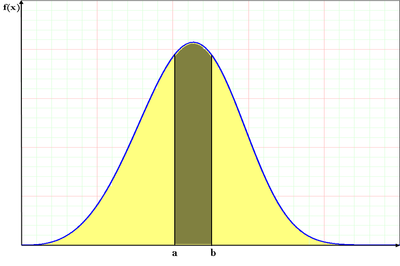

If [math]\displaystyle{ X }[/math] is a continuous random variable, then the probability density function, [math]\displaystyle{ pdf }[/math], of [math]\displaystyle{ X }[/math], is a function [math]\displaystyle{ f(x) }[/math] such that for two numbers, [math]\displaystyle{ a }[/math] and [math]\displaystyle{ b }[/math] with [math]\displaystyle{ a\le b }[/math]:

- [math]\displaystyle{ P(a \le X \le b)=\int_a^b f(x)dx }[/math] and [math]\displaystyle{ f(x)\ge 0 }[/math] for all x

That is, the probability that [math]\displaystyle{ X }[/math] takes on a value in the interval [a,b] is the area under the density function from [math]\displaystyle{ a }[/math] to [math]\displaystyle{ b }[/math]. The cumulative distribution function, [math]\displaystyle{ cdf }[/math], is a function [math]\displaystyle{ F(x) }[/math] of a random variable, [math]\displaystyle{ X }[/math], and is defined for a number [math]\displaystyle{ x }[/math] by:

- [math]\displaystyle{ F(x)=P(X\le x)=\int_{0}^{x}f(s)ds }[/math]

That is, for a given value [math]\displaystyle{ x }[/math], [math]\displaystyle{ F(x) }[/math] is the probability that the observed value of [math]\displaystyle{ X }[/math] will be at most [math]\displaystyle{ x }[/math].

Note that the limits of integration depend on the domain of [math]\displaystyle{ f(x) }[/math]. For example, for all the distributions considered in this reference, this domain would be [math]\displaystyle{ [0,+\infty) }[/math], [math]\displaystyle{ (-\infty ,+\infty) }[/math] or [math]\displaystyle{ [\gamma ,+\infty) }[/math]. In the case of [math]\displaystyle{ [\gamma ,+\infty) }[/math], we use the constant [math]\displaystyle{ \gamma }[/math] to denote an arbitrary non-zero point (or a location that indicates the starting point for the distribution). Figure 3-1 illustrates the relationship between the probability density function and the cumulative distribution function.
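As a rough numerical illustration of the cdf as the area under the pdf up to [math]\displaystyle{ x }[/math], the sketch below integrates a pdf with the trapezoidal rule. The exponential pdf, the rate value and the helper names `exp_pdf` and `cdf_numeric` are assumptions chosen for the example; they do not come from this reference.

```python
import math


def exp_pdf(t, lam):
    """Assumed example pdf: exponential, f(t) = lam * exp(-lam * t) for t >= 0."""
    return lam * math.exp(-lam * t) if t >= 0 else 0.0


def cdf_numeric(pdf, x, lower=0.0, steps=10_000):
    """Approximate F(x), the integral of pdf from `lower` to x (trapezoidal rule)."""
    if x <= lower:
        return 0.0
    h = (x - lower) / steps
    total = 0.5 * (pdf(lower) + pdf(x))
    for i in range(1, steps):
        total += pdf(lower + i * h)
    return total * h


LAM = 0.01  # assumed failure rate, failures per hour
F_numeric = cdf_numeric(lambda s: exp_pdf(s, LAM), 100.0)
F_exact = 1.0 - math.exp(-LAM * 100.0)  # closed-form exponential cdf
print(f"numeric F(100) = {F_numeric:.6f}, closed form = {F_exact:.6f}")
```

The `lower` argument plays the role of the lower limit of integration (0 here); for a distribution that starts at [math]\displaystyle{ \gamma }[/math], it would be set to that value instead.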

Mathematical Relationship Between the [math]\displaystyle{ pdf }[/math] and [math]\displaystyle{ cdf }[/math]

The mathematical relationship between the [math]\displaystyle{ pdf }[/math] and [math]\displaystyle{ cdf }[/math] is given by:

- [math]\displaystyle{ F(x)=\int_{-\infty }^x f(s)ds }[/math]

- Conversely:

- [math]\displaystyle{ f(x)=\frac{d(F(x))}{dx} }[/math]

In plain English, the value of the [math]\displaystyle{ cdf }[/math] at [math]\displaystyle{ x }[/math] is the area under the probability density function up to [math]\displaystyle{ x }[/math]. It should also be pointed out that the total area under the [math]\displaystyle{ pdf }[/math] is always equal to 1, or mathematically:

- [math]\displaystyle{ \int_{-\infty }^{\infty }f(x)dx=1 }[/math]
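Both relationships can be checked numerically. The following is a minimal sketch that uses Python's standard-library `statistics.NormalDist`; the parameter values and the helper names `derivative` and `integrate` are assumptions made for illustration only.

```python
from statistics import NormalDist

dist = NormalDist(mu=100.0, sigma=15.0)  # assumed illustrative parameters


def derivative(func, x, h=1e-4):
    """Central-difference approximation of d(func)/dx at x."""
    return (func(x + h) - func(x - h)) / (2.0 * h)


def integrate(func, a, b, steps=20_000):
    """Trapezoidal approximation of the integral of func from a to b."""
    h = (b - a) / steps
    total = 0.5 * (func(a) + func(b))
    for i in range(1, steps):
        total += func(a + i * h)
    return total * h


x = 110.0
slope_of_cdf = derivative(dist.cdf, x)  # numerical dF/dx
density = dist.pdf(x)                   # f(x)

# The infinite limits are truncated to +/- 10 standard deviations.
area = integrate(dist.pdf, dist.mean - 10 * dist.stdev, dist.mean + 10 * dist.stdev)

print(f"dF/dx at {x}: {slope_of_cdf:.8f}, f(x): {density:.8f}")
print(f"total area under the pdf: {area:.8f}")
```

The slope of the cdf should agree with the pdf value to within the accuracy of the finite difference, and the truncated area should be very close to 1.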

An example of a probability density function is the well-known normal distribution, whose [math]\displaystyle{ pdf }[/math] is given by:

- [math]\displaystyle{ f(t)=\frac{1}{\sigma \sqrt{2\pi }}{e}^{-\frac{1}{2}\left(\frac{t-\mu}{\sigma}\right)^2} }[/math]

where [math]\displaystyle{ \mu }[/math] is the mean and [math]\displaystyle{ \sigma }[/math] is the standard deviation. The normal distribution is a two-parameter distribution, i.e. with two parameters [math]\displaystyle{ \mu }[/math] and [math]\displaystyle{ \sigma }[/math]. Another two-parameter distribution is the lognormal distribution, whose [math]\displaystyle{ pdf }[/math] is given by:

- [math]\displaystyle{ f(t)=\frac{1}{t\cdot {{\sigma }^{\prime }}\sqrt{2\pi }}{e}^{-\tfrac{1}{2}(\tfrac{t^{\prime}-{\mu^{\prime}}}{\sigma^{\prime}})^2} }[/math]

where [math]\displaystyle{ t^{\prime} }[/math] is the natural logarithm of the times-to-failure, [math]\displaystyle{ \mu^{\prime} }[/math] is the mean of the natural logarithms of the times-to-failure and [math]\displaystyle{ \sigma^{\prime} }[/math] is the standard deviation of the natural logarithms of the times-to-failure, [math]\displaystyle{ t^{\prime } }[/math].
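The two density functions translate directly into code. The sketch below is one possible transcription; the function names and the sample parameter values are assumptions, and `statistics.NormalDist` from the Python standard library is used only as a cross-check for the normal pdf.

```python
import math
from statistics import NormalDist


def normal_pdf(t, mu, sigma):
    """Normal pdf with mean mu and standard deviation sigma."""
    z = (t - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def lognormal_pdf(t, mu_prime, sigma_prime):
    """Lognormal pdf; mu_prime and sigma_prime describe ln(t)."""
    if t <= 0:
        return 0.0
    z = (math.log(t) - mu_prime) / sigma_prime
    return math.exp(-0.5 * z * z) / (t * sigma_prime * math.sqrt(2.0 * math.pi))


# Assumed sample values for illustration.
f_normal = normal_pdf(110.0, mu=100.0, sigma=15.0)
f_reference = NormalDist(100.0, 15.0).pdf(110.0)
f_lognormal = lognormal_pdf(120.0, mu_prime=5.0, sigma_prime=0.5)

print(f"normal pdf: {f_normal:.8f} (library value {f_reference:.8f})")
print(f"lognormal pdf: {f_lognormal:.8f}")
```

Note that the lognormal pdf at [math]\displaystyle{ t }[/math] equals the normal pdf evaluated at [math]\displaystyle{ t^{\prime}=\ln t }[/math], divided by [math]\displaystyle{ t }[/math], which is where the extra factor of [math]\displaystyle{ t }[/math] in the denominator comes from.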

The Reliability Function

The reliability function can be derived using the previous definition of the cumulative distribution function. The probability of an event happening by time [math]\displaystyle{ t }[/math] is given by:

- [math]\displaystyle{ F(t)=\int_{0,\gamma}^{t}f(s)ds }[/math]

In particular, this represents the probability of a unit failing by time [math]\displaystyle{ t }[/math]. From this, we obtain the most commonly used function in reliability engineering, the reliability function, which represents the probability of success of a unit in undertaking a mission of a prescribed duration. To show this mathematically, we first define the unreliability function, [math]\displaystyle{ Q(t) }[/math], which is the probability of failure, or the probability that our time-to-failure lies between [math]\displaystyle{ 0 }[/math] (or [math]\displaystyle{ \gamma }[/math]) and [math]\displaystyle{ t }[/math]. So, from the previous equation:

- [math]\displaystyle{ F(t)=Q(t)=\int_{0,\gamma}^{t}f(s)ds }[/math]

In this situation, there are only two states that can occur: success or failure. These two states are also mutually exclusive. Since reliability and unreliability are the probabilities of these two mutually exclusive states, the sum of these probabilities is always equal to unity. So then:

- [math]\displaystyle{ \begin{align} Q(t)+R(t)& = 1 \\ R(t) & = 1-Q(t) \\ R(t) & = 1-\int_{0,\gamma}^{t}f(s)ds \\ R(t) & = \int_{t}^{\infty }f(s)ds \end{align} }[/math]

- Conversely:

- [math]\displaystyle{ f(t)=-\frac{d(R(t))}{dt} }[/math]
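The derivation can be illustrated numerically. The sketch below assumes a two-parameter Weibull distribution, which is not introduced in the text above and serves only as an example; the parameter values and function names are likewise assumptions.

```python
import math

BETA, ETA = 2.0, 1000.0  # assumed Weibull shape and scale (hours)


def weibull_pdf(t):
    """f(t) for the assumed Weibull distribution, zero for t < 0."""
    if t < 0:
        return 0.0
    return (BETA / ETA) * (t / ETA) ** (BETA - 1.0) * math.exp(-((t / ETA) ** BETA))


def unreliability(t, steps=10_000):
    """Q(t) = F(t): trapezoidal integral of the pdf from 0 to t."""
    if t <= 0:
        return 0.0
    h = t / steps
    total = 0.5 * (weibull_pdf(0.0) + weibull_pdf(t))
    for i in range(1, steps):
        total += weibull_pdf(i * h)
    return total * h


def reliability(t):
    """R(t) = 1 - Q(t)."""
    return 1.0 - unreliability(t)


t = 500.0
R_numeric = reliability(t)
R_closed = math.exp(-((t / ETA) ** BETA))  # closed-form Weibull reliability
print(f"R({t}) numeric = {R_numeric:.6f}, closed form = {R_closed:.6f}")
```

Here the lower limit of integration is 0; a distribution with a location parameter [math]\displaystyle{ \gamma }[/math] would start the integral at [math]\displaystyle{ \gamma }[/math] instead.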

The Conditional Reliability Function

The reliability function discussed previously assumes that the unit is starting the mission with no accumulated time, i.e. brand new. Conditional reliability calculations allow one to calculate the probability of a unit successfully completing a mission of a particular duration given that it has already successfully completed a mission of a certain duration. In this respect, the conditional reliability function could be considered to be the reliability of used equipment.

The conditional reliability function is given by the equation,

- [math]\displaystyle{ R(t|T)=\frac{R(T+t)}{R(T)} }[/math]

- where:

- t is the duration of the new mission, and

- T is the duration of the successfully completed previous mission.

In other words, the fact that the equipment has already successfully completed a mission, [math]\displaystyle{ T }[/math], tells us that the product successfully traversed the failure rate path for the period from [math]\displaystyle{ 0\to T }[/math], and it will now be failing according to the failure rate from [math]\displaystyle{ T\to T+t }[/math]. This is used when analyzing warranty data (Chapter 11).
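A short sketch of the conditional reliability calculation follows. It assumes a Weibull reliability function, [math]\displaystyle{ R(t)=e^{-(t/\eta)^{\beta}} }[/math], purely as an example; the distribution, the parameter values and the function names are not taken from this reference.

```python
import math


def weibull_reliability(t, beta, eta):
    """Assumed example reliability function: R(t) = exp(-(t / eta) ** beta)."""
    return math.exp(-((t / eta) ** beta))


def conditional_reliability(t, T, reliability):
    """R(t | T) = R(T + t) / R(T) for a new mission t after surviving T."""
    return reliability(T + t) / reliability(T)


def wearout(t):
    """Unit with an increasing failure rate (beta > 1), assumed parameters."""
    return weibull_reliability(t, beta=2.0, eta=1000.0)


def constant_rate(t):
    """Unit with a constant failure rate (beta = 1), assumed parameters."""
    return weibull_reliability(t, beta=1.0, eta=1000.0)


T, t = 500.0, 200.0  # hours already survived, hours of the new mission
used_wearout = conditional_reliability(t, T, wearout)
new_wearout = wearout(t)
used_constant = conditional_reliability(t, T, constant_rate)
new_constant = constant_rate(t)

print(f"wear-out unit: new {new_wearout:.4f}, used {used_wearout:.4f}")
print(f"constant-rate unit: new {new_constant:.4f}, used {used_constant:.4f}")
```

Under these assumptions, the used wear-out unit has a lower reliability for the same mission than a new one, while the constant-rate unit is unaffected by its accumulated time.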

The Failure Rate Function

The failure rate function enables the determination of the number of failures occurring per unit time. Omitting the derivation (see [19; Chapter 4]), the failure rate is mathematically given as,

- [math]\displaystyle{ \begin{align} \lambda(t) & = \frac{f(t)}{1-\int_{0,\gamma}^{t}f(s)ds} \\ & = \frac{f(t)}{R(t)} \\ \end{align} }[/math]

The failure rate function has the units of failures per unit time among surviving units, e.g. 1 failure per month.
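The ratio [math]\displaystyle{ f(t)/R(t) }[/math] is straightforward to evaluate once the pdf and reliability function are available. The sketch below again assumes a Weibull distribution with made-up parameters, for which the failure rate has the known closed form [math]\displaystyle{ (\beta/\eta)(t/\eta)^{\beta-1} }[/math]; none of these names or values come from this reference.

```python
import math

BETA, ETA = 2.0, 1000.0  # assumed Weibull shape and scale (hours)


def weibull_pdf(t):
    """f(t) for the assumed Weibull distribution."""
    return (BETA / ETA) * (t / ETA) ** (BETA - 1.0) * math.exp(-((t / ETA) ** BETA))


def weibull_reliability(t):
    """R(t) for the assumed Weibull distribution."""
    return math.exp(-((t / ETA) ** BETA))


def failure_rate(t, pdf, reliability):
    """lambda(t) = f(t) / R(t), in failures per unit time among survivors."""
    return pdf(t) / reliability(t)


for hours in (100.0, 500.0, 1000.0):
    rate = failure_rate(hours, weibull_pdf, weibull_reliability)
    closed_form = (BETA / ETA) * (hours / ETA) ** (BETA - 1.0)
    print(f"t = {hours:6.0f} h: lambda = {rate:.6f} (closed form {closed_form:.6f})")
```

With a shape parameter greater than 1, as assumed here, the failure rate increases with time, which corresponds to wear-out behavior.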

The Mean Life Function

The mean life function, which provides a measure of the average time of operation to failure, is given by:

- [math]\displaystyle{ \mu = m =\int_{0,\gamma}^{\infty}t\cdot f(t)dt }[/math]

It should be noted that this is the expected or average time-to-failure. It is denoted as the MTBF (Mean-Time-Before-Failure) and is also called the MTTF (Mean-Time-To-Failure) by many authors.
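The mean life integral can be approximated numerically when no closed form is at hand. The sketch below assumes a Weibull pdf with made-up parameters and compares the result against the known Weibull mean, [math]\displaystyle{ \eta \cdot \Gamma (1+1/\beta ) }[/math]; the distribution, values and function names are assumptions for illustration.

```python
import math

BETA, ETA = 2.0, 1000.0  # assumed Weibull shape and scale (hours)


def weibull_pdf(t):
    """f(t) for the assumed Weibull distribution."""
    return (BETA / ETA) * (t / ETA) ** (BETA - 1.0) * math.exp(-((t / ETA) ** BETA))


def mean_life(pdf, upper, steps=100_000):
    """Trapezoidal approximation of the integral of t * f(t) from 0 to `upper`."""
    h = upper / steps
    total = 0.5 * (0.0 * pdf(0.0) + upper * pdf(upper))
    for i in range(1, steps):
        t = i * h
        total += t * pdf(t)
    return total * h


# The infinite upper limit is truncated where the pdf is negligible.
mean_numeric = mean_life(weibull_pdf, upper=10.0 * ETA)
mean_closed = ETA * math.gamma(1.0 + 1.0 / BETA)
print(f"mean life: numeric = {mean_numeric:.3f} h, closed form = {mean_closed:.3f} h")
```

The truncation point of ten times the scale parameter is a judgment call for this example; a heavier-tailed distribution would need a larger upper limit.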

The Median Life Function

Median life, [math]\displaystyle{ \breve{T} }[/math], is the value of the random variable that has exactly one-half of the area under the [math]\displaystyle{ pdf }[/math] to its left and one-half to its right. The median is obtained from:

- [math]\displaystyle{ \int_{-\infty}^{\breve{T}}f(t)dt=0.5 }[/math]

For sample data, e.g. 12, 20, 21, the median is the midpoint value, or 20 in this case.
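One way to solve the median equation numerically is to search for the point where the cdf crosses 0.5. The sketch below uses bisection on an assumed Weibull cdf (the distribution, parameters and the helper name `median_life` are illustrative assumptions) and also reproduces the sample-data case with the standard-library `statistics.median`.

```python
import math
from statistics import median

BETA, ETA = 2.0, 1000.0  # assumed Weibull shape and scale (hours)


def weibull_cdf(t):
    """F(t) for the assumed Weibull distribution."""
    return 1.0 - math.exp(-((t / ETA) ** BETA))


def median_life(cdf, lo, hi, tol=1e-9):
    """Bisection search for the T in [lo, hi] with cdf(T) = 0.5."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if cdf(mid) < 0.5:
            lo = mid  # median lies to the right of mid
        else:
            hi = mid  # median lies at or to the left of mid
    return 0.5 * (lo + hi)


median_numeric = median_life(weibull_cdf, 0.0, 10.0 * ETA)
median_closed = ETA * math.log(2.0) ** (1.0 / BETA)  # known Weibull median
sample_median = median([12, 20, 21])

print(f"median life: numeric = {median_numeric:.4f} h, closed form = {median_closed:.4f} h")
print(f"sample median of 12, 20, 21: {sample_median}")
```

Bisection works here because the cdf is non-decreasing, so the crossing point is bracketed by any interval whose endpoints have cdf values on either side of 0.5.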

The Mode Function

The modal life (or mode) is the value of [math]\displaystyle{ T }[/math] at which the [math]\displaystyle{ pdf }[/math] reaches its maximum, and it satisfies:

- [math]\displaystyle{ \frac{d\left[ f(t) \right]}{dt}=0 }[/math]

For a continuous distribution, the mode is that value of the variate which corresponds to the maximum probability density (the value where the [math]\displaystyle{ pdf }[/math] has its maximum value).
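The mode can also be located numerically by searching for the maximum of the pdf. The sketch below applies a ternary search to the lognormal pdf given earlier and compares the result with the known lognormal mode, [math]\displaystyle{ e^{\mu^{\prime}-\sigma^{\prime 2}} }[/math]. The parameter values, search interval and the helper name `find_mode` are assumptions, and the search relies on the pdf having a single peak in the interval.

```python
import math

MU_P, SIGMA_P = 5.0, 0.5  # assumed mean and std. dev. of ln(t)


def lognormal_pdf(t):
    """Lognormal pdf with the assumed parameters, zero for t <= 0."""
    if t <= 0:
        return 0.0
    z = (math.log(t) - MU_P) / SIGMA_P
    return math.exp(-0.5 * z * z) / (t * SIGMA_P * math.sqrt(2.0 * math.pi))


def find_mode(pdf, lo, hi, tol=1e-6):
    """Ternary search for the maximum of a single-peaked pdf on [lo, hi]."""
    while hi - lo > tol:
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if pdf(m1) < pdf(m2):
            lo = m1  # the peak cannot lie left of m1
        else:
            hi = m2  # the peak cannot lie right of m2
    return 0.5 * (lo + hi)


mode_numeric = find_mode(lognormal_pdf, 1.0, 1000.0)
mode_closed = math.exp(MU_P - SIGMA_P ** 2)  # known lognormal mode
print(f"mode: numeric = {mode_numeric:.4f}, closed form = {mode_closed:.4f}")
```

Searching for the maximum directly avoids having to distinguish maxima from minima or inflection points, all of which can satisfy a zero-derivative condition.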

Additional Resources

References

See Also


Notes
