
| {{template:ALTABOOK|A}} | | {{template:ALTABOOK|Appendix A|Brief Statistical Background}} |

| =Reference Appendix A: Brief Statistical Background=

| | In this appendix, we attempt to provide a brief elementary introduction to the most common and fundamental statistical equations and definitions used in reliability engineering and life data analysis. The equations and concepts presented in this appendix are used extensively throughout this reference. |

| <br> | | <br> |


| | {{:Brief Statistical Background}} |

| <br>

| |

| ==Basic Statistical Definitions==

| |

| <br>

| |

| | |

| ===Random Variables===

| |

| | |

| [[Image:Randomvariables.png|thumb|center|200px|]]

| |

| | |

| | |

| In general, most problems in reliability engineering deal with quantitative measures, such as the time-to-failure of a product, or whether the product fails or does not fail. In judging a product to be defective or non-defective, only two outcomes are possible. We can use a random variable to denote these possible outcomes (i.e. defective or non-defective). In this case, <math>X</math> is a random variable that can take on only these values.

| |

| | |

| In the case of times-to-failure, our random variable <math>X</math> can take on the time-to-failure of the product and can be in a range from <math>0</math> to infinity (since we do not know the exact time a priori).

| |

| | |

| In the first case, in which the random variable can take on only discrete values (say <math>\text{defective}=0</math> and <math>\text{non-defective}=1</math>), the variable is said to be a ''discrete random variable''. In the second case, our product can be found failed at any time after time 0 (e.g. at 12 hr or at 100 hr), thus <math>X</math> can take on any value in this range. In this case, our random variable <math>X</math> is said to be a ''continuous random variable''. In this reference, we will deal almost exclusively with continuous random variables.

| |

|

| |

| <br>

| |

| | |

| ==The Probability Density and Cumulative Distribution Functions==

| |

| ===Designations===

| |

| From probability and statistics, given a continuous random variable <math>X,</math> we denote:

| |

| | |

| • The probability density (distribution) function, <math>pdf</math> , as <math>f(x).</math>

| |

| | |

| • The cumulative distribution function, <math>cdf</math>, as <math>F(x).</math>

| |

| | |

| The <math>pdf</math> and <math>cdf</math> give a complete description of the probability distribution of a random variable.

| |

| <br>

| |

| ===Definitions===

| |

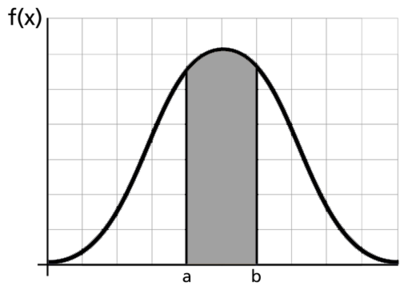

| [[Image:ALTAappendixA.1.png|thumb|center|300px|]]

| |


| |

| If <math>X</math> is a continuous random variable, then the ''probability density function'', <math>pdf</math>, of <math>X</math> is a function <math>f(x)</math> such that for two numbers, <math>a</math> and <math>b</math> with <math>a\le b</math>:

| |

| | |

| ::<math>P(a\le X\le b)=\int_{a}^{b}f(x)dx</math>

| |

| | |

| That is, the probability that <math>X</math> takes on a value in the interval <math>[a,b]</math> is the area under the density function from <math>a</math> to <math>b</math> .

| |

| The ''cumulative distribution function'', <math>cdf</math>, is a function <math>F(x)</math> of a random variable <math>X</math>, and is defined for a number <math>x</math> by:

| |

| | |

| ::<math>F(x)=P(X\le x)=\int_{0}^{x}f(s)ds</math>

| |

| | |

| That is, for a number <math>x</math> , <math>F(x)</math> is the probability that the observed value of <math>X</math> will be at most <math>x</math> .

| |

| Note that the limits of integration depend on the region over which the distribution denoted by <math>f(x)</math> is defined. For example, for all the life distributions considered in this reference, this range would be <math>[0,+\infty ).</math>
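The two definitions above can be checked numerically. The following is a minimal sketch, assuming an exponential <math>pdf</math> with a hypothetical rate of 0.01 per hour as the example density; the helper names (<code>integrate</code>, <code>interval_probability</code>, <code>cdf</code>) are illustrative and not part of any ReliaSoft software.

```python
import math

def exponential_pdf(x, lam):
    """Assumed example pdf: f(x) = lam * exp(-lam * x) for x >= 0, else 0."""
    return lam * math.exp(-lam * x) if x >= 0 else 0.0

def integrate(f, a, b, steps=10_000):
    """Composite Simpson's rule for the integral of f from a to b (steps must be even)."""
    h = (b - a) / steps
    total = f(a) + f(b)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

def interval_probability(pdf, a, b):
    """P(a <= X <= b): the area under the pdf between a and b."""
    return integrate(pdf, a, b)

def cdf(pdf, x):
    """F(x) = P(X <= x), integrating from the lower limit 0 used for life distributions."""
    return integrate(pdf, 0.0, x)

lam = 0.01  # hypothetical rate, failures per hour
pdf = lambda x: exponential_pdf(x, lam)
p_50_100 = interval_probability(pdf, 50.0, 100.0)
f_100 = cdf(pdf, 100.0)
print(p_50_100, f_100)
```

The interval probability equals the difference of two <math>cdf</math> values, <math>F(100)-F(50)</math>, which is one way to sanity-check an implementation.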

| |

| | |

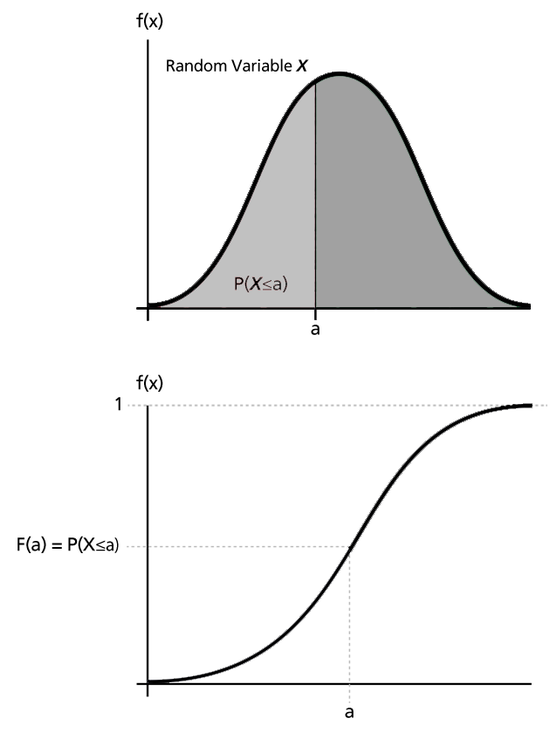

| ===Graphical representation of the <math>pdf</math> and <math>cdf</math>===

| |

| [[Image:ALTAappendixA.2.png|thumb|center|300px|]]

| |

| | |

| ===Mathematical Relationship Between the <math>pdf</math> and <math>cdf</math>===

| |

| The mathematical relationship between the <math>pdf</math> and <math>cdf</math> is given by:

| |

| | |

| ::<math>F(x)=\int_{0}^{x}f(s)ds</math>

| |

| | |

| where <math>s</math> is a dummy integration variable.

| |

| <br>

| |

| :Conversely:

| |

| | |

| ::<math>f(x)=\frac{d(F(x))}{dx}</math>

| |

| | |

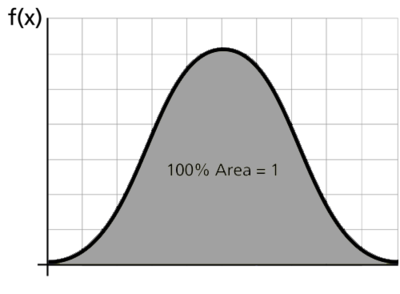

| In plain English, the <math>cdf</math> is the area under the probability density function, up to a value of <math>x</math> , if so chosen. The total area under the <math>pdf</math> is always equal to 1, or mathematically:

| |

| | |

| ::<math>\int_{0}^{\infty }f(x)dx=1</math>

| |

| | |

| [[Image:ALTAappendixA.3.png|thumb|center|300px|]]

| |

| | |

| An example of a probability density function is the well known normal distribution, for which the <math>pdf</math> is given by:

| |

| | |

| ::<math>f(t)=\frac{1}{\sigma \sqrt{2\pi }}{{e}^{-\tfrac{1}{2}{{\left( \tfrac{t-\mu }{\sigma } \right)}^{2}}}}</math>

| |

| | |

| where <math>\mu </math> is the mean and <math>\sigma </math> is the standard deviation.

| |

| | |

| The normal distribution is a two-parameter distribution, with parameters <math>\mu </math> and <math>\sigma </math>.

| |

| | |

| Another is the lognormal distribution, whose <math>pdf</math> is given by:

| |

| | |

| ::<math>f(t)=\frac{1}{t\cdot {{\sigma }^{\prime }}\sqrt{2\pi }}{{e}^{-\tfrac{1}{2}{{\left( \tfrac{{{t}^{\prime }}-{{\mu }^{\prime }}}{{{\sigma }^{\prime }}} \right)}^{2}}}}</math>

| |

| | |

| where <math>{t}'=\ln (t)</math>, <math>{\mu }'</math> is the mean of the natural logarithms of the times-to-failure, and <math>{\sigma }'</math> is the standard deviation of the natural logarithms of the times-to-failure. Again, this is a two-parameter distribution.
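As an illustration, the normal and lognormal densities can be written as two short functions. This is a sketch only: the function names are hypothetical, and the lognormal version takes <math>{t}'=\ln (t)</math>, which is the usual reading of the primed time in the lognormal <math>pdf</math>.

```python
import math

def normal_pdf(t, mu, sigma):
    """Normal pdf with mean mu and standard deviation sigma."""
    z = (t - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def lognormal_pdf(t, mu_prime, sigma_prime):
    """Lognormal pdf; mu_prime and sigma_prime describe ln(t). Zero for t <= 0."""
    if t <= 0:
        return 0.0
    z = (math.log(t) - mu_prime) / sigma_prime
    return math.exp(-0.5 * z * z) / (t * sigma_prime * math.sqrt(2.0 * math.pi))

# Hypothetical parameter values, chosen only for demonstration.
print(normal_pdf(1000.0, 1000.0, 100.0))
print(lognormal_pdf(150.0, 5.0, 0.5))
```

The two functions are linked: the lognormal density at <math>t</math> equals the normal density evaluated at <math>\ln (t)</math>, divided by <math>t</math>.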

| |

| | |

| ==The Reliability Function==

| |

| <br>

| |

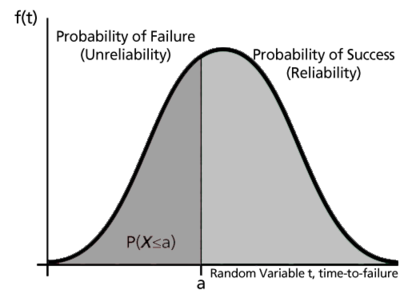

| The reliability function can be derived using the previous definition of the cumulative distribution function, Eqn. (pv21a). Note that the probability of an event occurring by time <math>t</math> (based on a continuous distribution given by <math>f(x),</math> or henceforth <math>f(t)</math>, since our random variable of interest in life data analysis is time, or <math>t</math>) is given by:

| |

| | |

| ::<math>F(t)=\int_{0}^{t}f(s)ds</math>

| |

| | |

| | |

| One could equate this event to the probability of a unit failing by time <math>t</math> .

| |

| | |


| |

| | |

| [[Image:ALTAappendixA.4.png|thumb|center|300px|]]

| |

| | |

| | |

| From this fact, the most commonly used function in reliability engineering, the reliability function, can then be obtained. The reliability function enables the determination of the probability of success of a unit, in undertaking a mission of a prescribed duration.

| |

| | |

| To show this mathematically, we first define the unreliability function, <math>Q(t)</math> , which is the probability of failure, or the probability that our time-to-failure is in the region of <math>0</math> and <math>t</math> . So from Eqn. (ee34):

| |

| | |

| ::<math>F(t)=Q(t)=\int_{0}^{t}f(s)ds</math>

| |

| | |

| | |

| Reliability and unreliability are the probabilities of success and failure, respectively. These are the only two events being considered, and they are mutually exclusive; hence, the sum of these probabilities is equal to unity. So then:

| |

| | |

| ::<math>\begin{align}
Q(t)+R(t) &= 1 \\
R(t) &= 1-Q(t) \\
R(t) &= 1-\int_{0}^{t}f(s)ds \\
R(t) &= \int_{t}^{\infty }f(s)ds
\end{align}</math>

| |

| | |

| :Conversely:

| |

| | |

| ::<math>f(t)=-\frac{d(R(t))}{dt}</math>

| |

| | |

| ==The Failure Rate Function==

| |

| <br>

| |

| The failure rate function enables the determination of the number of failures occurring per unit time. Omitting the derivation, see [18; Ch. 4], the failure rate is mathematically given as:

| |

| | |

| <br>

| |

| ::<math>\lambda (t)=\frac{f(t)}{R(t)}</math>

| |

| | |

| | |

| The failure rate is expressed in failures per unit time.
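The ratio <math>f(t)/R(t)</math> is straightforward to evaluate once a distribution is chosen. The sketch below assumes a normal life distribution with hypothetical parameters (mean 1000 hr, standard deviation 100 hr) and uses Python's standard <code>statistics.NormalDist</code> for its <math>pdf</math> and <math>cdf</math>; <code>failure_rate</code> is an illustrative name.

```python
from statistics import NormalDist

def failure_rate(dist, t):
    """lambda(t) = f(t) / R(t), with R(t) = 1 - F(t)."""
    reliability = 1.0 - dist.cdf(t)
    return dist.pdf(t) / reliability

life = NormalDist(mu=1000.0, sigma=100.0)  # hypothetical life distribution, hours
rates = [failure_rate(life, t) for t in (900.0, 1000.0, 1100.0)]
print(rates)  # failures per hour at 900, 1000 and 1100 hr
```

For this assumed distribution the failure rate grows with time, which is the wear-out behavior the normal distribution is often used to represent.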

| |

| <br>

| |

| <br>

| |

| | |

| ==The Mean Life Function==

| |

| <br>

| |

| The mean life function, which provides a measure of the average time of operation to failure, is given by:

| |

| | |

| ::<math>\overline{T}=m=\int_{0}^{\infty }t\cdot f(t)dt</math>

| |

| | |

| | |

| This is the expected or average time-to-failure and is denoted as the <math>MTTF</math> (Mean Time-to-Failure) and synonymously called <math>MTBF</math> (Mean Time Before Failure) by many authors.

| |

| <br>

| |

| <br>

| |

| | |

| ==Median Life==

| |

| <br>

| |

| Median life, <math>\breve{T}</math>, is the value of the random variable that has exactly one-half of the area under the <math>pdf</math> to its left and one-half to its right. The median is obtained from:

| |

| | |

| | |

| ::<math>\int_{0}^{{\breve{T}}}f(t)dt=0.5</math>

| |

| | |

| | |

| (For individual data, e.g. 12, 20, 21, the median is the midpoint value, or 20 in this case.)
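Solving the median equation usually means finding the time at which the <math>cdf</math> reaches 0.5. The following sketch does this by bisection, assuming an exponential <math>cdf</math>, <math>F(t)=1-{{e}^{-\lambda t}}</math>, with a hypothetical rate; the function names are illustrative.

```python
import math
import statistics

def exponential_cdf(t, lam):
    """Assumed example cdf: F(t) = 1 - exp(-lam * t)."""
    return 1.0 - math.exp(-lam * t)

def median_life(cdf, lo, hi, tol=1e-9):
    """Solve F(T) = 0.5 by bisection; assumes F(lo) < 0.5 < F(hi) and F nondecreasing."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if cdf(mid) < 0.5:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

lam = 0.01  # hypothetical rate, failures per hour
median = median_life(lambda t: exponential_cdf(t, lam), 0.0, 1000.0)
sample_median = statistics.median([12, 20, 21])  # the individual-data case
print(median, sample_median)
```

For the exponential case the result can be compared with the closed form <math>\ln (2)/\lambda </math>.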

| |

| <br>

| |

| <br>

| |

| | |

| ==Mode==

| |

| <br>

| |

| The modal (or mode) life, <math>\widetilde{T}</math>, is the value of <math>t</math> that maximizes the <math>pdf</math>; for a <math>pdf</math> with an interior peak, it satisfies:

| |

| | |

| ::<math>\frac{d\left[ f(t) \right]}{dt}=0</math>

| |

| | |

| | |

| For a continuous distribution, the mode is that value of the variate which corresponds to the maximum probability density (the value at which the <math>pdf</math> has its maximum value).
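When the derivative condition is awkward to solve by hand, the mode can be located numerically. This is a minimal sketch, assuming a lognormal <math>pdf</math> with hypothetical parameters and a search interval on which the density has a single peak; the ternary search is a generic technique chosen for illustration, not the method used by any particular software.

```python
import math

def lognormal_pdf(t, mu_prime, sigma_prime):
    """Lognormal pdf for t > 0; mu_prime and sigma_prime describe ln(t)."""
    z = (math.log(t) - mu_prime) / sigma_prime
    return math.exp(-0.5 * z * z) / (t * sigma_prime * math.sqrt(2.0 * math.pi))

def mode_by_ternary_search(pdf, lo, hi, tol=1e-7):
    """Locate the maximum of a pdf that has a single peak on [lo, hi]."""
    while hi - lo > tol:
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if pdf(m1) < pdf(m2):
            lo = m1  # the peak lies to the right of m1
        else:
            hi = m2  # the peak lies to the left of m2
    return 0.5 * (lo + hi)

mu_prime, sigma_prime = 5.0, 0.5  # hypothetical parameters
mode = mode_by_ternary_search(
    lambda t: lognormal_pdf(t, mu_prime, sigma_prime), 1.0, 1000.0
)
print(mode)
```

For the lognormal distribution the result can be checked against the known closed form <math>{{e}^{{\mu }'-{{\sigma }'^{2}}}}</math>.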

| |

| <br>

| |

| <br>

| |

| | |

| =Distributions=

| |

| <br>

| |

| A statistical distribution is fully described by its <math>pdf</math> (probability density function). In the previous sections, we used the definition of the <math>pdf</math> to show how all other functions most commonly used in reliability engineering and life data analysis can be derived. The reliability function, failure rate function, mean time function, and median life function can be determined directly from the <math>pdf</math> definition, or <math>f(t)</math> . Different distributions exist, such as the normal, exponential, etc., and each has a predefined <math>f(t)</math> which can be found in most references. These distributions were formulated by statisticians, mathematicians and/or engineers to mathematically model or represent certain behavior. For example, the Weibull distribution was formulated by Waloddi Weibull and thus it bears his name. Some distributions tend to better represent life data and are most commonly called lifetime distributions.

| |

| | |

| The exponential distribution is a very commonly used distribution in reliability engineering. Due to its simplicity, it has been widely employed even in cases to which it does not apply. The <math>pdf</math> of the exponential distribution is mathematically defined as:

| |

| | |

| ::<math>f(t)=\lambda {{e}^{-\lambda t}}</math>

| |

| | |

| In this definition, note that <math>t</math> is our random variable, which represents time, and the Greek letter <math>\lambda </math> (lambda) represents what is commonly referred to as the parameter of the distribution. For any distribution, the parameter or parameters of the distribution are estimated (obtained) from the data. For example, in the case of the most well known distribution, namely the normal distribution:

| |

| | |

| ::<math>f(t)=\frac{1}{\sigma \sqrt{2\pi }}{{e}^{-\tfrac{1}{2}{{\left( \tfrac{t-\mu }{\sigma } \right)}^{2}}}}</math>

| |

| | |

| where the mean, <math>\mu ,</math> and the standard deviation, <math>\sigma ,</math> are its parameters. Both of these parameters are estimated from the data, i.e. the mean and standard deviation of the data. Once these parameters are estimated, our function <math>f(t)</math> is fully defined and we can obtain any value for <math>f(t)</math> given any value of <math>t</math> .

| |

| Given the mathematical representation of a distribution, we can also derive all of the functions needed for life data analysis, which again will only depend on the value of <math>t</math> after the value of the distribution parameter or parameters are estimated from data.

| |

| <br>

| |

| For example, we know that the exponential distribution <math>pdf</math> is given by:

| |

| | |

| ::<math>f(t)=\lambda {{e}^{-\lambda t}}</math>

| |

| | |

| Thus the reliability function can be derived:

| |

| | |

| ::<math>\begin{align}
R(t) &= 1-\int_{0}^{t}\lambda {{e}^{-\lambda T}}dT \\
 &= 1-\left[ 1-{{e}^{-\lambda t}} \right] \\
 &= {{e}^{-\lambda t}}
\end{align}</math>

| |

| <br>

| |

| The failure rate function is given by:

| |

| | |

| ::<math>\begin{align}
\lambda (t) &= \frac{f(t)}{R(t)} \\
 &= \frac{\lambda {{e}^{-\lambda t}}}{{{e}^{-\lambda t}}} \\
 &= \lambda
\end{align}</math>

| |

| | |

| The mean time to/before failure (MTTF / MTBF) is given by:

| |

| | |

| ::<math>\begin{align}
\overline{T} &= \int_{0}^{\infty }t\cdot f(t)dt \\
 &= \int_{0}^{\infty }t\cdot \lambda \cdot {{e}^{-\lambda t}}dt \\
 &= \frac{1}{\lambda }
\end{align}</math>

| |

| | |

| Exactly the same methodology can be applied to any distribution given its <math>pdf,</math> with various degrees of difficulty depending on the complexity of <math>f(t)</math> .
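The exponential results derived above can be confirmed numerically. The sketch below, with a hypothetical <math>\lambda </math> and illustrative function names, evaluates the reliability and failure rate directly and approximates the mean life integral with Simpson's rule, truncating the upper limit at <math>50/\lambda </math> where the remaining tail is negligible.

```python
import math

def exp_pdf(t, lam):
    """f(t) = lam * exp(-lam * t)."""
    return lam * math.exp(-lam * t)

def exp_reliability(t, lam):
    """R(t) = exp(-lam * t)."""
    return math.exp(-lam * t)

def exp_failure_rate(t, lam):
    """lambda(t) = f(t) / R(t), which reduces to lam for every t."""
    return exp_pdf(t, lam) / exp_reliability(t, lam)

def exp_mttf_numeric(lam, steps=100_000):
    """Simpson's rule for the integral of t * f(t) from 0 to 50 / lam (steps must be even)."""
    upper = 50.0 / lam
    h = upper / steps
    g = lambda t: t * exp_pdf(t, lam)
    total = g(0.0) + g(upper)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * g(i * h)
    return total * h / 3.0

lam = 0.002  # hypothetical rate, failures per hour
mttf = exp_mttf_numeric(lam)
print(exp_reliability(500.0, lam), exp_failure_rate(123.0, lam), mttf)
```

The same numerical integration applies to distributions whose mean has no convenient closed form; only the <math>pdf</math> function needs to change.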

| |

| <br>

| |

| ==Most Commonly Used Distributions==

| |

| <br>

| |

| There are many different lifetime distributions that can be used. ReliaSoft [31] presents a thorough overview of lifetime distributions. Leemis [22] and others also present good overviews of many of these distributions. The three distributions used in ALTA, the 1-parameter exponential, 2-parameter Weibull, and the lognormal, are presented in greater detail in Chapter 5.

| |

| <br>

| |

| | |

| =Confidence Intervals (or Bounds)=

| |

| <br>

| |

| One of the most confusing concepts to an engineer new to the field is the concept of putting a probability on a probability. In life data analysis, this concept is referred to as confidence intervals or confidence bounds. In this section, we will try to briefly present the concept, in less than statistical terms, but based on solid common sense.

| |

| <br>

| |

| <br>

| |

| ==The Black and White Marbles==

| |


| |

| [[Image:poolwmarbles.png|thumb|center|300px|]]

| |

| | |

| To illustrate, imagine a situation in which there are millions of black and white marbles in a rather large swimming pool, and our job is to estimate the percentage of black marbles. One way to do this (other than counting all the marbles!) is to estimate the percentage of black marbles by taking a sample and then counting the number of black marbles in the sample.

| |

| <br>

| |

| <br>

| |

| ===Taking a Small Sample of Marbles===

| |

| | |

| [[Image:poolwmarbles2.png|thumb|center|300px|]]

| |

| | |

| First, let's pick out a small sample of marbles and count the black ones. Say you picked out 10 marbles and counted 4 black marbles. Based on this, your estimate would be that 40% of the marbles are black.

| |

| <br>

| |

| If you put the 10 marbles back into the pool and repeated this example, you might get 5 black marbles, changing your estimate to 50% black marbles.

| |

| | |

| [[Image:poolwmarbles3.png|thumb|center|300px|]]

| |

| | |

| Which of the two estimates is correct? Both estimates are correct! As you repeat this experiment over and over again, you might find out that this estimate is usually between <math>{{X}_{1}}\%</math> and <math>{{X}_{2}}\%</math>, or maybe 90% of the time this estimate is between <math>{{X}_{1}}\%</math> and <math>{{X}_{2}}\%.</math>

| |

| <br>

| |

| <br>

| |

| ===Taking a Larger Sample of Marbles===

| |

| <br>

| |

| If we now repeat the experiment and pick out 1,000 marbles, we might get results such as 545, 570, 530, etc. for the number of black marbles in each trial. Note that the range of the estimates in this case will be much narrower than before. For example, let's say that 90% of the time, the percentage of black marbles will be from <math>{{Y}_{1}}\%</math> to <math>{{Y}_{2}}\%</math>, where <math>{{X}_{1}}\%<{{Y}_{1}}\%</math> and <math>{{X}_{2}}\%>{{Y}_{2}}\%</math>, thus giving us a narrower interval. For confidence intervals, the larger the sample size, the narrower the confidence intervals.
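The marble experiment is easy to simulate. The following is a sketch under stated assumptions: the true fraction of black marbles (55%) is hypothetical, each draw is treated as independent because the pool is very large, and the helper names are illustrative. It repeats the small-sample and large-sample experiments many times and reports the central 90% range of the estimates.

```python
import random

def sample_percent_black(true_fraction, sample_size, rng):
    """Draw sample_size marbles and return the percentage that are black."""
    black = sum(1 for _ in range(sample_size) if rng.random() < true_fraction)
    return 100.0 * black / sample_size

def central_range(values, coverage=0.90):
    """Empirical interval containing roughly the central `coverage` share of values."""
    ordered = sorted(values)
    cut = round((1.0 - coverage) / 2.0 * len(ordered))
    return ordered[cut], ordered[len(ordered) - 1 - cut]

rng = random.Random(2024)   # fixed seed so the run is repeatable
true_fraction = 0.55        # hypothetical share of black marbles in the pool
small = [sample_percent_black(true_fraction, 10, rng) for _ in range(2000)]
large = [sample_percent_black(true_fraction, 1000, rng) for _ in range(2000)]
x1, x2 = central_range(small)  # plays the role of X1% and X2%
y1, y2 = central_range(large)  # plays the role of Y1% and Y2%
print((x1, x2), (y1, y2))
```

The range from the 1,000-marble samples sits inside the range from the 10-marble samples, which is the narrowing described above.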

| |

| <br>

| |

| <br>

| |

| | |

| ==Back to Reliability==

| |

| <br>

| |

| Returning to the subject at hand, our task is to determine the probability of failure or reliability of all of our units. However, until all units fail, we will never know the exact value. Our task is to estimate the reliability based on a sample, much like estimating the number of black marbles in the pool. If we perform 10 different reliability tests for our units, and estimate the parameters using ALTA, we will obtain slightly different parameters for the distribution each time, and thus slightly different reliability results. However, when employing confidence bounds, we obtain a range within which these values are likely to fall <math>X</math> percent of the time. Remember that each estimated parameter is an estimate of the true parameter, which is unknown to us.

| |

| <br>

| |

| <br>

| |

| ==One-Sided and Two-Sided Confidence Bounds==

| |

| <br>

| |

| Confidence bounds (or intervals) are generally described as one-sided or two-sided.

| |

| <br>

| |

| <br>

| |

| ===Two-Sided Bounds===

| |

| | |

| [[Image:bkljh.png|thumb|center|300px|]]

| |

| | |

| When we use two-sided confidence bounds (or intervals), we are looking at where most of the population is likely to lie. For example, when using 90% two-sided confidence bounds, we are saying that 90% lies between <math>X</math> and <math>Y</math> , with 5% less than <math>X</math> and 5% greater than <math>Y</math> .

| |

| <br>

| |

| | |

| ===One-Sided Bounds===

| |

| <br>

| |

| When using one-sided intervals, we are looking at the percentage of units that are greater or less (upper and lower) than a certain point <math>X</math> .

| |

| | |

| [[Image:ALTAone-sided-bounds.png|thumb|center|300px|]]

| |


| |

|

| |

| For example, 95% one-sided confidence bounds would indicate that 95% of the population is greater than <math>X</math> (if 95% is a lower confidence bound), or that 95% is less than <math>X</math> (if 95% is an upper confidence bound).

| |

| | |

| In ALTA, we use upper to mean the higher limit and lower to mean the lower limit, regardless of their position, but based on the value of the results. So for example, when returning the confidence bounds on the reliability, we would term the lower value of reliability the lower limit and the higher value of reliability the upper limit. When returning the confidence bounds on probability of failure, we will again term the lower numeric value for the probability of failure the lower limit and the higher value the upper limit.

| |

| <br>

| |

| <br>

| |

| | |

| =Confidence Limits Determination=

| |

| <br>

| |

| This section presents an overview of the theory on obtaining approximate confidence bounds on suspended (multiply censored) data. The methodology used is the so-called Fisher Matrix Bounds, described in Nelson [27] and Lloyd and Lipow [24].

| |

| <br>

| |

| <br>

| |

| ==Suggested References==

| |

| <br>

| |

| This section presents a brief introduction into how the confidence intervals are calculated by ALTA. By no means do we intend to cover the full theory behind this methodology. More complete details on confidence intervals can be found in the following books:

| |

| <br>

| |

| • Nelson, Wayne, Applied Life Data Analysis, 1982, John Wiley & Sons, New York, New York.

| |

| | |

| • Nelson, Wayne, Accelerated Testing: Statistical Models, Test Plans, and Data Analyses, 1990, John Wiley & Sons, New York, New York.

| |

| | |

| • David K. Lloyd and Myron Lipow, Reliability: Management, Methods, and Mathematics, 1962, Prentice Hall, Englewood Cliffs, New Jersey.

| |

| | |

| • H. Cramer, Mathematical Methods of Statistics, 1946, Princeton University Press, Princeton, New Jersey.

| |

| | |

| ==Approximate Estimates of the Mean and Variance of a Function==

| |

| ===Single Parameter Case===

| |

| For simplicity, consider a one parameter distribution represented by a general function <math>G,</math> which is a function of one parameter estimator, say <math>G(\widehat{\theta }).</math> Then, in general, the expected value of <math>G\left( \widehat{\theta } \right)</math> can be found by:

| |

| <br>

| |

| ::<math>E\left( G\left( \widehat{\theta } \right) \right)=G(\theta )+O\left( \frac{1}{n} \right)</math>

| |

| | |

| where <math>G(\theta )</math> is some function of <math>\theta </math>, such as the reliability function, and <math>\theta </math> is the population moment, or parameter such that <math>E\left( \widehat{\theta } \right)=\theta </math> as <math>n\to \infty </math>. The term <math>O\left( \tfrac{1}{n} \right)</math> is a function of <math>n</math>, the sample size, and tends to zero as fast as <math>\tfrac{1}{n}</math> as <math>n\to \infty .</math> For example, in the case of <math>\widehat{\theta }=\overline{x}</math> and <math>G(x)={{x}^{2}}</math>, then <math>E(G(\overline{x}))={{\mu }^{2}}+O\left( \tfrac{1}{n} \right)</math> where <math>O\left( \tfrac{1}{n} \right)=\tfrac{{{\sigma }^{2}}}{n},</math> thus as <math>n\to \infty </math>, <math>E(G(\overline{x}))\to {{\mu }^{2}}</math> (<math>\mu </math> and <math>\sigma </math> are the mean and standard deviation, respectively). Using the same one-parameter distribution, the variance of the function <math>G\left( \widehat{\theta } \right)</math> can then be estimated by:

| |

| | |

| ::<math>Var\left( G\left( \widehat{\theta } \right) \right)=\left( \frac{\partial G}{\partial \widehat{\theta }} \right)_{\widehat{\theta }=\theta }^{2}Var\left( \widehat{\theta } \right)+O\left( \frac{1}{{{n}^{\tfrac{3}{2}}}} \right)</math>
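The single-parameter approximations can be checked by simulation for the example <math>G(\overline{x})={{\overline{x}}^{2}}</math>. The sketch below assumes normally distributed observations with hypothetical values of <math>\mu </math>, <math>\sigma </math> and <math>n</math>; it compares the simulated mean and variance of <math>{{\overline{x}}^{2}}</math> with <math>{{\mu }^{2}}+{{\sigma }^{2}}/n</math> and with the first-order variance <math>{{(2\mu )}^{2}}{{\sigma }^{2}}/n</math>.

```python
import random
import statistics

def simulate_g_of_mean(mu, sigma, n, replications, rng):
    """Return G(xbar) = xbar**2 for many independent samples of size n."""
    results = []
    for _ in range(replications):
        xbar = sum(rng.gauss(mu, sigma) for _ in range(n)) / n
        results.append(xbar ** 2)
    return results

mu, sigma, n = 10.0, 2.0, 50   # hypothetical population and sample size
rng = random.Random(7)         # fixed seed so the run is repeatable
values = simulate_g_of_mean(mu, sigma, n, 5000, rng)

simulated_mean = statistics.fmean(values)
simulated_var = statistics.variance(values)
expected_mean = mu ** 2 + sigma ** 2 / n        # G(theta) plus the O(1/n) term
approx_var = (2.0 * mu) ** 2 * sigma ** 2 / n   # (dG/dtheta)^2 * Var(xbar)
print(simulated_mean, expected_mean, simulated_var, approx_var)
```

The simulated values agree with the approximations to within sampling noise; the agreement tightens as the number of replications grows.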

| |

| <br>

| |

| | |

| ===Two Parameter Case===

| |

| Repeating the previous method for the case of a two parameter distribution, it is generally true that for a function <math>G</math> , which is a function of two parameter estimators, say <math>G\left( {{\widehat{\theta }}_{1}},{{\widehat{\theta }}_{2}} \right)</math> , that:

| |

| <br>

| |

| ::<math>E\left( G\left( {{\widehat{\theta }}_{1}},{{\widehat{\theta }}_{2}} \right) \right)=G\left( {{\theta }_{1}},{{\theta }_{2}} \right)+O\left( \frac{1}{n} \right)</math>

| |

| | |

| :and:

| |

| | |

| ::<math>\begin{align}
Var\left( G\left( {{\widehat{\theta }}_{1}},{{\widehat{\theta }}_{2}} \right) \right)= & \left( \frac{\partial G}{\partial {{\widehat{\theta }}_{1}}} \right)_{{{\widehat{\theta }}_{1}}={{\theta }_{1}}}^{2}Var\left( {{\widehat{\theta }}_{1}} \right)+\left( \frac{\partial G}{\partial {{\widehat{\theta }}_{2}}} \right)_{{{\widehat{\theta }}_{2}}={{\theta }_{2}}}^{2}Var\left( {{\widehat{\theta }}_{2}} \right) \\
 & +2{{\left( \frac{\partial G}{\partial {{\widehat{\theta }}_{1}}} \right)}_{{{\widehat{\theta }}_{1}}={{\theta }_{1}}}}{{\left( \frac{\partial G}{\partial {{\widehat{\theta }}_{2}}} \right)}_{{{\widehat{\theta }}_{2}}={{\theta }_{2}}}}Cov\left( {{\widehat{\theta }}_{1}},{{\widehat{\theta }}_{2}} \right)+O\left( \frac{1}{{{n}^{\tfrac{3}{2}}}} \right)
\end{align}</math>

| |

| | |

| Note that the derivatives of Eqn. (var) are evaluated at <math>{{\widehat{\theta }}_{1}}={{\theta }_{1}}</math> and <math>{{\widehat{\theta }}_{2}}={{\theta }_{2}},</math> where <math>E\left( {{\widehat{\theta }}_{1}} \right)\simeq {{\theta }_{1}}</math> and <math>E\left( {{\widehat{\theta }}_{2}} \right)\simeq {{\theta }_{2}}.</math>

| |

| <br>

| |

| <br>

| |

| | |

| ===Variance and Covariance Determination of the Parameters===

| |

| <br>

| |

| The determination of the variance and covariance of the parameters is accomplished via the use of the Fisher information matrix. For a two parameter distribution, and using maximum likelihood estimates, the log likelihood function for censored data (without the constant coefficient) is given by:

| |

| | |

| ::<math>\begin{align}
\ln [L]=\Lambda = & \underset{i=1}{\overset{R}{\mathop \sum }}\,\ln [f({{T}_{i}};{{\theta }_{1}},{{\theta }_{2}})] \\
 & +\underset{j=1}{\overset{M}{\mathop \sum }}\,\ln [1-F({{S}_{j}};{{\theta }_{1}},{{\theta }_{2}})] \\
 & +\underset{k=1}{\overset{P}{\mathop \sum }}\,\ln \{F({{I}_{k}};{{\theta }_{1}},{{\theta }_{2}})-F({{I}_{k-1}};{{\theta }_{1}},{{\theta }_{2}})\}
\end{align}</math>

| |

| | |

| <br>

| |

| Then the Fisher information matrix is given by:

| |

| | |

| <br>

| |

| ::<math>{{F}_{0}}=\left[ \begin{matrix}
{{E}_{0}}{{\left[ -\tfrac{{{\partial }^{2}}\Lambda }{\partial \theta _{1}^{2}} \right]}_{0}} & {{E}_{0}}{{\left[ -\tfrac{{{\partial }^{2}}\Lambda }{\partial {{\theta }_{1}}\partial {{\theta }_{2}}} \right]}_{0}} \\
{{E}_{0}}{{\left[ -\tfrac{{{\partial }^{2}}\Lambda }{\partial {{\theta }_{2}}\partial {{\theta }_{1}}} \right]}_{0}} & {{E}_{0}}{{\left[ -\tfrac{{{\partial }^{2}}\Lambda }{\partial \theta _{2}^{2}} \right]}_{0}} \\
\end{matrix} \right]</math>

| |

| | |

| :where

| |

| | |

| ::<math>{{\theta }_{1}}={{\theta }_{{{1}_{0}}}},</math> and <math>{{\theta }_{2}}={{\theta }_{{{2}_{0}}}}.</math>

| |

| | |

| So for a sample of <math>N</math> units where <math>R</math> units have failed, <math>M</math> have been suspended, and <math>P</math> have failed within a time interval, and <math>N=R+M+P,</math> one could obtain the sample local information matrix by:

| |

| | |

| ::<math>F=\left[ \begin{matrix}
-\tfrac{{{\partial }^{2}}\Lambda }{\partial \theta _{1}^{2}} & -\tfrac{{{\partial }^{2}}\Lambda }{\partial {{\theta }_{1}}\partial {{\theta }_{2}}} \\
-\tfrac{{{\partial }^{2}}\Lambda }{\partial {{\theta }_{2}}\partial {{\theta }_{1}}} & -\tfrac{{{\partial }^{2}}\Lambda }{\partial \theta _{2}^{2}} \\
\end{matrix} \right]</math>

| |

| | |

| By substituting in the values of the estimated parameters, in this case <math>{{\widehat{\theta }}_{1}}</math> and <math>{{\widehat{\theta }}_{2}},</math> and inverting the matrix, one can then obtain the local estimate of the covariance matrix or,

| |

| | |

| ::<math>{{F}^{-1}}={{\left[ \begin{matrix}
-\tfrac{{{\partial }^{2}}\Lambda }{\partial \theta _{1}^{2}} & -\tfrac{{{\partial }^{2}}\Lambda }{\partial {{\theta }_{1}}\partial {{\theta }_{2}}} \\
-\tfrac{{{\partial }^{2}}\Lambda }{\partial {{\theta }_{2}}\partial {{\theta }_{1}}} & -\tfrac{{{\partial }^{2}}\Lambda }{\partial \theta _{2}^{2}} \\
\end{matrix} \right]}^{-1}}=\left[ \begin{matrix}
Var\left( {{\widehat{\theta }}_{1}} \right) & Cov\left( {{\widehat{\theta }}_{1}},{{\widehat{\theta }}_{2}} \right) \\
Cov\left( {{\widehat{\theta }}_{1}},{{\widehat{\theta }}_{2}} \right) & Var\left( {{\widehat{\theta }}_{2}} \right) \\
\end{matrix} \right]</math>

| |

| | |

| Then the variance of a function ( <math>Var(G)</math> ) can be estimated using Eqn. (var). Values for the variance and covariance of the parameters are obtained from Eqn. (Fisher2).

| |

| Once they are obtained, the approximate confidence bounds on the function are given as:

| |

| | |

| ::<math>C{{B}_{R}}=E(G)\pm {{z}_{\alpha }}\sqrt{Var(G)}</math>
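The chain from the local information matrix to bounds on a function can be sketched in a few lines. Everything numeric here is hypothetical: the matrix entries, the partial derivatives of <math>G</math> and the estimate of <math>G</math> are made-up values for illustration, and the helper names are not taken from any software.

```python
import math
from statistics import NormalDist

def invert_2x2(m):
    """Invert a 2x2 matrix given as [[a, b], [c, d]]."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]

def variance_of_function(dg_d1, dg_d2, cov):
    """First-order variance of G(theta1_hat, theta2_hat) from the covariance matrix."""
    return (dg_d1 ** 2 * cov[0][0]
            + dg_d2 ** 2 * cov[1][1]
            + 2.0 * dg_d1 * dg_d2 * cov[0][1])

def approximate_bounds(g_hat, var_g, z_alpha):
    """E(G) -/+ z_alpha * sqrt(Var(G))."""
    half_width = z_alpha * math.sqrt(var_g)
    return g_hat - half_width, g_hat + half_width

# Hypothetical local information matrix (negative second partials of the log-likelihood).
local_information = [[400.0, -30.0], [-30.0, 25.0]]
cov = invert_2x2(local_information)   # [[Var1, Cov], [Cov, Var2]]

dg_d1, dg_d2 = 1.5, -0.4              # hypothetical partial derivatives of G
g_hat = 2.0                           # hypothetical estimate of G
var_g = variance_of_function(dg_d1, dg_d2, cov)
z_alpha = NormalDist().inv_cdf(0.95)  # standard normal quantile for 90% two-sided bounds
lower, upper = approximate_bounds(g_hat, var_g, z_alpha)
print(cov, var_g, (lower, upper))
```

In practice the matrix entries come from evaluating the second partial derivatives of <math>\Lambda </math> at the estimated parameters, and the derivatives of <math>G</math> are evaluated at the same point.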

| |

| <br>

| |

| | |

| ==Approximate Confidence Intervals on the Parameters==

| |

| <br>

| |

| In general, MLE estimates of the parameters are asymptotically normal, thus if <math>\widehat{\theta }</math> is the MLE estimator for <math>\theta </math> , in the case of a single parameter distribution, estimated from a sample of <math>n</math> units, and if:

| |

| | |

| | |

| ::<math>z\equiv \frac{\widehat{\theta }-\theta }{\sqrt{Var\left( \widehat{\theta } \right)}}</math>

| |

| | |

| then:

| |

| | |

| ::<math>P\left( z\le x \right)\to \Phi \left( x \right)=\frac{1}{\sqrt{2\pi }}\int_{-\infty }^{x}{{e}^{-\tfrac{{{t}^{2}}}{2}}}dt</math>

| |

| | |

| for large <math>n</math> . If one now wishes to place confidence bounds on <math>\theta ,</math> at some confidence level <math>\delta </math> , bounded by the two end points <math>{{C}_{1}}</math> and <math>{{C}_{2}}</math> , and where:

| |

| | |

| ::<math>P\left( {{C}_{1}}<\theta <{{C}_{2}} \right)=\delta </math>

| |

| | |

| then from Eqn. (e729):

| |

| | |

| ::<math>P\left( -{{K}_{\tfrac{1-\delta }{2}}}<\frac{\widehat{\theta }-\theta }{\sqrt{Var\left( \widehat{\theta } \right)}}<{{K}_{\tfrac{1-\delta }{2}}} \right)\simeq \delta </math>

| |

| | |

| where <math>{{K}_{\alpha }}</math> is defined by:

| |

| | |

| ::<math>\alpha =\frac{1}{\sqrt{2\pi }}\int_{{{K}_{\alpha }}}^{\infty }{{e}^{-\tfrac{{{t}^{2}}}{2}}}dt=1-\Phi \left( {{K}_{\alpha }} \right)</math>

| |

| | |

| | |

| Now by simplifying Eqn. (e731), one can obtain the approximate confidence bounds on the parameter <math>\theta ,</math> at a confidence level <math>\delta </math> or:

| |

| | |

| | |

| ::<math>\left( \widehat{\theta }-{{K}_{\tfrac{1-\delta }{2}}}\cdot \sqrt{Var\left( \widehat{\theta } \right)}<\theta <\widehat{\theta }+{{K}_{\tfrac{1-\delta }{2}}}\cdot \sqrt{Var\left( \widehat{\theta } \right)} \right)</math>

| |

| | |

| | |

| If <math>\widehat{\theta }</math> must be positive, then <math>\ln \widehat{\theta }</math> is treated as normally distributed. The two-sided approximate confidence bounds on the parameter <math>\theta </math> , at confidence level <math>\delta </math> , then become:

| |

| | |

| | |

| ::<math>\begin{align}
{{\theta }_{U}} &= \widehat{\theta }\cdot {{e}^{\tfrac{{{K}_{\tfrac{1-\delta }{2}}}\sqrt{Var\left( \widehat{\theta } \right)}}{\widehat{\theta }}}}\text{ (Two-sided Upper)} \\
{{\theta }_{L}} &= \frac{\widehat{\theta }}{{{e}^{\tfrac{{{K}_{\tfrac{1-\delta }{2}}}\sqrt{Var\left( \widehat{\theta } \right)}}{\widehat{\theta }}}}}\text{ (Two-sided Lower)}
\end{align}</math>

| |

| | |

| | |

| The one-sided approximate confidence bounds on the parameter <math>\theta </math> , at confidence level <math>\delta </math> can be found from:

| |

| | |

| | |

| ::<math>\begin{align}
{{\theta }_{U}} &= \widehat{\theta }\cdot {{e}^{\tfrac{{{K}_{1-\delta }}\sqrt{Var\left( \widehat{\theta } \right)}}{\widehat{\theta }}}}\text{ (One-sided Upper)} \\
{{\theta }_{L}} &= \frac{\widehat{\theta }}{{{e}^{\tfrac{{{K}_{1-\delta }}\sqrt{Var\left( \widehat{\theta } \right)}}{\widehat{\theta }}}}}\text{ (One-sided Lower)}
\end{align}</math>

| |

| | |

| | |

| The same procedure can be repeated for the case of a two or more parameter distribution. Lloyd and Lipow [24] elaborate on this procedure.
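For a positive parameter, the bounds above reduce to multiplying and dividing the estimate by a single factor. The sketch below applies them to an exponential failure rate. The data are hypothetical, and the estimate and variance used (<math>\widehat{\lambda }=r/\sum T</math> and <math>Var(\widehat{\lambda })\approx {{\widehat{\lambda }}^{2}}/r</math>, where <math>r</math> is the number of failures and the sum runs over all failure and suspension times) are assumptions that follow from the exponential log-likelihood with right-suspended data rather than formulas stated in this appendix.

```python
import math
from statistics import NormalDist

def k_alpha(alpha):
    """K_alpha defined by alpha = 1 - Phi(K_alpha)."""
    return NormalDist().inv_cdf(1.0 - alpha)

def two_sided_bounds(theta_hat, var_theta, delta):
    """Two-sided bounds on a positive parameter at confidence level delta."""
    k = k_alpha((1.0 - delta) / 2.0)
    factor = math.exp(k * math.sqrt(var_theta) / theta_hat)
    return theta_hat / factor, theta_hat * factor

def one_sided_bounds(theta_hat, var_theta, delta):
    """One-sided lower and upper bounds on a positive parameter at level delta."""
    k = k_alpha(1.0 - delta)
    factor = math.exp(k * math.sqrt(var_theta) / theta_hat)
    return theta_hat / factor, theta_hat * factor

# Hypothetical test data, in hours.
failures = [105.0, 230.0, 310.0, 480.0, 640.0]
suspensions = [700.0, 700.0, 700.0]

r = len(failures)
lambda_hat = r / (sum(failures) + sum(suspensions))  # assumed exponential MLE
var_lambda = lambda_hat ** 2 / r                     # assumed local variance estimate

lower, upper = two_sided_bounds(lambda_hat, var_lambda, 0.90)
lower_1s, upper_1s = one_sided_bounds(lambda_hat, var_lambda, 0.90)
print(lambda_hat, (lower, upper), (lower_1s, upper_1s))
```

Because the bounds are built on <math>\ln \widehat{\theta }</math>, they are symmetric about the estimate on a logarithmic scale and can never become negative.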

| |

| <br>

| |

| <br>

| |

| | |

| ==Percentile Confidence Bounds (Type 1 in ALTA)==

| |

| <br>

| |

| Percentile confidence bounds are confidence bounds around time. For example, when using the 1-parameter exponential distribution, the corresponding time for a given exponential percentile (i.e., y-ordinate or unreliability, <math>Q=1-R)</math> is determined by solving the unreliability function for the time, <math>T</math> , or:

| |

| | |

| <br>

| |

| ::<math>\begin{align}
& T(Q)= & -\frac{1}{\widehat{\lambda }}\ln (1-Q) \\
& = & -\frac{1}{\widehat{\lambda }}\ln (R)
\end{align}</math>

| |

| | |

| <br>

| |

| Percentile bounds (Type 1) return the confidence bounds by determining the confidence intervals around <math>\widehat{\lambda }</math> and substituting into Eqn. (cb). The bounds on <math>\widehat{\lambda }</math> were determined using Eqns. (cblmu) and (cblml), with its variance obtained from Eqn. (Fisher2).
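| The substitution step can be sketched as follows, assuming the bounds on <math>\widehat{\lambda }</math> have already been computed. The function name and the numerical values are hypothetical and only illustrate the mechanics.

```python
from math import log


def exponential_time_bounds(q, lam_hat, lam_lower, lam_upper):
    """Time at unreliability q for the 1-parameter exponential, with bounds."""
    if not 0 < q < 1:
        raise ValueError("q must lie strictly between 0 and 1")

    def time_at(lam):
        return -log(1 - q) / lam

    # T(Q) decreases as lambda increases, so the upper bound on lambda
    # gives the lower bound on time, and vice versa.
    return time_at(lam_upper), time_at(lam_hat), time_at(lam_lower)


# Hypothetical estimate and bounds on lambda (failures per hour), Q = 10%.
t_lower, t_hat, t_upper = exponential_time_bounds(0.10, 0.002, 0.0015, 0.0027)
```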

| |

| <br>

| |

| <br>

| |

| | |

| ==Reliability Confidence Bounds (Type 2 in ALTA)==

| |

| <br>

| |

| Type 2 bounds in ALTA are confidence bounds around reliability. For example, when using the 1-parameter exponential distribution, the reliability function is:

| |

| | |

| | |

| ::<math>R(T)={{e}^{-\widehat{\lambda }\cdot T}}</math>

| |

| | |

| | |

| Reliability bounds (Type 2) return the confidence bounds by determining the confidence intervals around <math>\widehat{\lambda }</math> and substituting into Eqn. (cbr). The bounds on <math>\widehat{\lambda }</math> were determined using Eqns. (cblmu) and (cblml), with its variance obtained from Eqn. (Fisher2).
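| A minimal sketch of this substitution, assuming the bounds on <math>\widehat{\lambda }</math> are already available (the function name and the values are hypothetical):

```python
from math import exp


def exponential_reliability_bounds(t, lam_hat, lam_lower, lam_upper):
    """Reliability at time t for the 1-parameter exponential, with bounds."""
    if t < 0:
        raise ValueError("t must be non-negative")
    # R(T) decreases as lambda increases, so the upper bound on lambda
    # gives the lower bound on reliability, and vice versa.
    return exp(-lam_upper * t), exp(-lam_hat * t), exp(-lam_lower * t)


# Hypothetical estimate and bounds on lambda (failures per hour), T = 100 hours.
r_lower, r_hat, r_upper = exponential_reliability_bounds(100.0, 0.002, 0.0015, 0.0027)
```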

| |

New format available! This reference is now available in a new format that offers faster page load, improved display for calculations and images, more targeted search and the latest content available as a PDF. As of September 2023, this Reliawiki page will not continue to be updated. Please update all links and bookmarks to the latest reference at help.reliasoft.com/reference/accelerated_life_testing_data_analysis

Appendix A: Brief Statistical Background

In this appendix, we attempt to provide a brief elementary introduction to the most common and fundamental statistical equations and definitions used in reliability engineering and life data analysis. The equations and concepts presented in this appendix are used extensively throughout this reference.

Random Variables

In general, most problems in reliability engineering deal with quantitative measures, such as the time-to-failure of a component, or qualitative measures, such as whether a component is defective or non-defective. We can then use a random variable [math]\displaystyle{ X\,\! }[/math] to denote these possible measures.

In the case of times-to-failure, our random variable [math]\displaystyle{ X\,\! }[/math] is the time-to-failure of the component and can take on an infinite number of possible values in a range from 0 to infinity (since we do not know the exact time a priori). Our component can be found failed at any time after time 0 (e.g., at 12 hours or at 100 hours and so forth), thus [math]\displaystyle{ X\,\! }[/math] can take on any value in this range. In this case, our random variable [math]\displaystyle{ X\,\! }[/math] is said to be a continuous random variable. In this reference, we will deal almost exclusively with continuous random variables.

In judging a component to be defective or non-defective, only two outcomes are possible. That is, [math]\displaystyle{ X\,\! }[/math] is a random variable that can take on one of only two values (let's say defective = 0 and non-defective = 1). In this case, the variable is said to be a discrete random variable.

The Probability Density Function and the Cumulative Distribution Function

The probability density function (pdf) and cumulative distribution function (cdf) are two of the most important statistical functions in reliability and are very closely related. When these functions are known, almost any other reliability measure of interest can be derived or obtained. We will now take a closer look at these functions and how they relate to other reliability measures, such as the reliability function and failure rate.

From probability and statistics, given a continuous random variable [math]\displaystyle{ X,\,\! }[/math] we denote:

- The probability density function, pdf, as [math]\displaystyle{ f(x)\,\! }[/math].

- The cumulative distribution function, cdf, as [math]\displaystyle{ F(x)\,\! }[/math].

The pdf and cdf give a complete description of the probability distribution of a random variable. The following figure illustrates a pdf.

The next figures illustrate the pdf - cdf relationship.

If [math]\displaystyle{ X\,\! }[/math] is a continuous random variable, then the pdf of [math]\displaystyle{ X\,\! }[/math] is a function, [math]\displaystyle{ f(x)\,\! }[/math], such that for any two numbers, [math]\displaystyle{ a\,\! }[/math] and [math]\displaystyle{ b\,\! }[/math] with [math]\displaystyle{ a\le b\,\! }[/math] :

- [math]\displaystyle{ P(a\le X\le b)=\int_{a}^{b}f(x)dx\ \,\! }[/math]

That is, the probability that [math]\displaystyle{ X\,\! }[/math] takes on a value in the interval [math]\displaystyle{ [a,b]\,\! }[/math] is the area under the density function from [math]\displaystyle{ a\,\! }[/math] to [math]\displaystyle{ b,\,\! }[/math] as shown above. The pdf represents the relative frequency of failure times as a function of time.
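The interval probability can be checked numerically. The sketch below integrates an exponential pdf over an interval with Simpson's rule and compares the result with the closed-form difference of the cdf. The failure rate and the interval are hypothetical values chosen only for illustration.

```python
from math import exp


def integrate(f, a, b, n=1000):
    """Composite Simpson's rule on [a, b] with n (even) subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


lam = 0.01  # hypothetical failure rate, per hour


def pdf(x):
    return lam * exp(-lam * x)


# P(50 <= X <= 150): area under the pdf between 50 and 150 hours.
p_numeric = integrate(pdf, 50.0, 150.0)
p_exact = exp(-lam * 50.0) - exp(-lam * 150.0)
```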

The cdf is a function, [math]\displaystyle{ F(x)\,\! }[/math], of a random variable [math]\displaystyle{ X\,\! }[/math], and is defined for a number [math]\displaystyle{ x\,\! }[/math] by:

- [math]\displaystyle{ F(x)=P(X\le x)=\int_{0}^{x}f(s)ds\ \,\! }[/math]

That is, for a number [math]\displaystyle{ x\,\! }[/math], [math]\displaystyle{ F(x)\,\! }[/math] is the probability that the observed value of [math]\displaystyle{ X\,\! }[/math] will be at most [math]\displaystyle{ x\,\! }[/math]. The cdf represents the cumulative values of the pdf. That is, the value of a point on the curve of the cdf represents the area under the curve to the left of that point on the pdf. In reliability, the cdf is used to measure the probability that the item in question will fail before the associated time value, [math]\displaystyle{ t\,\! }[/math], and is also called unreliability.

Note that depending on the density function, denoted by [math]\displaystyle{ f(x)\,\! }[/math], the limits will vary based on the region over which the distribution is defined. For example, for the life distributions considered in this reference, with the exception of the normal distribution, this range would be [math]\displaystyle{ [0,+\infty ).\,\! }[/math]

Mathematical Relationship: pdf and cdf

The mathematical relationship between the pdf and cdf is given by:

- [math]\displaystyle{ F(x)=\int_{0}^{x}f(s)ds \,\! }[/math]

where [math]\displaystyle{ s\,\! }[/math] is a dummy integration variable.

Conversely:

- [math]\displaystyle{ f(x)=\frac{d(F(x))}{dx}\,\! }[/math]

The cdf is the area under the probability density function up to a value of [math]\displaystyle{ x\,\! }[/math]. The total area under the pdf is always equal to 1, or mathematically:

- [math]\displaystyle{ \int_{-\infty}^{+\infty }f(x)dx=1\,\! }[/math]
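As a quick numerical check of these relationships, the sketch below differentiates an exponential cdf with a central difference and compares the slope with the pdf. The exponential distribution and its rate are assumptions made only for this example.

```python
from math import exp

lam = 0.01  # hypothetical failure rate, per hour


def cdf(x):
    return 1.0 - exp(-lam * x)


def pdf(x):
    return lam * exp(-lam * x)


def derivative(g, x, h=1e-4):
    """Central-difference approximation of dg/dx."""
    return (g(x + h) - g(x - h)) / (2 * h)


x = 80.0
slope = derivative(cdf, x)   # should agree with pdf(x)
area_far_right = cdf(2000.0)  # approaches the total area of 1
```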

The well-known normal (or Gaussian) distribution is an example of a probability density function. The pdf for this distribution is given by:

- [math]\displaystyle{ f(t)=\frac{1}{\sigma \sqrt{2\pi }}{{e}^{-\tfrac{1}{2}{{\left( \tfrac{t-\mu }{\sigma } \right)}^{2}}}}\,\! }[/math]

where [math]\displaystyle{ \mu \,\! }[/math] is the mean and [math]\displaystyle{ \sigma \,\! }[/math] is the standard deviation. The normal distribution has two parameters, [math]\displaystyle{ \mu \,\! }[/math] and [math]\displaystyle{ \sigma \,\! }[/math].

Another is the lognormal distribution, whose pdf is given by:

- [math]\displaystyle{ f(t)=\frac{1}{t\cdot {{\sigma }^{\prime }}\sqrt{2\pi }}{{e}^{-\tfrac{1}{2}{{\left( \tfrac{{{t}^{\prime }}-{{\mu }^{\prime }}}{{{\sigma }^{\prime }}} \right)}^{2}}}}\,\! }[/math]

where [math]\displaystyle{ {t}'=\ln (t)\,\! }[/math] is the natural logarithm of the time-to-failure, [math]\displaystyle{ {\mu }'\,\! }[/math] is the mean of the natural logarithms of the times-to-failure and [math]\displaystyle{ {\sigma }'\,\! }[/math] is the standard deviation of the natural logarithms of the times-to-failure. Again, this is a 2-parameter distribution.
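The two density functions above translate directly into code. This is a minimal sketch. The function and argument names are hypothetical, and the lognormal pdf is taken to be zero for non-positive times.

```python
from math import exp, log, pi, sqrt


def normal_pdf(t, mu, sigma):
    """Normal pdf with mean mu and standard deviation sigma."""
    return exp(-0.5 * ((t - mu) / sigma) ** 2) / (sigma * sqrt(2 * pi))


def lognormal_pdf(t, mu_log, sigma_log):
    """Lognormal pdf; mu_log and sigma_log describe ln(t)."""
    if t <= 0:
        return 0.0
    # Same exponent as the normal pdf evaluated at ln(t), divided by t.
    return normal_pdf(log(t), mu_log, sigma_log) / t


peak_normal = normal_pdf(100.0, 100.0, 10.0)
density_at_one = lognormal_pdf(1.0, 0.0, 1.0)
```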

Reliability Function

The reliability function can be derived using the previous definition of the cumulative distribution function, [math]\displaystyle{ F(x)=\int_{0}^{x}f(s)ds \,\! }[/math]. From our definition of the cdf, the probability of an event occurring by time [math]\displaystyle{ t\,\! }[/math] is given by:

- [math]\displaystyle{ F(t)=\int_{0}^{t}f(s)ds\ \,\! }[/math]

Or, one could equate this event to the probability of a unit failing by time [math]\displaystyle{ t\,\! }[/math].

Since this function defines the probability of failure by a certain time, we could consider this the unreliability function. Subtracting this probability from 1 will give us the reliability function, one of the most important functions in life data analysis. The reliability function gives the probability of success of a unit undertaking a mission of a given time duration.

The following figure illustrates this.

To show this mathematically, we first define the unreliability function, [math]\displaystyle{ Q(t)\,\! }[/math], which is the probability of failure, or the probability that our time-to-failure is in the region of 0 and [math]\displaystyle{ t\,\! }[/math]. This is the same as the cdf. So from [math]\displaystyle{ F(t)=\int_{0}^{t}f(s)ds\ \,\! }[/math]:

- [math]\displaystyle{ Q(t)=F(t)=\int_{0}^{t}f(s)ds\,\! }[/math]

Success and failure by time [math]\displaystyle{ t\,\! }[/math] are the only two events being considered and they are mutually exclusive; hence, the sum of their probabilities, reliability and unreliability, is equal to unity.

Then:

- [math]\displaystyle{ \begin{align}

Q(t)+R(t)= & 1 \\

R(t)= & 1-Q(t) \\

R(t)= & 1-\int_{0}^{t}f(s)ds \\

R(t)= & \int_{t}^{\infty }f(s)ds

\end{align}\,\! }[/math]

Conversely:

- [math]\displaystyle{ f(t)=-\frac{d(R(t))}{dt}\,\! }[/math]
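The sketch below illustrates these relationships for a 2-parameter Weibull distribution, which is used here only as an example. The shape and scale values are hypothetical.

```python
from math import exp

BETA, ETA = 1.5, 1000.0  # hypothetical Weibull shape and scale (hours)


def unreliability(t):
    """Q(t) = F(t) for the Weibull distribution."""
    return 1.0 - exp(-((t / ETA) ** BETA))


def reliability(t):
    """R(t) = 1 - Q(t)."""
    return 1.0 - unreliability(t)


def pdf(t):
    return (BETA / ETA) * (t / ETA) ** (BETA - 1) * exp(-((t / ETA) ** BETA))


t, h = 500.0, 1e-3
# Central-difference estimate of -dR/dt, which should agree with f(t).
minus_dR_dt = -(reliability(t + h) - reliability(t - h)) / (2 * h)
```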

Conditional Reliability Function

Conditional reliability is the probability of successfully completing another mission following the successful completion of a previous mission. The time of the previous mission and the time for the mission to be undertaken must be taken into account for conditional reliability calculations. The conditional reliability function is given by:

- [math]\displaystyle{ R(t|T)=\frac{R(T+t)}{R(T)}\ \,\! }[/math]
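A minimal sketch of this calculation follows. The two reliability functions and their parameters are hypothetical. For the exponential distribution, the conditional reliability equals the reliability of a new unit, since its failure rate is constant.

```python
from math import exp


def conditional_reliability(reliability, t, T):
    """R(t|T) = R(T + t) / R(T) for a unit that has already survived T."""
    return reliability(T + t) / reliability(T)


def weibull(t):
    return exp(-((t / 1000.0) ** 1.5))  # hypothetical wear-out behavior


def exponential(t):
    return exp(-0.001 * t)  # hypothetical constant failure rate


r_new = weibull(100.0)                                 # new unit, 100 h mission
r_aged = conditional_reliability(weibull, 100.0, 500.0)  # after 500 h of use
r_exp_aged = conditional_reliability(exponential, 100.0, 500.0)
```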

Failure Rate Function

The failure rate function enables the determination of the number of failures occurring per unit time. Omitting the derivation, the failure rate is mathematically given as:

- [math]\displaystyle{ \lambda (t)=\frac{f(t)}{R(t)}\ \,\! }[/math]

This gives the instantaneous failure rate, also known as the hazard function. It is useful in characterizing the failure behavior of a component, determining maintenance crew allocation, planning for spares provisioning, etc. Failure rate is expressed in failures per unit time.
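As an illustration, the failure rate can be evaluated directly from the pdf and the reliability function. The sketch below uses a Weibull distribution with hypothetical parameters. With a shape parameter greater than 1, the computed rate increases with time.

```python
from math import exp

BETA, ETA = 1.5, 1000.0  # hypothetical Weibull shape and scale (hours)


def pdf(t):
    return (BETA / ETA) * (t / ETA) ** (BETA - 1) * exp(-((t / ETA) ** BETA))


def reliability(t):
    return exp(-((t / ETA) ** BETA))


def failure_rate(t):
    """Instantaneous failure rate, f(t) / R(t)."""
    return pdf(t) / reliability(t)


rates = [failure_rate(t) for t in (100.0, 500.0, 1000.0)]
```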

Mean Life (MTTF)

The mean life function, which provides a measure of the average time of operation to failure, is given by:

- [math]\displaystyle{ \overline{T}=m=\int_{0}^{\infty }t\cdot f(t)dt\,\! }[/math]

This is the expected or average time-to-failure and is denoted as the MTTF (Mean Time To Failure).

The MTTF, even though an index of reliability performance, does not give any information on the failure distribution of the component in question when dealing with most lifetime distributions. Because vastly different distributions can have identical means, it is unwise to use the MTTF as the sole measure of the reliability of a component.
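The sketch below evaluates the MTTF integral numerically for two hypothetical distributions that share the same mean of 1000 hours, then compares their reliability at 200 hours. The infinite upper limit is truncated far in the tail, which is an approximation, and the Weibull scale is chosen using the known Weibull mean so that both means match.

```python
from math import exp, gamma


def integrate(g, a, b, n):
    """Composite Simpson's rule on [a, b] with n (even) subintervals."""
    h = (b - a) / n
    total = g(a) + g(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3


def mttf(pdf, upper=30000.0, n=30000):
    # Truncates the infinite upper limit; 'upper' must lie far in the tail.
    return integrate(lambda t: t * pdf(t), 0.0, upper, n)


LAM = 0.001                             # hypothetical exponential rate
BETA = 3.0                              # hypothetical Weibull shape
ETA = 1000.0 / gamma(1.0 + 1.0 / BETA)  # scale giving a Weibull mean of 1000 h


def exp_pdf(t):
    return LAM * exp(-LAM * t)


def wb_pdf(t):
    return (BETA / ETA) * (t / ETA) ** (BETA - 1) * exp(-((t / ETA) ** BETA))


mttf_exp = mttf(exp_pdf)
mttf_wb = mttf(wb_pdf)
r_exp_200 = exp(-LAM * 200.0)
r_wb_200 = exp(-((200.0 / ETA) ** BETA))
```

Both integrals return approximately 1000 hours, yet the two reliabilities at 200 hours differ substantially, which is why the MTTF alone is a poor summary of reliability.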

Median Life

Median life, [math]\displaystyle{ \breve{T}\,\! }[/math], is the value of the random variable that has exactly one-half of the area under the pdf to its left and one-half to its right. The median is obtained by solving the following equation for [math]\displaystyle{ \breve{T}\,\! }[/math]. (For individual data, the median is the midpoint value.)

- [math]\displaystyle{ \int_{-\infty}^{{\breve{T}}}f(t)dt=0.5\ \,\! }[/math]
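When the cdf is available, the median can be found by solving the equation numerically. The sketch below uses bisection on an exponential cdf with a hypothetical failure rate and compares the result with the closed-form median of the exponential distribution, ln(2) divided by the rate.

```python
from math import exp, log


def median_life(cdf, lo, hi, tol=1e-9):
    """Solve cdf(T) = 0.5 by bisection, assuming cdf is increasing on [lo, hi]."""
    if not cdf(lo) <= 0.5 <= cdf(hi):
        raise ValueError("bracket does not contain the median")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if cdf(mid) < 0.5:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


lam = 0.001  # hypothetical failure rate, per hour
median = median_life(lambda t: 1.0 - exp(-lam * t), 0.0, 1.0e5)
median_exact = log(2) / lam
```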

Modal Life (or Mode)

The modal life (or mode), [math]\displaystyle{ \tilde{T}\,\! }[/math], is the value of [math]\displaystyle{ t\,\! }[/math] that satisfies:

- [math]\displaystyle{ \frac{d\left[ f(t) \right]}{dt}=0\ \,\! }[/math]

For a continuous distribution, the mode is that value of [math]\displaystyle{ t\,\! }[/math] that corresponds to the maximum probability density (the value at which the pdf has its maximum value, or the peak of the curve).
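For a pdf with a single peak, the mode can also be located by a direct search for the maximum. The sketch below applies a ternary search to a lognormal pdf with hypothetical parameters and compares the result with the known closed-form lognormal mode. The search interval is an assumption and must contain the peak.

```python
from math import exp, log, pi, sqrt


def lognormal_pdf(t, mu_log, sigma_log):
    z = (log(t) - mu_log) / sigma_log
    return exp(-0.5 * z * z) / (t * sigma_log * sqrt(2 * pi))


def mode_by_search(pdf, lo, hi, tol=1e-7):
    """Ternary search for the maximum of a single-peaked pdf on [lo, hi]."""
    while hi - lo > tol:
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if pdf(m1) < pdf(m2):
            lo = m1  # the peak lies to the right of m1
        else:
            hi = m2  # the peak lies to the left of m2
    return 0.5 * (lo + hi)


mu_log, sigma_log = 5.0, 0.5  # hypothetical log-mean and log-standard deviation
mode = mode_by_search(lambda t: lognormal_pdf(t, mu_log, sigma_log), 1.0, 1000.0)
mode_exact = exp(mu_log - sigma_log ** 2)  # closed-form lognormal mode
```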

Lifetime Distributions

A statistical distribution is fully described by its pdf. In the previous sections, we used the definition of the pdf to show how all other functions most commonly used in reliability engineering and life data analysis can be derived. The reliability function, failure rate function, mean time function, and median life function can be determined directly from the pdf definition, or [math]\displaystyle{ f(t)\,\! }[/math]. Different distributions exist, such as the normal (Gaussian), exponential, Weibull, etc., and each has a predefined form of [math]\displaystyle{ f(t)\,\! }[/math] that can be found in many references. In fact, there are certain references that are devoted exclusively to different types of statistical distributions. These distributions were formulated by statisticians, mathematicians and engineers to mathematically model or represent certain behavior. For example, the Weibull distribution was formulated by Waloddi Weibull and thus it bears his name. Some distributions tend to better represent life data and are most commonly called "lifetime distributions".

A more detailed introduction to this topic is presented in Life Distributions.