ANOVA for Designed Experiments: Difference between revisions

Chris Kahn (talk | contribs) No edit summary |

|||

| (45 intermediate revisions by 4 users not shown) | |||

| Line 4: | Line 4: | ||

The studies that enable the establishment of a cause-and-effect relationship are called ''experiments''. In experiments the response is investigated by studying only the effect of the factor(s) of interest and excluding all other effects that may provide alternative justifications to the observed change in response. This is done in two ways. First, the levels of the factors to be investigated are carefully selected and then strictly controlled during the execution of the experiment. The aspect of selecting what factor levels should be investigated in the experiment is called the ''design'' of the experiment. The second distinguishing feature of experiments is that observations in an experiment are recorded in a random order. By doing this, it is hoped that the effect of all other factors not being investigated in the experiment will get cancelled out so that the change in the response is the result of only the investigated factors. Using these two techniques, experiments tend to ensure that alternative justifications to observed changes in the response are voided, thereby enabling the establishment of a cause-and-effect relationship between the response and the investigated factors. | The studies that enable the establishment of a cause-and-effect relationship are called ''experiments''. In experiments the response is investigated by studying only the effect of the factor(s) of interest and excluding all other effects that may provide alternative justifications to the observed change in response. This is done in two ways. First, the levels of the factors to be investigated are carefully selected and then strictly controlled during the execution of the experiment. The aspect of selecting what factor levels should be investigated in the experiment is called the ''design'' of the experiment. The second distinguishing feature of experiments is that observations in an experiment are recorded in a random order. 
By doing this, it is hoped that the effect of all other factors not being investigated in the experiment will get cancelled out so that the change in the response is the result of only the investigated factors. Using these two techniques, experiments tend to ensure that alternative justifications to observed changes in the response are voided, thereby enabling the establishment of a cause-and-effect relationship between the response and the investigated factors. | ||

'''Randomization''' | |||

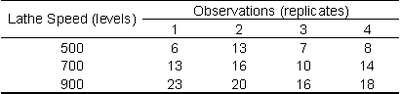

The aspect of recording observations in an experiment in a random order is referred to as ''randomization''. Specifically, randomization is the process of assigning the various levels of the investigated factors to the experimental units in a random fashion. An experiment is said to be ''completely randomized'' if the probability of an experimental unit being subjected to any level of a factor is equal for all the experimental units. The importance of randomization can be illustrated using an example. Consider an experiment where the effect of the speed of a lathe machine on the surface finish of a product is being investigated. In order to save time, the experimenter records surface finish values by running the lathe machine continuously and recording observations in the order of increasing speeds. The analysis of the experiment data shows that an increase in lathe speeds causes a decrease in the quality of surface finish. However, the results of the experiment are disputed by the lathe operator, who claims that he has been able to obtain better surface finish quality in the products by operating the lathe machine at higher speeds. It is later found that the faulty results were caused by overheating of the tool used in the machine. Since the lathe was run continuously in the order of increased speeds, the observations were recorded in the order of increased tool temperatures. This problem could have been avoided if the experimenter had randomized the experiment and taken readings at the various lathe speeds in a random fashion. This would require the experimenter to stop and restart the machine at every observation, thereby keeping the temperature of the tool within a reasonable range. Randomization would have ensured that the effect of heating of the machine tool is not included in the experiment. | The aspect of recording observations in an experiment in a random order is referred to as ''randomization''. 
Specifically, randomization is the process of assigning the various levels of the investigated factors to the experimental units in a random fashion. An experiment is said to be ''completely randomized'' if the probability of an experimental unit being subjected to any level of a factor is equal for all the experimental units. The importance of randomization can be illustrated using an example. Consider an experiment where the effect of the speed of a lathe machine on the surface finish of a product is being investigated. In order to save time, the experimenter records surface finish values by running the lathe machine continuously and recording observations in the order of increasing speeds. The analysis of the experiment data shows that an increase in lathe speeds causes a decrease in the quality of surface finish. However, the results of the experiment are disputed by the lathe operator, who claims that he has been able to obtain better surface finish quality in the products by operating the lathe machine at higher speeds. It is later found that the faulty results were caused by overheating of the tool used in the machine. Since the lathe was run continuously in the order of increased speeds, the observations were recorded in the order of increased tool temperatures. This problem could have been avoided if the experimenter had randomized the experiment and taken readings at the various lathe speeds in a random fashion. This would require the experimenter to stop and restart the machine at every observation, thereby keeping the temperature of the tool within a reasonable range. Randomization would have ensured that the effect of heating of the machine tool is not included in the experiment. | ||
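A completely randomized run order of the kind described above can be sketched in a few lines of Python. This is only an illustration: the speed values, the number of replicates and the function name <code>randomized_run_order</code> are assumptions made for the example and do not come from the experiment discussed here.

```python
import random

def randomized_run_order(levels, replicates, seed=None):
    """Return the factor level to use at each run, in random order.

    Every (level, replicate) combination appears exactly once and the
    whole list is shuffled, so each run position is equally likely to
    receive any of the levels.
    """
    rng = random.Random(seed)  # local generator: a seed makes the plan repeatable
    runs = [level for level in levels for _ in range(replicates)]
    rng.shuffle(runs)
    return runs

# Hypothetical lathe speeds (rpm), four replicates each.
speeds = [500, 600, 700]
plan = randomized_run_order(speeds, replicates=4, seed=1)
for run_number, speed in enumerate(plan, start=1):
    print(run_number, speed)
```

Fixing the seed is a convenience for documenting the plan; any source of random ordering would serve the same purpose.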

| Line 83: | Line 83: | ||

The coefficients of the treatment effects <math>{{\tau }_{1}}\,\!</math> and <math>{{\tau }_{2}}\,\!</math> can be expressed using two indicator variables, <math>{{x}_{1}}\,\!</math> and <math>{{x}_{2}}\,\!</math>, as follows: | The coefficients of the treatment effects <math>{{\tau }_{1}}\,\!</math> and <math>{{\tau }_{2}}\,\!</math> can be expressed using two indicator variables, <math>{{x}_{1}}\,\!</math> and <math>{{x}_{2}}\,\!</math>, as follows: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 103: | Line 104: | ||

The equation given above represents the "regression version" of the ANOVA model. | The equation given above represents the "regression version" of the ANOVA model. | ||

It can be seen from the equation given above that in an ANOVA model each factor is treated as a qualitative factor. In the present example the factor, lathe speed, is a quantitative factor with three levels. But the ANOVA model treats this factor as a qualitative factor with three levels. Therefore, two indicator variables, <math>{{x}_{1}}\,\!</math> and <math>{{x}_{2}}\,\!</math>, are required to represent this factor | ===Treat Numerical Factors as Qualitative or Quantitative?=== | ||

It can be seen from the equation given above that in an ANOVA model each factor is treated as a qualitative factor. In the present example the factor, lathe speed, is a quantitative factor with three levels. But the ANOVA model treats this factor as a qualitative factor with three levels. Therefore, two indicator variables, <math>{{x}_{1}}\,\!</math> and <math>{{x}_{2}}\,\!</math>, are required to represent this factor. | |||

The | Note that in a regression model a variable can either be treated as a quantitative or a qualitative variable. The factor, lathe speed, would be used as a quantitative factor and represented with a single predictor variable in a regression model. For example, if a first order model were to be fitted to the data in the first table, then the regression model would take the form <math>{{Y}_{ij}}={{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\epsilon }_{ij}}\,\!</math>. If a second order regression model were to be fitted, the regression model would be <math>{{Y}_{ij}}={{\beta }_{0}}+{{\beta }_{1}}{{x}_{i1}}+{{\beta }_{2}}x_{i1}^{2}+{{\epsilon }_{ij}}\,\!</math>. Notice that unlike these regression models, the regression version of the ANOVA model does not make any assumption about the nature of relationship between the response and the factor being investigated. | ||

The choice of treating a particular factor as a quantitative or qualitative variable depends on the objective of the experimenter. In the case of the data of the first table, the objective of the experimenter is to compare the levels of the factor to see if change in the levels leads to a significant change in the response. The objective is not to make predictions on the response for a given level of the factor. Therefore, the factor is treated as a qualitative factor in this case. If the objective of the experimenter were prediction or optimization, the experimenter would focus on aspects such as the nature of relationship between the factor, lathe speed, and the response, surface finish, so that the factor should be modeled as a quantitative factor to make accurate predictions. | |||
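The difference between the two treatments of a factor shows up directly in the columns of the design matrix. The sketch below builds both versions for a three-level factor. The indicator coding used here (the last level coded as -1 in every column) is one common convention and is an assumption of this sketch, as are the speed values and the function names.

```python
def effect_coded_row(level_index, n_levels):
    """Indicator variables for one observation at the given level (0-based).

    Assumed coding: level k (not the last) gets a 1 in column k and 0
    elsewhere; the last level gets -1 in every column.
    """
    if level_index == n_levels - 1:
        return [-1] * (n_levels - 1)
    return [1 if k == level_index else 0 for k in range(n_levels - 1)]

def qualitative_matrix(level_indices, n_levels):
    """Rows [1, x1, ..., x_(a-1)]: the factor is treated as qualitative."""
    return [[1] + effect_coded_row(i, n_levels) for i in level_indices]

def quantitative_matrix(values, order):
    """Rows [1, x, x^2, ...]: the factor is treated as quantitative."""
    return [[v ** p for p in range(order + 1)] for v in values]

# Hypothetical speeds for the three levels, two observations per level.
speeds = [500, 600, 700]
level_indices = [0, 0, 1, 1, 2, 2]

X_anova = qualitative_matrix(level_indices, 3)                       # two indicator columns
X_quad = quantitative_matrix([speeds[i] for i in level_indices], 2)  # second order model

for row_a, row_q in zip(X_anova, X_quad):
    print(row_a, row_q)
```

The qualitative matrix never uses the numerical speed values, which is why the ANOVA model makes no assumption about the shape of the relationship between speed and response.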

====Expression of the ANOVA Model in the Form <math>y=X\beta +\epsilon \,\!</math>==== | ====Expression of the ANOVA Model in the Form <math>y=X\beta +\epsilon \,\!</math>==== | ||

| Line 131: | Line 134: | ||

::<math>y=X\beta +\epsilon \,\!</math> | ::<math>y=X\beta +\epsilon \,\!</math> | ||

:where | :where | ||

::<math>y=\left[ \begin{matrix} | ::<math>y=\left[ \begin{matrix} | ||

| Line 172: | Line 177: | ||

:Thus: | :Thus: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 222: | Line 228: | ||

The hypothesis test in single factor experiments examines the ANOVA model to see if the response at any level of the investigated factor is significantly different from that at the other levels. If this is not the case and the response at all levels is not significantly different, then it can be concluded that the investigated factor does not affect the response. The test on the ANOVA model is carried out by checking to see if any of the treatment effects, <math>{{\tau }_{i}}\,\!</math>, are non-zero. The test is similar to the test of significance of regression mentioned in [[Simple_Linear_Regression_Analysis| Simple Linear Regression Analysis]] and [[Multiple_Linear_Regression_Analysis| Multiple Linear Regression Analysis]] in the context of regression models. The hypotheses statements for this test are: | The hypothesis test in single factor experiments examines the ANOVA model to see if the response at any level of the investigated factor is significantly different from that at the other levels. If this is not the case and the response at all levels is not significantly different, then it can be concluded that the investigated factor does not affect the response. The test on the ANOVA model is carried out by checking to see if any of the treatment effects, <math>{{\tau }_{i}}\,\!</math>, are non-zero. The test is similar to the test of significance of regression mentioned in [[Simple_Linear_Regression_Analysis| Simple Linear Regression Analysis]] and [[Multiple_Linear_Regression_Analysis| Multiple Linear Regression Analysis]] in the context of regression models. The hypotheses statements for this test are: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 230: | Line 237: | ||

The test for <math>{{H}_{0}}\,\!</math> is carried out using the following statistic: | The test for <math>{{H}_{0}}\,\!</math> is carried out using the following statistic: | ||

::<math>{{F}_{0}}=\frac{M{{S}_{TR}}}{M{{S}_{E}}}\,\!</math> | ::<math>{{F}_{0}}=\frac{M{{S}_{TR}}}{M{{S}_{E}}}\,\!</math> | ||

where <math>M{{S}_{TR}}\,\!</math> represents the mean square for the ANOVA model and <math>M{{S}_{E}}\,\!</math> is the error mean square. Note that in the case of ANOVA models we use the notation <math>M{{S}_{TR}}\,\!</math> (treatment mean square) for the model mean square and <math>S{{S}_{TR}}\,\!</math> (treatment sum of squares) for the model sum of squares (instead of <math>M{{S}_{R}}\,\!</math>, regression mean square, and <math>S{{S}_{R}}\,\!</math>, regression sum of squares, used in | where <math>M{{S}_{TR}}\,\!</math> represents the mean square for the ANOVA model and <math>M{{S}_{E}}\,\!</math> is the error mean square. Note that in the case of ANOVA models we use the notation <math>M{{S}_{TR}}\,\!</math> (treatment mean square) for the model mean square and <math>S{{S}_{TR}}\,\!</math> (treatment sum of squares) for the model sum of squares (instead of <math>M{{S}_{R}}\,\!</math>, regression mean square, and <math>S{{S}_{R}}\,\!</math>, regression sum of squares, used in [[Simple_Linear_Regression_Analysis| Simple Linear Regression Analysis]] and [[Multiple_Linear_Regression_Analysis| Multiple Linear Regression Analysis]]). This is done to indicate that the model under consideration is the ANOVA model and not the regression model. The calculations to obtain <math>M{{S}_{TR}}\,\!</math> and <math>S{{S}_{TR}}\,\!</math> are identical to the calculations to obtain <math>M{{S}_{R}}\,\!</math> and <math>S{{S}_{R}}\,\!</math> explained in [[Multiple_Linear_Regression_Analysis| Multiple Linear Regression Analysis]]. | ||
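As a rough illustration of the statistic, the following Python sketch computes <math>S{{S}_{TR}}\,\!</math>, <math>S{{S}_{E}}\,\!</math> and <math>{{F}_{0}}\,\!</math> for a single factor experiment. It uses the scalar sums over treatment means, which for this model give the same values as the matrix expressions; the data are hypothetical and the function name is an assumption of the example.

```python
def one_way_anova(groups):
    """Return (SS_TR, SS_E, dof_TR, dof_E, F0) for a list of treatment groups."""
    all_values = [y for g in groups for y in g]
    n = len(all_values)
    grand_mean = sum(all_values) / n
    means = [sum(g) / len(g) for g in groups]

    # Treatment sum of squares: spread of the treatment means about the grand mean.
    ss_tr = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Error sum of squares: spread of observations about their own treatment mean.
    ss_e = sum((y - m) ** 2 for g, m in zip(groups, means) for y in g)

    dof_tr = len(groups) - 1
    dof_e = n - len(groups)
    f0 = (ss_tr / dof_tr) / (ss_e / dof_e)  # F0 = MS_TR / MS_E
    return ss_tr, ss_e, dof_tr, dof_e, f0

# Hypothetical responses: three levels, four replicates each.
groups = [
    [8.0, 10.0, 9.0, 9.0],
    [12.0, 11.0, 13.0, 12.0],
    [15.0, 14.0, 16.0, 15.0],
]
ss_tr, ss_e, dof_tr, dof_e, f0 = one_way_anova(groups)
print(ss_tr, ss_e, dof_tr, dof_e, f0)
```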

| Line 240: | Line 248: | ||

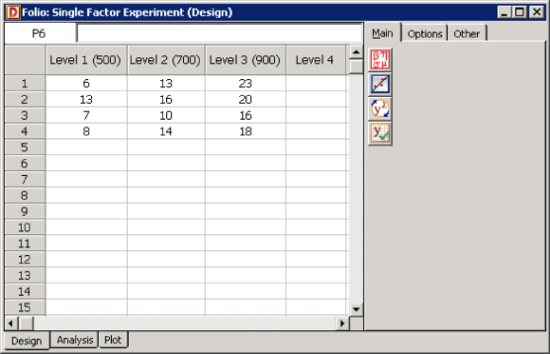

The sum of squares to obtain the statistic <math>{{F}_{0}}\,\!</math> can be calculated as explained in [[Multiple_Linear_Regression_Analysis| Multiple Linear Regression Analysis]]. Using the data in the first table, the model sum of squares, <math>S{{S}_{TR}}\,\!</math>, can be calculated as: | The sum of squares to obtain the statistic <math>{{F}_{0}}\,\!</math> can be calculated as explained in [[Multiple_Linear_Regression_Analysis| Multiple Linear Regression Analysis]]. Using the data in the first table, the model sum of squares, <math>S{{S}_{TR}}\,\!</math>, can be calculated as: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 268: | Line 277: | ||

In the previous equation, <math>{{n}_{a}}\,\!</math> represents the number of levels of the factor, <math>m\,\!</math> represents the replicates at each level, <math>y\,\!</math> represents the vector of the response values, <math>H\,\!</math> represents the hat matrix and <math>J\,\!</math> represents the matrix of ones. (For details on each of these terms, refer to [[Multiple_Linear_Regression_Analysis| Multiple Linear Regression Analysis]].) | In the previous equation, <math>{{n}_{a}}\,\!</math> represents the number of levels of the factor, <math>m\,\!</math> represents the replicates at each level, <math>y\,\!</math> represents the vector of the response values, <math>H\,\!</math> represents the hat matrix and <math>J\,\!</math> represents the matrix of ones. (For details on each of these terms, refer to [[Multiple_Linear_Regression_Analysis| Multiple Linear Regression Analysis]].) | ||

Since two effect terms, <math>{{\tau }_{1}}\,\!</math> and <math>{{\tau }_{2}}\,\!</math>, are used in the regression version of the ANOVA model, the degrees of freedom associated with the model sum of squares, <math>S{{S}_{TR}}\,\!</math>, is two. | Since two effect terms, <math>{{\tau }_{1}}\,\!</math> and <math>{{\tau }_{2}}\,\!</math>, are used in the regression version of the ANOVA model, the degrees of freedom associated with the model sum of squares, <math>S{{S}_{TR}}\,\!</math>, is two. | ||

::<math>dof(S{{S}_{TR}})=2\,\!</math> | ::<math>dof(S{{S}_{TR}})=2\,\!</math> | ||

| Line 273: | Line 283: | ||

The total sum of squares, <math>S{{S}_{T}}\,\!</math>, can be obtained as follows: | The total sum of squares, <math>S{{S}_{T}}\,\!</math>, can be obtained as follows: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 298: | Line 309: | ||

\end{align}\,\!</math> | \end{align}\,\!</math> | ||

In the previous equation, <math>I\,\!</math> is the identity matrix. Since there are 12 data points in all, the number of degrees of freedom associated with <math>S{{S}_{T}}\,\!</math> is 11. | In the previous equation, <math>I\,\!</math> is the identity matrix. Since there are 12 data points in all, the number of degrees of freedom associated with <math>S{{S}_{T}}\,\!</math> is 11. | ||

| Line 338: | Line 348: | ||

The <math>p\,\!</math> value for the statistic based on the <math>F\,\!</math> distribution with 2 degrees of freedom in the numerator and 9 degrees of freedom in the denominator is: | The <math>p\,\!</math> value for the statistic based on the <math>F\,\!</math> distribution with 2 degrees of freedom in the numerator and 9 degrees of freedom in the denominator is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 349: | Line 360: | ||

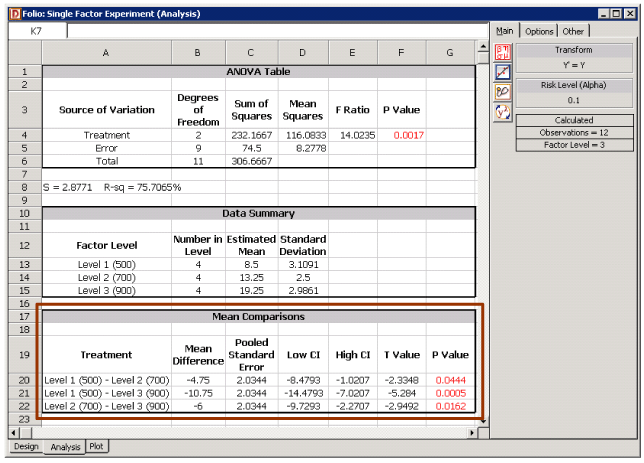

[[Image:doe6.2.png|thumb|center| | [[Image:doe6.2.png|thumb|center|650px|ANOVA table for the data in the first table.]] | ||
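When the numerator has exactly 2 degrees of freedom, as in this example, the upper-tail probability of the <math>F\,\!</math> distribution has a simple closed form, so the <math>p\,\!</math> value can be checked without statistical tables. The sketch below relies on that special case only; the function name and the statistic value passed to it are illustrative assumptions.

```python
def f_upper_tail_dof2(f0, dof_e):
    """P(F > f0) for an F distribution with 2 and dof_e degrees of freedom.

    Closed form (1 + 2*f0/dof_e) ** (-dof_e/2); it holds only when the
    numerator has exactly 2 degrees of freedom.
    """
    if f0 < 0:
        raise ValueError("the F statistic cannot be negative")
    return (1.0 + 2.0 * f0 / dof_e) ** (-dof_e / 2.0)

# Hypothetical statistic with 2 and 9 degrees of freedom.
p_value = f_upper_tail_dof2(5.0, 9)
print(p_value)
```

For other numerator degrees of freedom a general routine (for example, an incomplete beta function from a statistics library) would be needed.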

===Confidence Interval on the < | ===Confidence Interval on the ''i''<sup>th</sup> Treatment Mean=== | ||

The response at each treatment of a single factor experiment can be assumed to be a normal population with a mean of <math>{{\mu }_{i}}\,\!</math> and variance of <math>{{\sigma }^{2}}\,\!</math> provided that the error terms can be assumed to be normally distributed. A point estimator of <math>{{\mu }_{i}}\,\!</math> is the average response at each treatment, <math>{{\bar{y}}_{i\cdot }}\,\!</math>. Since this is a sample average, the associated variance is <math>{{\sigma }^{2}}/{{m}_{i}}\,\!</math>, where <math>{{m}_{i}}\,\!</math> is the number of replicates at the <math>i\,\!</math>th treatment. Therefore, the confidence interval on <math>{{\mu }_{i}}\,\!</math> is based on the <math>t\,\!</math> distribution. Recall from [[Statistical_Background_on_DOE| Statistical Background on DOE]] (inference on population mean when variance is unknown) that: | The response at each treatment of a single factor experiment can be assumed to be a normal population with a mean of <math>{{\mu }_{i}}\,\!</math> and variance of <math>{{\sigma }^{2}}\,\!</math> provided that the error terms can be assumed to be normally distributed. A point estimator of <math>{{\mu }_{i}}\,\!</math> is the average response at each treatment, <math>{{\bar{y}}_{i\cdot }}\,\!</math>. Since this is a sample average, the associated variance is <math>{{\sigma }^{2}}/{{m}_{i}}\,\!</math>, where <math>{{m}_{i}}\,\!</math> is the number of replicates at the <math>i\,\!</math>th treatment. Therefore, the confidence interval on <math>{{\mu }_{i}}\,\!</math> is based on the <math>t\,\!</math> distribution. Recall from [[Statistical_Background_on_DOE| Statistical Background on DOE]] (inference on population mean when variance is unknown) that: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 362: | Line 375: | ||

has a <math>t\,\!</math> distribution with degrees of freedom <math>=dof(S{{S}_{E}})\,\!</math>. Therefore, a 100 (<math>1-\alpha \,\!</math>) percent confidence interval on the <math>i\,\!</math>th treatment mean, <math>{{\mu }_{i}}\,\!</math>, is: | has a <math>t\,\!</math> distribution with degrees of freedom <math>=dof(S{{S}_{E}})\,\!</math>. Therefore, a 100 (<math>1-\alpha \,\!</math>) percent confidence interval on the <math>i\,\!</math>th treatment mean, <math>{{\mu }_{i}}\,\!</math>, is: | ||

::<math>{{\bar{y}}_{i\cdot }}\pm {{t}_{\alpha /2,dof(S{{S}_{E}})}}\sqrt{\frac{M{{S}_{E}}}{{{m}_{i}}}}\,\!</math> | ::<math>{{\bar{y}}_{i\cdot }}\pm {{t}_{\alpha /2,dof(S{{S}_{E}})}}\sqrt{\frac{M{{S}_{E}}}{{{m}_{i}}}}\,\!</math> | ||
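The interval above can be evaluated with a short Python sketch. The critical value of the <math>t\,\!</math> distribution is passed in as an argument (read from a table), and the observations and the value of <math>M{{S}_{E}}\,\!</math> are hypothetical; the function name is an assumption of the example.

```python
import math

def treatment_mean_ci(observations, ms_e, t_crit):
    """Confidence interval ybar_i +/- t_crit * sqrt(MS_E / m_i) for one treatment."""
    m_i = len(observations)
    ybar = sum(observations) / m_i
    half_width = t_crit * math.sqrt(ms_e / m_i)
    return ybar - half_width, ybar + half_width

# Hypothetical inputs: four replicates, MS_E with 9 error degrees of freedom,
# and the tabulated value t(0.05, 9) = 1.833 for a 90% two-sided interval.
low, high = treatment_mean_ci([8.0, 10.0, 9.0, 9.0], ms_e=6.0 / 9.0, t_crit=1.833)
print(low, high)
```

Note that the interval uses the pooled <math>M{{S}_{E}}\,\!</math> from all treatments, not the sample variance of the single treatment being estimated.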

| Line 367: | Line 381: | ||

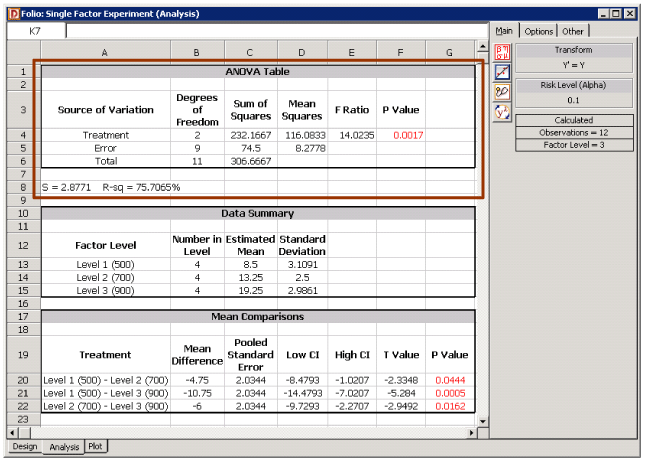

For example, for the first treatment of the lathe speed we have: | For example, for the first treatment of the lathe speed we have: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 375: | Line 390: | ||

In DOE++, this value is displayed as the Estimated Mean for the first level, as shown in the Data Summary | In DOE++, this value is displayed as the Estimated Mean for the first level, as shown in the Data Summary table in the figure below. The value displayed as the standard deviation for this level is simply the sample standard deviation calculated using the observations corresponding to this level. The 90% confidence interval for this treatment is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 389: | Line 405: | ||

[[Image:doe6.3.png|thumb|center| | [[Image:doe6.3.png|thumb|center|650px|Data Summary table for the single factor experiment in the first table.]] | ||

===Confidence Interval on the Difference in Two Treatment Means=== | ===Confidence Interval on the Difference in Two Treatment Means=== | ||

The confidence interval on the difference in two treatment means, <math>{{\mu }_{i}}-{{\mu }_{j}}\,\!</math>, is used to compare two levels of the factor at a given significance. If the confidence interval does not include the value of zero, it is concluded that the two levels of the factor are significantly different. The point estimator of <math>{{\mu }_{i}}-{{\mu }_{j}}\,\!</math> is <math>{{\bar{y}}_{i\cdot }}-{{\bar{y}}_{j\cdot }}\,\!</math>. The variance for <math>{{\bar{y}}_{i\cdot }}-{{\bar{y}}_{j\cdot }}\,\!</math> is: | The confidence interval on the difference in two treatment means, <math>{{\mu }_{i}}-{{\mu }_{j}}\,\!</math>, is used to compare two levels of the factor at a given significance. If the confidence interval does not include the value of zero, it is concluded that the two levels of the factor are significantly different. The point estimator of <math>{{\mu }_{i}}-{{\mu }_{j}}\,\!</math> is <math>{{\bar{y}}_{i\cdot }}-{{\bar{y}}_{j\cdot }}\,\!</math>. The variance for <math>{{\bar{y}}_{i\cdot }}-{{\bar{y}}_{j\cdot }}\,\!</math> is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 426: | Line 444: | ||

Then a 100 (1- <math>\alpha \,\!</math>) percent confidence interval on the difference in two treatment means, <math>{{\mu }_{i}}-{{\mu }_{j}}\,\!</math>, is: | Then a 100 (1- <math>\alpha \,\!</math>) percent confidence interval on the difference in two treatment means, <math>{{\mu }_{i}}-{{\mu }_{j}}\,\!</math>, is: | ||

::<math>{{\bar{y}}_{i\cdot }}-{{\bar{y}}_{j\cdot }}\pm {{t}_{\alpha /2,dof(S{{S}_{E}})}}\sqrt{\frac{2M{{S}_{E}}}{m}}\,\!</math> | ::<math>{{\bar{y}}_{i\cdot }}-{{\bar{y}}_{j\cdot }}\pm {{t}_{\alpha /2,dof(S{{S}_{E}})}}\sqrt{\frac{2M{{S}_{E}}}{m}}\,\!</math> | ||
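A minimal sketch of the comparison of two levels follows, assuming an equal number of replicates <math>m\,\!</math> at both levels as in the interval above. The treatment averages, <math>M{{S}_{E}}\,\!</math> and the tabulated critical value are hypothetical inputs, and the function name is illustrative.

```python
import math

def compare_two_means(ybar_i, ybar_j, ms_e, m, t_crit):
    """Return (t0, low, high) for the difference mu_i - mu_j.

    std_err is the pooled standard error sqrt(2 * MS_E / m); t0 is the
    statistic for testing that the difference is zero.
    """
    diff = ybar_i - ybar_j
    std_err = math.sqrt(2.0 * ms_e / m)
    t0 = diff / std_err
    return t0, diff - t_crit * std_err, diff + t_crit * std_err

# Hypothetical treatment averages with m = 4 replicates each and 9 error
# degrees of freedom; t(0.05, 9) = 1.833 gives a 90% interval.
t0, low, high = compare_two_means(9.0, 12.0, ms_e=6.0 / 9.0, m=4, t_crit=1.833)
significant = not (low <= 0.0 <= high)  # zero outside the interval
print(t0, low, high, significant)
```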

| Line 442: | Line 459: | ||

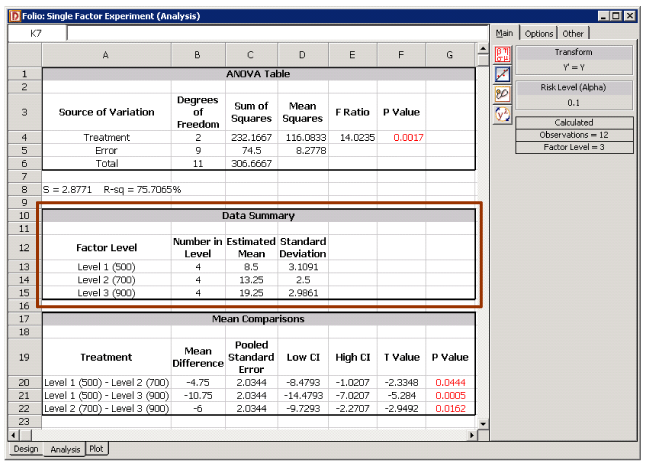

The pooled standard error for this difference is: | The pooled standard error for this difference is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 452: | Line 470: | ||

To test <math>{{H}_{0}} | To test <math>{{H}_{0}}:{{\mu }_{1}}-{{\mu }_{2}}=0\,\!</math>, the <math>t\,\!</math> statistic is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 463: | Line 482: | ||

In DOE++, the value of the statistic is displayed in the Mean Comparisons table under the column T Value as shown in the figure below. The 90% confidence interval on the difference <math>{{\mu }_{1}}-{{\mu }_{2}}\,\!</math> is: | In DOE++, the value of the statistic is displayed in the Mean Comparisons table under the column T Value as shown in the figure below. The 90% confidence interval on the difference <math>{{\mu }_{1}}-{{\mu }_{2}}\,\!</math> is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 491: | Line 511: | ||

[[Image:doe6.4.png|thumb|center| | [[Image:doe6.4.png|thumb|center|644px|Mean Comparisons table for the data in the first table.]] | ||

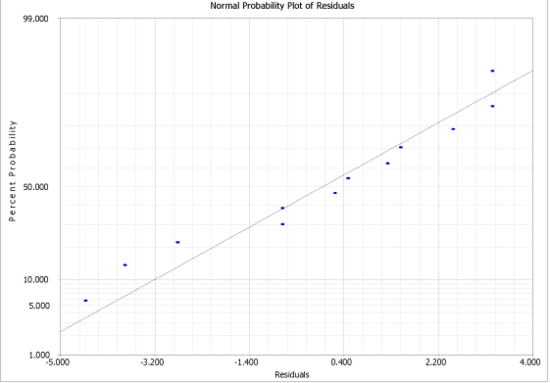

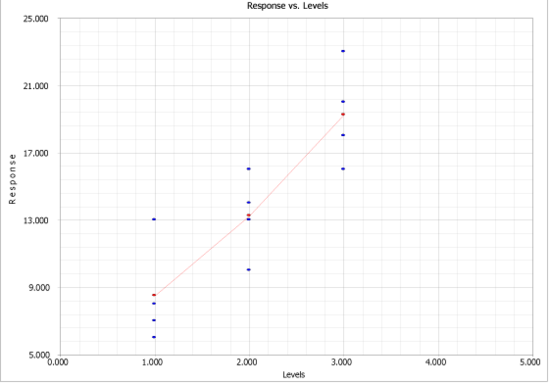

Equality of variance is checked by plotting residuals against the treatments and the treatment averages, <math>{{\bar{y}}_{i\cdot }}\,\!</math> (also referred to as fitted values), and inspecting the spread in the residuals. If a pattern is seen in these plots, then this indicates the need to use a suitable transformation on the response that will ensure variance equality. Box-Cox transformations are discussed in the next section. To check for independence of the random error terms, residuals are plotted against time or run order to ensure that a pattern does not exist in these plots. Residual plots for the given example are shown in the following two figures. The plots give no indication that the assumptions associated with the ANOVA model are violated. | Equality of variance is checked by plotting residuals against the treatments and the treatment averages, <math>{{\bar{y}}_{i\cdot }}\,\!</math> (also referred to as fitted values), and inspecting the spread in the residuals. If a pattern is seen in these plots, then this indicates the need to use a suitable transformation on the response that will ensure variance equality. Box-Cox transformations are discussed in the next section. To check for independence of the random error terms, residuals are plotted against time or run order to ensure that a pattern does not exist in these plots. Residual plots for the given example are shown in the following two figures. The plots give no indication that the assumptions associated with the ANOVA model are violated. | ||

[[Image:doe6.5.png|thumb|center| | [[Image:doe6.5.png|thumb|center|550px|Normal probability plot of residuals for the single factor experiment in the first table.]] | ||

[[Image:doe6.6.png|thumb|center|550px|Plot of residuals against fitted values for the single factor experiment in the first table.]] | |||

===Box-Cox Method=== | ===Box-Cox Method=== | ||

Transformations on the response may be used when residual plots for an experiment show a pattern. This indicates that the equality of variance does not hold for the residuals of the given model. The Box-Cox method can be used to automatically identify a suitable power transformation for the data based on the | Transformations on the response may be used when residual plots for an experiment show a pattern. This indicates that the equality of variance does not hold for the residuals of the given model. The Box-Cox method can be used to automatically identify a suitable power transformation for the data based on the following relationship: | ||

::<math>{{Y}^{*}}={{Y}^{\lambda }}\,\!</math> | ::<math>{{Y}^{*}}={{Y}^{\lambda }}\,\!</math> | ||

<math>\lambda \,\!</math> is determined using the given data such that <math>S{{S}_{E}}\,\!</math> is minimized. The values of <math>{{Y}^{\lambda }}\,\!</math> are not used as is because of issues related to calculation or comparison of <math>S{{S}_{E}}\,\!</math> values for different values of <math>\lambda \,\!</math>. For example, for <math>\lambda =0\,\!</math> all response values will become 1. Therefore, the following | <math>\lambda \,\!</math> is determined using the given data such that <math>S{{S}_{E}}\,\!</math> is minimized. The values of <math>{{Y}^{\lambda }}\,\!</math> are not used as is because of issues related to calculation or comparison of <math>S{{S}_{E}}\,\!</math> values for different values of <math>\lambda \,\!</math>. For example, for <math>\lambda =0\,\!</math> all response values will become 1. Therefore, the following relationship is used to obtain <math>{{Y}^{\lambda }}\,\!</math> : | ||

::<math>Y^{\lambda }=\left\{ | |||

\begin{array}{cc} | |||

\frac{y^{\lambda }-1}{\lambda \dot{y}^{\lambda -1}} & \lambda \neq 0 \\ | |||

\dot{y}\ln y & \lambda =0 | |||

\end{array} | |||

\right.\,\!</math> | |||

where <math>\dot{y}=\exp \left[ \frac{1}{n}\sum\limits_{i=1}^{n}\ln y_{i}\right]\,\!</math> is the geometric mean of the response values. | |||
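The normalized transformation can be sketched in Python as follows. The sketch takes <math>\dot{y}\,\!</math> to be the geometric mean of the responses (the exponential of the average of <math>\ln y\,\!</math>), as in the standard Box-Cox formulation, and assumes strictly positive responses; the function names and data are illustrative.

```python
import math

def geometric_mean(values):
    """exp of the average of ln(y); requires strictly positive values."""
    return math.exp(sum(math.log(y) for y in values) / len(values))

def box_cox(values, lam):
    """Normalized Box-Cox transform of all responses for a given lambda."""
    g = geometric_mean(values)
    if lam == 0:
        return [g * math.log(y) for y in values]
    return [(y ** lam - 1.0) / (lam * g ** (lam - 1.0)) for y in values]

# Hypothetical responses.
y = [1.0, 2.0, 4.0]
print(box_cox(y, 1.0))    # lambda = 1 only shifts the data by -1
print(box_cox(y, 0.0))    # log branch
print(box_cox(y, 1e-6))   # close to the log branch, showing continuity at 0
```

Dividing by <math>\lambda {{\dot{y}}^{\lambda -1}}\,\!</math> keeps the transformed values on a comparable scale, which is what makes <math>S{{S}_{E}}\,\!</math> values for different <math>\lambda \,\!</math> comparable.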

Once all <math>{{Y}^{\lambda }}\,\!</math> values are obtained for a value of <math>\lambda \,\!</math>, the corresponding <math>S{{S}_{E}}\,\!</math> for these values is obtained using <math>{{y}^{\lambda \prime }}[I-H]{{y}^{\lambda }}\,\!</math>. The process is repeated for a number of <math>\lambda \,\!</math> values to obtain a plot of <math>S{{S}_{E}}\,\!</math> against <math>\lambda \,\!</math>. Then the value of <math>\lambda \,\!</math> corresponding to the minimum <math>S{{S}_{E}}\,\!</math> is selected as the required transformation for the given data. DOE++ plots <math>\ln S{{S}_{E}}\,\!</math> values against <math>\lambda \,\!</math> values because the range of <math>S{{S}_{E}}\,\!</math> values is large; without the logarithm, not all values could be displayed on the same plot. The range of search for the best <math>\lambda \,\!</math> value in the software is from <math>-5\,\!</math> to <math>5\,\!</math>, because larger values of <math>\lambda \,\!</math> are usually not meaningful. DOE++ also displays a recommended transformation based on the best <math>\lambda \,\!</math> value obtained as shown in the table below. | |||

<table border="2" cellpadding="5" align="center" > | |||

<tr> | |||

<th><font size="3">Best Lambda</font></th> | |||

<th><font size="3">Recommended Transformation</font></th> | |||

<th><font size="3">Equation</font></th> | |||

</tr> | |||

<tr align="center"> | |||

<td><math>-2.5<\lambda \leq -1.5\,\!</math></td> | |||

<td><math>\begin{array}{c}\text{Power} \\ \lambda =-2\end{array}\,\!</math></td> | |||

<td><math>Y^{\ast }=\frac{1}{Y^{2}}\,\!</math></td> | |||

</tr> | |||

<tr align="center"> | |||

<td><math>-1.5<\lambda \leq -0.75\,\!</math></td> | |||

<td><math>\begin{array}{c}\text{Reciprocal} \\ \lambda =-1\end{array}\,\!</math></td> | |||

<td><math>Y^{\ast }=\frac{1}{Y}\,\!</math></td> | |||

</tr> | |||

<tr align="center"> | |||

<td><math>-0.75<\lambda \leq -0.25\,\!</math></td> | |||

<td><math>\begin{array}{c}\text{Reciprocal Square Root} \\ \lambda =-0.5\end{array}\,\!</math></td> | |||

<td><math>Y^{\ast }=\frac{1}{\sqrt{Y}}\,\!</math></td> | |||

</tr> | |||

<tr align="center"> | |||

<td><math>-0.25<\lambda \leq 0.25\,\!</math></td> | |||

<td><math>\begin{array}{c}\text{Natural Log} \\ \lambda =0\end{array}\,\!</math></td> | |||

<td><math>Y^{\ast }=\ln Y\,\!</math></td> | |||

</tr> | |||

<tr align="center"> | |||

<td><math>0.25<\lambda \leq 0.75\,\!</math></td> | |||

<td><math>\begin{array}{c}\text{Square Root} \\ \lambda =0.5\end{array}\,\!</math></td> | |||

<td><math>Y^{\ast }=\sqrt{Y}\,\!</math></td> | |||

</tr> | |||

<tr align="center"> | |||

<td><math>0.75<\lambda \leq 1.5\,\!</math></td> | |||

<td><math>\begin{array}{c}\text{None} \\ \lambda =1\end{array}\,\!</math></td> | |||

<td><math>Y^{\ast }=Y\,\!</math></td> | |||

</tr> | |||

<tr align="center"> | |||

<td><math>1.5<\lambda \leq 2.5\,\!</math></td> | |||

<td><math>\begin{array}{c}\text{Power} \\ \lambda =2\end{array}\,\!</math></td> | |||

<td><math>Y^{\ast }=Y^{2}\,\!</math></td> | |||

</tr> | |||

</table> | |||
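The search for the best <math>\lambda \,\!</math> and the lookup in the table above can be sketched together in Python. This is an illustrative reimplementation, not the procedure used internally by DOE++: the grid step of 0.1, the use of the geometric mean for <math>\dot{y}\,\!</math>, the hypothetical data and all function names are assumptions. For the single factor model, <math>S{{S}_{E}}\,\!</math> is computed as the sum of squared deviations of the transformed responses about their treatment averages, which equals <math>{{y}^{\lambda \prime }}[I-H]{{y}^{\lambda }}\,\!</math> for this model.

```python
import math

def box_cox(values, lam):
    """Normalized Box-Cox transform (assumes strictly positive values)."""
    g = math.exp(sum(math.log(y) for y in values) / len(values))  # geometric mean
    if lam == 0:
        return [g * math.log(y) for y in values]
    return [(y ** lam - 1.0) / (lam * g ** (lam - 1.0)) for y in values]

def sse_one_way(groups, lam):
    """SS_E of the single factor model fitted to the transformed responses."""
    flat = box_cox([y for g in groups for y in g], lam)
    sse, start = 0.0, 0
    for m in (len(g) for g in groups):
        block = flat[start:start + m]
        mean = sum(block) / m
        sse += sum((y - mean) ** 2 for y in block)
        start += m
    return sse

def best_lambda(groups, lo=-5.0, hi=5.0, step=0.1):
    """Grid search over [lo, hi] for the lambda giving the smallest SS_E."""
    n_steps = int(round((hi - lo) / step))
    grid = [round(lo + k * step, 10) for k in range(n_steps + 1)]
    return min(grid, key=lambda lam: sse_one_way(groups, lam))

# (lower, upper, name, lambda) rows copied from the table above.
RECOMMENDED = [
    (-2.5, -1.5, "Power", -2.0),
    (-1.5, -0.75, "Reciprocal", -1.0),
    (-0.75, -0.25, "Reciprocal Square Root", -0.5),
    (-0.25, 0.25, "Natural Log", 0.0),
    (0.25, 0.75, "Square Root", 0.5),
    (0.75, 1.5, "None", 1.0),
    (1.5, 2.5, "Power", 2.0),
]

def recommend(lam):
    """Return (name, lambda) for the row with lower < lam <= upper, else None."""
    for lower, upper, name, ref in RECOMMENDED:
        if lower < lam <= upper:
            return name, ref
    return None

# Hypothetical responses: three levels, four replicates each.
groups = [[8.0, 10.0, 9.0, 9.0], [12.0, 11.0, 13.0, 12.0], [15.0, 14.0, 16.0, 15.0]]
lam = best_lambda(groups)
print(lam, recommend(lam))
```

Values of <math>\lambda \,\!</math> outside the range covered by the table return <code>None</code> in this sketch, since the table does not list a recommendation for them.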

Confidence intervals on the selected <math>\lambda \,\!</math> values are also available. Let <math>S{{S}_{E}}(\lambda )\,\!</math> be the value of <math>S{{S}_{E}}\,\!</math> corresponding to the selected value of <math>\lambda \,\!</math>. Then, to calculate the 100 (1- <math>\alpha \,\!</math>) percent confidence intervals on <math>\lambda \,\!</math>, we need to calculate <math>S{{S}^{*}}\,\!</math> as shown next: | Confidence intervals on the selected <math>\lambda \,\!</math> values are also available. Let <math>S{{S}_{E}}(\lambda )\,\!</math> be the value of <math>S{{S}_{E}}\,\!</math> corresponding to the selected value of <math>\lambda \,\!</math>. Then, to calculate the 100 (1- <math>\alpha \,\!</math>) percent confidence intervals on <math>\lambda \,\!</math>, we need to calculate <math>S{{S}^{*}}\,\!</math> as shown next: | ||

::<math>S{{S}^{*}}=S{{S}_{E}}(\lambda )\left( 1+\frac{t_{\alpha /2,dof(S{{S}_{E}})}^{2}}{dof(S{{S}_{E}})} \right)\,\!</math> | ::<math>S{{S}^{*}}=S{{S}_{E}}(\lambda )\left( 1+\frac{t_{\alpha /2,dof(S{{S}_{E}})}^{2}}{dof(S{{S}_{E}})} \right)\,\!</math> | ||
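The value of <math>S{{S}^{*}}\,\!</math> is straightforward to compute once the minimum <math>S{{S}_{E}}\,\!</math> is known. The Python sketch below also shows one way the limit might be used, under the assumption (not stated in the equation itself) that the confidence limits are the smallest and largest searched <math>\lambda \,\!</math> values whose <math>S{{S}_{E}}\,\!</math> does not exceed <math>S{{S}^{*}}\,\!</math>. All numbers and names are hypothetical.

```python
def ss_star(sse_at_best_lambda, t_crit, dof_e):
    """SS* = SS_E(lambda) * (1 + t_crit**2 / dof(SS_E))."""
    return sse_at_best_lambda * (1.0 + t_crit ** 2 / dof_e)

def lambda_limits(sse_by_lambda, t_crit, dof_e):
    """Smallest and largest searched lambda with SS_E <= SS* (assumed usage)."""
    best = min(sse_by_lambda, key=sse_by_lambda.get)
    limit = ss_star(sse_by_lambda[best], t_crit, dof_e)
    inside = sorted(lam for lam, sse in sse_by_lambda.items() if sse <= limit)
    return inside[0], inside[-1]

# Hypothetical SS_E values from a coarse search, with 9 error degrees of
# freedom and the tabulated t(0.05, 9) = 1.833 for a 90% interval.
sse_by_lambda = {-1.0: 12.0, -0.5: 8.5, 0.0: 6.5, 0.5: 6.0, 1.0: 7.0, 1.5: 9.0}
print(ss_star(6.0, 1.833, 9))
print(lambda_limits(sse_by_lambda, 1.833, 9))
```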

| Line 538: | Line 600: | ||

Note that the power transformations are not defined for response values that are negative or zero. DOE++ deals with negative and zero response values using the following equations (that involve addition of a suitable quantity to all of the response values if a zero or negative response value is encountered). | Note that the power transformations are not defined for response values that are negative or zero. DOE++ deals with negative and zero response values using the following equations (that involve addition of a suitable quantity to all of the response values if a zero or negative response value is encountered). | ||

::<math>\begin{array}{rll}
y\left( i\right) =& y\left( i\right) +\left| y_{\min }\right|\times 1.1 & \text{Negative Response} \\
y\left( i\right) =& y\left( i\right) +1 & \text{Zero Response}
\end{array}\,\!</math>

Here <math>{{y}_{\min }}\,\!</math> represents the minimum response value and <math>\left| {{y}_{\min }} \right|\,\!</math> represents the absolute value of the minimum response. | Here <math>{{y}_{\min }}\,\!</math> represents the minimum response value and <math>\left| {{y}_{\min }} \right|\,\!</math> represents the absolute value of the minimum response. | ||
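The two shifting rules can be sketched as follows. This is an illustration under stated assumptions rather than the DOE++ code: the function name is hypothetical, and it assumes the rule is chosen from the minimum response (negative minimum uses the first rule, a minimum of exactly zero uses the second).

```python
def shift_nonpositive_responses(y):
    """Shift all responses so that a power transformation is defined.

    Assumed reading of the rules above:
    - minimum response negative: add 1.1 * |y_min| to every response
    - minimum response zero:     add 1 to every response
    - otherwise:                 leave the responses unchanged
    """
    y_min = min(y)
    if y_min < 0:
        offset = abs(y_min) * 1.1
    elif y_min == 0:
        offset = 1.0
    else:
        offset = 0.0
    return [v + offset for v in y]
```

With a negative minimum, the smallest shifted response equals <math>0.1\left| {{y}_{\min }} \right|\,\!</math>, which is strictly positive, so every shifted value can be transformed.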

====Example==== | ====Example==== | ||

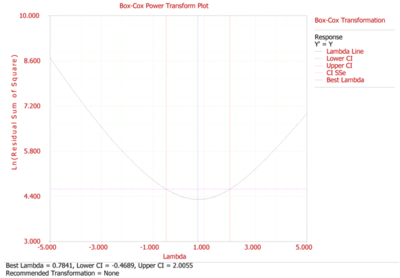

To illustrate the Box-Cox method, consider the experiment given in the first table. Transformed response values for various values of <math>\lambda \,\!</math> can be calculated using the equation for <math>{Y}^{\lambda}\,\!</math> given in [[ANOVA_for_Designed_Experiments#Box-Cox_Method|Box-Cox Method]]. Knowing the hat matrix, <math>H\,\!</math>, <math>S{{S}_{E}}\,\!</math> values corresponding to each of these <math>\lambda \,\!</math> values can easily be obtained using <math>{{y}^{\lambda \prime }}[I-H]{{y}^{\lambda }}\,\!</math>. <math>S{{S}_{E}}\,\!</math> values calculated for <math>\lambda \,\!</math> values between <math>-5\,\!</math> and <math>5\,\!</math> for the given data are shown below: | |||

<center><math>\begin{matrix} | <center><math>\begin{matrix} | ||

| Line 570: | Line 632: | ||

A plot of <math>\ln S{{S}_{E}}\,\!</math> for various <math>\lambda \,\!</math> values, as obtained from DOE++, is shown in the following figure. The value of <math>\lambda \,\!</math> that gives the minimum <math>S{{S}_{E}}\,\!</math> is identified as 0.7841. The <math>S{{S}_{E}}\,\!</math> value corresponding to this value of <math>\lambda \,\!</math> is 73.74. A 90% confidence interval on this <math>\lambda \,\!</math> value is calculated as follows. <math>S{{S}^{*}}\,\!</math> can be obtained as shown next: | A plot of <math>\ln S{{S}_{E}}\,\!</math> for various <math>\lambda \,\!</math> values, as obtained from DOE++, is shown in the following figure. The value of <math>\lambda \,\!</math> that gives the minimum <math>S{{S}_{E}}\,\!</math> is identified as 0.7841. The <math>S{{S}_{E}}\,\!</math> value corresponding to this value of <math>\lambda \,\!</math> is 73.74. A 90% confidence interval on this <math>\lambda \,\!</math> value is calculated as follows. <math>S{{S}^{*}}\,\!</math> can be obtained as shown next: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 577: | Line 640: | ||

= & 101.27 | = & 101.27 | ||

\end{align}\,\!</math> | \end{align}\,\!</math> | ||

Therefore, <math>\ln S{{S}^{*}}=4.6178\,\!</math>. The <math>\lambda \,\!</math> values corresponding to this value from the following figure are <math>-0.4686\,\!</math> and <math>2.0052\,\!</math>. Therefore, the 90% confidence limits on <math>\lambda \,\!</math> are <math>-0.4689\,\!</math> and <math>2.0055\,\!</math>. Since the confidence limits include the value of 1, this indicates that a transformation is not required for the data in the first table.
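The confidence-limit calculation can be sketched in a few lines. This is a hedged illustration: the function names are hypothetical, the critical <math>t\,\!</math> value is passed in by the caller instead of being computed, and the limits are read off a grid of <math>\lambda \,\!</math> values as the outermost points where <math>\ln S{{S}_{E}}\,\!</math> does not exceed <math>\ln S{{S}^{*}}\,\!</math>.

```python
import math


def ss_star(ss_e_min, t_crit, dof_error):
    """SS* = SS_E(lambda) * (1 + t^2 / dof), with t and dof supplied by the caller."""
    return ss_e_min * (1.0 + t_crit ** 2 / dof_error)


def lambda_limits(lambda_grid, ln_ss_e, ln_ss_star):
    """Smallest and largest grid lambda whose ln SS_E is at or below ln SS*.

    A grid-based approximation; a finer grid gives limits closer to the
    values read from the plot.
    """
    inside = [lam for lam, v in zip(lambda_grid, ln_ss_e) if v <= ln_ss_star]
    return (min(inside), max(inside)) if inside else None


# Consistency check against the example: ln(101.27) rounds to 4.6178.
ln_threshold = round(math.log(101.27), 4)
```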

| Line 591: | Line 655: | ||

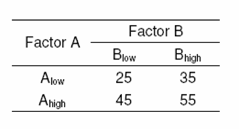

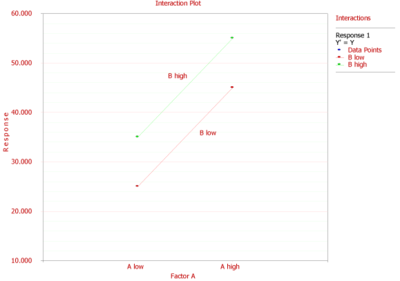

[[Image:doet6.3.png|thumb|center|400px|Two-factor factorial experiment.]] | [[Image:doet6.3.png|thumb|center|400px|Two-factor factorial experiment.]] | ||

[[Image:doe6.8.png|thumb|center|400px|Interaction plot for the data in the third table.]] | [[Image:doe6.8.png|thumb|center|400px|Interaction plot for the data in the third table.]] | ||

| Line 599: | Line 663: | ||

The effect of factor <math>A\,\!</math> on the response can be obtained by taking the difference between the average response when <math>A\,\!</math> is high and the average response when <math>A\,\!</math> is low. The change in the response due to a change in the level of a factor is called the main effect of the factor. The main effect of <math>A\,\!</math> as per the response values in the third table is: | The effect of factor <math>A\,\!</math> on the response can be obtained by taking the difference between the average response when <math>A\,\!</math> is high and the average response when <math>A\,\!</math> is low. The change in the response due to a change in the level of a factor is called the main effect of the factor. The main effect of <math>A\,\!</math> as per the response values in the third table is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 609: | Line 674: | ||

Therefore, when <math>A\,\!</math> is changed from the lower level to the higher level, the response increases by 20 units. A plot of the response for the two levels of <math>A\,\!</math> at different levels of <math>B\,\!</math> is shown in the figure above. The plot shows that change in the level of <math>A\,\!</math> leads to an increase in the response by 20 units regardless of the level of <math>B\,\!</math>. Therefore, no interaction exists in this case as indicated by the parallel lines on the plot. The main effect of <math>B\,\!</math> can be obtained as: | Therefore, when <math>A\,\!</math> is changed from the lower level to the higher level, the response increases by 20 units. A plot of the response for the two levels of <math>A\,\!</math> at different levels of <math>B\,\!</math> is shown in the figure above. The plot shows that change in the level of <math>A\,\!</math> leads to an increase in the response by 20 units regardless of the level of <math>B\,\!</math>. Therefore, no interaction exists in this case as indicated by the parallel lines on the plot. The main effect of <math>B\,\!</math> can be obtained as: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 616: | Line 682: | ||

= & 10 | = & 10 | ||

\end{align}\,\!</math> | \end{align}\,\!</math> | ||
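The main-effect calculation can be sketched as below. The response values are hypothetical, chosen only so that the effects come out as 20 for <math>A\,\!</math> and 10 for <math>B\,\!</math>; they are not claimed to be the values in the third table. Levels are coded as -1 (low) and +1 (high).

```python
def main_effect(responses, factor_index):
    """Average response at the high level minus average response at the low level."""
    high = [y for levels, y in responses.items() if levels[factor_index] == +1]
    low = [y for levels, y in responses.items() if levels[factor_index] == -1]
    return sum(high) / len(high) - sum(low) / len(low)


# Keys are (level of A, level of B); values are hypothetical responses.
responses = {
    (-1, -1): 25.0,
    (+1, -1): 45.0,
    (-1, +1): 35.0,
    (+1, +1): 55.0,
}

effect_a = main_effect(responses, 0)
effect_b = main_effect(responses, 1)

# Effect of A at each level of B; equal values correspond to parallel lines
# on the interaction plot, that is, no interaction.
effect_a_at_b_low = responses[(+1, -1)] - responses[(-1, -1)]
effect_a_at_b_high = responses[(+1, +1)] - responses[(-1, +1)]
```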

===Investigating Interactions=== | ===Investigating Interactions=== | ||

| Line 621: | Line 688: | ||

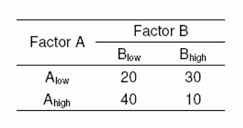

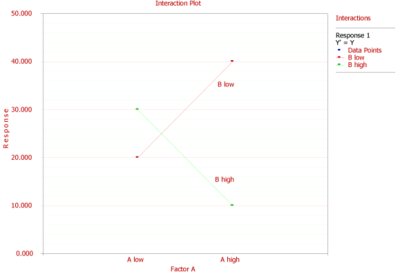

Now assume that the response values for each of the four treatment combinations were obtained as shown in the fourth table. The main effect of <math>A\,\!</math> in this case is: | Now assume that the response values for each of the four treatment combinations were obtained as shown in the fourth table. The main effect of <math>A\,\!</math> in this case is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 629: | Line 697: | ||

[[Image:doet6.4.png|thumb|center|400px|Two-factor factorial experiment.]]

| Line 650: | Line 718: | ||

==Analysis of General Factorial Experiments== | ==Analysis of General Factorial Experiments== | ||

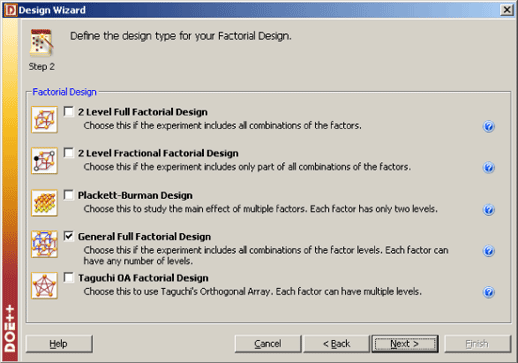

In DOE++, factorial experiments are referred to as ''factorial designs''. The experiments explained in this section are referred to as ''general factorial designs''. This is done to distinguish these experiments from the other factorial designs supported by DOE++ (see the figure below).


[[Image:doe6.10.png|thumb|center|518px|Factorial experiments available in DOE++.]] | |||

The other designs (such as the two level full factorial designs that are explained in [[Two_Level_Factorial_Experiments| Two Level Factorial Experiments]]) are special cases of these experiments in which factors are limited to a specified number of levels. The ANOVA model for the analysis of factorial experiments is formulated as shown next. Assume a factorial experiment in which the effect of two factors, <math>A\,\!</math> and <math>B\,\!</math>, on the response is being investigated. Let there be <math>{{n}_{a}}\,\!</math> levels of factor <math>A\,\!</math> and <math>{{n}_{b}}\,\!</math> levels of factor <math>B\,\!</math>. The ANOVA model for this experiment can be stated as: | |||

::<math>{{Y}_{ijk}}=\mu +{{\tau }_{i}}+{{\delta }_{j}}+{{(\tau \delta )}_{ij}}+{{\epsilon }_{ijk}}\,\!</math> | |||

where: | where: | ||


*<math>\mu \,\!</math> represents the overall mean effect | |||

*<math>{{\tau }_{i}}\,\!</math> is the effect of the <math>i\,\!</math>th level of factor <math>A\,\!</math> (<math>i=1,2,...,{{n}_{a}}\,\!</math>) | |||

*<math>{{\delta }_{j}}\,\!</math> is the effect of the <math>j\,\!</math>th level of factor <math>B\,\!</math> (<math>j=1,2,...,{{n}_{b}}\,\!</math>) | |||

*<math>{{(\tau \delta )}_{ij}}\,\!</math> represents the interaction effect between <math>A\,\!</math> and <math>B\,\!</math> | |||

*<math>{{\epsilon }_{ijk}}\,\!</math> represents the random error terms (which are assumed to be normally distributed with a mean of zero and variance of <math>{{\sigma }^{2}}\,\!</math>) | |||

*and the subscript <math>k\,\!</math> denotes the <math>m\,\!</math> replicates (<math>k=1,2,...,m\,\!</math>) | |||

Since the effects <math>{{\tau }_{i}}\,\!</math>, <math>{{\delta }_{j}}\,\!</math> and <math>{{(\tau \delta )}_{ij}}\,\!</math> represent deviations from the overall mean, the following constraints exist: | Since the effects <math>{{\tau }_{i}}\,\!</math>, <math>{{\delta }_{j}}\,\!</math> and <math>{{(\tau \delta )}_{ij}}\,\!</math> represent deviations from the overall mean, the following constraints exist: | ||

::<math>\underset{i=1}{\overset{{{n}_{a}}}{\mathop \sum }}\,{{\tau }_{i}}=0\,\!</math> | ::<math>\underset{i=1}{\overset{{{n}_{a}}}{\mathop \sum }}\,{{\tau }_{i}}=0\,\!</math> | ||

::<math>\underset{j=1}{\overset{{{n}_{b}}}{\mathop \sum }}\,{{\delta }_{j}}=0\,\!</math> | |||

::<math>\underset{i=1}{\overset{{{n}_{a}}}{\mathop \sum }}\,{{(\tau \delta )}_{ij}}=0\,\!</math> | |||

::<math>\underset{j=1}{\overset{{{n}_{b}}}{\mathop \sum }}\,{{(\tau \delta )}_{ij}}=0\,\!</math>
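The sum-to-zero constraints follow directly from defining each effect as a deviation from the overall mean. A minimal sketch, assuming a balanced experiment (equal replicates per treatment combination) so that effects can be computed from the table of cell means; the function name and the numbers used in the usage lines are hypothetical.

```python
def effects_from_cell_means(cell_means):
    """Estimate mu, tau_i, delta_j and (tau delta)_ij from an n_a x n_b table
    of cell means (rows are levels of A, columns are levels of B)."""
    n_a, n_b = len(cell_means), len(cell_means[0])
    mu = sum(sum(row) for row in cell_means) / (n_a * n_b)
    row_means = [sum(row) / n_b for row in cell_means]
    col_means = [sum(cell_means[i][j] for i in range(n_a)) / n_a
                 for j in range(n_b)]
    tau = [r - mu for r in row_means]
    delta = [c - mu for c in col_means]
    interaction = [[cell_means[i][j] - row_means[i] - col_means[j] + mu
                    for j in range(n_b)] for i in range(n_a)]
    return mu, tau, delta, interaction


# Hypothetical cell means for three levels of A and two levels of B.
mu, tau, delta, interaction = effects_from_cell_means(
    [[18.0, 19.0], [22.0, 25.0], [20.0, 22.0]]
)
```

Summing `tau`, summing `delta`, and summing `interaction` along either index each give zero (up to floating-point rounding), which are the four constraints listed above.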

===Hypothesis Tests in General Factorial Experiments=== | ===Hypothesis Tests in General Factorial Experiments=== | ||

These tests are used to check whether each of the factors investigated in the experiment is significant or not. For the previous example, with two factors, <math>A\,\!</math> and <math>B\,\!</math>, and their interaction, <math>AB\,\!</math>, the statements for the hypothesis tests can be formulated as follows: | These tests are used to check whether each of the factors investigated in the experiment is significant or not. For the previous example, with two factors, <math>A\,\!</math> and <math>B\,\!</math>, and their interaction, <math>AB\,\!</math>, the statements for the hypothesis tests can be formulated as follows: | ||

::<math>\begin{align}
& \begin{matrix}
1. & {} \\
\end{matrix}{{H}_{0}}:{{\tau }_{1}}={{\tau }_{2}}=...={{\tau }_{{{n}_{a}}}}=0\text{ (Main effect of }A\text{ is absent)} \\
& \begin{matrix}
{} & {} \\
\end{matrix}{{H}_{1}}:{{\tau }_{i}}\ne 0\text{ for at least one }i \\
& \begin{matrix}
2. & {} \\
\end{matrix}{{H}_{0}}:{{\delta }_{1}}={{\delta }_{2}}=...={{\delta }_{{{n}_{b}}}}=0\text{ (Main effect of }B\text{ is absent)} \\
& \begin{matrix}
{} & {} \\
\end{matrix}{{H}_{1}}:{{\delta }_{j}}\ne 0\text{ for at least one }j \\
& \begin{matrix}
3. & {} \\
\end{matrix}{{H}_{0}}:{{(\tau \delta )}_{11}}={{(\tau \delta )}_{12}}=...={{(\tau \delta )}_{{{n}_{a}}{{n}_{b}}}}=0\text{ (Interaction }AB\text{ is absent)} \\
& \begin{matrix}
{} & {} \\
\end{matrix}{{H}_{1}}:{{(\tau \delta )}_{ij}}\ne 0\text{ for at least one }ij
\end{align}\,\!</math>

The test statistics for the three tests are as follows: | The test statistics for the three tests are as follows: | ||

::1)<math>{(F_{0})}_{A} = \frac{MS_{A}}{MS_{E}}\,\!</math><br> | ::1)<math>{(F_{0})}_{A} = \frac{MS_{A}}{MS_{E}}\,\!</math><br> | ||

| Line 717: | Line 796: | ||

The tests are identical to the partial <math>F\,\!</math> test explained in [[Multiple_Linear_Regression_Analysis| Multiple Linear Regression Analysis]]. The sum of squares for these tests (to obtain the mean squares) are calculated by splitting the model sum of squares into the extra sum of squares due to each factor. The extra sum of squares calculated for each of the factors may either be partial or sequential. For the present example, if the extra sum of squares used is sequential, then the model sum of squares can be written as: | The tests are identical to the partial <math>F\,\!</math> test explained in [[Multiple_Linear_Regression_Analysis| Multiple Linear Regression Analysis]]. The sum of squares for these tests (to obtain the mean squares) are calculated by splitting the model sum of squares into the extra sum of squares due to each factor. The extra sum of squares calculated for each of the factors may either be partial or sequential. For the present example, if the extra sum of squares used is sequential, then the model sum of squares can be written as: | ||

::<math>S{{S}_{TR}}=S{{S}_{A}}+S{{S}_{B}}+S{{S}_{AB}}\,\!</math> | ::<math>S{{S}_{TR}}=S{{S}_{A}}+S{{S}_{B}}+S{{S}_{AB}}\,\!</math> | ||

| Line 722: | Line 802: | ||

where <math>S{{S}_{TR}}\,\!</math> represents the model sum of squares, <math>S{{S}_{A}}\,\!</math> represents the sequential sum of squares due to factor <math>A\,\!</math>, <math>S{{S}_{B}}\,\!</math> represents the sequential sum of squares due to factor <math>B\,\!</math> and <math>S{{S}_{AB}}\,\!</math> represents the sequential sum of squares due to the interaction <math>AB\,\!</math>.

The mean squares are obtained by dividing the sum of squares by the associated degrees of freedom. Once the mean squares are known the test statistics can be calculated. For example, the test statistic to test the significance of factor <math>A\,\!</math> (or the hypothesis <math>{{H}_{0}}:{{\tau }_{i}}=0\,\!</math>) can then be obtained as:

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 731: | Line 812: | ||

Similarly the test statistic to test significance of factor <math>B\,\!</math> and the interaction <math>AB\,\!</math> can be respectively obtained as: | Similarly the test statistic to test significance of factor <math>B\,\!</math> and the interaction <math>AB\,\!</math> can be respectively obtained as: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 757: | Line 839: | ||

& \begin{matrix}
1. & {} \\
\end{matrix}{{H}_{0}}:{{\tau }_{1}}={{\tau }_{2}}={{\tau }_{3}}=0\text{ (No main effect of factor }A\text{, speed)} \\
& \begin{matrix}
{} & {} \\
\end{matrix}{{H}_{1}}:{{\tau }_{i}}\ne 0\text{ for at least one }i \\
& \begin{matrix}
2. & {} \\
\end{matrix}{{H}_{0}}:{{\delta }_{1}}={{\delta }_{2}}=0\text{ (No main effect of factor }B\text{, fuel additive)} \\
& \begin{matrix}
{} & {} \\
\end{matrix}{{H}_{1}}:{{\delta }_{j}}\ne 0\text{ for at least one }j \\
& \begin{matrix}
3. & {} \\
\end{matrix}{{H}_{0}}:{{(\tau \delta )}_{11}}={{(\tau \delta )}_{12}}=...={{(\tau \delta )}_{32}}=0\text{ (No interaction }AB\text{)} \\
& \begin{matrix}
{} & {} \\
\end{matrix}{{H}_{1}}:{{(\tau \delta )}_{ij}}\ne 0\text{ for at least one }ij

\end{align}\,\!</math> | \end{align}\,\!</math> | ||

The test statistics for the three tests are: | The test statistics for the three tests are: | ||

::1.<math>{{({{F}_{0}})}_{A}}=\frac{M{{S}_{A}}}{M{{S}_{E}}}\,\!</math><br> | ::1.<math>{{({{F}_{0}})}_{A}}=\frac{M{{S}_{A}}}{M{{S}_{E}}}\,\!</math><br> | ||

:::where <math>M{{S}_{A}}\,\!</math> is the mean square for factor <math>A\,\!</math> and <math>M{{S}_{E}}\,\!</math> is the error mean square | :::where <math>M{{S}_{A}}\,\!</math> is the mean square for factor <math>A\,\!</math> and <math>M{{S}_{E}}\,\!</math> is the error mean square | ||

::2.<math>{{({{F}_{0}})}_{B}}=\frac{M{{S}_{B}}}{M{{S}_{E}}}\,\!</math> | ::2.<math>{{({{F}_{0}})}_{B}}=\frac{M{{S}_{B}}}{M{{S}_{E}}}\,\!</math> | ||

:::where <math>M{{S}_{B}}\,\!</math> is the mean square for factor <math>B\,\!</math> and <math>M{{S}_{E}}\,\!</math> is the error mean square | :::where <math>M{{S}_{B}}\,\!</math> is the mean square for factor <math>B\,\!</math> and <math>M{{S}_{E}}\,\!</math> is the error mean square | ||

::3.<math>{{({{F}_{0}})}_{AB}}=\frac{M{{S}_{AB}}}{M{{S}_{E}}}\,\!</math> | ::3.<math>{{({{F}_{0}})}_{AB}}=\frac{M{{S}_{AB}}}{M{{S}_{E}}}\,\!</math> | ||

| Line 787: | Line 873: | ||

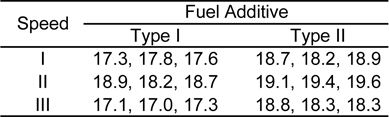

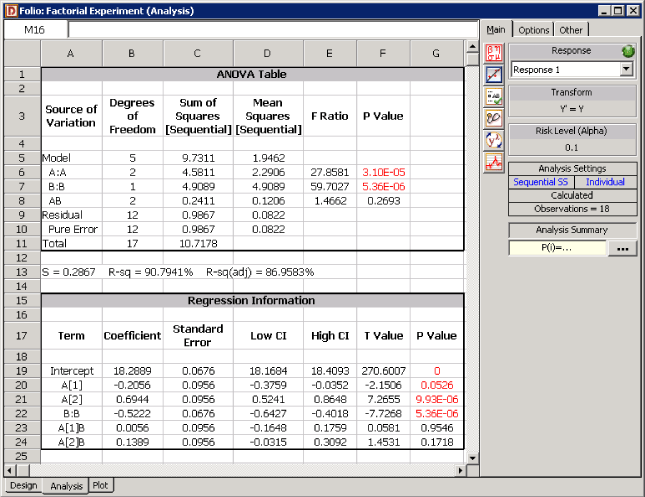

[[Image:doe6.11.png|thumb|center|639px|Experimental design for the data in the fifth table.]] | |||

The ANOVA model for this experiment can be written as: | The ANOVA model for this experiment can be written as: | ||

::<math>{{Y}_{ijk}}=\mu +{{\tau }_{i}}+{{\delta }_{j}}+{{(\tau \delta )}_{ij}}+{{\epsilon }_{ijk}}\,\!</math> | ::<math>{{Y}_{ijk}}=\mu +{{\tau }_{i}}+{{\delta }_{j}}+{{(\tau \delta )}_{ij}}+{{\epsilon }_{ijk}}\,\!</math> | ||

where <math>{{\tau }_{i}}\,\!</math> represents the <math>i\,\!</math>th treatment of factor <math>A\,\!</math> (speed) with <math>i\,\!</math> =1, 2, 3; <math>{{\delta }_{j}}\,\!</math> represents the <math>j\,\!</math>th treatment of factor <math>B\,\!</math> (fuel additive) with <math>j\,\!</math> =1, 2; and <math>{{(\tau \delta )}_{ij}}\,\!</math> represents the interaction effect. In order to calculate the test statistics, it is convenient to express the ANOVA model of the equation given above in the form <math>y=X\beta +\epsilon \,\!</math>. This can be done as explained next. | where <math>{{\tau }_{i}}\,\!</math> represents the <math>i\,\!</math>th treatment of factor <math>A\,\!</math> (speed) with <math>i\,\!</math> =1, 2, 3; <math>{{\delta }_{j}}\,\!</math> represents the <math>j\,\!</math>th treatment of factor <math>B\,\!</math> (fuel additive) with <math>j\,\!</math> =1, 2; and <math>{{(\tau \delta )}_{ij}}\,\!</math> represents the interaction effect. In order to calculate the test statistics, it is convenient to express the ANOVA model of the equation given above in the form <math>y=X\beta +\epsilon \,\!</math>. This can be done as explained next. | ||

| Line 800: | Line 888: | ||

Since the effects <math>{{\tau }_{i}}\,\!</math>, <math>{{\delta }_{j}}\,\!</math> and <math>{{(\tau \delta )}_{ij}}\,\!</math> represent deviations from the overall mean, the following constraints exist. | Since the effects <math>{{\tau }_{i}}\,\!</math>, <math>{{\delta }_{j}}\,\!</math> and <math>{{(\tau \delta )}_{ij}}\,\!</math> represent deviations from the overall mean, the following constraints exist. | ||

Constraints on <math>{{\tau }_{i}}\,\!</math> are: | Constraints on <math>{{\tau }_{i}}\,\!</math> are: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 807: | Line 896: | ||

Therefore, only two of the <math>{{\tau }_{i}}\,\!</math> effects are independent. Assuming that <math>{{\tau }_{1}}\,\!</math> and <math>{{\tau }_{2}}\,\!</math> are independent, <math>{{\tau }_{3}}=-({{\tau }_{1}}+{{\tau }_{2}})\,\!</math>. (The null hypothesis to test the significance of factor <math>A\,\!</math> can be rewritten using only the independent effects as <math>{{H}_{0}}:{{\tau }_{1}}={{\tau }_{2}}=0\,\!</math>.) DOE++ displays only the independent effects because only these effects are important to the analysis. The independent effects, <math>{{\tau }_{1}}\,\!</math> and <math>{{\tau }_{2}}\,\!</math>, are displayed as A[1] and A[2] respectively because these are the effects associated with factor <math>A\,\!</math> (speed).

Constraints on <math>{{\delta }_{j}}\,\!</math> are: | Constraints on <math>{{\delta }_{j}}\,\!</math> are: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 816: | Line 906: | ||

Therefore, only one of the <math>{{\delta }_{j}}\,\!</math> effects is independent. Assuming that <math>{{\delta }_{1}}\,\!</math> is independent, <math>{{\delta }_{2}}=-{{\delta }_{1}}\,\!</math>. (The null hypothesis to test the significance of factor <math>B\,\!</math> can be rewritten using only the independent effect as <math>{{H}_{0}}:{{\delta }_{1}}=0\,\!</math>.) The independent effect <math>{{\delta }_{1}}\,\!</math> is displayed as B:B in DOE++.

Constraints on <math>{{(\tau \delta )}_{ij}}\,\!</math> are: | Constraints on <math>{{(\tau \delta )}_{ij}}\,\!</math> are: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 830: | Line 921: | ||

The last five equations given above represent four constraints, as only four of these five equations are independent. Therefore, only two out of the six <math>{{(\tau \delta )}_{ij}}\,\!</math> effects are independent. Assuming that <math>{{(\tau \delta )}_{11}}\,\!</math> and <math>{{(\tau \delta )}_{21}}\,\!</math> are independent, the other four effects can be expressed in terms of these effects. (The null hypothesis to test the significance of interaction <math>AB\,\!</math> can be rewritten using only the independent effects as <math>{{H}_{0}}:{{(\tau \delta )}_{11}}={{(\tau \delta )}_{21}}=0\,\!</math>.) The effects <math>{{(\tau \delta )}_{11}}\,\!</math> and <math>{{(\tau \delta )}_{21}}\,\!</math> are displayed as A[1]B and A[2]B respectively in DOE++.

The regression version of the ANOVA model can be obtained using indicator variables, similar to the case of the single factor experiment in [[ANOVA_for_Designed_Experiments#Fitting_ANOVA_Models|Fitting ANOVA Models]]. Since factor <math>A\,\!</math> has three levels, two indicator variables, <math>{{x}_{1}}\,\!</math> and <math>{{x}_{2}}\,\!</math>, are required which need to be coded as shown next: | The regression version of the ANOVA model can be obtained using indicator variables, similar to the case of the single factor experiment in [[ANOVA_for_Designed_Experiments#Fitting_ANOVA_Models|Fitting ANOVA Models]]. Since factor <math>A\,\!</math> has three levels, two indicator variables, <math>{{x}_{1}}\,\!</math> and <math>{{x}_{2}}\,\!</math>, are required which need to be coded as shown next: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 843: | Line 935: | ||

Factor <math>B\,\!</math> has two levels and can be represented using one indicator variable, <math>{{x}_{3}}\,\!</math>, as follows: | Factor <math>B\,\!</math> has two levels and can be represented using one indicator variable, <math>{{x}_{3}}\,\!</math>, as follows: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

\text{Treatment Effect }{{\delta }_{1}}: & {{x}_{3}}=1 \\ | \text{Treatment Effect }{{\delta }_{1}}: & {{x}_{3}}=1 \\ | ||

| Line 851: | Line 943: | ||

The <math>AB\,\!</math> interaction will be represented by all possible terms resulting from the product of the indicator variables representing factors <math>A\,\!</math> and <math>B\,\!</math>. There are two such terms here - <math>{{x}_{1}}{{x}_{3}}\,\!</math> and <math>{{x}_{2}}{{x}_{3}}\,\!</math>. The regression version of the ANOVA model can finally be obtained as: | The <math>AB\,\!</math> interaction will be represented by all possible terms resulting from the product of the indicator variables representing factors <math>A\,\!</math> and <math>B\,\!</math>. There are two such terms here - <math>{{x}_{1}}{{x}_{3}}\,\!</math> and <math>{{x}_{2}}{{x}_{3}}\,\!</math>. The regression version of the ANOVA model can finally be obtained as: | ||

::<math>Y=\mu +{{\tau }_{1}}\cdot {{x}_{1}}+{{\tau }_{2}}\cdot {{x}_{2}}+{{\delta }_{1}}\cdot {{x}_{3}}+{{(\tau \delta )}_{11}}\cdot {{x}_{1}}{{x}_{3}}+{{(\tau \delta )}_{21}}\cdot {{x}_{2}}{{x}_{3}}+\epsilon \,\!</math> | ::<math>Y=\mu +{{\tau }_{1}}\cdot {{x}_{1}}+{{\tau }_{2}}\cdot {{x}_{2}}+{{\delta }_{1}}\cdot {{x}_{3}}+{{(\tau \delta )}_{11}}\cdot {{x}_{1}}{{x}_{3}}+{{(\tau \delta )}_{21}}\cdot {{x}_{2}}{{x}_{3}}+\epsilon \,\!</math> | ||
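One row of the <math>X\,\!</math> matrix for this regression model can be built from the factor levels as sketched below. The coding used here is an assumption inferred from the constraints <math>{{\tau }_{3}}=-({{\tau }_{1}}+{{\tau }_{2}})\,\!</math> and <math>{{\delta }_{2}}=-{{\delta }_{1}}\,\!</math> (effect coding, where the last level of each factor is coded as -1); the function and table names are hypothetical.

```python
# Assumed effect coding for factor A (three levels): values of (x1, x2).
A_CODING = {1: (1, 0), 2: (0, 1), 3: (-1, -1)}
# Assumed effect coding for factor B (two levels): value of x3.
B_CODING = {1: 1, 2: -1}


def design_row(level_a, level_b):
    """Row [1, x1, x2, x3, x1*x3, x2*x3] matching the coefficient order
    mu, tau_1, tau_2, delta_1, (tau delta)_11, (tau delta)_21."""
    x1, x2 = A_CODING[level_a]
    x3 = B_CODING[level_b]
    return [1, x1, x2, x3, x1 * x3, x2 * x3]


# All six treatment combinations of a 3 x 2 factorial, one row each;
# replicates would simply repeat these rows.
rows = [design_row(a, b) for a in (1, 2, 3) for b in (1, 2)]
```

Under this coding every column other than the intercept sums to zero over the six treatment combinations, which mirrors the sum-to-zero constraints on the effects.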

| Line 856: | Line 949: | ||

In matrix notation this model can be expressed as: | In matrix notation this model can be expressed as: | ||

::<math>y=X\beta +\epsilon \,\!</math> | ::<math>y=X\beta +\epsilon \,\!</math> | ||

:where: | :where: | ||

::<math>y=\left[ \begin{matrix} | ::<math>y=\left[ \begin{matrix} | ||

| Line 942: | Line 1,038: | ||

The model sum of squares, <math>S{{S}_{TR}}\,\!</math>, for the regression version of the ANOVA model can be obtained as: | The model sum of squares, <math>S{{S}_{TR}}\,\!</math>, for the regression version of the ANOVA model can be obtained as: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 953: | Line 1,050: | ||

The total sum of squares, <math>S{{S}_{T}}\,\!</math>, can be calculated as: | The total sum of squares, <math>S{{S}_{T}}\,\!</math>, can be calculated as: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 962: | Line 1,060: | ||

Since there are 18 observed response values, the number of degrees of freedom associated with the total sum of squares is 17 (<math>dof(S{{S}_{T}})=17\,\!</math>). The error sum of squares can now be obtained: | Since there are 18 observed response values, the number of degrees of freedom associated with the total sum of squares is 17 (<math>dof(S{{S}_{T}})=17\,\!</math>). The error sum of squares can now be obtained: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 971: | Line 1,070: | ||

Since there are three replicates of the full factorial experiment, all of the error sum of squares is pure error. (This can also be seen from the preceding figure, where each treatment combination of the full factorial design is repeated three times.) The number of degrees of freedom associated with the error sum of squares is: | Since there are three replicates of the full factorial experiment, all of the error sum of squares is pure error. (This can also be seen from the preceding figure, where each treatment combination of the full factorial design is repeated three times.) The number of degrees of freedom associated with the error sum of squares is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 982: | Line 1,082: | ||

The sequential sum of squares for factor <math>A\,\!</math> can be calculated as: | The sequential sum of squares for factor <math>A\,\!</math> can be calculated as: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 990: | Line 1,091: | ||

where <math>{{H}_{\mu ,{{\tau }_{1}},{{\tau }_{2}}}}={{X}_{\mu ,{{\tau }_{1}},{{\tau }_{2}}}}{{(X_{\mu ,{{\tau }_{1}},{{\tau }_{2}}}^{\prime }{{X}_{\mu ,{{\tau }_{1}},{{\tau }_{2}}}})}^{-1}}X_{\mu ,{{\tau }_{1}},{{\tau }_{2}}}^{\prime }\,\!</math> and <math>{{X}_{\mu ,{{\tau }_{1}},{{\tau }_{2}}}}\,\!</math> is the matrix containing only the first three columns of the <math>X\,\!</math> matrix. Thus: | where <math>{{H}_{\mu ,{{\tau }_{1}},{{\tau }_{2}}}}={{X}_{\mu ,{{\tau }_{1}},{{\tau }_{2}}}}{{(X_{\mu ,{{\tau }_{1}},{{\tau }_{2}}}^{\prime }{{X}_{\mu ,{{\tau }_{1}},{{\tau }_{2}}}})}^{-1}}X_{\mu ,{{\tau }_{1}},{{\tau }_{2}}}^{\prime }\,\!</math> and <math>{{X}_{\mu ,{{\tau }_{1}},{{\tau }_{2}}}}\,\!</math> is the matrix containing only the first three columns of the <math>X\,\!</math> matrix. Thus: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 1,001: | Line 1,103: | ||

Similarly, the sum of squares for factor <math>B\,\!</math> can be calculated as: | Similarly, the sum of squares for factor <math>B\,\!</math> can be calculated as: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 1,013: | Line 1,116: | ||

The sum of squares for the interaction <math>AB\,\!</math> is: | The sum of squares for the interaction <math>AB\,\!</math> is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 1,027: | Line 1,131: | ||

Knowing the sum of squares, the test statistic for each of the factors can be calculated. Analyzing the interaction first, the test statistic for interaction <math>AB\,\!</math> is: | Knowing the sum of squares, the test statistic for each of the factors can be calculated. Analyzing the interaction first, the test statistic for interaction <math>AB\,\!</math> is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 1,036: | Line 1,141: | ||

The <math>p\,\!</math> value corresponding to this statistic, based on the <math>F\,\!</math> distribution with 2 degrees of freedom in the numerator and 12 degrees of freedom in the denominator, is: | The <math>p\,\!</math> value corresponding to this statistic, based on the <math>F\,\!</math> distribution with 2 degrees of freedom in the numerator and 12 degrees of freedom in the denominator, is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 1,043: | Line 1,149: | ||

\end{align}\,\!</math> | \end{align}\,\!</math> | ||
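When the numerator has exactly 2 degrees of freedom, the upper-tail probability of the <math>F\,\!</math> distribution has a closed form, so the <math>p\,\!</math> value can be checked without statistical tables. The sketch below illustrates this for 2 and 12 degrees of freedom. The function name <code>f_upper_tail_num2</code> and the value of the test statistic are assumptions made for illustration; they are not the values of this example.

```python
def f_upper_tail_num2(f0, den_df):
    """P(F > f0) for an F distribution with 2 numerator degrees of
    freedom and den_df denominator degrees of freedom.

    Valid only for 2 numerator degrees of freedom, where the tail
    probability reduces to (1 + 2*f0/den_df) ** (-den_df/2).
    """
    if f0 < 0:
        raise ValueError("an F statistic cannot be negative")
    return (1.0 + 2.0 * f0 / den_df) ** (-den_df / 2.0)


f0 = 1.4       # hypothetical test statistic, not taken from the example
alpha = 0.1    # significance level used in the text

p_value = f_upper_tail_num2(f0, 12)
decision = "reject H0" if p_value < alpha else "fail to reject H0"
print(p_value, decision)
```

For other numerator degrees of freedom this shortcut does not apply and a general <math>F\,\!</math> distribution routine is needed.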

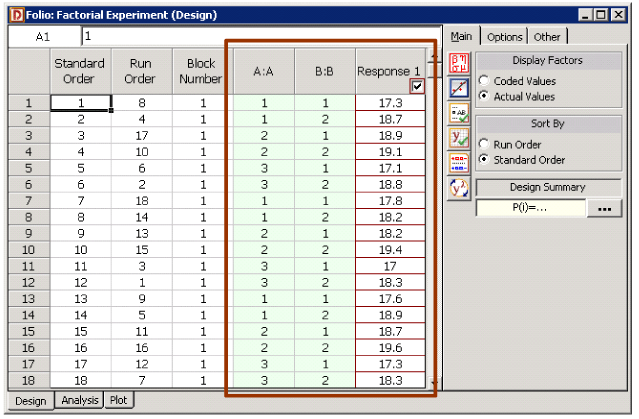

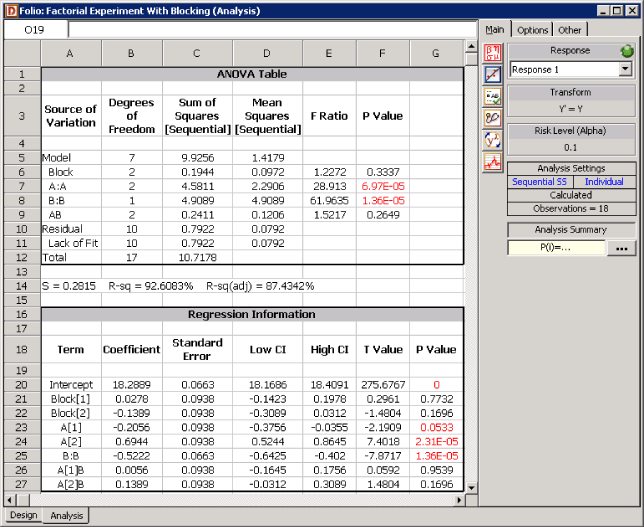


Assuming that the desired significance level is 0.1, since <math>p\,\!</math> value > 0.1, we fail to reject <math>{{H}_{0}}:{{(\tau \delta )}_{ij}}=0\,\!</math> and conclude that the interaction between speed and fuel additive does not significantly affect the mileage of the sports utility vehicle. DOE++ displays this result in the ANOVA table, as shown in the following figure. In the absence of the interaction, the analysis of main effects becomes important. | |||

The test statistic for factor <math>A\,\!</math> is: | The test statistic for factor <math>A\,\!</math> is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 1,058: | Line 1,166: | ||

The <math>p\,\!</math> value corresponding to this statistic based on the <math>F\,\!</math> distribution with 2 degrees of freedom in the numerator and 12 degrees of freedom in the denominator is: | The <math>p\,\!</math> value corresponding to this statistic based on the <math>F\,\!</math> distribution with 2 degrees of freedom in the numerator and 12 degrees of freedom in the denominator is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 1,066: | Line 1,175: | ||

Since <math>p\,\!</math> value < 0.1, <math>{{H}_{0}} | Since <math>p\,\!</math> value < 0.1, <math>{{H}_{0}}:{{\tau }_{i}}=0\,\!</math> is rejected and it is concluded that factor <math>A\,\!</math> (or speed) has a significant effect on the mileage. | ||

The test statistic for factor <math>B\,\!</math> is: | The test statistic for factor <math>B\,\!</math> is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 1,078: | Line 1,188: | ||

The <math>p\,\!</math> value corresponding to this statistic based on the <math>F\,\!</math> distribution with 1 degree of freedom in the numerator (fuel additive type has two levels) and 12 degrees of freedom in the denominator is: | The <math>p\,\!</math> value corresponding to this statistic based on the <math>F\,\!</math> distribution with 1 degree of freedom in the numerator (fuel additive type has two levels) and 12 degrees of freedom in the denominator is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 1,086: | Line 1,197: | ||

Since <math>p\,\!</math> value < 0.1, <math>{{H}_{0}} | Since <math>p\,\!</math> value < 0.1, <math>{{H}_{0}}:{{\delta }_{j}}=0\,\!</math> is rejected and it is concluded that factor <math>B\,\!</math> (or fuel additive type) has a significant effect on the mileage. | ||

Therefore, it can be concluded that speed and fuel additive type affect the mileage of the vehicle significantly. The results are displayed in the ANOVA table of the following figure. | Therefore, it can be concluded that speed and fuel additive type affect the mileage of the vehicle significantly. The results are displayed in the ANOVA table of the following figure. | ||

[[Image:doe6.12.png|thumb|center|645px|Analysis results for the experiment in the fifth table.]] | |||

====Calculation of Effect Coefficients==== | ====Calculation of Effect Coefficients==== | ||

The effect coefficients for the regression version of the ANOVA model are displayed in the Regression Information table in the following figure. The calculation of the results in this table is discussed next. The effect coefficients can be calculated as follows: | The effect coefficients for the regression version of the ANOVA model are displayed in the Regression Information table in the following figure. The calculation of the results in this table is discussed next. The effect coefficients can be calculated as follows: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 1,105: | Line 1,221: | ||

\end{align}\,\!</math> | \end{align}\,\!</math> | ||

Therefore, <math>\hat{\mu }=18.2889\,\!</math>, <math>{{\hat{\tau }}_{1}}=-0.2056\,\!</math>, <math>{{\hat{\tau }}_{2}}=0.6944\,\!</math>, and so on. As mentioned previously, these coefficients are displayed as Intercept, A[1] and A[2], respectively, depending on the name of the factor used in the experimental design. The standard error for each of these estimates is obtained from the diagonal elements of the variance-covariance matrix <math>C\,\!</math>. | |||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 1,125: | Line 1,240: | ||

For example, the standard error for <math>{{\hat{\tau }}_{1}}\,\!</math> is: | For example, the standard error for <math>{{\hat{\tau }}_{1}}\,\!</math> is: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 1,134: | Line 1,250: | ||

Then the <math>t\,\!</math> statistic for <math>{{\hat{\tau }}_{1}}\,\!</math> can be obtained as: | Then the <math>t\,\!</math> statistic for <math>{{\hat{\tau }}_{1}}\,\!</math> can be obtained as: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 1,146: | Line 1,263: | ||

Confidence intervals on <math>{{\tau }_{1}}\,\!</math> can also be calculated. The 90% limits on <math>{{\tau }_{1}}\,\!</math> are: | Confidence intervals on <math>{{\tau }_{1}}\,\!</math> can also be calculated. The 90% limits on <math>{{\tau }_{1}}\,\!</math> are: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 1,170: | Line 1,288: | ||
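The chain from effect coefficient to standard error, <math>t\,\!</math> statistic and confidence limits can be traced on a small data set. The sketch below is an illustration under stated assumptions, not the DOE++ calculation: the responses are made up, the design is assumed balanced (three levels of <math>A\,\!</math>, two levels of <math>B\,\!</math>, three replicates) with sum-to-zero coding, and the critical value 1.782 is the tabulated <math>{{t}_{0.05,12}}\,\!</math>. Under these assumptions <math>{{\hat{\tau }}_{1}}\,\!</math> equals the mean at the first level of <math>A\,\!</math> minus the grand mean, and its variance is <math>{{\sigma }^{2}}(a-1)/N\,\!</math>, where <math>a\,\!</math> is the number of levels of <math>A\,\!</math> and <math>N\,\!</math> is the total number of runs.

```python
from statistics import mean

# Hypothetical responses: data[a][b] holds the replicates for level a of
# factor A and level b of factor B.
data = [
    [[17.3, 17.9, 18.1], [18.9, 19.1, 18.8]],
    [[18.2, 18.5, 18.9], [19.4, 19.6, 19.2]],
    [[17.1, 17.5, 17.0], [18.0, 18.3, 17.9]],
]
a_levels, b_levels, reps = 3, 2, 3
n_total = a_levels * b_levels * reps

# Effect coefficients for a balanced design with sum-to-zero coding.
mu_hat = mean(v for row in data for cell in row for v in cell)
tau1_hat = mean(v for cell in data[0] for v in cell) - mu_hat

# Error mean square of the full model (each cell has its own mean).
ss_e = sum((v - mean(cell)) ** 2 for row in data for cell in row for v in cell)
df_e = n_total - a_levels * b_levels
ms_e = ss_e / df_e

# Standard error of tau1_hat: sqrt(MS_E * (a - 1) / N) for this design.
se_tau1 = (ms_e * (a_levels - 1) / n_total) ** 0.5
t0 = tau1_hat / se_tau1

T_CRIT = 1.782  # tabulated t value for 0.05 upper tail, 12 degrees of freedom
lower = tau1_hat - T_CRIT * se_tau1
upper = tau1_hat + T_CRIT * se_tau1

print(mu_hat, tau1_hat, se_tau1, t0, (lower, upper))
```

If the 90% interval contains zero, the coefficient is not significant at the 0.1 level, which agrees with the conclusion drawn from the corresponding <math>t\,\!</math> statistic.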

==Blocking== | ==Blocking== | ||

Many times a factorial experiment requires so many runs that all of them | Often a factorial experiment requires so many runs that not all of them can be completed under homogeneous conditions. This may introduce the effects of ''nuisance factors'' into the investigation. Nuisance factors are factors that have an effect on the response but are not of primary interest to the investigator. For example, two replicates of a two-factor factorial experiment with two levels per factor require eight runs. If four runs take one day to complete, then the whole experiment takes two days. The difference in conditions between the two days may introduce effects on the response that are not the result of the two factors being investigated. Therefore, the day is a nuisance factor for this experiment. | ||

Nuisance factors can be accounted for using blocking. In blocking, experimental runs are separated based on levels of the nuisance factor. For the case of the two | Nuisance factors can be accounted for using ''blocking''. In blocking, experimental runs are separated based on the levels of the nuisance factor. For the two-factor factorial experiment (where the day is a nuisance factor), the runs can be separated into two groups or ''blocks'': runs that are carried out on the first day belong to block 1, and runs that are carried out on the second day belong to block 2. Thus, within each block, conditions are the same with respect to the nuisance factor. As a result, each block investigates the effects of the factors of interest, while the difference between the blocks measures the effect of the nuisance factor. | ||

For the example of the two factor factorial experiment, a possible assignment of runs to the blocks could be | For the example of the two-factor factorial experiment, a possible assignment of runs to the blocks is as follows: one replicate of the experiment is assigned to block 1 and the second replicate is assigned to block 2 (so that each block contains all possible treatment combinations). Within each block, the runs are randomized (i.e., randomization is now restricted to the runs within a block). Such a design, where each block contains one complete replicate and the treatments within a block are randomized, is called a ''randomized complete block design''. | ||
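The construction of a randomized complete block design can be sketched in a few lines. The function name <code>randomized_complete_block_design</code>, the factor level labels and the seed are assumptions made for illustration. Each block receives one complete replicate of all treatment combinations, and the run order is shuffled separately within each block.

```python
import random
from itertools import product


def randomized_complete_block_design(factor_levels, n_blocks, seed=None):
    """Return a list of (block, treatment) runs.

    factor_levels is a list with one sequence of levels per factor.
    Every block contains each treatment combination exactly once, and
    randomization is restricted to the runs inside a block.
    """
    rng = random.Random(seed)
    treatments = list(product(*factor_levels))
    design = []
    for block in range(1, n_blocks + 1):
        order = treatments[:]   # one complete replicate per block
        rng.shuffle(order)      # randomize the run order within the block
        design.extend((block, treatment) for treatment in order)
    return design


# Two factors at two levels each, two blocks (for example, two days).
design = randomized_complete_block_design(
    [["low", "high"], ["low", "high"]], n_blocks=2, seed=1
)
for block, treatment in design:
    print(block, treatment)
```

With two factors at two levels and two blocks this yields eight runs, four per block, matching the two-day example described above.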

In summary, blocking should always be used to account for the effects of nuisance factors if it is not possible to hold the nuisance factor at a constant level through all of the experimental runs. Randomization should be used within each block to counter the effects of any unknown variability that may still be present. | In summary, blocking should always be used to account for the effects of nuisance factors if it is not possible to hold the nuisance factor at a constant level through all of the experimental runs. Randomization should be used within each block to counter the effects of any unknown variability that may still be present. | ||

| Line 1,179: | Line 1,297: | ||

====Example==== | ====Example==== | ||

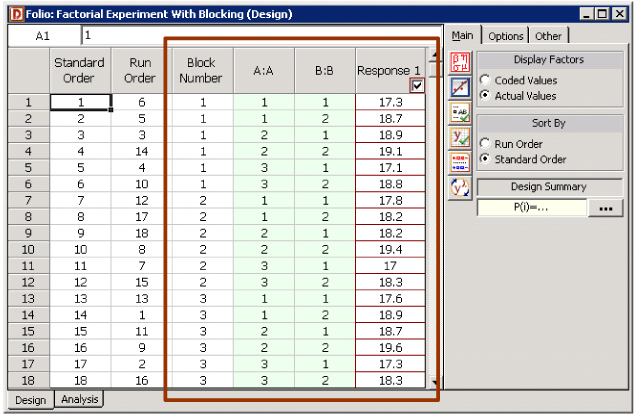

[[Image:doe6.13.png|thumb|center| | Consider the experiment of the fifth table where the mileage of a sports utility vehicle was investigated for the effects of speed and fuel additive type. Now assume that the three replicates for this experiment were carried out on three different vehicles. To ensure that the variation from one vehicle to another does not have an effect on the analysis, each vehicle is considered as one block. See the experiment design in the following figure. | ||

[[Image:doe6.13.png|thumb|center|643px|Randomized complete block design for the experiment in the fifth table using three blocks.]] | |||

For the purpose of the analysis, the block is treated as a main effect, except that interactions between the block and the other main effects are assumed not to exist. Therefore, this experiment has one block main effect (with three levels: block 1, block 2 and block 3), two main effects (speed, with three levels, and fuel additive type, with two levels) and one interaction effect (the speed-fuel additive interaction). Let <math>{{\zeta }_{i}}\,\!</math> represent the block effects. The hypothesis test on the block main effect checks whether there is significant variation from one vehicle to another. The statements for the hypothesis test are: | |||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 1,190: | Line 1,313: | ||

The test statistic for this test is: | The test statistic for this test is: | ||

::<math>{{F}_{0}}=\frac{M{{S}_{Block}}}{M{{S}_{E}}}\,\!</math> | ::<math>{{F}_{0}}=\frac{M{{S}_{Block}}}{M{{S}_{E}}}\,\!</math> | ||

| Line 1,195: | Line 1,319: | ||

where <math>M{{S}_{Block}}\,\!</math> represents the mean square for the block main effect and <math>M{{S}_{E}}\,\!</math> is the error mean square. The hypothesis statements and test statistics to test the significance of factors <math>A\,\!</math> (speed), <math>B\,\!</math> (fuel additive) and the interaction <math>AB\,\!</math> (speed-fuel additive interaction) can be obtained as explained in the [[ANOVA_for_Designed_Experiments#Example_2| example]]. The ANOVA model for this example can be written as: | where <math>M{{S}_{Block}}\,\!</math> represents the mean square for the block main effect and <math>M{{S}_{E}}\,\!</math> is the error mean square. The hypothesis statements and test statistics to test the significance of factors <math>A\,\!</math> (speed), <math>B\,\!</math> (fuel additive) and the interaction <math>AB\,\!</math> (speed-fuel additive interaction) can be obtained as explained in the [[ANOVA_for_Designed_Experiments#Example_2| example]]. The ANOVA model for this example can be written as: | ||

::<math>{{Y}_{ijk}}=\mu +{{\zeta }_{i}}+{{\tau }_{j}}+{{\delta }_{k}}+{{(\tau \delta )}_{jk}}+{{\epsilon }_{ijk}}\,\!</math> | ::<math>{{Y}_{ijk}}=\mu +{{\zeta }_{i}}+{{\tau }_{j}}+{{\delta }_{k}}+{{(\tau \delta )}_{jk}}+{{\epsilon }_{ijk}}\,\!</math> | ||

where: | where: | ||

*<math>\mu \,\!</math> represents the overall mean effect | |||

*<math>{{\zeta }_{i}}\,\!</math> is the effect of the <math>i\,\!</math>th level of the block (<math>i=1,2,3\,\!</math>) | |||

*<math>{{\tau }_{j}}\,\!</math> is the effect of the <math>j\,\!</math>th level of factor <math>A\,\!</math> (<math>j=1,2,3\,\!</math>) | |||

*<math>{{\delta }_{k}}\,\!</math> is the effect of the <math>k\,\!</math>th level of factor <math>B\,\!</math> (<math>k=1,2\,\!</math>) | |||

*<math>{{(\tau \delta )}_{jk}}\,\!</math> represents the interaction effect between <math>A\,\!</math> and <math>B\,\!</math> | |||

*and <math>{{\epsilon }_{ijk}}\,\!</math> represents the random error terms (which are assumed to be normally distributed with a mean of zero and variance of <math>{{\sigma }^{2}}\,\!</math>) | |||
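For a balanced randomized complete block design, the sums of squares for the model above can be obtained directly from the block, factor and cell means. The sketch below illustrates the test on the block main effect, <math>{{F}_{0}}=M{{S}_{Block}}/M{{S}_{E}}\,\!</math>. The response values are made up, and the variable names are chosen only for this illustration; one observation per block and treatment combination is assumed.

```python
from statistics import mean

# y[i][j][k]: block i, level j of factor A, level k of factor B.
# All values are hypothetical.
y = [
    [[17.3, 18.9], [18.2, 19.4], [17.1, 18.0]],
    [[17.9, 19.1], [18.5, 19.6], [17.5, 18.3]],
    [[18.1, 18.8], [18.9, 19.2], [17.0, 17.9]],
]
n_blk, n_a, n_b = 3, 3, 2
blocks, a_lv, b_lv = range(n_blk), range(n_a), range(n_b)

grand = mean(y[i][j][k] for i in blocks for j in a_lv for k in b_lv)
blk_mean = [mean(y[i][j][k] for j in a_lv for k in b_lv) for i in blocks]
a_mean = [mean(y[i][j][k] for i in blocks for k in b_lv) for j in a_lv]
b_mean = [mean(y[i][j][k] for i in blocks for j in a_lv) for k in b_lv]
cell_mean = [[mean(y[i][j][k] for i in blocks) for k in b_lv] for j in a_lv]

ss_total = sum(
    (y[i][j][k] - grand) ** 2 for i in blocks for j in a_lv for k in b_lv
)
ss_block = n_a * n_b * sum((m - grand) ** 2 for m in blk_mean)
ss_a = n_blk * n_b * sum((m - grand) ** 2 for m in a_mean)
ss_b = n_blk * n_a * sum((m - grand) ** 2 for m in b_mean)
ss_ab = n_blk * sum(
    (cell_mean[j][k] - a_mean[j] - b_mean[k] + grand) ** 2
    for j in a_lv for k in b_lv
)
# No block-by-treatment interaction is fitted, so what remains is error.
ss_e = ss_total - ss_block - ss_a - ss_b - ss_ab

df_block = n_blk - 1
df_e = (n_blk - 1) * (n_a * n_b - 1)

ms_block = ss_block / df_block
ms_e = ss_e / df_e
f0_block = ms_block / ms_e

print(ss_block, ss_e, df_block, df_e, f0_block)
```

The error degrees of freedom drop from 12 to 10 compared with the unblocked analysis, because two degrees of freedom are now used to estimate the block effects.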

In order to calculate the test statistics, it is convenient to express the ANOVA model of the equation given above in the form <math>y=X\beta +\epsilon \,\!</math>. This can be done as explained next. | In order to calculate the test statistics, it is convenient to express the ANOVA model of the equation given above in the form <math>y=X\beta +\epsilon \,\!</math>. This can be done as explained next. | ||

====Expression of the ANOVA Model as <math>y=X\beta +\epsilon \,\!</math>==== | ====Expression of the ANOVA Model as <math>y=X\beta +\epsilon \,\!</math>==== | ||

| Line 1,215: | Line 1,342: | ||

<br> | <br> | ||

Constraints on <math>{{\zeta }_{i}}\,\!</math> are: | Constraints on <math>{{\zeta }_{i}}\,\!</math> are: | ||

::<math>\begin{align} | ::<math>\begin{align} | ||

| Line 1,222: | Line 1,350: | ||